Open-source web scraping tools play a large part in gathering data from the internet: they crawl websites, scrape pages, and parse out the data.

It’s difficult to say which tool is best for web scraping. So, let’s discuss some of the popular open source frameworks and tools used for web scraping and their pros and cons in detail.

Open-Source Web Scraping Tools: A Comparison Chart

Here is a basic overview of all the best open source web scraping tools and frameworks that are discussed in this article.

| Tool | GitHub Stars | GitHub Forks | GitHub Open Issues | Last Updated | Documentation | License |
| --- | --- | --- | --- | --- | --- | --- |
| Puppeteer | 86.3k | 8.9k | 297 | March 2024 | Excellent | Apache-2.0 |
| Scrapy | 50.5k | 10.3k | 432 | March 2024 | Excellent | BSD-3-Clause |
| Selenium | 28.9k | 7.9k | 120 | March 2024 | Good | Apache-2.0 |
| PySpider | 16.2k | 3.7k | 273 | August 2020 | Good | Apache-2.0 |
| Crawlee | 11.6k | 485 | 95 | March 2024 | Excellent | Apache-2.0 |
| NodeCrawler | 6.6k | 941 | 29 | January 2024 | Good | MIT |
| MechanicalSoup | 4.5k | 374 | 31 | December 2023 | Average | MIT |
| Apache Nutch | 2.8k | 1.2k | – | January 2024 | Excellent | Apache-2.0 |
| Heritrix | 2.7k | 768 | 37 | February 2024 | Good | Apache-2.0 |
| StormCrawler | 845 | 249 | 33 | February 2024 | Good | Apache-2.0 |

Note: Data as of 2024

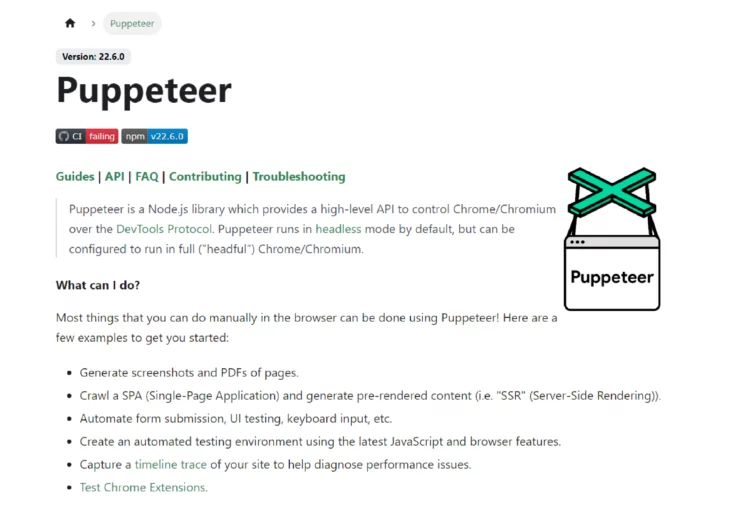

1. Puppeteer

Puppeteer is a Node library that controls Google’s Chrome in a headless mode. It allows operations without a GUI and is ideal for background tasks like web scraping, automated testing, and server-based applications. It also simulates user interactions, which is useful when data is dynamically generated through JavaScript.

Puppeteer exclusively targets Chrome, unlike Selenium WebDriver, which supports multiple browsers. It is particularly beneficial for tasks requiring interaction with web pages, such as capturing screenshots or generating PDFs.

Requires Version – Node v6.4.0 at minimum; Node v7.6.0 or greater for async/await support

Available Selectors – CSS

Available Data Formats – JSON

Pros

- With its full-featured API, it covers the majority of use cases

- The best option for scraping JavaScript websites on Chrome

Cons

- Only available for Chrome

- Supports only JSON format

Installation

Make sure Node.js and npm (Node Package Manager) are installed on your computer, then run the command:

npm install puppeteer

Best Use Case

Use it when dealing with modern, dynamic websites that rely heavily on JavaScript for content rendering and user interactions.

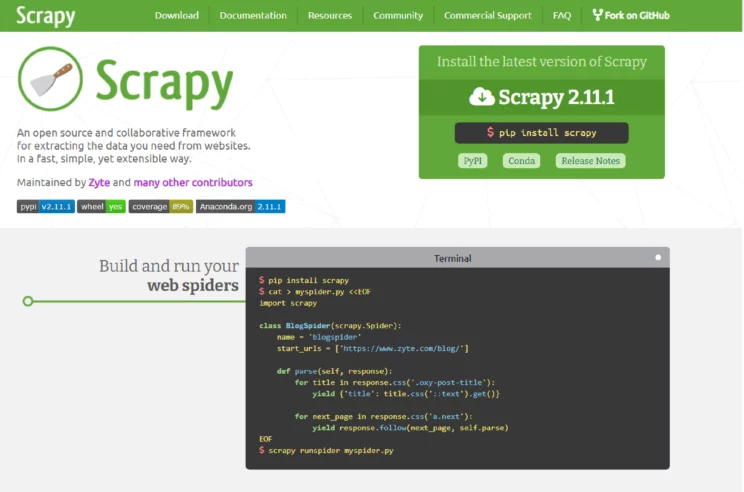

2. Scrapy

Scrapy is an open-source Python framework that offers tools for efficient data extraction, processing, and storage in different formats. It is built on the Twisted asynchronous framework and provides flexibility and speed for large-scale projects.

Scrapy suits tasks ranging from data mining to automated testing, and it supports both CSS selectors and XPath expressions for locating data in a page. It runs on all major operating systems, including Linux, macOS, and Windows.
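To make the XPath idea concrete, here is a minimal sketch that uses only Python's standard library rather than Scrapy itself. `xml.etree.ElementTree` supports just a limited XPath subset and needs well-formed markup, so the sample page, its class names, and the `extract_products` helper are all assumptions made for illustration; Scrapy's own selectors are considerably more capable.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample page (real-world HTML is rarely this clean).
SAMPLE_PAGE = """
<html>
  <body>
    <div class="product"><h2>Laptop</h2><span class="price">999</span></div>
    <div class="product"><h2>Phone</h2><span class="price">499</span></div>
    <div class="ad"><h2>Sponsored</h2></div>
  </body>
</html>
"""


def extract_products(markup):
    """Return (name, price) pairs for every div whose class is "product"."""
    root = ET.fromstring(markup)
    products = []
    # ".//div[@class='product']" selects matching divs at any depth.
    for node in root.findall(".//div[@class='product']"):
        name = node.findtext("h2")
        price = node.findtext("span[@class='price']")
        products.append((name, int(price)))
    return products


if __name__ == "__main__":
    for name, price in extract_products(SAMPLE_PAGE):
        print(name, price)
```

The advertisement block is skipped because its `class` attribute does not match the predicate, which is the main appeal of selector-based extraction over string searching.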

Requirements – Python 2.7, 3.4+

Available Selectors – CSS, XPath

Available Data Formats – CSV, JSON, XML

Pros

- Suitable for broad crawling

- Easy setup and detailed documentation

- Active community

Cons

- No browser interaction and automation

- Does not handle JavaScript

Installation

If you’re using Anaconda or Miniconda, you can install the package from the conda-forge channel.

To install Scrapy using conda, run:

conda install -c conda-forge scrapy

Best Use Case

Scrapy is ideal for data mining, content aggregation, and automated testing of web applications.

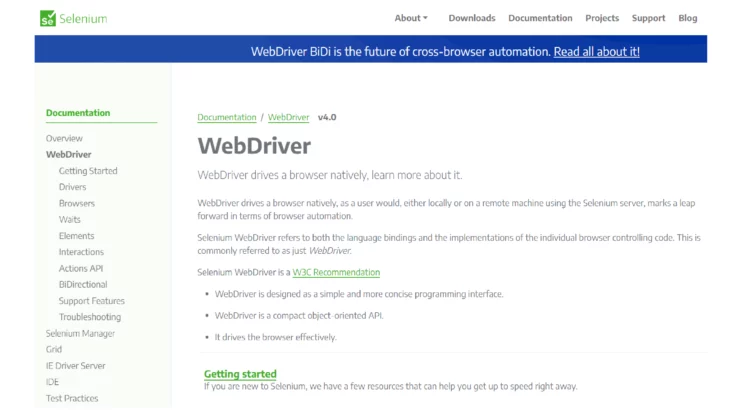

3. Selenium WebDriver

Selenium WebDriver is ideal for interacting with complex, dynamic websites by using a real browser to render page content. It executes JavaScript and handles cookies and HTTP headers like any standard browser, mimicking a human user.

The primary use of Selenium WebDriver is for testing. It scrapes dynamic content, especially on JavaScript-heavy sites, and ensures compatibility across different browsers. However, this approach is slower than simple HTTP requests due to the need to wait for complete page loads.

Requires Version – Python 2.7 and 3.5+ for the Python bindings; bindings are also available for JavaScript, Java, C#, and Ruby.

Available Selectors – CSS, XPath

Available Data Formats – Customizable

Pros

- Suitable for scraping heavy JavaScript websites

- Large and active community

- Detailed documentation makes it easy to grasp for beginners

Cons

- It is hard to maintain when there are any changes in the website structure

- High CPU and memory usage

Installation

Use your language’s package manager to install Selenium.

- For Python:

pip install selenium

- For Java:

Add Selenium as a dependency in your project’s build tool (e.g., Maven or Gradle).

- For Node.js:

npm install selenium-webdriver

Best Use Case

Use Selenium when the data you need to scrape is not accessible through simpler means or when you are dealing with highly dynamic web pages.

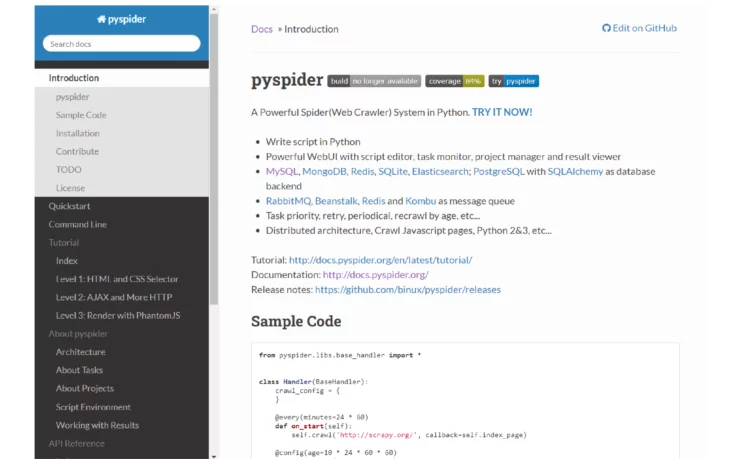

4. PySpider

PySpider is a web crawler written in Python. Along with the Apify SDK, it is one of the free web scraping tools that supports JavaScript pages, and it has a distributed architecture. It can store data on a backend of your choosing, such as MongoDB, MySQL, or Redis, and it can use RabbitMQ, Beanstalk, or Redis as a message queue.

PySpider has an easy-to-use web UI where you can edit scripts, monitor ongoing tasks, and view results, so consider it if you prefer working in a browser-based interface. It also supports AJAX-heavy websites.

Requires Version – Python 2.6+, Python 3.3+

Available Selectors – CSS, XPath

Available Data Formats – CSV, JSON

Pros

- Facilitates more comfortable and faster scraping

- Powerful UI

Cons

- Difficult to deploy

- Steep learning curve

Installation

Before installing PySpider, ensure you have Python installed on your system.

pip install pyspider

Best Use Case

PySpider is well-suited for large-scale web crawling.

5. Crawlee

Crawlee is one of the open-source web scraping tools that succeeds the Apify SDK. It is specifically designed for crafting reliable crawlers with Node.js. It disguises bots as real users with its anti-blocking features to minimize the risk of getting blocked.

As a universal JavaScript library, Crawlee supports Puppeteer and Cheerio. It fully supports TypeScript, works much like the Apify SDK, and includes the tools needed for web crawling and scraping.
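As a rough illustration of what sending browser-like headers means, the sketch below builds a request with Python's standard `urllib.request`. It is not Crawlee code: the header values, the `BROWSER_HEADERS` constant, and the `build_request` helper are hypothetical, and this approach cannot reproduce the TLS fingerprinting mentioned for Crawlee.

```python
import urllib.request

# Hypothetical header set resembling a desktop browser; values are illustrative.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url, extra_headers=None):
    """Create a Request carrying browser-like headers (nothing is sent yet)."""
    headers = dict(BROWSER_HEADERS)
    if extra_headers:
        headers.update(extra_headers)
    return urllib.request.Request(url, headers=headers)


if __name__ == "__main__":
    request = build_request("https://example.com/")
    # Passing the request to urllib.request.urlopen() would actually send it.
    for name, value in request.header_items():
        print(f"{name}: {value}")
```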

Requirements – Crawlee requires Node.js 16 or higher

Available Selectors – CSS

Available Data Formats – JSON, JSONL, CSV, XML, Excel or HTML

Pros

- It runs on Node.js, and it’s built in TypeScript to improve code completion

- Automatic scaling and proxy management

- Mimics browser headers and TLS fingerprints

Cons

- Single scrapers occasionally break, causing delays in data scraping

- The interface is a bit difficult to navigate, especially for new users

Installation

Add Crawlee to any Node.js project by running:

npm install crawlee

Best Use Case

Use Crawlee when you need a better developer experience and powerful anti-blocking features.

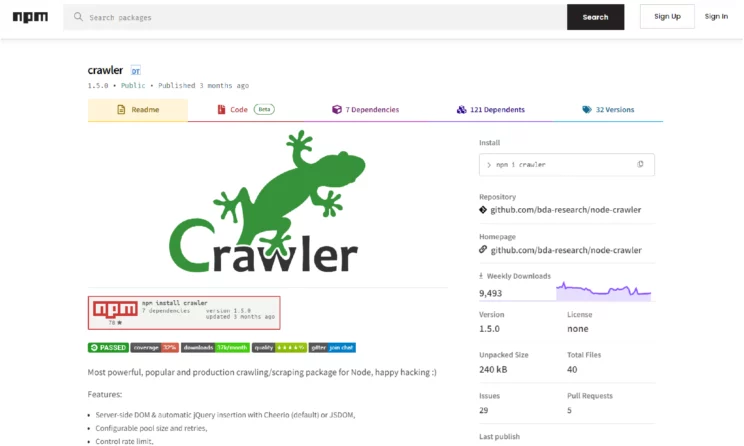

6. NodeCrawler

NodeCrawler is a popular web crawler for NodeJS, ideal for those who prefer JavaScript or are working on JavaScript projects. It easily integrates with JSDOM and Cheerio for HTML parsing. It is fully written in Node.js and supports non-blocking asynchronous I/O to streamline operations.

NodeCrawler has features for efficient web crawling and scraping, including DOM selection without regular expressions, customizable crawling options, and mechanisms to control request rate and timing.
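The combination of request priorities and rate control can be sketched in a few lines of Python. This is a conceptual illustration, not NodeCrawler's API: the `PriorityRateLimitedQueue` class, its parameters, and the convention that a lower number means higher priority are assumptions chosen for this example.

```python
import heapq
import itertools
import time


class PriorityRateLimitedQueue:
    """Hand out URLs by priority, at most one per `min_interval` seconds."""

    def __init__(self, min_interval=1.0, clock=time.monotonic, sleep=time.sleep):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_release = None

    def push(self, url, priority=5):
        # Assumed convention: a lower number means a higher priority.
        heapq.heappush(self._heap, (priority, next(self._counter), url))

    def pop(self):
        """Wait until the rate limit allows it, then return the next URL."""
        if not self._heap:
            raise IndexError("queue is empty")
        if self._last_release is not None:
            wait = self._min_interval - (self._clock() - self._last_release)
            if wait > 0:
                self._sleep(wait)
        self._last_release = self._clock()
        _, _, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)


if __name__ == "__main__":
    queue = PriorityRateLimitedQueue(min_interval=0.1)
    queue.push("https://example.com/archive", priority=9)
    queue.push("https://example.com/", priority=1)
    queue.push("https://example.com/news", priority=1)
    while queue:
        print(queue.pop())
```

The clock and sleep functions are injectable so the timing behavior can be checked without real delays.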

Requires Version – Node v4.0.0 or greater

Available Selectors – CSS, XPath

Available Data Formats – CSV, JSON, XML

Pros

- Easy installation

- Different priorities for URL requests

Cons

- It has no promise of support

- Complexity in scraping modern web applications

Installation

Run this command in your terminal or command prompt:

npm install crawler

Best Use Case

NodeCrawler is used in scenarios that require handling complex navigation or extensive data extraction across a wide array of web resources.

7. MechanicalSoup

MechanicalSoup is a Python library designed to mimic human interaction with websites through a browser, using BeautifulSoup for parsing. It is ideal for data extraction from simple websites, handling cookies, automatic redirection, and filling out forms smoothly.

MechanicalSoup is for simpler web scraping where no API is available and minimal JavaScript is involved. If a website offers a web service API, it’s more appropriate to use that API directly instead of MechanicalSoup. For sites heavily reliant on JavaScript, you can use Selenium.
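The form-filling step can be illustrated with the standard library alone. The sketch below is not MechanicalSoup's API; the `FormFieldCollector` class, the `fill_form` helper, and the sample login page are hypothetical. It shows the underlying idea: read the form's existing fields (including hidden ones), overlay your own values, and encode the result for submission.

```python
from html.parser import HTMLParser
from urllib.parse import urlencode

# Hypothetical login page used only for this example.
LOGIN_PAGE = """
<form action="/login" method="post">
  <input type="hidden" name="csrf_token" value="abc123">
  <input type="text" name="username">
  <input type="password" name="password">
  <input type="submit" value="Log in">
</form>
"""


class FormFieldCollector(HTMLParser):
    """Collect the action URL and named <input> defaults of the first form."""

    def __init__(self):
        super().__init__()
        self.action = None
        self.fields = {}
        self._in_form = False
        self._done = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "form" and not self._done:
            self._in_form = True
            self.action = attrs.get("action", "")
        elif tag == "input" and self._in_form and "name" in attrs:
            self.fields[attrs["name"]] = attrs.get("value") or ""

    def handle_endtag(self, tag):
        if tag == "form" and self._in_form:
            self._in_form = False
            self._done = True


def fill_form(page, values):
    """Return (action, encoded_body) for the first form with `values` applied."""
    collector = FormFieldCollector()
    collector.feed(page)
    payload = dict(collector.fields)  # keeps hidden defaults such as the token
    payload.update(values)
    return collector.action, urlencode(payload)


if __name__ == "__main__":
    print(fill_form(LOGIN_PAGE, {"username": "alice", "password": "s3cret"}))
```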

Requires Version – Python 3.0+

Available Selectors – CSS, XPath

Available Data Formats – CSV, JSON, XML

Pros

- Preferred for fairly simple websites

- Supports CSS and XPath selectors

Cons

- Does not handle JavaScript

- MechanicalSoup’s functionality heavily relies on BeautifulSoup for parsing HTML

Installation

To install MechanicalSoup, make sure Python is installed on your system, then run the command:

pip install MechanicalSoup

Best Use Case

It is best suited for web scraping from static websites and in situations where you need to automate the process of logging into websites.

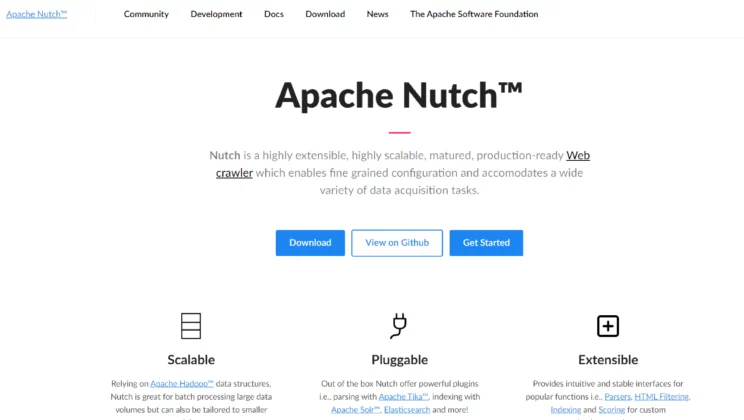

8. Apache Nutch

Apache Nutch is an established, open-source web crawler based on Apache Hadoop. It is designed for batch operations in web crawling, including URL generation, page parsing, and data structure updates. It supports fetching content through HTTPS, HTTP, and FTP and can extract text from HTML, PDF, RSS, and ATOM formats.

Nutch has a modular architecture so that it can enhance media-type parsing, data retrieval, querying, and clustering. This extensibility makes it versatile for data analysis and other applications, offering interfaces for custom implementations.
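The batch-oriented cycle described above (generate a list of URLs, fetch and parse them, then update the crawl data) can be sketched as a small loop. This is a conceptual model only: real Nutch runs these phases as Hadoop jobs over persistent storage, and the function names, the `FAKE_WEB` dictionary, and the status strings here are assumptions for illustration.

```python
# Hypothetical in-memory "web": each URL maps to the links found on that page.
FAKE_WEB = {
    "http://a.example/": ["http://a.example/1", "http://a.example/2"],
    "http://a.example/1": ["http://a.example/2", "http://a.example/3"],
    "http://a.example/2": [],
    "http://a.example/3": ["http://a.example/"],
}


def generate(crawl_db, batch_size):
    """Pick up to `batch_size` unfetched URLs for this round."""
    pending = [url for url, status in crawl_db.items() if status == "unfetched"]
    return pending[:batch_size]


def fetch_and_parse(batch, web):
    """Return {url: outlinks} for the batch (a stand-in for real fetching)."""
    return {url: web.get(url, []) for url in batch}


def update(crawl_db, parsed):
    """Mark fetched URLs and register newly discovered links."""
    for url, outlinks in parsed.items():
        crawl_db[url] = "fetched"
        for link in outlinks:
            crawl_db.setdefault(link, "unfetched")


def crawl(seeds, web, rounds, batch_size=2):
    """Run up to `rounds` generate/fetch/parse/update cycles."""
    crawl_db = {seed: "unfetched" for seed in seeds}
    for _ in range(rounds):
        batch = generate(crawl_db, batch_size)
        if not batch:
            break
        update(crawl_db, fetch_and_parse(batch, web))
    return crawl_db


if __name__ == "__main__":
    for url, status in crawl(["http://a.example/"], FAKE_WEB, rounds=3).items():
        print(status, url)
```

Because each round works on a fixed batch, the crawl's depth is controlled by the number of rounds rather than by a continuously running process.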

Requirements – Java 8

Available Selectors – XPath, CSS

Available Data Formats – JSON, CSV, XML

Pros

- Highly extensible and flexible system

- Open-source web-search software, built on Lucene Java

- Dynamically scalable with Hadoop

Cons

- Difficult to set up

- Poor documentation

- Some operations take longer as the size of the crawler grows

Installation

Ensure that you install Java Development Kit (JDK).

Nutch uses Ant as its build system. Install Apache Ant using your package manager:

apt-get install ant

Then go to the official Apache Nutch website and download the latest version of Nutch.

Best Use Case

Nutch is useful when there is a need to crawl and archive websites. It can create snapshots of web pages that can be stored and accessed later.

9. Heritrix

Heritrix is a Java-based web crawler developed by the Internet Archive and engineered primarily for web archiving. It operates in a distributed environment and scales across a predetermined number of machines. It features a web-based user interface and an optional command-line tool for initiating crawls.

Heritrix respects robots.txt and meta robots tags to ensure ethical data collection. It is designed to collect extensive web information, including domain names, site hosts, and URI patterns. Heritrix requires some configuration for larger tasks but remains highly extensible for tailored web archiving needs.
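What respecting robots.txt involves can be shown with Python's standard `urllib.robotparser` module. This is a generic sketch rather than Heritrix code; the robots.txt content, the `my-crawler` user agent, and the helper names are assumptions for the example, and meta robots tags are not covered.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for the example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_text):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed(parser, agent, url):
    """Return True if `agent` may fetch `url` under the parsed rules."""
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    for url in (
        "https://example.com/blog/",
        "https://example.com/private/report.html",
    ):
        print(url, allowed(parser, "my-crawler", url))
    print("crawl delay:", parser.crawl_delay("my-crawler"))
```

A polite crawler would run this check before every request and pause for the reported crawl delay between requests to the same host.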

Requires Versions – Java 5.0+

Available Selectors – XPath, CSS

Available Data Formats – ARC file

Pros

- Excellent user documentation and easy setup

- Mature and stable platform

- Good performance and decent support for distributed crawls

- Respects robots.txt

- Supports broad and focused crawls

Cons

- Not dynamically scalable

- Limited flexibility for non-archiving tasks

- Resource-intensive

Installation

Download the latest Heritrix distribution package from the Heritrix releases page.

Best Use Case

Heritrix is best suited to web archiving and preservation projects.

10. StormCrawler

StormCrawler is a library and collection of resources that developers can use to build their own crawlers. The framework is based on the stream-processing framework Apache Storm. All operations, like fetching URLs, parsing, and constantly indexing, occur at the same time, making the crawling more efficient.

StormCrawler comes with modules for commonly used projects such as Apache Solr, Elasticsearch, MySQL, or Apache Tika. It also has a range of extensible functionalities to do data extraction with XPath, sitemaps, URL filtering, or language identification.
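URL filtering, one of the functions listed above, usually means normalizing each discovered link and dropping those outside the crawl's scope. The sketch below shows the idea in plain Python; it is not StormCrawler's filter configuration, and the `ALLOWED_HOSTS` set, the skipped suffixes, and the `filter_url` function are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

ALLOWED_HOSTS = {"example.com"}  # hypothetical crawl scope
SKIPPED_SUFFIXES = (".jpg", ".png", ".css", ".js")  # assumed non-content files


def filter_url(url):
    """Return a normalized URL, or None if the URL should be dropped."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        return None
    if parts.hostname not in ALLOWED_HOSTS:
        return None
    path = parts.path or "/"
    if path.lower().endswith(SKIPPED_SUFFIXES):
        return None
    # Lowercase the host and drop the fragment; keep the query string.
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, parts.query, ""))


if __name__ == "__main__":
    for candidate in (
        "HTTPS://Example.com/Docs?page=2#intro",
        "https://example.com/logo.PNG",
        "https://other.org/",
        "mailto:someone@example.com",
    ):
        print(candidate, "->", filter_url(candidate))
```

Normalizing before filtering also helps a crawler avoid fetching the same page twice under slightly different URLs.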

Requirements – Apache Maven, Java 7

Available Selectors – XPath

Available Data Formats – JSON, CSV, XML

Pros

- Appropriate for large-scale recursive crawls

- Suitable for low-latency web crawling

Cons

- Does not support document deduplication

- You may need extra tools to specifically extract data from pages

Installation

Install Java JDK 8 or newer on your system.

StormCrawler uses Maven for its build system. Install Maven by following the instructions on the Apache Maven website.

Initialize a new Maven project by running

mvn archetype:generate -DarchetypeGroupId=com.digitalpebble.stormcrawler -DarchetypeArtifactId=storm-crawler-archetype -DarchetypeVersion=LATEST

Best Use Case

Use StormCrawler to build high-performance web crawlers that need to process a large volume of URLs in real-time or near-real-time.

Wrapping Up

This article has given you an overview of the available tools and frameworks so that you can choose the one that fits your project. Before you begin web scraping, consider two factors: the scalability of the project and the measures you will take to avoid getting blocked by websites.

For specific use cases like web scraping Amazon product data or scraping Google reviews, you could make use of ScrapeHero Cloud. These are ready-made web scrapers that are easy to use, free of charge up to 25 initial credits, and no coding is involved from your side.

If you have greater scraping requirements, it’s better to use ScrapeHero web scraping services. As a full-service provider, we ensure that you save your time and get clean, structured data without any hassles.

Frequently Asked Questions

It often depends on your specific needs, programming skills, and the complexity of the tasks you intend to perform. To handle moderate web scraping, you can use BeautifulSoup. If you need to interact with JavaScript-heavy sites, then go for Selenium.

The best web scraping tool varies based on specific project requirements, your technical background, and the particular challenges of the web content you aim to scrape. For example, to scrape JavaScript-heavy websites, you can use Playwright.
