Which Python library is best for web scraping? The question is tricky because Python offers several libraries for building scrapers, each suited to different jobs. Effective web scraping libraries offer speed, scalability, and the ability to navigate various types of web content.

In this article, let’s explore some of the top Python web scraping libraries and frameworks and evaluate their strengths and weaknesses so you can choose the right one for your needs.

Here’s a list of some commonly used Python web scraping libraries and frameworks:

- urllib

- Python Requests

- Selenium

- BeautifulSoup

- LXML

- Scrapy

1. urllib

urllib is a package in the Python standard library for working with URLs (Uniform Resource Locators), and it is often used for web scraping. Beyond simply opening URLs, it offers a slightly more complex interface for handling common situations such as basic authentication, encoding, cookies, and proxies. These features are provided by objects called handlers and openers. The package is made up of four modules:

- urllib.request for opening and reading URLs

- urllib.error containing the exceptions raised by urllib.request

- urllib.parse for parsing URLs

- urllib.robotparser for parsing robots.txt files
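As a minimal sketch of two of these modules, the snippet below uses urllib.parse to build and split a URL and urllib.robotparser to check whether a path may be fetched. The example.com URLs, the "example-scraper" user agent, and the robots.txt rules are all made up for illustration; the rules are parsed from a string so the snippet runs without a network connection.

```python
from urllib.parse import urljoin, urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, supplied as text so no request is made.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

# Resolve a relative link against a base URL, then split it into parts.
base_url = "https://example.com/catalog/"
page_url = urljoin(base_url, "items?page=2")
parts = urlparse(page_url)

print(page_url)
print(parts.netloc, parts.path, parts.query)

# Ask the parsed rules whether our (hypothetical) user agent may fetch each URL.
allowed = robots.can_fetch("example-scraper", page_url)
blocked = robots.can_fetch("example-scraper", "https://example.com/private/data")
print(allowed, blocked)
```

For a real site you would normally call set_url() and read() on the RobotFileParser so the rules come from the site's own robots.txt file.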

Pros

- Included in the Python standard library

- It defines functions and classes to help with URL actions (basic and digest authentication, redirections, cookies, etc)

Cons

- Unlike Python Requests, urllib requires you to encode query parameters yourself with urllib.parse.urlencode() before passing them

- Complicated when compared to Python Requests
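To illustrate the manual encoding step, here is a small sketch that encodes query parameters with urllib.parse.urlencode() and wraps the result in a urllib.request.Request. The search URL, the parameter names, and the helper names build_search_request and fetch are assumptions for the example, not part of any real site or API.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_search_request(base_url, params):
    """Encode params into a query string and return a Request object."""
    query = urlencode(params)
    return Request(
        f"{base_url}?{query}",
        headers={"User-Agent": "example-scraper/0.1"},  # hypothetical user agent
    )


def fetch(request, timeout=10):
    """Send the request and return the decoded body (needs network access)."""
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)


# Building the request does not touch the network.
request = build_search_request(
    "https://example.com/search", {"q": "web scraping", "page": 2}
)
print(request.full_url)
```

Calling fetch(request) would perform the actual download; it is left out of the top-level code so the snippet can be run offline.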

Installation

Since urllib is already included with your Python installation, you don’t need to install it separately.

Best Use Case

If you need advanced control over the requests you make
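One way this extra control shows up is through openers and handlers. The sketch below, which assumes a hypothetical user agent string and a helper named fetch_with_cookies, builds an opener that remembers cookies across requests.

```python
from http.cookiejar import CookieJar
from urllib.request import HTTPCookieProcessor, build_opener

# The cookie jar collects cookies set by the server across requests.
cookie_jar = CookieJar()

# build_opener() combines the default handlers with the ones passed in.
opener = build_opener(HTTPCookieProcessor(cookie_jar))
opener.addheaders = [("User-Agent", "example-scraper/0.1")]  # hypothetical value


def fetch_with_cookies(url, timeout=10):
    """Fetch a URL through the opener (needs network access)."""
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Other handlers, such as ProxyHandler or HTTPBasicAuthHandler, can be passed to build_opener() in the same way.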

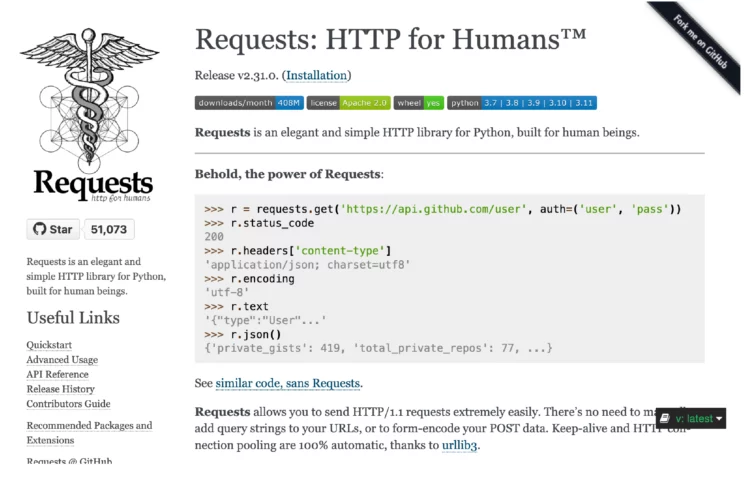

2. Requests

Requests is an HTTP library for Python that is widely used for web scraping. It lets you send HTTP requests without manual work such as adding query strings to URLs or form-encoding POST data.

Pros

- Shorter and simpler code than urllib

- Thread-safe

- Multipart file uploads and connection timeouts

- Elegant key/value cookies and sessions with cookie persistence

- Automatic decompression

- Basic/digest authentication

- Browser-style SSL verification

- Keep-alive and connection pooling

- Good documentation

- There is no need to manually add query strings to your URLs.

- Supports the HTTP methods used by RESTful APIs, including GET, POST, PUT, and DELETE
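A short sketch of several of these features together: a session for connection reuse and cookie persistence, query parameters passed as a dictionary, and a timeout. The URL, the user agent string, and the helper names build_session and fetch_html are assumptions made for illustration.

```python
import requests


def build_session(user_agent="example-scraper/0.1"):
    """Create a session that reuses connections and keeps cookies."""
    session = requests.Session()
    session.headers.update({"User-Agent": user_agent})
    return session


def fetch_html(session, url, params=None, timeout=10):
    """Fetch a page and return its text (needs network access)."""
    response = session.get(url, params=params, timeout=timeout)
    response.raise_for_status()  # raise an exception on 4xx/5xx responses
    return response.text


session = build_session()

# Preparing a request shows the URL Requests would build, without sending it.
prepared = requests.Request(
    "GET", "https://example.com/search", params={"q": "web scraping", "page": 2}
).prepare()
print(prepared.url)
```

In a real scraper you would call fetch_html(session, url, params=...) and hand the returned text to a parser.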

Cons

Requests does not execute JavaScript, so if your web page uses JavaScript to hide or load content, Requests alone might not be the way to go.

Installation

You can install Requests using conda:

conda install -c anaconda requests

and using pip:

pip install requests

Best Use Case

If you are a beginner using Python to scrape pages that do not rely on JavaScript

3. Selenium

Selenium is an open-source tool that automates web browsers. It has bindings for several languages, and you can use it from Python via the selenium package. Though it is primarily used for writing automated tests for web applications, it has come into heavy use in web scraping, especially for pages that rely on JavaScript.

Pros

- Beginner-friendly

- You get the real browser to see what’s going on (unless you are in headless mode)

- Mimics human behavior while browsing, including clicks, selection, filling out text boxes, and scrolling

- Renders a full webpage and shows HTML rendered via XHR or JavaScript

Cons

- Very slow

- Heavy memory use

- High CPU usage

Installation

To install this package with conda, run:

conda install -c conda-forge selenium

Using pip, you can install it by running the command below in your terminal:

pip install selenium

Selenium also needs a browser driver, such as geckodriver for the Firefox browser. Recent Selenium releases (4.6 and later) can download a matching driver automatically through Selenium Manager. With older releases you must install the driver yourself, and failing to do so results in errors.

Best Use Case

When it’s necessary to scrape websites that hide data behind JavaScript.

4. The Parsers

- BeautifulSoup

- LXML

Once the HTML content is obtained, it’s advisable to use a parser for data extraction instead of regular expressions. Regular expressions treat HTML as flat text, so they break easily when tags are nested, attributes are reordered, or the markup changes slightly. Parsers work with HTML’s inherent structure, which avoids the pitfalls of text-based matching and makes scrapers easier to maintain.

What are Parsers?

A parser is simply a program that can extract data from HTML and XML documents. It parses the document structure into memory and lets you use selectors (either CSS or XPath) to easily extract the data.

The advantage of parsers is that they can fix errors in HTML, like unclosed tags and invalid code, making it easier to extract data. However, the downside is that they usually require more processing power.
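To make the difference concrete, the sketch below extracts links from a made-up HTML snippet twice: once with a naive regular expression and once with a parser. It uses the standard library's html.parser as a stand-in so that it has no dependencies; that module has no CSS or XPath selectors, which BeautifulSoup and LXML add. The LinkCollector class is a hypothetical helper written for this example.

```python
import re
from html.parser import HTMLParser

# Two links with the same meaning but different attribute order and quoting.
HTML = (
    '<a class="nav" href="/home">Home</a>'
    "<a href='/about' class=\"nav\">About</a>"
)

# A naive pattern that assumes one exact attribute order and quote style.
regex_links = re.findall(r'<a class="nav" href="([^"]+)"', HTML)


class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


collector = LinkCollector()
collector.feed(HTML)

print(regex_links)      # the regex misses the second link
print(collector.links)  # the parser finds both
```

The regular expression could be patched to cover this case, but each new variation in the markup needs another patch, whereas the parser already understands attributes regardless of order or quoting.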

5. BS4

BeautifulSoup (BS4) is one of the most prominent Python libraries for parsing scraped data. The content you are parsing might use any of several encodings, and BS4 detects the encoding automatically. BS4 creates a parse tree, which helps you navigate a parsed document easily and find what you need.

Pros

- Easier to write a BS4 snippet than LXML

- Small learning curve, easy to learn

- Quite robust

- Handles malformed markup well

- Excellent support for encoding detection

Cons

- If the parser selected by default is not the one you expected, it may parse the document differently without any warning, which can lead to disastrous results

- Projects built using BS4 might not be flexible in terms of extensibility

- It is relatively slow, and you may need to parallelize the work (for example, with multiprocessing) to make it run quicker
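Because the choice of parser affects the result, a common precaution is to name the parser explicitly rather than rely on the default. The sketch below does that with Python's built-in "html.parser" and then extracts data with CSS selectors. The HTML snippet, the class names, and the field names are invented for the example.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page.
HTML = (
    '<ul id="books">'
    '<li class="book"><a href="/b/1">Dune</a><span class="price">9.99</span></li>'
    '<li class="book"><a href="/b/2">Emma</a><span class="price">4.50</span></li>'
    "</ul>"
)

# Passing the parser name avoids depending on whichever parser is installed.
soup = BeautifulSoup(HTML, "html.parser")

books = []
for item in soup.select("li.book"):
    link = item.select_one("a")
    price = item.select_one("span.price")
    books.append(
        {
            "title": link.get_text(strip=True),
            "url": link["href"],
            "price": float(price.get_text(strip=True)),
        }
    )

print(books)
```

If lxml is installed, passing "lxml" instead of "html.parser" is usually faster, though the two parsers can build slightly different trees from malformed markup.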

Installation

To install this package with conda run:

conda install -c anaconda beautifulsoup4

Using pip, you can install using

pip install beautifulsoup4

Best Use Case

- When you are a beginner to web scraping

- If you need to handle messy documents, choose BeautifulSoup

6. LXML

LXML is a feature-rich library for processing XML and HTML in the Python language. It’s known for its speed and ease of use, providing a very simple and intuitive API for parsing, creating, and modifying XML and HTML documents, which makes LXML a popular library used for web scraping.

Pros

- It is known for its high performance

- Supports a wide range of XML technologies, including XPath 1.0, XSLT 1.0, and XML Schema

- Offers a Pythonic API, making it easy to navigate, search, and modify the parse tree
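As a brief sketch of the XPath support, the snippet below parses a made-up HTML fragment with lxml.html and pulls out text and attribute values. The markup and class names are assumptions for illustration only.

```python
from lxml import html

# Hypothetical markup standing in for a downloaded page.
HTML = (
    '<ul id="books">'
    '<li class="book"><a href="/b/1">Dune</a><span class="price">9.99</span></li>'
    '<li class="book"><a href="/b/2">Emma</a><span class="price">4.50</span></li>'
    "</ul>"
)

tree = html.fromstring(HTML)

# XPath expressions select text nodes and attribute values directly.
titles = tree.xpath('//li[@class="book"]/a/text()')
urls = tree.xpath('//li[@class="book"]/a/@href')
prices = [
    float(value)
    for value in tree.xpath('//li[@class="book"]/span[@class="price"]/text()')
]

print(list(zip(titles, urls, prices)))
```

XPath returns plain lists, so the results can be zipped or fed into other Python code without further conversion.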

Cons

- The official documentation isn’t that friendly, so beginners are better off starting somewhere else

- Installing LXML can be more complex than installing pure Python libraries

- It is more memory-intensive than its alternatives

Installation

To install this package with conda, run:

conda install -c anaconda lxml

You can install LXML directly using pip:

pip install lxml

On Debian or Ubuntu, you can also install it from the system package manager:

sudo apt-get install python3-lxml

Best Use Case

If you need speed, go for LXML

Wrapping Up

Python web scraping libraries do not avoid bot detection on their own, which can make web scraping difficult and stressful. You must also choose the library or framework that fits your specific needs and requirements.

If you have simple web scraping needs, it is advisable to use ScrapeHero scrapers from ScrapeHero Cloud. You could try them out, as they do not require coding skills and are very cost-effective.

For recurring or large web scraping projects where data needs to be scraped consistently, we recommend ScrapeHero services.

Frequently Asked Questions

There are many Python web scraping libraries available and choosing the best among them depends on your specific needs. If you are a beginner, use BeautifulSoup. For complex scraping projects, use LXML. If you want to scrape JavaScript-heavy sites, then use Selenium, Pyppeteer, or Playwright.

The Python web scraping framework that you choose depends on the complexity of the websites you’re dealing with, the volume of data you need to extract, and whether you need to handle JavaScript-heavy sites. Some options are Pyppeteer, Playwright, and Selenium.

Some Python web scraping libraries used apart from the common ones include Pandas, PyQuery, MechanicalSoup, and Playwright.

It depends on your needs. Both Selenium and BeautifulSoup are used in Python for web scraping. Selenium is used for scraping dynamic, interactive websites, while BeautifulSoup extracts specific data from HTML. They are often combined to handle complex web scraping efficiently.
