Data is everywhere, but most of it is unusable because it is not in a structured format. Data extraction services help you tap the vast data resources available online or in internal systems and extract the data so that it can benefit the business. This post is a guide to data extraction services to help you with your data management.

The data can serve many purposes: increasing sales, decreasing expenses, getting to know your customers better, increasing employee productivity, enhancing organizational efficiency, and answering questions that are easy to ask but hard to answer.

Most of these questions are very simple, ranging from “who is our most valued customer” to “which product has the potential to be a best seller” to “who is the best person to promote to this job”.

The answers are unfortunately not so simple.

First you need the data to make these decisions, and then you need the data analytics capabilities to derive those answers.

We will focus on the former problem in this article: how do you extract the data from the many available sources?

Step 1: Identify and classify the data sources

Before gathering any data, you need to identify the sources. The sources then need to be classified so that they can be organized, documented, and used effectively.

The sources could be internal or external.

Internal data sources are private to the organization. They include databases and other data stores, wikis, blogs, internal documents and file shares, emails, collaboration tools such as SharePoint, CRM tools, analytics tools, and other data repositories based in the cloud. With the ongoing push to Software as a Service (SaaS) tools, data is increasingly present in these SaaS applications.

External data sources are primarily on the Internet and for the most part publicly available.

Sources can also be classified along a different dimension: as authoritative or supplemental.

Authoritative sources are the primary sources of data. An authoritative source is usually where the data originates, and its data is clean enough to be relied upon.

Supplemental sources augment authoritative sources by providing additional data points.
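
One way to document the two classifications is a small source catalog. The sketch below is only an illustration: the `DataSource` class, the enum names, and the three catalog entries are hypothetical and not part of any particular tool.

```python
from dataclasses import dataclass
from enum import Enum


class Location(Enum):
    INTERNAL = "internal"   # private to the organization
    EXTERNAL = "external"   # mostly public, on the Internet


class Role(Enum):
    AUTHORITATIVE = "authoritative"  # where the data originates
    SUPPLEMENTAL = "supplemental"    # adds data points to an authoritative source


@dataclass(frozen=True)
class DataSource:
    name: str
    location: Location
    role: Role
    notes: str = ""


def group_by_classification(sources):
    """Group source names by their (location, role) pair."""
    groups = {}
    for source in sources:
        key = (source.location.value, source.role.value)
        groups.setdefault(key, []).append(source.name)
    return groups


# Hypothetical entries, for illustration only.
catalog = [
    DataSource("crm_accounts", Location.INTERNAL, Role.AUTHORITATIVE),
    DataSource("support_wiki", Location.INTERNAL, Role.SUPPLEMENTAL),
    DataSource("public_product_reviews", Location.EXTERNAL, Role.SUPPLEMENTAL),
]

for key, names in group_by_classification(catalog).items():
    print(key, names)
```

Keeping both dimensions on every entry makes it easy to see, for example, which external sources are only supplemental and should not be trusted on their own.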

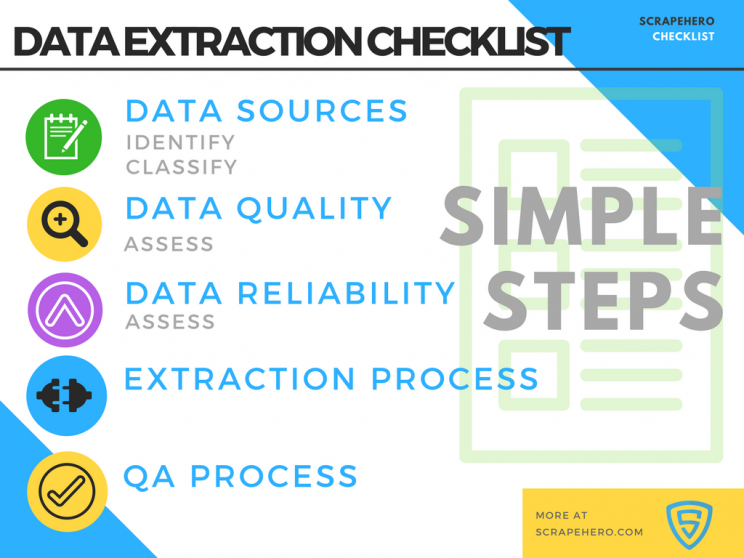

Data extraction services guide and checklist

Step 2: Assess the data quality

Data quality is critical to the usefulness of data. Poor quality data is not of much use other than for exploratory analysis. Quality can be assessed with anything from simple checks to complex techniques.

Usually you will need a sample of the data from the source to perform the analysis. In simple cases, samples can be gathered by copying and pasting, or by using the export capabilities of the data source if they exist. If these methods are not available or feasible, data extraction service companies such as ScrapeHero can gather samples for you for a small fee.
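
Once a sample is in hand, a simple assessment might look like the sketch below. It assumes the sample is a list of dictionaries and measures only two things, field completeness and exact-duplicate rate. The function name `profile_sample` and the sample records are made up for illustration.

```python
def profile_sample(rows, required_fields):
    """Return simple quality metrics for a list of dict records."""
    total = len(rows)
    if total == 0:
        return {"rows": 0, "completeness": {}, "duplicate_rate": 0.0}

    # Completeness: share of rows where the field is present and non-blank.
    completeness = {}
    for field in required_fields:
        filled = sum(
            1 for row in rows
            if row.get(field) is not None and str(row.get(field)).strip() != ""
        )
        completeness[field] = filled / total

    # Duplicate rate: share of rows that repeat an earlier row exactly
    # on the required fields.
    seen = set()
    duplicates = 0
    for row in rows:
        key = tuple(row.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)

    return {
        "rows": total,
        "completeness": completeness,
        "duplicate_rate": duplicates / total,
    }


# Hypothetical sample, e.g. copied or exported from a source.
sample = [
    {"name": "Acme", "price": "19.99"},
    {"name": "Acme", "price": "19.99"},   # exact duplicate
    {"name": "Globex", "price": ""},      # missing price
    {"name": "Initech", "price": "5.00"},
]

report = profile_sample(sample, ["name", "price"])
print(report)
```

For this sample the price field is 75% complete and one row in four is a duplicate. Whether those numbers are acceptable depends on the use case.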

Once the quality is assessed and you are comfortable that the data is useful, determine whether there are additional or alternative sources of the data, and whether any sources can serve as supplemental sources to fill in holes or increase the quality of the data.

Expert Tip: Poor quality does not automatically make data useless. It all depends on the use case.

Step 3: Gauge the reliability of the data

This step is often overlooked. However, to rely on the data every day, the data needs to be extracted reliably in most cases. There could be cases where reliability is not critical, but it still needs to be analyzed.

Reliability of the data can be broken down into two main areas:

- Reliability of the data extraction process

- Reliability of the data itself

The reliability of the process depends on various factors, and companies that invest heavily in it see significantly better returns from their investments in the data extraction process. If the data cannot be gathered on the required schedule, whether due to breakdowns in technology or blocking measures used by the data sources, you can rely on it far less.

The reliability of the data itself is critical, especially if there are few sources of the data to begin with. If there is only one source and its data has frequent or systematic errors, building a useful service on top of it won’t be beneficial.
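
Both kinds of reliability can be put into rough numbers. The sketch below assumes you keep a log of scheduled runs and can compare an authoritative source against a second one. The function names, the run log, and the records are all hypothetical.

```python
def extraction_success_rate(runs):
    """Share of scheduled runs that completed successfully."""
    if not runs:
        return 0.0
    return sum(1 for run in runs if run["ok"]) / len(runs)


def agreement_rate(primary, secondary):
    """Share of keys present in both sources whose values match."""
    shared = primary.keys() & secondary.keys()
    if not shared:
        return 0.0
    matches = sum(1 for key in shared if primary[key] == secondary[key])
    return matches / len(shared)


# Hypothetical run log: one entry per scheduled extraction.
runs = [
    {"run": 1, "ok": True},
    {"run": 2, "ok": False},  # e.g. blocked by the data source
    {"run": 3, "ok": True},
    {"run": 4, "ok": True},
]

# Hypothetical records keyed by product identifier.
primary = {"sku-1": 10.0, "sku-2": 12.5, "sku-3": 7.0}
secondary = {"sku-1": 10.0, "sku-2": 13.0, "sku-4": 3.0}

process_reliability = extraction_success_rate(runs)
data_reliability = agreement_rate(primary, secondary)
print(process_reliability, data_reliability)
```

When only one source exists, the agreement check has nothing to compare against, which is one reason a single error-prone source is so risky.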

Step 4: Create an automated data extraction process

Quite often we see that people want to start at this step without giving much thought to the preceding steps.

Eventually, they do have to go back and consider the items discussed in the previous steps, so for better outcomes, it is best to start at Step 1 and not skip to Step 4.

Data extraction is a combination of defined processes, assigned people and, in large part, the technology that performs the eventual extraction.

Here are some of the main sub-steps in this step:

- Architect the overall process by identifying the workflow at the highest level, eventually drilling down to a much finer level for each process

- Identify areas that can be automated through a vendor, business, or a technology

- Identify tools that will help manage the process

- Identify manual processes that need to be executed

- Identify the people who will run and manage the manual steps

- Identify the communication mechanisms and tools

- Identify data destinations

- Identify and build data transformation steps and check how many of them can be accomplished by ETL tools

- Identify and build alerting mechanisms to handle exceptions and other notifications

- Start building, buying software, evaluating and hiring vendors

- Integrate the various components to build the final solution

- Test individual steps and test the overall process

- Perform stress tests with your anticipated peak loads

- Deploy the overall process

- Identify areas that can be automated in the subsequent phases of the process
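
At the highest level, the workflow from the sub-steps above can be sketched as extract, transform, and load stages wrapped in an alerting mechanism. This is a minimal illustration rather than a production design. `run_pipeline` and the demo functions are hypothetical stand-ins for a real source, transformation, destination, and notification tool.

```python
import logging

logger = logging.getLogger("extraction")


def run_pipeline(extract, transform, load, alert):
    """Run extract -> transform -> load; call alert(stage, error) on failure.

    Returns the number of records loaded, or None if a stage failed.
    """
    stage = "extract"
    try:
        raw_records = extract()
        stage = "transform"
        clean_records = [transform(record) for record in raw_records]
        stage = "load"
        load(clean_records)
    except Exception as error:  # broad on purpose: any failure should alert
        logger.exception("pipeline failed during %s", stage)
        alert(stage, error)
        return None
    return len(clean_records)


# Hypothetical stand-ins for a real source, transformation, and destination.
destination = []
alerts = []


def extract_demo():
    return [{"name": " Acme ", "price": "19.99"}, {"name": "Globex", "price": "5"}]


def transform_demo(record):
    return {"name": record["name"].strip(), "price": float(record["price"])}


loaded = run_pipeline(
    extract_demo,
    transform_demo,
    destination.extend,
    lambda stage, error: alerts.append((stage, str(error))),
)
print(loaded, destination, alerts)
```

Passing each stage in as a function keeps the workflow separate from the tools behind it, so a vendor or ETL product can replace one stage without changing the others.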

Step 5: Build an automated Quality Assurance process

Quality Assurance (QA) is the final step, and it builds faith in the system you just built. It is a step that some overlook at their own peril.

It is never a question of “if things will go wrong”; it is always a question of “when things will go wrong”.

QA helps mitigate some of the risks associated with the unexpected.

The QA process can be basic to begin with, but it has to evolve quickly over time. It can start as a manual process, but to be effective it needs to be automated, in part or to a large extent.

The QA process is detailed and complex in itself, but we will attempt to provide some of the high-level building blocks here.

- Identify what can go wrong, whether it is the process or the technology or the data

- Identify the checks to gauge data integrity and then codify these checks

- Look at data through various lenses and across various dimensions – the more you peer into data, the better your checks

- Identify and implement algorithms and formulae that smarter people prior to us have already developed to help assess the data

- Assign a level of confidence to your checks – not every potential issue identified by QA is a real issue especially with internet sourced data

- Decrease the number of alerts every month till you are left with only the ones that are worth waking up for

- Optimize and automate the process repeatedly
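
Codified checks with a confidence level might look like the sketch below. The check names, the confidence values, and the 0.8 alert threshold are illustrative assumptions, not recommended settings.

```python
# Each check: (name, function returning True when the data passes,
# confidence that a failure is a real problem rather than noise).
CHECKS = [
    ("not_empty", lambda rows: len(rows) > 0, 1.0),
    ("price_positive", lambda rows: all(r["price"] > 0 for r in rows), 0.9),
    ("name_under_80_chars", lambda rows: all(len(r["name"]) < 80 for r in rows), 0.3),
]


def run_checks(rows, checks=CHECKS, alert_threshold=0.8):
    """Return (alerts, warnings): failed checks split by confidence."""
    alerts, warnings = [], []
    for name, passes, confidence in checks:
        if passes(rows):
            continue
        # High-confidence failures alert someone; the rest wait for review.
        if confidence >= alert_threshold:
            alerts.append(name)
        else:
            warnings.append(name)
    return alerts, warnings


# Hypothetical extracted rows; the second row fails two checks.
rows = [
    {"name": "Acme", "price": 19.99},
    {"name": "G" * 120, "price": -1.0},
]

alerts, warnings = run_checks(rows)
print("alert:", alerts)
print("review later:", warnings)
```

Raising the threshold or lowering the confidence of a noisy check is one way to reduce the number of alerts over time without deleting the check itself.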

Step 6 and beyond

Use the data, collect and analyze the data and present it for human consumption. Iterate and improve – the work never stops.

Checklist

- Identify data sources

- Classify data sources

- Assess data quality

- Assess data reliability

- Create the extraction process

- Create the QA process

- Consume and use the data

We hope this data extraction services guide has helped you.
