The volume of data on the web grows daily, and collecting it manually has become almost impossible. As a result, web scraping tools and software have become increasingly popular and valuable to everyone from students to enterprises.

Whether you are collecting real estate listings, seeking industry insights, comparing prices, or generating leads, data scraping tools and software can automate the collection of raw data and deliver structured data in your desired format.

This blog contains a list of the 15 best web scraping tools and software in 2024, their major features, and pricing.

Before we get into finding out which software and tools are best for data scraping, let us understand why you need a web scraping tool or software.

Why Do You Need Web Scraping Tools and Software?

Data scraping tools are the most efficient means of data extraction. Let’s see why:

- Automation: Web scraping tools eliminate the inefficiency of manual data collection, freeing you to focus on analysis and insights.

- Scalability: These tools can effortlessly handle large datasets and complex websites, vastly exceeding the limitations of manual data gathering.

- Accuracy: By eliminating human error, web scraping tools ensure consistent and reliable data extraction.

- Flexibility: Different tools cater to various needs, from simple point-and-click interfaces for beginners to powerful programming frameworks for advanced users.

What are the Best Web Scraping Software and Tools in 2024?

Web scraping software and tools are crucial for anyone looking to gather data. Below is a curated list of the best web scraping tools, including free, open-source, and paid options.

ScrapeHero Cloud

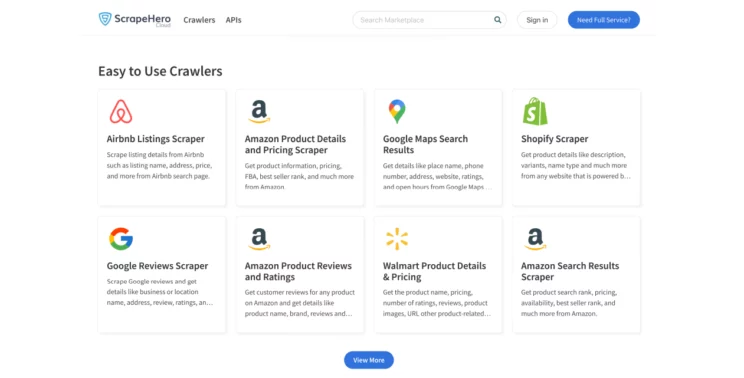

ScrapeHero Cloud is an online marketplace by ScrapeHero that offers a hassle-free web scraping experience for people with web data extraction requirements. With years of experience in web scraping services, we have used our extensive expertise to develop a number of user-friendly web scrapers.

On ScrapeHero Cloud, you can access a suite of web scraping tools like pre-built crawlers and APIs designed to effortlessly extract data from popular websites like Amazon, Google, Walmart, and many others.

Features

- ScrapeHero Cloud does not require you to download any data scraping tools or software, or to spend time learning to use them.

- ScrapeHero Cloud contains browser-based web scrapers, which can be used from any browser.

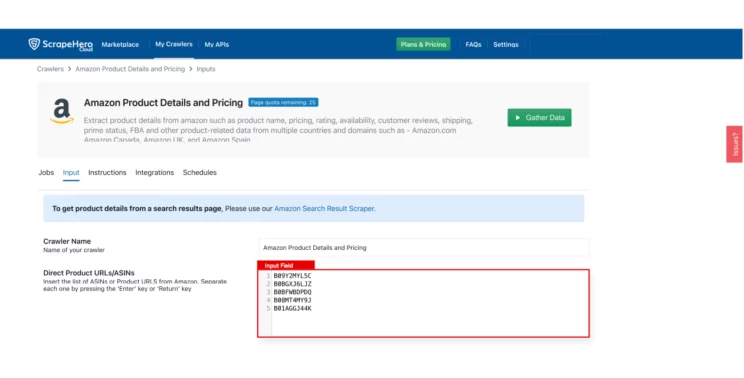

- No programming knowledge is required to use the data scrapers on ScrapeHero Cloud. With the platform, web scraping is as simple as ‘click, copy, paste, and go.’

- The following are the steps to set up a data scraper on ScrapeHero Cloud:

  1. Create an account.

  2. Select the web scraper you wish to run.

  3. Provide input and click ‘Gather Data.’ The crawler will then be up and running.

- The pre-built crawlers are highly user-friendly, speedy, and affordable.

- ScrapeHero Cloud web scrapers support data export in JSON, CSV, and Excel formats.

- The platform offers an option to schedule data scrapers and delivers dynamic data directly to your Dropbox; this way, you can keep your data up-to-date.

- The crawlers use auto-rotating proxies, and you can run multiple crawlers in parallel. This ensures cost-effectiveness and flexibility.

- ScrapeHero Cloud also has custom plans in case you do not find your particular use case in the list of web scrapers.

Pricing

ScrapeHero Cloud follows a tiered subscription model ranging from free to $3.75 for every 1000 pages monthly. The free trial version allows you to try out the web scrapers for their speed and reliability before signing up for a plan.

ScrapeHero Cloud is definitely on the more affordable end of this list, given the high quality and vast quantity of data delivered.

Scrapy

Scrapy is an open-source web scraping framework in Python used to build web scrapers. It gives you all the tools to efficiently extract data from websites, process them, and store them in your preferred structure and format.

Features

- Scrapy is built on top of Twisted, an asynchronous networking framework.

- You can export data into JSON, CSV, and XML formats.

- Scrapy is popular for its ease of use, detailed documentation, and active community.

- It runs on Linux, macOS, and Windows systems.
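To make the export formats above concrete, here is a minimal standard-library sketch that writes the same scraped records as JSON, CSV, and XML. It does not use Scrapy's own exporters; the `ITEMS` records and the function names are made up for illustration.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical scraped records; the field names are invented for this example.
ITEMS = [
    {"title": "Blue Widget", "price": "19.99"},
    {"title": "Red Widget", "price": "24.50"},
]


def to_json(items):
    """Serialize the items as a JSON array."""
    return json.dumps(items, indent=2)


def to_csv(items):
    """Serialize the items as CSV, using the first item's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(items[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(items)
    return buffer.getvalue()


def to_xml(items):
    """Serialize the items as <items><item>...</item></items>."""
    root = ET.Element("items")
    for item in items:
        node = ET.SubElement(root, "item")
        for key, value in item.items():
            ET.SubElement(node, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(to_json(ITEMS))
    print(to_csv(ITEMS))
    print(to_xml(ITEMS))
```

Each function returns a string, so the result can be written to a file or uploaded as needed.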

Pricing

Since Scrapy is an open-source framework, it is available as a free web scraping tool.

Web Unlocker - Bright Data

Bright Data’s Web Unlocker is a web scraping tool that scrapes data without getting blocked. The tool is designed to take care of proxy and unblock infrastructure for the user.

Features

- Web Unlocker can handle site-specific browser user agents, cookies, and captcha solving.

- Web Unlocker scrapes data from sites with automated IP address rotation.

- Web Unlocker adjusts in real time to stay undetected by anti-bot systems, which constantly develop new methods to block automated traffic.

- Bright Data also offers live customer support 24/7.
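The IP and user-agent rotation described above can be pictured with a small round-robin sketch. This is not how Web Unlocker works internally; it only illustrates the general idea using Python's standard library. The proxy addresses, user-agent strings, and the `RotatingSession` class are placeholders invented for this example.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints and user agents, invented for this sketch.
PROXIES = ["http://10.0.0.1:8080", "http://10.0.0.2:8080", "http://10.0.0.3:8080"]
USER_AGENTS = ["ExampleBot/1.0 (desktop)", "ExampleBot/1.0 (mobile)"]


class RotatingSession:
    """Hands out a different proxy and user agent for each request, round-robin."""

    def __init__(self, proxies, user_agents):
        self._proxies = itertools.cycle(proxies)
        self._user_agents = itertools.cycle(user_agents)

    def next_identity(self):
        """Return the (proxy, user_agent) pair to use for the next request."""
        return next(self._proxies), next(self._user_agents)

    def build_opener(self):
        """Build a urllib opener routed through the next proxy in the rotation."""
        proxy, user_agent = self.next_identity()
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        opener.addheaders = [("User-Agent", user_agent)]
        return opener
```

With real proxy endpoints, `RotatingSession(PROXIES, USER_AGENTS).build_opener().open(url, timeout=10)` would send each request through the next proxy in the list. Managed services add retries, captcha handling, and fingerprinting on top of this.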

Pricing

Web Unlocker follows a tiered subscription model ranging from a ‘pay as you go’ option to enterprise-level custom pricing. The price starts at $3/CPM for the lowest tier.

Web Unblocker - Oxylabs

Web Unblocker by Oxylabs is an AI-augmented web scraping tool. It manages the unblocking process and enables easy data extraction from websites of all complexities.

Features

- Web Unblocker offers a proxy-like integration and supports JavaScript rendering.

- This data scraping tool has a convenient dashboard to manage and track your usage statistics.

- Web Unblocker lets you extend your sessions with the same proxy to make multiple requests.

Pricing

Web Unblocker offers a one-week free trial for users to test the tool. Beyond that, pricing starts at $75/month for 5 GB.

Octoparse

Octoparse is visual web data extraction software designed specifically for non-coders. Its point-and-click interface lets you easily choose the fields you need to scrape from a website.

Features

- Octoparse offers scheduled cloud extraction, in which dynamic data is extracted in real time.

- Octoparse has built-in Regex and XPath configurations to automate data cleaning.

- Octoparse provides cloud services and IP proxy servers to bypass reCAPTCHA and blocking.

- This web scraping tool has an advanced mode that enables the customization of a data scraper to extract target data from complex sites.
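For readers unfamiliar with the Regex and XPath cleaning mentioned above, here is a rough standard-library illustration of the idea. It is not Octoparse's configuration format; the markup, class names, and function names are hypothetical.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical markup standing in for a scraped product card.
SNIPPET = (
    "<div>"
    "<span class='name'>  Blue\n   Widget </span>"
    "<span class='price'> $1,299.00 USD </span>"
    "</div>"
)

# Matches a number with optional thousands separators and decimals.
NUMBER = re.compile(r"\d[\d,]*(?:\.\d+)?")


def clean_text(raw):
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", raw).strip()


def clean_price(raw):
    """Pull the first number out of a price string; None if there is none."""
    match = NUMBER.search(raw)
    return float(match.group(0).replace(",", "")) if match else None


def extract_product(markup):
    """Select fields with XPath-style paths, then clean them with regexes."""
    root = ET.fromstring(markup)
    name = root.find(".//span[@class='name']").text
    price = root.find(".//span[@class='price']").text
    return {"name": clean_text(name), "price": clean_price(price)}
```

Note that `xml.etree.ElementTree` supports only a subset of XPath and expects well-formed markup, so real pages usually need a more forgiving parser.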

Pricing

Octoparse has a free version limited to 10 tasks per account. The higher tiers range from $75 to $208 per month. They have a custom enterprise plan as well.

Puppeteer

Puppeteer is a Node library that offers a powerful yet user-friendly API for managing Google’s headless Chrome browser. A headless browser refers to a browser that can communicate with websites but doesn’t display a graphical user interface (GUI). The web scraping tool will operate quietly in the background, executing actions according to instructions provided by an API.

Features

- Puppeteer is an open-source data scraping tool that is useful for extracting information from pages that rely on API data and JavaScript code.

- Puppeteer can take screenshots of web pages as they are rendered in the browser.

- Puppeteer automates form submission, UI testing, keyboard input, etc.

- This web scraping tool lets you create an automated testing environment using the latest JavaScript and browser features.

Pricing

Puppeteer is an open-source tool and thus is a free web scraping tool.

Playwright

Playwright is a Node library developed by Microsoft, designed for automating web browsers. It allows you to write code that can initiate a web browser, employ automation scripts to visit websites, input text, click buttons, and extract data from the internet.

Features

- Playwright was developed to enhance automated UI testing by reducing unpredictability, speeding up execution, and providing in-depth insights into browser behavior.

- Playwright offers cross-browser support, enabling it to operate with Chromium, WebKit, and Firefox.

- It integrates with continuous integration setups such as Docker, Azure Pipelines, CircleCI, and Jenkins.
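Playwright also ships official Python bindings, and the cross-browser support mentioned above can be sketched with them. The following is a minimal example under that assumption; the `page_title` helper and `SUPPORTED_ENGINES` constant are hypothetical names, and running it requires the `playwright` package and its browser binaries to be installed.

```python
SUPPORTED_ENGINES = ("chromium", "firefox", "webkit")


def page_title(url, engine="chromium"):
    """Open `url` in a headless browser of the given engine and return its title."""
    if engine not in SUPPORTED_ENGINES:
        raise ValueError(f"unknown engine: {engine!r}")

    # Imported here so this file still loads where Playwright is not installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        # p.chromium, p.firefox and p.webkit expose the same launch() interface.
        browser = getattr(p, engine).launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)
            return page.title()
        finally:
            browser.close()
```

Calling `page_title("https://example.com", engine="firefox")` runs the same script against a different browser engine without any other change.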

Pricing

Like Puppeteer, Playwright is also an open-source library and is thus a free web scraping tool.

Cheerio

Cheerio is a library that parses and manipulates HTML and XML documents. If you are building a web scraper in JavaScript, the Cheerio API speeds up development by making parsing, manipulating, and rendering markup efficient.

Features

- Cheerio allows the use of jQuery syntax while working with the downloaded data.

- Cheerio is a fast web scraping tool because it does not interpret the result as a web browser would: it does not produce a visual rendering, apply CSS, load external resources, or execute JavaScript.
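Cheerio itself is a JavaScript library, so the following is not Cheerio's API. It is a small Python sketch of the same idea: parsing already-downloaded HTML as plain markup, with no rendering, CSS, or JavaScript execution. The `LinkExtractor` class and `extract_links` function are illustrative names.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects (text, href) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> currently being read, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an open <a href=...> element.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return its links without rendering the page."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```

Because nothing is rendered or executed, this kind of parsing is fast, but it only sees content that is present in the downloaded markup.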

Pricing

Cheerio is a free and open-source web scraping tool.

Parsehub

Parsehub is an easy-to-use web scraping tool that crawls single and multiple websites. The easy, user-friendly web app can be built into the browser and has extensive documentation.

Features

- Parsehub is a web scraping tool that can handle websites that use JavaScript, AJAX, and other features like cookies, sessions, and automatic redirections.

- Parsehub uses machine learning to parse the most complex sites and generates the output file in JSON, CSV, or Google Sheets, or delivers it through an API.

- It can deal with web pages that have a lot of content on one page (like infinite scrolling), pop-up windows, and menus.

- Parsehub allows you to see the collected data in Tableau, a program for visualizing data.

Pricing

Parsehub’s free version has a limit of 5 projects with 200 pages per run. With a paid subscription, you get up to 120 private projects with unlimited pages per crawl and IP rotation. They also provide custom enterprise-level pricing.

Web Scraper.io

Web Scraper.io is an easy-to-use, highly accessible web scraping extension that can be added to Firefox and Chrome. Web Scraper lets you extract data from websites with multiple levels of navigation. It also offers a cloud service to automate web scraping.

Features

- Web Scraper has a point-and-click interface that ensures easy web scraping.

- Web Scraper supports full JavaScript execution, waits for Ajax requests, and provides pagination handlers and page scroll-down.

- Web Scraper also lets you build sitemaps from different types of selectors.

- You can export data in CSV, XLSX, and JSON formats or via Dropbox, Google Sheets, or Amazon S3.

Pricing

The Web Scraper Extension is a free web scraping tool and provides local support. Paid plans range from $50 to $300 per month and add capabilities such as cloud execution and parallel tasks.

Apify

Apify is a cloud-based web data extraction platform that offers ready-made web scraping tools and custom scraping solutions. You can build scraping bots without coding, schedule scraping tasks, and manage scraped data using this web scraping tool.

Features

- Apify lets you create scraping bots without coding through a drag-and-drop interface.

- Apify has a public scraper library where you can access and use pre-built scrapers for popular websites.

- It has a versatile actor system for various web scraping and automation tasks.

- This web scraping tool can be connected with popular platforms like Zapier, Google Sheets, and Slack for streamlined workflows.

Pricing

Apify offers a free plan with limited resources. Premium plans start from a basic tier and extend to custom enterprise solutions, with prices varying based on resource usage.

Browse AI

Browse AI provides AI-powered web scraping with advanced features like dynamic rendering, JavaScript execution, and anti-bot detection bypass. It offers both a visual scraper builder and a coding interface for experienced users.

Features

- This web scraping tool can bypass advanced bot detection countermeasures to avoid getting blocked.

- You can access Browse AI’s functionality through a robust API for seamless integration with your applications.

Pricing

Browse AI allows a free trial; paid plans start at $19/month with different resource allocations.

SerpAPI

SerpAPI focuses on search engine result page (SERP) scraping, providing access to search results from various engines like Google, Bing, and DuckDuckGo. You can extract organic and paid search results, analyze SERP features, and track keyword rankings using this web data extraction tool.

Features

- SerpAPI can extract both organic and paid search results, including titles, URLs, snippets, and ad details.

- This web scraper can be used to track keyword rankings over time and across different search engines and locations to monitor SEO performance.

- It lets you access real-time search results through its API for instant data retrieval.
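The keyword rank tracking described above can be sketched as follows. This assumes a JSON response that contains an `organic_results` list whose entries carry `position` and `link` fields, and it assumes the endpoint and parameter names shown; check SerpAPI's documentation before relying on either. The sample response is made up, and no request is sent.

```python
from urllib.parse import urlencode, urlparse

# Assumed endpoint; verify against the provider's documentation.
SEARCH_ENDPOINT = "https://serpapi.com/search.json"

# Made-up response used only to demonstrate the parsing logic.
SAMPLE_RESPONSE = {
    "organic_results": [
        {"position": 1, "title": "Example Coffee", "link": "https://www.example.com/coffee"},
        {"position": 2, "title": "Other Roasters", "link": "https://roasters.example.org/"},
    ]
}


def build_search_url(query, api_key, engine="google"):
    """Build the request URL for one search query (parameter names are assumed)."""
    params = {"engine": engine, "q": query, "api_key": api_key}
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


def rank_of_domain(response, domain):
    """Return the position of the first organic result on `domain`, or None."""
    for result in response.get("organic_results", []):
        host = urlparse(result.get("link", "")).netloc
        if host == domain or host.endswith("." + domain):
            return result.get("position")
    return None
```

Storing the returned rank with a timestamp for each keyword, engine, and location gives the time series needed to monitor SEO performance.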

Pricing

SerpAPI’s free plan has limited features; paid plans start at $50/month for developers.

Clay.com

Clay.com offers a visual web scraping tool with a focus on ease of use and automation. It’s well-suited for non-technical users who need to scrape data from simple websites.

Features

- Clay.com has point-and-click functionality that lets you extract data with just a few clicks.

- You can schedule scraping tasks to run automatically at specific intervals, ensuring you never miss valuable data updates.

- It is possible to export scraped data in various formats like CSV, JSON, and Excel.

- You can connect Clay.com with popular tools like Google Sheets and Zapier.

Pricing

Clay.com has plans that cater to a range of users, from individuals to large enterprises, with pricing reflecting the level of resources and features provided.

Selenium

Selenium is an open-source tool primarily used for web browser automation and is also suitable for web scraping, especially for experienced developers. It provides granular control over browser automation and supports various programming languages like Python, Java, and C#.

Features

- Control web browsers like Chrome, Firefox, and Edge programmatically for precise scraping tasks.

- Execute JavaScript code on scraped pages to access dynamic content and hidden data.

- Run scraping tasks in the background without opening a browser window for increased efficiency.

- Enjoy extensive customization options and granular control over scraping behavior for advanced web data extraction needs.

- Leverage the power of an open-source framework with a large community of developers for support and guidance.
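The headless mode and JavaScript execution listed above can be sketched with Selenium's Python bindings. This is a minimal example that assumes the `selenium` package and a Chrome installation are available; the helper names are hypothetical.

```python
def headless_chrome_arguments(width=1280, height=800):
    """Command-line switches that make Chrome run without a visible window."""
    return ["--headless=new", f"--window-size={width},{height}"]


def fetch_title_and_html(url):
    """Load `url` in headless Chrome and return (title, page source)."""
    # Third-party imports are kept local so this file loads without Selenium.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    for argument in headless_chrome_arguments():
        options.add_argument(argument)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # execute_script runs JavaScript inside the loaded page.
        title = driver.execute_script("return document.title;")
        # page_source is the markup as the browser currently sees it.
        return title, driver.page_source
    finally:
        # Always release the browser process, even if the page fails to load.
        driver.quit()
```

Pages that load content after the initial render usually also need an explicit wait before `page_source` is read.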

Pricing

Selenium is a free web scraping tool, but it requires a good amount of coding knowledge and setup effort, as it is a sophisticated framework for browser automation.

Conclusion

The scale and complexity of data online can be overwhelming, especially for someone without technical expertise. Web scraping tools and software are handy if the data requirement is small and the source websites aren’t complicated.

As this list demonstrates, the ideal web scraping solution depends on your specific needs and technical know-how.

If you’re a beginner seeking basic data extraction from straightforward websites, user-friendly point-and-click tools like ScrapeHero Cloud might be perfect. These tools require minimal coding and let you focus on getting the data you need without dealing with complexities.

On the other hand, web scraping tools and software cannot handle large-scale web scraping, complex logic, or captcha bypassing, and they do not scale well when the number of websites is high. A full-service web scraping provider is a better and more economical option in such cases.

Even though these web scraping tools easily extract data from web pages, they have their limits. In the long run, programming is a better way to scrape data from the web, as it provides more flexibility and yields better results.

But if you aren’t proficient in programming, your needs are complex, or you require large volumes of data to be scraped, a web scraping service will suit your requirements and make the job easier.

You can save time and obtain clean, structured data by trying ScrapeHero out – we are a full-service provider that doesn’t require using any tools, and all you get is clean data without any hassle.
