Web Scraping Software

Web scraping is widely used for collecting data from across the Internet. Web scraping software and tools power companies of all sizes every day, from Fortune 500 firms to startups and small businesses, by providing them with all kinds of data from across the web.

Popular Methods for Web Scraping

Software

There are many free and commercial web scraping tools available. They can be installed on the desktop or as browser extensions (such as Chrome and Firefox extensions), and are also known as data extraction software or tools.

Services

Some companies provide all levels of service around web scraping, from partial to full-service options. Companies that build their own web scraping tools wrap services around those tools, while full-service companies are tool agnostic and provide the most flexibility.

Scripts

A mixture of code and scripts, similar to what we provide in our tutorials, can be run for smaller web scraping projects. Python is a favorite language for such scraping scripts, but other languages such as PHP, Ruby, and Perl are also popular.
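To give a rough idea of how small such a script can be, here is a minimal sketch that uses only Python's standard library to pull names and prices out of a page. The sample HTML, the `name` and `price` class names, and the `scrape_products` function are all made up for illustration. A real script would first download the page and would need rules written for that site's actual markup.

```python
from html.parser import HTMLParser

# Hypothetical sample page; a real script would download this HTML first.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span> <span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> tags."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished rows: {"name": ..., "price": ...}
        self._field = None   # field currently being read, if any
        self._row = {}       # row being assembled

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._row[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span" and self._field:
            self._field = None
            # A row is complete once both fields have been seen.
            if len(self._row) == 2:
                self.products.append(self._row)
                self._row = {}


def scrape_products(html):
    """Return a list of {"name", "price"} dicts found in the HTML."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


if __name__ == "__main__":
    for row in scrape_products(SAMPLE_HTML):
        print(row["name"], row["price"])
```

Even in this toy version, the extraction rules are tied to one page layout, which is why scripts like this tend to need fixing whenever the target site changes.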

Web Scraping Software or Tools

Web scraping tools and software are freely available on the Internet. Some are open source and free under various licensing schemes, and some are commercial. There are also a lot of scripts and packages available in code repositories such as GitHub. In fact, the sheer volume of choices means it takes significant effort to decide which tool to use.

The two most popular types of web scraping or data extraction software are:

- Desktop software – software packages that you download from the Internet, install on your local computer desktop and run locally

- Hosted / Cloud software or Software as a Service – software that you sign up for and use online, paying for usage

Both of these methods have a very low barrier to entry. The process is designed to be very simple: go online, take out a credit card, and purchase the software, or just download it for free (in the case of open source software and scripts).

The learning curve is extremely steep

You first have to understand the tool creator’s logic and then fit into the software creator’s paradigm and thought process. Finally, you have to get used to arcane terminology describing the various elements, workflows, navigation, logic, and processes that go into successfully scraping data from a website.

Don’t want to code? ScrapeHero Cloud is exactly what you need.

With ScrapeHero Cloud, you can download data in just two clicks!

Desktop Software

Let’s take the example of a very popular piece of software used for web scraping (it will stay unnamed because it is not alone; every tool has a similar problem).

They have a whole section devoted to video tutorials.

Who doesn’t like videos? We all watch them. The problem is that these videos are not entertainment. Instead, they talk about things like Agents, Collections, Jobs, XPaths, and Lists (which seems like an important concept and no, it is not a grocery list).

Lost already? Well, you are not alone.

Most people give up after playing with the software for a little while, watching a few videos, and making a quick scraper from an example.

Even fairly technical people have a very tough time navigating and fitting the paradigm.

Software as a Service (SaaS)

Software as a Service tools do not require you to download or install software on your desktop. The service runs in the cloud or in hosted data centers.

They may require the use of Chrome or Firefox extensions which do require minimal installation.

However, the same steep learning curve applies here.

Here is an example from a SaaS tutorial (which will stay unnamed to avoid picking on it):

The best way to extract data that is spread out across many pages of a site is by building a Crawler. Based on your training, a Crawler travels to every page of that site looking for other pages that match. Crawlers are best used for when you want lots of data, but don’t know all the URLs for that site.

The key thing to note here is the phrase “Based on your training”. What that means is that a lot of work will go into it.
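To make the idea concrete, here is a minimal sketch of what a crawler does, written in plain Python rather than in any particular tool. Everything in it is an assumption for illustration: the `FAKE_SITE` dictionary stands in for a real website (a real crawler would fetch pages over HTTP), and the URL pattern plays the role of the "training" that tells the crawler which pages match.

```python
import re
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "site": URL path -> HTML.
# A real crawler would download each page instead.
FAKE_SITE = {
    "/": '<a href="/products/1">One</a> <a href="/about">About</a>',
    "/about": '<a href="/">Home</a> <a href="/products/2">Two</a>',
    "/products/1": '<a href="/products/2">Next</a>',
    "/products/2": '<a href="/">Home</a>',
}


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def crawl(site, start, pattern):
    """Walk the site breadth-first from `start`.

    Returns the visited URLs that fully match `pattern`, in visit order.
    """
    seen = {start}
    queue = deque([start])
    matches = []
    while queue:
        url = queue.popleft()
        html = site.get(url)
        if html is None:  # link to a page that does not exist
            continue
        if re.fullmatch(pattern, url):
            matches.append(url)
        parser = LinkParser()
        parser.feed(html)
        for link in parser.links:
            if link not in seen:  # never queue the same page twice
                seen.add(link)
                queue.append(link)
    return matches


if __name__ == "__main__":
    print(crawl(FAKE_SITE, "/", r"/products/\d+"))
```

Here the matching rule is a single regular expression. On a real site, working out rules that catch every page you want and nothing else is where most of the effort goes.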

If you are prepared to do this all yourself and also ready to fix these scripts and jobs when they break in the middle of a busy day, then this approach might work for you.

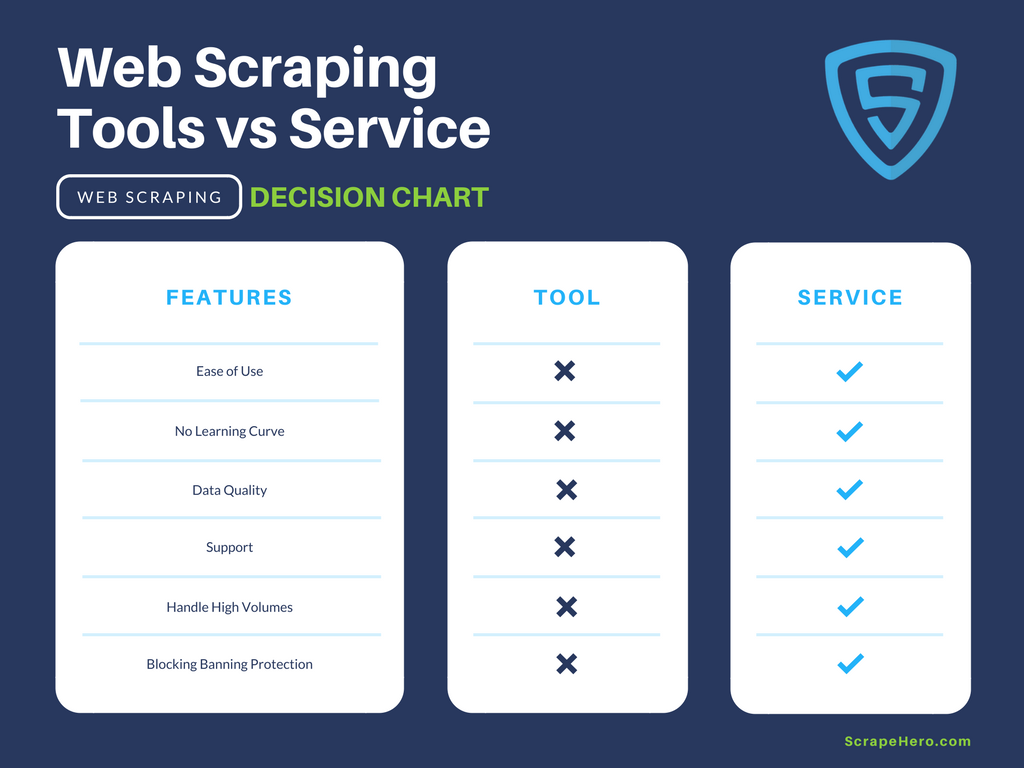

If you have the time, skills, and determination, Web Scraping Software and Tools can work for you.

However, if your business does not involve Web Scraping, it is better to save yourself the trouble and use an Enterprise Web Scraping Service instead.

We can help with your data or automation needs

Contact us to schedule a brief, introductory call with our experts and learn how we can assist with your needs.