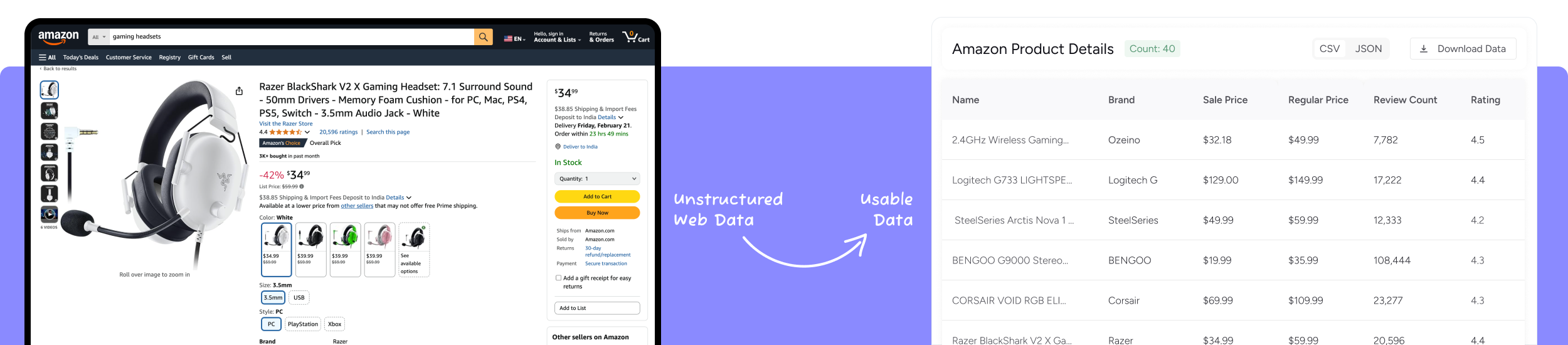

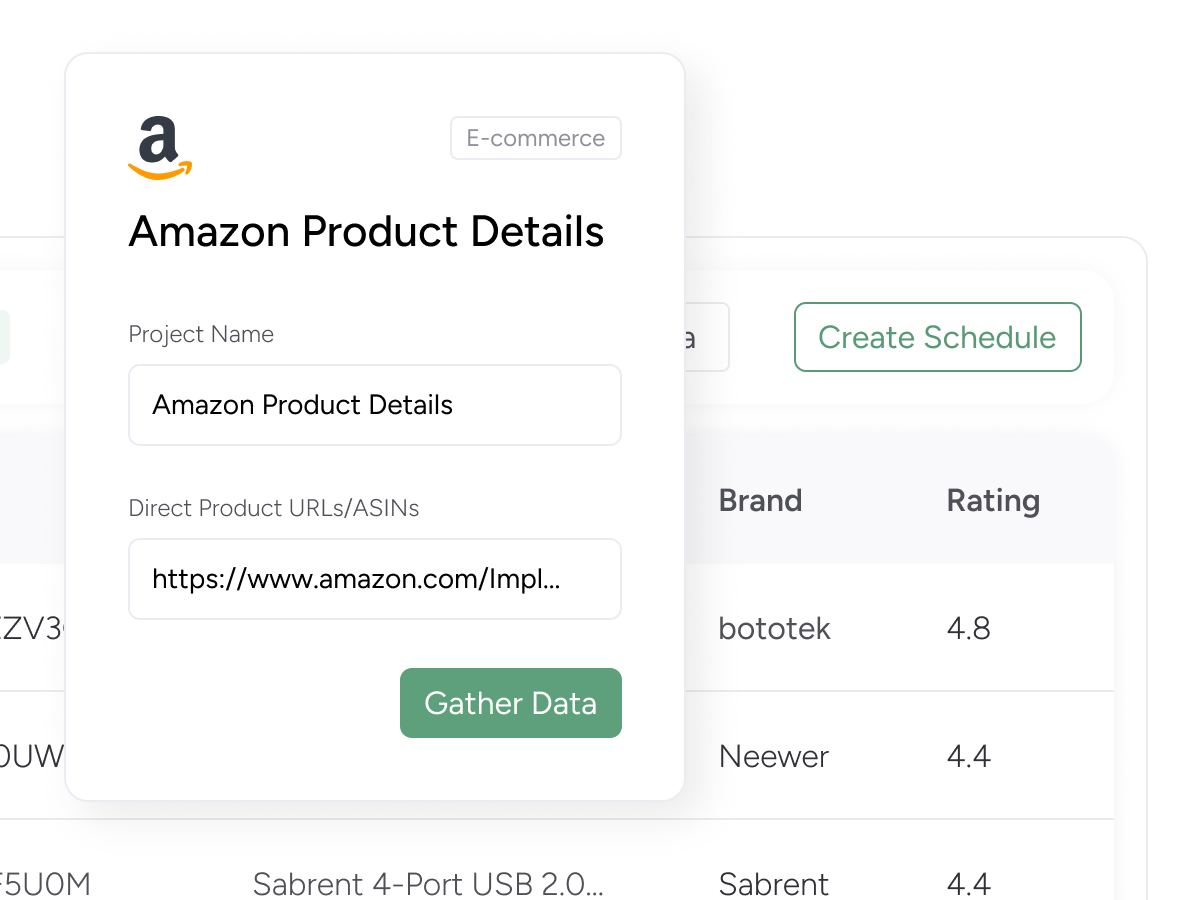

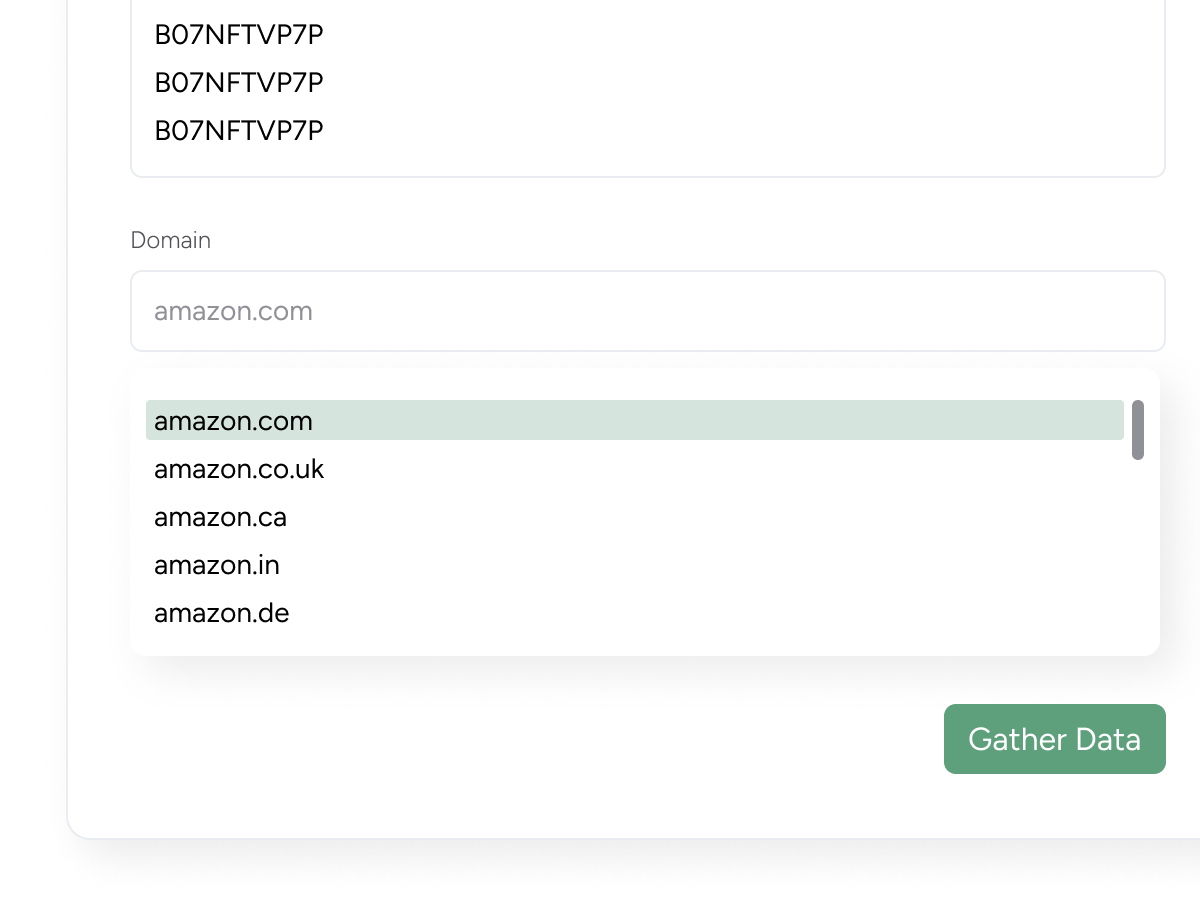

Extract product data from Amazon. Gather product details such as pricing, availability, rating, best seller rank, and 25+ data points from the Amazon product page.

It’s as easy as Copy and Paste

Download the data in Excel, CSV, or JSON formats. Link a cloud storage platform like Dropbox to store your data.

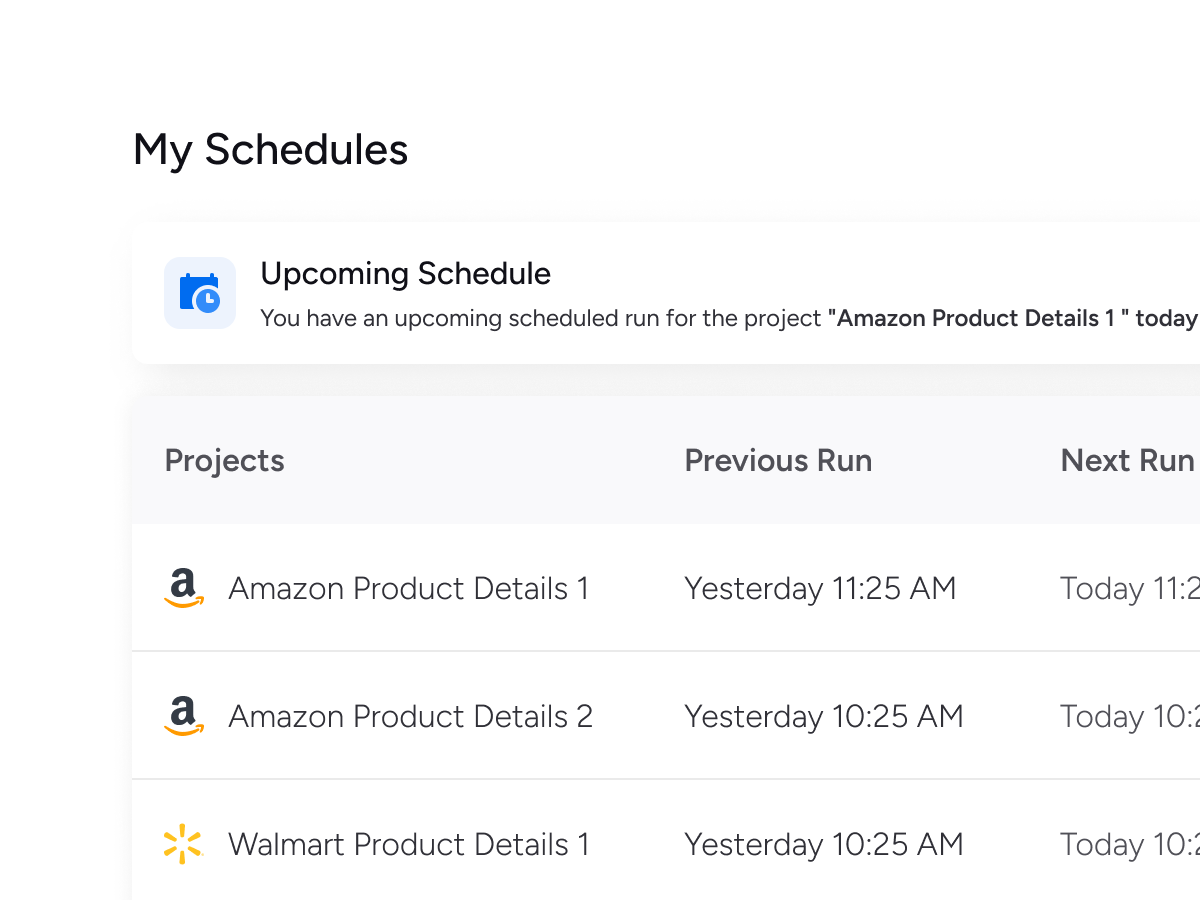

Schedule automated crawls to deliver fresh data to Dropbox, AWS S3, Google Drive, or to your app via API. Choose hourly, daily, or weekly updates to stay current.

| URL | ASIN | Name | Brand | Seller | Seller URL | Review Count | Category | Rating | Currency | Sale Price | Regular Price | Shipping Charge | Small Description | Full Description | Availability Status | Available Quantity | Model | Attributes | Product Category | Product Information | Highlighted Specifications | Image URLs | Variation Asin | Product Variations | Frequently Bought Together | Product Reviews | Sponsored Products | Rating Histogram |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| https://www.amazon.com/Ozeino-2-4GHz-Wireless-Gaming-Headset/dp/B0C4F9JGTJ/ref=sr_1_3?crid=2YJWXJ9P0M24X&dib=eyJ2IjoiMSJ9.oX9G_sVgqcJJO9XV5pAoCyLrrwpKF-Gnu_6hhezCxnolWNSnxVSiZ3y4jk5rbStSX3Z4dcJQcG4aQaPjflLCG_D0BS2Hcdv27z4Jz2cuovYlJqKvZgLDNQaX9XLAvVnGTwuY6idKjb9EuQZKL2ifD5GJp3VQwej_FMDNQobKf4hCFtvtZBgulAkeJXMmSlnipwL-AUILX0MPaMU-sjjOve-PfeaGxim_AYp8kiM-jyQ.UvPT60Xyv4Hq2ekKXmkKU_towb_KESzBBlDsMMWs-34&dib_tag=se&keywords=gaming%2Bheadphones&qid=1739906138&sprefix=gaming%2Bheadphones%2Caps%2C299&sr=8-3&th=1 | B0C4F9JGTJ | 2.4GHz Wireless Gaming Headset for PC, Ps5, Ps4 - Lossless Audio USB & Type-C Ultra Stable Gaming Headphones with Flip Microphone, 40-Hr Battery Gamer Headset for Switch, Laptop, Mobile, Mac | Ozeino | WU MEIJIAO storeWU MEIJIAO store | https://www.amazon.com/gp/help/seller/at-a-glance.html/ref=dp_merchant_link?ie=UTF8&seller=A3AUHWT8COGTZ8&asin=B0C4F9JGTJ&ref_=dp_merchant_link&isAmazonFulfilled=1 | 7,920 | [{"level": 1, "name": "Video Games", "url": "/computer-video-games-hardware-accessories/b/ref=dp_bc_aui_C_1?ie=UTF8&node=468642"}, {"level": 2, "name": "Legacy Systems", "url": "/Systems-Games/b/ref=dp_bc_aui_C_2?ie=UTF8&node=294940"}, {"level": 3, "name": "PlayStation Systems", "url": "/b/ref=dp_bc_aui_C_3?ie=UTF8&node=23563592011"}, {"level": 4, "name": "PlayStation", "url": "/PlayStation-Games/b/ref=dp_bc_aui_C_4?ie=UTF8&node=229773"}, {"level": 5, "name": "Accessories", "url": "/Accessories-PlayStation-Hardware/b/ref=dp_bc_aui_C_5?ie=UTF8&node=15891181"}] | 4.4 | USD | 32.08 | 49.99 | - | 【Amazing Stable Connection-Quick Access to Games】Real-time gaming audio with our 2.4GHz USB & Type-C ultra-low latency wireless connection. With less than 30ms delay, you can enjoy smoother operation and stay ahead of the competition, so you can enjoy an immersive lag-free wireless gaming experience. - 【Game Communication-Better Bass and Accuracy】The 50mm driver plus 2.4G lossless wireless transports you to the gaming world, letting you hear every critical step, reload, or vocal in Fortnite, Call of Duty, The Legend of Zelda and RPG, so you will never miss a step or shot during game playing. You will completely in awe with the range, precision, and audio quality your ears were experiencing. - 【Flexible and Convenient Design-Effortless in Game】Ideal intuitive button layout on the headphones for user. Multi-functional button controls let you instantly crank or lower volume and mute, quickly answer phone calls, cut songs, turn on lights, etc. Ease of use and customization, are all done with passion and priority for the user. - 【Less plug, More Play-Dual Input From 2.4GHz & Bluetooth】 Wireless gaming headset adopts high performance dual mode design. With a 2.4GHz USB dongle, which is super sturdy, lag<30ms, perfectly made for gamers. Bluetooth mode only work for phone, laptop and switch. And 3.5mm wired mode (Only support music and call). - 【Wide Compatibility with Gaming Devices】Setup the perfect entertainment system by plugging in 2.4G USB. The convenience of dual USB work seamlessly with your PS5,PS4, PC, Mac, Laptop, Switch and saves you from swapping cables. - Bluetooth function CANT'T directly SUPPORT with Mac, Ps5, Ps4,Pc, additional USB Bluetooth Adapter is required (not included). Recommend to use the 2.4G mode, it easy and amazing connect. - The 2 in 1 USB transmitter needs to be combined to connect for Ps/Ps2/Ps5/Ps4/PC/Laptop/Mac, can't be used separately. NOT:OW810 wireless gaming headset NOT Compatible with Xbox. | Previous page Next page 1 Quick Flexible Control 2 Noise Cancelling Mic 3 Amazing Battery Life 4 Soft Memory Foam If low power remind, How long can it use? . If remind low power, at least 10-20 mins left . And we suggest you can charge for 10 mins, then you can play at least for 100 mins . 10 mins charged, 100 mins used Can I use Bluetooth mode with PC and PS5/4? Bluetooth function doesn't directly support PS5, PS4,PC, additional 「USB Bluetooth Adapter」 is required (not included), recommend to use the 2.4G USB mode in PS5, PS4, PC devices. How do I mute the microphone? 1. Turn on/off the mic : Double-click the button "+" . 2. Check the mute setting of your device. 3. Directly to ask seller. (We always here) How to connect the PS5/PS4? 1.Connect the USB into USB port on PS4/5 main box 2.Setting headset to 2.4GHz mode 2.Console settings: Main menu-Settings-Devices-Audio-Audio devices-Set input & output device to OW810-Set output to headphones to all audion-Set volume control to 100% Is this headset with wired mode? · We attached a 3.5mm cable, when headset is low power, wired mode could be used for urgent · Note: Our 3.5mm cable & USB transmiter not compatible with xbox series s. | In Stock | - | OW810 | color: White | Video Games > Legacy Systems > PlayStation Systems > PlayStation > Accessories | [{"ASIN": "B0C4F9JGTJ", "Age Range (Description)": "Adult", "Amazon Best Sellers Rank": "#23 in Video Games , #1 in PlayStation 2 Accessories , #1 in PlayStation Accessories , #3 in PlayStation 5 Headsets", "Audio Driver Size": "50 Millimeters", "Audio Driver Type": "Dynamic Driver", "Audio Latency": "20 Milliseconds", "Batteries": "1 Lithium Metal batteries required. (included)", "Battery Life": "40 Hours", "Bluetooth Range": "15 Feet", "Bluetooth Version": "5.3", "Cable Feature": "Detachable", "Charging Time": "2.98 Hours", "Compatible Devices": "playstation_2, PlayStation_5, playstation, nintendo_switch, playstation_4", "Connectivity Technology": "Wireless", "Control Method": "Touch", "Control Type": "Volume Control", "Controller Type": "Touch", "Country of Origin": "China", "Date First Available": "May 8, 2023", "Earpiece Shape": "Circle", "Headphones Jack": "3.5 mm Jack", "Included Components": "1* Wireless Gaming Headset Black, 1* USB & Type -C Transmitter, 1* User Manual, 1* 3.5 mm Aux Cable & Charging Cable", "Is Autographed": "No", "Item Weight": "1.08 pounds", "Item model number": "OW810", "Manufacturer": "Ozeino", "Material": "Plastic", "Model Name": "OW810", "Noise Control": "Sound Isolation", "Number of Items": "1", "Package Type": "Standard Packaging", "Product Dimensions": "5.91 x 3.94 x 7.87 inches", "Recommended Uses For Product": "Gaming", "Specific Uses For Product": "Enjoy the game, music", "Style": "Modern", "Theme": "Video Game", "Unit Count": "1.0 Count", "Wireless Communication Technology": "USB-C&A,Bluetooth"}] | [{"Brand": "Ozeino", "Color": "White", "Ear Placement": "Over Ear", "Form Factor": "Over Ear", "Impedance": "32 Ohm"}] | https://m.media-amazon.com/images/I/71nUYWaf0RL._AC_SL1500_.jpg, https://m.media-amazon.com/images/I/816uvsDE8rL._AC_SL1500_.jpg, https://m.media-amazon.com/images/I/81RD5G0eFfL._AC_SL1500_.jpg, https://m.media-amazon.com/images/I/71Lw8KkOo2L._AC_SL1500_.jpg, https://m.media-amazon.com/images/I/7121w4lIQvL._AC_SL1500_.jpg, https://m.media-amazon.com/images/I/81FiSCzfkZL._AC_SL1500_.jpg | [{"asin": "B0DR8YG8D5", "color_name": "Red"}, {"asin": "B0DR8WLWDF", "color_name": "Blue"}, {"asin": "B0C4F9JGTJ", "color_name": "White"}, {"asin": "B0C49F6NG6", "color_name": "Black"}, {"asin": "B0DF7D1WLM", "color_name": "Pink"}, {"asin": "B0DJR519T2", "color_name": "Camouflage"}, {"asin": "B0D95Y2Z1K", "color_name": "Yellow"}] | [{"color_name": ["Black", "Blue", "Camouflage", "Pink", "Red", "White", "Yellow"]}] | [{"link": "https://www.amazon.com/Gtheos-Bluetooth-Headphones-Detachable-Microphone/dp/B0B4B2HW2N/ref=pd_bxgy_d_sccl_1/138-1533047-4336725?pd_rd_w=0e6MV&content-id=amzn1.sym.de9a1315-b9df-4c24-863c-7afcb2e4cc0a&pf_rd_p=de9a1315-b9df-4c24-863c-7afcb2e4cc0a&pf_rd_r=3HYQN9N1DWJ06DEX71N8&pd_rd_wg=oBtem&pd_rd_r=6a03dfba-02b7-4e7d-b756-9adaa7f5ce50&pd_rd_i=B0B4B2HW2N&psc=1", "price": "$33.99", "title": "Gtheos 2.4GHz Wireless Gaming Headset for PS5, PS4 Fortnite & Call of Duty/FPS Gamers, PC, Nintendo Switch, Bluetooth 5.3 Gaming Headphones with Noise Canceling Mic, Stereo Sound, 40+Hr Battery -White"}, {"link": "https://www.amazon.com/OIVO-Controller-Compatible-Playstation-Indicators/dp/B08LZGPPBH/ref=pd_bxgy_d_sccl_2/138-1533047-4336725?pd_rd_w=0e6MV&content-id=amzn1.sym.de9a1315-b9df-4c24-863c-7afcb2e4cc0a&pf_rd_p=de9a1315-b9df-4c24-863c-7afcb2e4cc0a&pf_rd_r=3HYQN9N1DWJ06DEX71N8&pd_rd_wg=oBtem&pd_rd_r=6a03dfba-02b7-4e7d-b756-9adaa7f5ce50&pd_rd_i=B08LZGPPBH&psc=1", "price": "$19.99", "title": "OIVO PS5 Controller Charger PS5 Accessories Kits with Fast Charging AC Adapter, Controller Charging Stand for PlayStation 5, Docking Station Replacement for DualSense Charging Station"}] | [{"author_name": "Charity Wiberg", "author_profile": "https://www.amazon.com/gp/profile/amzn1.account.AEJZQCDLKS2BWTY4V4LETRFFF6YQ/ref=cm_cr_dp_d_gw_pop?ie=UTF8", "badge": "Verified Purchase", "rating": "5.0", "review_text": "I have been using this headset for a couple months now and can say I am VERY satisfied. At a budget-friendly price, they deliver impressive sound performance. The build quality feels sturdy, yet lightweight, making them durable without being heavy on the head. They are also extremely comfortable will nice ear muffs. While also having a nice clean color for both the base and LED\u2019s. Its Bluetooth is amazing. I was able to connect it to a Roku TV, laptop, iPhone, and iPad. It remains well connected even after traveling a far distance away from a device. Allowing for music sessions while cleaning. Buttons were easy to use a simple power on and volume adjustment system that was straightforward to use. I overall I recommend these headphones. They have good sound quality for games and it\u2019s good quality for the price.", "review_title": "Affordable, Quality, And Comfort", "review_url": "https://www.amazon.com/gp/customer-reviews/R2MN7XJDFQDWED/ref=cm_cr_dp_d_rvw_ttl?ie=UTF8&ASIN=B0C4F9JGTJ", "reviewed_date": "January 09, 2025", "reviewed_product_variant": "Color: White"}, {"author_name": "Marjorie", "author_profile": "https://www.amazon.com/gp/profile/amzn1.account.AFPQY5LOYGKB6FYKTSFJPQIXHI5Q/ref=cm_cr_dp_d_gw_tr?ie=UTF8", "badge": "Verified Purchase", "rating": "5.0", "review_text": "I bought this Bluetooth headset for my son as a Christmas gift to use with his PS4, and it has exceeded all expectations! He absolutely loves it and says it\u2019s the best headset in the world. The sound quality is incredible, with crystal-clear audio and great bass, making his gaming experience immersive and enjoyable. The mic picks up his voice perfectly, so he has no trouble communicating with his friends during multiplayer games. The headset is also super comfortable, even during long gaming sessions, and the battery life is outstanding\u2014it lasts all day without needing a recharge. Setup was quick and easy, and it connected to his PS4 seamlessly. He hasn\u2019t stopped raving about it, and I\u2019m thrilled I found something he enjoys so much. Highly recommend this headset to any gamer out there\u2014it\u2019s worth every penny!", "review_title": "Best headset ever", "review_url": "https://www.amazon.com/gp/customer-reviews/R10ONB3FT7J2MM/ref=cm_cr_dp_d_rvw_ttl?ie=UTF8&ASIN=B0C4F9JGTJ", "reviewed_date": "December 29, 2024", "reviewed_product_variant": "Color: White"}, {"author_name": "Professor Anthrax & The Witch", "author_profile": "https://www.amazon.com/gp/profile/amzn1.account.AEXAODXMPHBNDELU76IBTQB2RD2A/ref=cm_cr_dp_d_gw_pop?ie=UTF8", "badge": "Verified Purchase", "rating": "5.0", "review_text": "These belong to my wife. She has had these headphones for over six months of constant use. She works a job that requires 8-11 hours per day of use or standby (powered on). She averages 2-4 hours per day of talk time. She tells me these headphones are, and continue to be, very comfortable to use; and the sound quality through the headphones is excellent, with no distortion. She says the microphone gain is also good; she has never had anyone complain they couldn't hear her, like her original headset. Even after over six months of use they are in excellent condition, showing no signs of wear. She uses them primarily with the 2.5 GHz connection, although the few times she used the Bluetooth connection they have also connected quickly and flawlessly. She never had an issue with them running out of charge while working; although she does plug them in each day after work. Overall a decent quality product for the price.", "review_title": "Comfortable, Good sound quality, Good Microphone quality, Good battery life.", "review_url": "https://www.amazon.com/gp/customer-reviews/R3L9XNTV2NJH2P/ref=cm_cr_dp_d_rvw_ttl?ie=UTF8&ASIN=B0C4F9JGTJ", "reviewed_date": "February 04, 2025", "reviewed_product_variant": "Color: Black"}, {"author_name": "Cass", "author_profile": "https://www.amazon.com/gp/profile/amzn1.account.AG6IGXL5SWAOVBOLZ3X7LQOPMDDQ/ref=cm_cr_dp_d_gw_tr?ie=UTF8", "badge": "Verified Purchase", "rating": "5.0", "review_text": "Bought these for my husband so he can ignore his wife and kids with ease (lol) while playing his games. They have great noise cancellation, and he says the mute feature button for the mic is \u201cexclusive\u201d and easy to use quickly while dunking on his opponents or trying to cut off the sound of children screaming in the background. The led lights are sick. Bluetooth is easy to set up and pairs effortlessly. Battery life is incredible. All around good buy. They\u2019ve outlasted several other inferior products by a mile and is quite durable.", "review_title": "Extraordinary Headphones, with great quality at a great price!", "review_url": "https://www.amazon.com/gp/customer-reviews/R1F19H0FUUPW1A/ref=cm_cr_dp_d_rvw_ttl?ie=UTF8&ASIN=B0C4F9JGTJ", "reviewed_date": "January 08, 2025", "reviewed_product_variant": "Color: White"}, {"author_name": "Jennifer", "author_profile": "https://www.amazon.com/gp/profile/amzn1.account.AHGNQVNCPGABQ3XZHGKZTDILTCHA/ref=cm_cr_dp_d_gw_tr?ie=UTF8", "badge": "Verified Purchase", "rating": "5.0", "review_text": "I recently purchased this headset for my son, and it\u2019s been an absolute game-changer! It works flawlessly with the PS5, delivering great sound quality and crystal-clear communication. The noise-cancellation feature really helps him immerse in the game without distractions, and the comfort level is top-notch\u2014perfect for those long gaming sessions. The durability is impressive as well. After a few weeks of heavy use, it\u2019s holding up great, which is a relief for me as a parent. The built-in mic works perfectly for in-game chats, making it easy to communicate with friends or teammates. Overall, this headset has exceeded expectations. Highly recommend it for anyone looking for a reliable, high-quality option for PS5 gaming!", "review_title": "Perfect headset", "review_url": "https://www.amazon.com/gp/customer-reviews/RKJ2UD7FJ7BF3/ref=cm_cr_dp_d_rvw_ttl?ie=UTF8&ASIN=B0C4F9JGTJ", "reviewed_date": "December 30, 2024", "reviewed_product_variant": "Color: White"}, {"author_name": "Miranda Ryan", "author_profile": "https://www.amazon.com/gp/profile/amzn1.account.AEMVKC2MVQGYU5SULN3VESBGEFSQ/ref=cm_cr_dp_d_gw_tr?ie=UTF8", "badge": "Verified Purchase", "rating": "4.0", "review_text": "Like all the other reviews posted for this product, I've been impressed with the sound, functionality, and comfort the headset provides. Soft padding for the ears and the top of the head makes you forget you're wearing a headset, and the button commands for a quick mute, or to change songs, play songs, etc, are easy to map out and memorize once they're on your head. Unfortunately, after only 3-4 months of owning the product, the mic has come loose, and my party/call members are complaining of static through the mic. The mic is busted now, but the sound quality still lives; so no more calls for these headphones. 🙁 If you decide to get these, note that the reviews are accurate; I've been incredibly happy with the performance and comfort -- just, treat the mic real gently, or you might put it in an early grave.", "review_title": "Fragile Mic", "review_url": "https://www.amazon.com/gp/customer-reviews/R2KHAKAG2CRBU0/ref=cm_cr_dp_d_rvw_ttl?ie=UTF8&ASIN=B0C4F9JGTJ", "reviewed_date": "January 15, 2025", "reviewed_product_variant": "Color: White"}, {"author_name": "Jordan Baker", "author_profile": "https://www.amazon.com/gp/profile/amzn1.account.AGM47O2TFY7TOLBNPQDWLI7HYBWQ/ref=cm_cr_dp_d_gw_pop?ie=UTF8", "badge": "Verified Purchase", "rating": "5.0", "review_text": "I like the say on the low end of headsets because I do not want to spend $100-$200 in my opinion. These good great for gaming and they are builds with arguably good quality. For the price these are pretty good and I believe they have a multi switch so you can plug the usb like I did into your control and the smaller into your computer Vic Versa. Lastly just love the way they feel on my head and really comfortable at that as well. Sound quality is great and I can shake my head around and they won\u2019t come off. Has a wireless or wire mode is is a plus and also again everthing your getting at this price is probably one of the better headphones on Amazon you can buy at a reasonable good price .", "review_title": "Good Gaming Headset for the price", "review_url": "https://www.amazon.com/gp/customer-reviews/R35217UUCTC5SE/ref=cm_cr_dp_d_rvw_ttl?ie=UTF8&ASIN=B0C4F9JGTJ", "reviewed_date": "January 17, 2025", "reviewed_product_variant": "Color: White"}, {"author_name": "Roger Handt", "author_profile": null, "badge": "Verified Purchase", "rating": "5.0", "review_text": "Das Headset ist stabil verarbeitet und es ist aufgrund des relativ geringen Gewichts sehr angenehm zu tragen. Ich habe relativ gro\u00dfe Ohren und deshalb Probleme, ein passendes Headset zu finden. Mit diesem habe ich keine Probleme. Zur technischen Seite: Die WiFi-Konnektivit\u00e4t funktioniert hervorragend, sowohl mit dem beiliegenden Adapter als auch per Bluetooth mit dem Smartphone gekoppelt. Das Noise-Canceling ist solide, der Kopfh\u00f6rer umschlie\u00dft die Ohren vollst\u00e4ndig und einigerma\u00dfen stramm, so werden Umgebungsger\u00e4usche eh schon gut ged\u00e4mpft. Die Akkulaufzeit hab ich noch nicht vollst\u00e4ndig ausgereizt, aber auch eine lange Gaming-Session \u00fcber hat der Akku bisher gut gehalten. Die Mikrofon-Qualit\u00e4t ist gem\u00e4\u00df meiner Mitspieler auch sehr solide. Wer ein g\u00fcnstiges Headset sucht, ist mit diesem Modell in jedem Fall gut beraten.", "review_title": "Sehr gute Preis/Leistung!", "review_url": null, "reviewed_date": "January 25, 2025", "reviewed_product_variant": "Color: White"}, {"author_name": "Mickael", "author_profile": null, "badge": "Verified Purchase", "rating": "5.0", "review_text": "Ce casque OZeing est une vraie surprise ! La connexion 2.4 GHz en Wi-Fi est ultra stable, sans latence, ce qui est parfait pour le gaming. Le son est clair, bien \u00e9quilibr\u00e9, avec des basses immersives qui donnent une vraie profondeur aux jeux. Le micro est \u00e9galement de bonne qualit\u00e9, avec une voix nette et sans bruit parasite. C\u00f4t\u00e9 confort, rien \u00e0 redire : les coussinets sont doux et agr\u00e9ables m\u00eame apr\u00e8s plusieurs heures de jeu. Et l\u2019autonomie est excellente, ce qui \u00e9vite d\u2019avoir \u00e0 le recharger trop souvent. Bref, un excellent rapport qualit\u00e9-prix pour ceux qui cherchent un casque sans fil performant et abordable. Je recommande sans h\u00e9siter.", "review_title": "Excellent casque gaming sans fil !", "review_url": null, "reviewed_date": "January 15, 2025", "reviewed_product_variant": "Color: White"}, {"author_name": "Tina", "author_profile": null, "badge": "Verified Purchase", "rating": "5.0", "review_text": "Dieses Headset hat mich wirklich \u00fcberzeugt und geh\u00f6rt definitiv zu den besten, die ich bisher genutzt habe. *Schnelle Lieferung*: Die Lieferung war unglaublich flott! Innerhalb k\u00fcrzester Zeit war das Headset bei mir, sicher verpackt und in einwandfreiem Zustand. So muss es sein! *Stabil und hochwertig*: Die Verarbeitung ist top. Das Headset f\u00fchlt sich sehr robust an und macht den Eindruck, dass es auch bei h\u00e4ufiger Nutzung lange h\u00e4lt. *Exzellente Klang- und Sprachqualit\u00e4t*: Der Sound ist ein echtes Highlight \u2013 detailliert, kraftvoll und perfekt abgestimmt. Egal ob f\u00fcr Gaming, Musik oder Filme, der Klang ist einfach fantastisch. Das Mikrofon liefert zudem eine klare \u00dcbertragung ohne St\u00f6rger\u00e4usche, was gerade beim Spielen mit Freunden super wichtig ist. *Modernes Design*: Das Headset sieht richtig gut aus! Es kombiniert ein modernes, ansprechendes Design mit hohem Tragekomfort. Selbst nach Stunden bleibt es bequem und angenehm zu tragen. Alles in allem bin ich mehr als zufrieden. Dieses Headset ist f\u00fcr jeden Gamer, der Wert auf Qualit\u00e4t und Design legt, eine ausgezeichnete Wahl. Von mir gibt es volle 5 Sterne!", "review_title": "Perfektes Gaming-Headset \u2013 klare Empfehlung!", "review_url": null, "reviewed_date": "December 11, 2024", "reviewed_product_variant": "Color: Black"}, {"author_name": "Nikkolos", "author_profile": null, "badge": "Verified Purchase", "rating": "5.0", "review_text": "Au premier abord,j'ai voulu le renvoyer ,mais apr\u00e8s comparaison avec un jbl d' un prix plus \u00e9lev\u00e9e : r\u00e9sultat = y a pas photo ! c'est un super casque ! Compatible avec tout ( ma tv , console ps5/ps4, mon t\u00e9l\u00e9phone, d'une simplicit\u00e9 enfantine pour se connecter \u00e0 tout ses appareils. Le son est excellent, immersif et les basses profondes sans Pass\u00e9s au dessus des aigu\u00ebs et m\u00e9dium. Plage de fr\u00e9quences excellente je suis bluff\u00e9 ! Pour une utilisation domestique sur console, regarder un film sur tv et \u00e9couter la musique sur you tube par ex. C'est le Top ! J'ai des casques haut de gammes, Marshall, jbl , Bose ect....il a rien \u00e0 leurs envier ,ce casque est vraiment tr\u00e8s bon ! Ne sature pas lvl \u00e0 fond. N'h\u00e9sitez pas et l' \u00e9coutez !!! Vous serez pas d\u00e9\u00e7u et apr\u00e8s 10h00 d'\u00e9coute il fonctionne encore ! Qe recharge super vite(2 h00) mais en 10 min de charges vous avez d\u00e9j\u00e0 minimum 2 h00 d'\u00e9coute. J'ai v\u00e9rifi\u00e9 ! J'ai rajouter une mousse sur l'arceau de t\u00eate pour am\u00e9liorer son confort et il me fait pas mal aux oreilles ni \u00e0 la t\u00eate ! Le micro r\u00e9tractable est super bien bien pens\u00e9 et les boutons sont simples et intuitifs ( pas besoins d'un bac + 5 ) . On vois que c'est un casque consu par un des gamers, qui aime la qualit\u00e9 du son . Avec ps5 j'ai le son 3D Sony qui est excellent. ( sur ps5 tout les casques milieu de game sont compatible 3D) pas besoin du casque officiel c tjrs bon \u00e0 savoir. Bon jeu et bonne \u00e9coute surtout !", "review_title": "Rapport qualit\u00e9 prix g\u00e9niale", "review_url": null, "reviewed_date": "September 21, 2024", "reviewed_product_variant": "Color: Black"}, {"author_name": "Sandrine S", "author_profile": null, "badge": "Verified Purchase", "rating": "5.0", "review_text": "Bon son, confortable, beau design, fonctionne en Bluetooth ou 2.4G avec cl\u00e9 USB. Tr\u00e8s bon rapport qualit\u00e9/ prix.", "review_title": "Tr\u00e8s bon casque gaming.", "review_url": null, "reviewed_date": "January 14, 2025", "reviewed_product_variant": "Color: Black"}] | [{"asin": "B0DJW2X8BX", "image_url": "https://m.media-amazon.com/images/I/41ofZctOgqL._AC_UF480,480_SR480,480_.jpg", "price": "$39.99", "product_name": "Wireless Gaming Headset for PS5, PS4, Elden Ring, PC, Mac, Switch, Bluetooth 5.3 Ga...", "rating": "5", "reviews": "534", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDY2MTMzMTYwNTIwMjo6Ojo&url=%2Fdp%2FB0DJW2X8BX%2Fref%3Dsspa_dk_detail_0%3Fpsc%3D1%26pd_rd_i%3DB0DJW2X8BX%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWM"}, {"asin": "B0D69Z3QR4", "image_url": "https://m.media-amazon.com/images/I/41Rjxnr8aUL._AC_UF480,480_SR480,480_.jpghttps://m.media-amazon.com/images/I/216-OX9rBaL.png", "price": "$32.99", "product_name": "3D Stereo Wireless Gaming Headset for PC, PS4, PS5, Mac, Switch, Mobile | Bluetooth...", "rating": "4", "reviews": "400", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDY0Nzk1NzI0NjUwMjo6Ojo&url=%2Fdp%2FB0D69Z3QR4%2Fref%3Dsspa_dk_detail_1%3Fpsc%3D1%26pd_rd_i%3DB0D69Z3QR4%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWM"}, {"asin": "B09TB15CTL", "image_url": "https://m.media-amazon.com/images/I/41o-o1sxliL._AC_UF480,480_SR480,480_.jpg", "price": "$16.99", "product_name": "Gaming Headset for PC, Ps4, Ps5, Xbox Headset with 7.1 Surround Sound, Gaming Headp...", "rating": "4", "reviews": "5,095", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDQ4NDU3MTU0NzcwMjo6Ojo&url=%2Fdp%2FB09TB15CTL%2Fref%3Dsspa_dk_detail_2%3Fpsc%3D1%26pd_rd_i%3DB09TB15CTL%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWMhttps://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDQ4NDU3MTU0NzcwMjo6Ojo&url=%2Fdp%2FB09TB15CTL%2Fref%3Dsspa_dk_detail_2%3Fpsc%3D1%26pd_rd_i%3DB09TB15CTL%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWM"}, {"asin": "B0BFR75H2G", "image_url": "https://m.media-amazon.com/images/I/41LAVzxA7pL._AC_UF480,480_SR480,480_.jpg", "price": "$59.99", "product_name": "SOMIC G810 Wireless Headset 2.4G Low Latency Headset for PC PS4 PS5 Laptop, Bluetooth 5.2 Wireless Headphone with Built-in Mic, 50H Playtime, RGB Light Foldable for Gamer (Xbox Only Work in Wired)", "rating": "4", "reviews": "278", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjIwMDE2MDI4MTQ2NjM5ODo6Ojo&url=%2Fdp%2FB0BFR75H2G%2Fref%3Dsspa_dk_detail_3%3Fpsc%3D1%26pd_rd_i%3DB0BFR75H2G%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWM"}, {"asin": "B0D3HV5M3Y", "image_url": "https://m.media-amazon.com/images/I/41MUVUXwcyL._AC_UF480,480_SR480,480_.jpg", "price": "$29.99", "product_name": "Wireless Gaming Headset for PC, PS4, PS5, Mac,Switch,2.4GHz USB Gaming Headset with...", "rating": "4", "reviews": "359", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDY2ODU1OTI5NDcwMjo6Ojo&url=%2Fdp%2FB0D3HV5M3Y%2Fref%3Dsspa_dk_detail_4%3Fpsc%3D1%26pd_rd_i%3DB0D3HV5M3Y%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWM"}, {"asin": "B0878W5F4X", "image_url": "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAoAAAAYCAYAAADDLGwtAAAJT3pUWHRSYXcgcHJvZmlsZSB0eXBlIGV4aWYAAHjarZhrkuM4DoT/8xR7BIIvkMfhM2JusMffD5Sruh5dMz0RW26bMi1RIDKRCbXb//3ruP/wF0rwLmWtpZXi+UsttdA5qP75a/dTfLqfz19/jfJ53snbRYGpyBifr/q6QDrz+dcFb6fL+Dzv6uuXUF8LyfvC9y/ane14fQyS+fDMS3ot1PZzUFrVj6GO10LzdeIN5fVO72E9g313nyaULK3MjWIIO0r09zM9EUR7S+yMz2cNdmTHIRZ3p9prMRLyaXtvo/cfE/QpyeWVS/c1++9HX5If+ms+fslleVuo/P4HyV/m4/ttwscbx9eRY/rTDyzUvm3n9T5n1XP2s7ueChktL0bdZMvbMpw4SHm8lxVeyjtzrPfVeFUIOYF8+ekHrylNAqgcJ0mWdDmy7zhlEmIKOyhjCBOgbK5GDS3Mi1iyl5ygscUVK5jNsF2MTIf3WOTet937TVi//BJODcJiwiU/vtzf/fhvXu6caSkSX588wQviCsZrwjDk7JOzAETOC7d8E/z2esHvP/AHqoJgvmmubLD78SwxsvziVrw4R87LjE8JidP1WoAUce9MMNA+iS8SsxTxGoKKkMcKQJ3IQ0xhgIDkHBZBhhRjCU4DJcO9uUblnhsyQmXTaBNA5Fiigk2LHbBSyvBHU4VDPceccs4la64ut9xLLKnkUooWE7muUZNmLapatWmvsaaaa6laa221t9AiGphbadpqa6334Do36qzVOb8zM8KII408ytBRRxt9Qp+ZZp5l6qyzzb7CiguZWGXpqqutvsVtlGKnnXfZuutuux+4duJJJ59y9NTTTn9HTV5l+/X1L1CTF2rhImXn6TtqzDrVtyXE5CQbZiAWkoC4GgIQOhhmvkpKwZAzzHwLFEUOBJkNG7fEEAPCtCXkI+/Y/ULuj3Bzuf4RbuGfkHMG3f8DOQd033H7DWrLtHlexJ4qtJz6SPWdQCKq62H0eshG5Vs3f/t57Gs3LbPp6MMSYntNGuduMpzkTb1Tair7TFwwJ9ZeWc+W3HZdh+iinsO99Ui0sdYef/1scUV1dnCeM/i9bZI+9iTrLWdJWQwiG1M2V/1hzL25LeS8s1Le9fje8LY0ds1JkNGQp+9z9NUP4XDLWTfJUkwglXWjTCWsoMO7HUePu7Ybcsqb6Lb2Nez7OmmxhaRnhnD0zsVV7nb26cVG3/cotU8XuCxP8l2Jw/K6a5zEcZPsfxojduHLE2Pe+ZzutsWcQu4ntUXadirsiXHsbZ7gZ1Zpx8OlvfYZMy2NkmfSsXxuAz5QLG05eNMOy5S0ICFrFFkoX8hplAlRoV9XHWoWc7RPNpRjh7hHEwHcCEeI3U0D69lTj7P/vCfW4pZhHgmTnOAVJwfLXu+57O3aTZ3Qvpwdz5wrlFj7oEbCqfFQsjO1HdagibC9AskRSNjik/oQT2E/xw1dEnYJg0JMhYrsPVbWAlI5PWsrii3bRQ2P3Fj/mPICnrreO+c61+7oURa2lZ89dE+b8Id7PXkcnx/kenblhlgWA3gRQcg4FDCtmOqQ0U/VBUH4ypdUqIZA8v2NsXRoKL22PV1ZaOE2nla5RDMDgro6BtSsiMk6uQhV1Utuq+VNfsrxe8KCRrpV4mSTrjeStWafnDVQzVdhhfCHW0Q7Ib8UN/YNEp4/5bsIp/7aXWvbquSggxLHJCida27fpp4ONTchFjbQDDWEYme9udLdBtQYq9FbAFPzcMCapJYR8qmYIfvEtY/WtfqQNijVEVpyYr5wyirzWN2YGgOsUUlNRFpb4U0tdKL7dgjQwHxVBjN6RmcHeMHoYbYgflovnYvuuUQPYeaYgCbV00MYZJ7S3Mm/EuFvNVZaieVqfORgHkMpge8RiG2tSUbz6du4Hl6QF5rPffjHsXYMpLZHh5NF5254tJDy6OFK2r4pYumlYjJzUVYnYo4xFfROmqJ8DRvg7YaP+4mYJvH0ynjKgLDD8n5Qz76OBzhqZWmngcE5m7AwfhswUJXRdAU3rOxG7GegjHNTuPs8FChQGMs8ycDGqsZLxGtb82vMOt2PQGiSXojKGNR15jCH3xs3wgN3iKOhTmktjJmc1YNCwqOJrShKmfetoWPPOygXhRnLqQl9W7VrHQE1pmlGOYB08xsOpmR/r+ggJTe92jLJidje4qMMWuixB09d69mTL/v7lt525D5viWi15jFJ0uMRyyofvz5zLEtjNjuvM7LNYVWKGBidD40WndvCyXyjKUAUClz2p7VaCKsBJrWQY6BPwHQGvobbCEmJbEV3kIGTsbPtkNtBzVNGKPypLIIeJDxldxQFNtKR0HnRxoy0CpoNqYTGC4XxgJwxDgwHg9RBRP6jfHxtH36Sk0MXtYUkoN9hOVi019UweH9CeRy30GddeaG7AdN6JS9moxnknGXtpZPgFZOaaiXkyBbiJCQQB8P0SkYyFt6WApdSZ5hqjduaJGSeZgxhb7Oi85pN/esLKPdFDX5TdrGJkC4ag4IJ07uWhy532Eovad8cZmN5YFswj/1ggrdZCFDulGw9yhYd6QxPO4kFk4dt5Du7zBIjnRf2fMQh1getXlas4HKOSZGm0TMPhkrJh7ox6skliJVXesxdUEHasT7naC+I6EZ+aPU+YxWIFC2W2+cg4eaoJcAgj+7CpaUOfFoPqbHusP8HWYbDw4+TMgjNl4cdMVkcJw3r+iQQ2+CJdvH0O/QRtlzluzD8UFUob0YPYuyNjnztTASCuYjDK5r5xm1tYQcU2zQWlNgONNgV96G8ympxoCH7kh9kxrLewCSa58KLWjKQmr9IYecbC/eIdbc2S7CckRp+HoyGh46r1Ak7afeFlfpp9oA+eXI/DkmlhiJFjB8jB6OW+fDooxP93YZBHMVwpC7RvGErav0EtbQ0UAwETtjQho7XrzxvyUqq0wL11Kzp70xWs7XxYEGjhbFsuM6WriGVgtCcq4141sJ1NYDvQWd6Hugv+2Z+EPdgyRrsATxWtyZ2kekyCvSmVaavrm92Vb+Uz2/KCWux/3dpNFrWBixuqJr8GSbRSBQQJCgE6bHZOebFZ8R6wJOWrWzTy/3WxDfaqvsIUUTuFC4t9OMkXiatPy14xPLCQ8w1wGrsJVTITrbbVjdmARUyRugIEscrdKb/1KS/d0R0kLQx/wOKrEbcxIRjWgAAAYVpQ0NQSUNDIHByb2ZpbGUAAHicfZE9SMNQFIVPU6VFKgp2KKKQoTpZEBVx1CoUoUKoFVp1MHnpHzRpSFpcHAXXgoM/i1UHF2ddHVwFQfAHxM3NSdFFSrwvKbSI8cLjfZx3z+G9+wChUWaa1TUOaHrVTCXiYia7KgZe4UMQ/YhgWGaWMSdJSXjW1z11U93FeJZ335/Vq+YsBvhE4llmmFXiDeLpzarBeZ84zIqySnxOPGbSBYkfua64/Ma54LDAM8NmOjVPHCYWCx2sdDArmhrxFHFU1XTKFzIuq5y3OGvlGmvdk78wlNNXlrlOawgJLGIJEkQoqKGEMqqI0a6TYiFF53EP/6Djl8ilkKsERo4FVKBBdvzgf/B7tlZ+csJNCsWB7hfb/hgBArtAs27b38e23TwB/M/Ald72VxrAzCfp9bYWPQL6toGL67am7AGXO0DkyZBN2ZH8tIR8Hng/o2/KAgO3QM+aO7fWOU4fgDTNKnkDHBwCowXKXvd4d7Bzbv/2tOb3Ay9kcozy2C0nAAAABmJLR0QA/wD/AP+gvaeTAAAACXBIWXMAAAsTAAALEwEAmpwYAAAAB3RJTUUH5QEIEBoNoDME2AAAAKBJREFUKM9jZDAw+89ABGAiRtHC2iriFAa4OhNWmBvoz8DHy0NYYYinG2E3GispMtiZmhBWGOvrTZyvA9xcCCuMdnJgkJeSJKwwzMudcIBLCQow+Dk7EVaYGuBLXBQGubkRVuhubMCgp6FGWGGUlxdxqSfA1ZmwQlgCIKgQlgDwKkROAHgVIicAvAqREwBOhegJAKdC9ASAFUg5uv0nBgAAnCk9i8O3r7cAAAAASUVORK5CYII=", "price": "$21.99", "product_name": "Gaming Headset with Microphone for PC PS4 PS5 Headset Noise Cancelling Gaming Headphones for Laptop Mac Switch Xbox One Headset with LED Lights Deep Bass for Kids Adults Black Blue", "rating": "4", "reviews": "19,883", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDU3NDUwNDg5ODYwMjo6Ojo&url=%2Fdp%2FB0878W5F4X%2Fref%3Dsspa_dk_detail_5%3Fpsc%3D1%26pd_rd_i%3DB0878W5F4X%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWMhttps://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDU3NDUwNDg5ODYwMjo6Ojo&url=%2Fdp%2FB0878W5F4X%2Fref%3Dsspa_dk_detail_5%3Fpsc%3D1%26pd_rd_i%3DB0878W5F4X%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWM"}, {"asin": "B0B4B2HW2N", "image_url": "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAoAAAAYCAYAAADDLGwtAAAJT3pUWHRSYXcgcHJvZmlsZSB0eXBlIGV4aWYAAHjarZhrkuM4DoT/8xR7BIIvkMfhM2JusMffD5Sruh5dMz0RW26bMi1RIDKRCbXb//3ruP/wF0rwLmWtpZXi+UsttdA5qP75a/dTfLqfz19/jfJ53snbRYGpyBifr/q6QDrz+dcFb6fL+Dzv6uuXUF8LyfvC9y/ane14fQyS+fDMS3ot1PZzUFrVj6GO10LzdeIN5fVO72E9g313nyaULK3MjWIIO0r09zM9EUR7S+yMz2cNdmTHIRZ3p9prMRLyaXtvo/cfE/QpyeWVS/c1++9HX5If+ms+fslleVuo/P4HyV/m4/ttwscbx9eRY/rTDyzUvm3n9T5n1XP2s7ueChktL0bdZMvbMpw4SHm8lxVeyjtzrPfVeFUIOYF8+ekHrylNAqgcJ0mWdDmy7zhlEmIKOyhjCBOgbK5GDS3Mi1iyl5ygscUVK5jNsF2MTIf3WOTet937TVi//BJODcJiwiU/vtzf/fhvXu6caSkSX588wQviCsZrwjDk7JOzAETOC7d8E/z2esHvP/AHqoJgvmmubLD78SwxsvziVrw4R87LjE8JidP1WoAUce9MMNA+iS8SsxTxGoKKkMcKQJ3IQ0xhgIDkHBZBhhRjCU4DJcO9uUblnhsyQmXTaBNA5Fiigk2LHbBSyvBHU4VDPceccs4la64ut9xLLKnkUooWE7muUZNmLapatWmvsaaaa6laa221t9AiGphbadpqa6334Do36qzVOb8zM8KII408ytBRRxt9Qp+ZZp5l6qyzzb7CiguZWGXpqqutvsVtlGKnnXfZuutuux+4duJJJ59y9NTTTn9HTV5l+/X1L1CTF2rhImXn6TtqzDrVtyXE5CQbZiAWkoC4GgIQOhhmvkpKwZAzzHwLFEUOBJkNG7fEEAPCtCXkI+/Y/ULuj3Bzuf4RbuGfkHMG3f8DOQd033H7DWrLtHlexJ4qtJz6SPWdQCKq62H0eshG5Vs3f/t57Gs3LbPp6MMSYntNGuduMpzkTb1Tair7TFwwJ9ZeWc+W3HZdh+iinsO99Ui0sdYef/1scUV1dnCeM/i9bZI+9iTrLWdJWQwiG1M2V/1hzL25LeS8s1Le9fje8LY0ds1JkNGQp+9z9NUP4XDLWTfJUkwglXWjTCWsoMO7HUePu7Ybcsqb6Lb2Nez7OmmxhaRnhnD0zsVV7nb26cVG3/cotU8XuCxP8l2Jw/K6a5zEcZPsfxojduHLE2Pe+ZzutsWcQu4ntUXadirsiXHsbZ7gZ1Zpx8OlvfYZMy2NkmfSsXxuAz5QLG05eNMOy5S0ICFrFFkoX8hplAlRoV9XHWoWc7RPNpRjh7hHEwHcCEeI3U0D69lTj7P/vCfW4pZhHgmTnOAVJwfLXu+57O3aTZ3Qvpwdz5wrlFj7oEbCqfFQsjO1HdagibC9AskRSNjik/oQT2E/xw1dEnYJg0JMhYrsPVbWAlI5PWsrii3bRQ2P3Fj/mPICnrreO+c61+7oURa2lZ89dE+b8Id7PXkcnx/kenblhlgWA3gRQcg4FDCtmOqQ0U/VBUH4ypdUqIZA8v2NsXRoKL22PV1ZaOE2nla5RDMDgro6BtSsiMk6uQhV1Utuq+VNfsrxe8KCRrpV4mSTrjeStWafnDVQzVdhhfCHW0Q7Ib8UN/YNEp4/5bsIp/7aXWvbquSggxLHJCida27fpp4ONTchFjbQDDWEYme9udLdBtQYq9FbAFPzcMCapJYR8qmYIfvEtY/WtfqQNijVEVpyYr5wyirzWN2YGgOsUUlNRFpb4U0tdKL7dgjQwHxVBjN6RmcHeMHoYbYgflovnYvuuUQPYeaYgCbV00MYZJ7S3Mm/EuFvNVZaieVqfORgHkMpge8RiG2tSUbz6du4Hl6QF5rPffjHsXYMpLZHh5NF5254tJDy6OFK2r4pYumlYjJzUVYnYo4xFfROmqJ8DRvg7YaP+4mYJvH0ynjKgLDD8n5Qz76OBzhqZWmngcE5m7AwfhswUJXRdAU3rOxG7GegjHNTuPs8FChQGMs8ycDGqsZLxGtb82vMOt2PQGiSXojKGNR15jCH3xs3wgN3iKOhTmktjJmc1YNCwqOJrShKmfetoWPPOygXhRnLqQl9W7VrHQE1pmlGOYB08xsOpmR/r+ggJTe92jLJidje4qMMWuixB09d69mTL/v7lt525D5viWi15jFJ0uMRyyofvz5zLEtjNjuvM7LNYVWKGBidD40WndvCyXyjKUAUClz2p7VaCKsBJrWQY6BPwHQGvobbCEmJbEV3kIGTsbPtkNtBzVNGKPypLIIeJDxldxQFNtKR0HnRxoy0CpoNqYTGC4XxgJwxDgwHg9RBRP6jfHxtH36Sk0MXtYUkoN9hOVi019UweH9CeRy30GddeaG7AdN6JS9moxnknGXtpZPgFZOaaiXkyBbiJCQQB8P0SkYyFt6WApdSZ5hqjduaJGSeZgxhb7Oi85pN/esLKPdFDX5TdrGJkC4ag4IJ07uWhy532Eovad8cZmN5YFswj/1ggrdZCFDulGw9yhYd6QxPO4kFk4dt5Du7zBIjnRf2fMQh1getXlas4HKOSZGm0TMPhkrJh7ox6skliJVXesxdUEHasT7naC+I6EZ+aPU+YxWIFC2W2+cg4eaoJcAgj+7CpaUOfFoPqbHusP8HWYbDw4+TMgjNl4cdMVkcJw3r+iQQ2+CJdvH0O/QRtlzluzD8UFUob0YPYuyNjnztTASCuYjDK5r5xm1tYQcU2zQWlNgONNgV96G8ympxoCH7kh9kxrLewCSa58KLWjKQmr9IYecbC/eIdbc2S7CckRp+HoyGh46r1Ak7afeFlfpp9oA+eXI/DkmlhiJFjB8jB6OW+fDooxP93YZBHMVwpC7RvGErav0EtbQ0UAwETtjQho7XrzxvyUqq0wL11Kzp70xWs7XxYEGjhbFsuM6WriGVgtCcq4141sJ1NYDvQWd6Hugv+2Z+EPdgyRrsATxWtyZ2kekyCvSmVaavrm92Vb+Uz2/KCWux/3dpNFrWBixuqJr8GSbRSBQQJCgE6bHZOebFZ8R6wJOWrWzTy/3WxDfaqvsIUUTuFC4t9OMkXiatPy14xPLCQ8w1wGrsJVTITrbbVjdmARUyRugIEscrdKb/1KS/d0R0kLQx/wOKrEbcxIRjWgAAAYVpQ0NQSUNDIHByb2ZpbGUAAHicfZE9SMNQFIVPU6VFKgp2KKKQoTpZEBVx1CoUoUKoFVp1MHnpHzRpSFpcHAXXgoM/i1UHF2ddHVwFQfAHxM3NSdFFSrwvKbSI8cLjfZx3z+G9+wChUWaa1TUOaHrVTCXiYia7KgZe4UMQ/YhgWGaWMSdJSXjW1z11U93FeJZ335/Vq+YsBvhE4llmmFXiDeLpzarBeZ84zIqySnxOPGbSBYkfua64/Ma54LDAM8NmOjVPHCYWCx2sdDArmhrxFHFU1XTKFzIuq5y3OGvlGmvdk78wlNNXlrlOawgJLGIJEkQoqKGEMqqI0a6TYiFF53EP/6Djl8ilkKsERo4FVKBBdvzgf/B7tlZ+csJNCsWB7hfb/hgBArtAs27b38e23TwB/M/Ald72VxrAzCfp9bYWPQL6toGL67am7AGXO0DkyZBN2ZH8tIR8Hng/o2/KAgO3QM+aO7fWOU4fgDTNKnkDHBwCowXKXvd4d7Bzbv/2tOb3Ay9kcozy2C0nAAAABmJLR0QA/wD/AP+gvaeTAAAACXBIWXMAAAsTAAALEwEAmpwYAAAAB3RJTUUH5QEIEBoNoDME2AAAAKBJREFUKM9jZDAw+89ABGAiRtHC2iriFAa4OhNWmBvoz8DHy0NYYYinG2E3GispMtiZmhBWGOvrTZyvA9xcCCuMdnJgkJeSJKwwzMudcIBLCQow+Dk7EVaYGuBLXBQGubkRVuhubMCgp6FGWGGUlxdxqSfA1ZmwQlgCIKgQlgDwKkROAHgVIicAvAqREwBOhegJAKdC9ASAFUg5uv0nBgAAnCk9i8O3r7cAAAAASUVORK5CYII=", "price": "$33.99", "product_name": "2.4GHz Wireless Gaming Headset for PS5, PS4 Fortnite & Call of Duty/FPS Gamers, PC,...", "rating": "4", "reviews": "9,011", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDE0NjQ2NjYwMzcwMjo6Ojo&url=%2Fdp%2FB0B4B2HW2N%2Fref%3Dsspa_dk_detail_6%3Fpsc%3D1%26pd_rd_i%3DB0B4B2HW2N%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWMhttps://www.amazon.com/sspa/click?ie=UTF8&spc=MToyOTU5MjgxMzIyMjQ5NzUzOjE3Mzk5MDYyOTY6c3BfZGV0YWlsX3RoZW1hdGljOjMwMDE0NjQ2NjYwMzcwMjo6Ojo&url=%2Fdp%2FB0B4B2HW2N%2Fref%3Dsspa_dk_detail_6%3Fpsc%3D1%26pd_rd_i%3DB0B4B2HW2N%26pd_rd_w%3DCImLL%26content-id%3Damzn1.sym.386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_p%3D386c274b-4bfe-4421-9052-a1a56db557ab%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWxfdGhlbWF0aWM"}, {"asin": "B0B4B2HW2N", "image_url": "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAoAAAAYCAYAAADDLGwtAAAJT3pUWHRSYXcgcHJvZmlsZSB0eXBlIGV4aWYAAHjarZhrkuM4DoT/8xR7BIIvkMfhM2JusMffD5Sruh5dMz0RW26bMi1RIDKRCbXb//3ruP/wF0rwLmWtpZXi+UsttdA5qP75a/dTfLqfz19/jfJ53snbRYGpyBifr/q6QDrz+dcFb6fL+Dzv6uuXUF8LyfvC9y/ane14fQyS+fDMS3ot1PZzUFrVj6GO10LzdeIN5fVO72E9g313nyaULK3MjWIIO0r09zM9EUR7S+yMz2cNdmTHIRZ3p9prMRLyaXtvo/cfE/QpyeWVS/c1++9HX5If+ms+fslleVuo/P4HyV/m4/ttwscbx9eRY/rTDyzUvm3n9T5n1XP2s7ueChktL0bdZMvbMpw4SHm8lxVeyjtzrPfVeFUIOYF8+ekHrylNAqgcJ0mWdDmy7zhlEmIKOyhjCBOgbK5GDS3Mi1iyl5ygscUVK5jNsF2MTIf3WOTet937TVi//BJODcJiwiU/vtzf/fhvXu6caSkSX588wQviCsZrwjDk7JOzAETOC7d8E/z2esHvP/AHqoJgvmmubLD78SwxsvziVrw4R87LjE8JidP1WoAUce9MMNA+iS8SsxTxGoKKkMcKQJ3IQ0xhgIDkHBZBhhRjCU4DJcO9uUblnhsyQmXTaBNA5Fiigk2LHbBSyvBHU4VDPceccs4la64ut9xLLKnkUooWE7muUZNmLapatWmvsaaaa6laa221t9AiGphbadpqa6334Do36qzVOb8zM8KII408ytBRRxt9Qp+ZZp5l6qyzzb7CiguZWGXpqqutvsVtlGKnnXfZuutuux+4duJJJ59y9NTTTn9HTV5l+/X1L1CTF2rhImXn6TtqzDrVtyXE5CQbZiAWkoC4GgIQOhhmvkpKwZAzzHwLFEUOBJkNG7fEEAPCtCXkI+/Y/ULuj3Bzuf4RbuGfkHMG3f8DOQd033H7DWrLtHlexJ4qtJz6SPWdQCKq62H0eshG5Vs3f/t57Gs3LbPp6MMSYntNGuduMpzkTb1Tair7TFwwJ9ZeWc+W3HZdh+iinsO99Ui0sdYef/1scUV1dnCeM/i9bZI+9iTrLWdJWQwiG1M2V/1hzL25LeS8s1Le9fje8LY0ds1JkNGQp+9z9NUP4XDLWTfJUkwglXWjTCWsoMO7HUePu7Ybcsqb6Lb2Nez7OmmxhaRnhnD0zsVV7nb26cVG3/cotU8XuCxP8l2Jw/K6a5zEcZPsfxojduHLE2Pe+ZzutsWcQu4ntUXadirsiXHsbZ7gZ1Zpx8OlvfYZMy2NkmfSsXxuAz5QLG05eNMOy5S0ICFrFFkoX8hplAlRoV9XHWoWc7RPNpRjh7hHEwHcCEeI3U0D69lTj7P/vCfW4pZhHgmTnOAVJwfLXu+57O3aTZ3Qvpwdz5wrlFj7oEbCqfFQsjO1HdagibC9AskRSNjik/oQT2E/xw1dEnYJg0JMhYrsPVbWAlI5PWsrii3bRQ2P3Fj/mPICnrreO+c61+7oURa2lZ89dE+b8Id7PXkcnx/kenblhlgWA3gRQcg4FDCtmOqQ0U/VBUH4ypdUqIZA8v2NsXRoKL22PV1ZaOE2nla5RDMDgro6BtSsiMk6uQhV1Utuq+VNfsrxe8KCRrpV4mSTrjeStWafnDVQzVdhhfCHW0Q7Ib8UN/YNEp4/5bsIp/7aXWvbquSggxLHJCida27fpp4ONTchFjbQDDWEYme9udLdBtQYq9FbAFPzcMCapJYR8qmYIfvEtY/WtfqQNijVEVpyYr5wyirzWN2YGgOsUUlNRFpb4U0tdKL7dgjQwHxVBjN6RmcHeMHoYbYgflovnYvuuUQPYeaYgCbV00MYZJ7S3Mm/EuFvNVZaieVqfORgHkMpge8RiG2tSUbz6du4Hl6QF5rPffjHsXYMpLZHh5NF5254tJDy6OFK2r4pYumlYjJzUVYnYo4xFfROmqJ8DRvg7YaP+4mYJvH0ynjKgLDD8n5Qz76OBzhqZWmngcE5m7AwfhswUJXRdAU3rOxG7GegjHNTuPs8FChQGMs8ycDGqsZLxGtb82vMOt2PQGiSXojKGNR15jCH3xs3wgN3iKOhTmktjJmc1YNCwqOJrShKmfetoWPPOygXhRnLqQl9W7VrHQE1pmlGOYB08xsOpmR/r+ggJTe92jLJidje4qMMWuixB09d69mTL/v7lt525D5viWi15jFJ0uMRyyofvz5zLEtjNjuvM7LNYVWKGBidD40WndvCyXyjKUAUClz2p7VaCKsBJrWQY6BPwHQGvobbCEmJbEV3kIGTsbPtkNtBzVNGKPypLIIeJDxldxQFNtKR0HnRxoy0CpoNqYTGC4XxgJwxDgwHg9RBRP6jfHxtH36Sk0MXtYUkoN9hOVi019UweH9CeRy30GddeaG7AdN6JS9moxnknGXtpZPgFZOaaiXkyBbiJCQQB8P0SkYyFt6WApdSZ5hqjduaJGSeZgxhb7Oi85pN/esLKPdFDX5TdrGJkC4ag4IJ07uWhy532Eovad8cZmN5YFswj/1ggrdZCFDulGw9yhYd6QxPO4kFk4dt5Du7zBIjnRf2fMQh1getXlas4HKOSZGm0TMPhkrJh7ox6skliJVXesxdUEHasT7naC+I6EZ+aPU+YxWIFC2W2+cg4eaoJcAgj+7CpaUOfFoPqbHusP8HWYbDw4+TMgjNl4cdMVkcJw3r+iQQ2+CJdvH0O/QRtlzluzD8UFUob0YPYuyNjnztTASCuYjDK5r5xm1tYQcU2zQWlNgONNgV96G8ympxoCH7kh9kxrLewCSa58KLWjKQmr9IYecbC/eIdbc2S7CckRp+HoyGh46r1Ak7afeFlfpp9oA+eXI/DkmlhiJFjB8jB6OW+fDooxP93YZBHMVwpC7RvGErav0EtbQ0UAwETtjQho7XrzxvyUqq0wL11Kzp70xWs7XxYEGjhbFsuM6WriGVgtCcq4141sJ1NYDvQWd6Hugv+2Z+EPdgyRrsATxWtyZ2kekyCvSmVaavrm92Vb+Uz2/KCWux/3dpNFrWBixuqJr8GSbRSBQQJCgE6bHZOebFZ8R6wJOWrWzTy/3WxDfaqvsIUUTuFC4t9OMkXiatPy14xPLCQ8w1wGrsJVTITrbbVjdmARUyRugIEscrdKb/1KS/d0R0kLQx/wOKrEbcxIRjWgAAAYVpQ0NQSUNDIHByb2ZpbGUAAHicfZE9SMNQFIVPU6VFKgp2KKKQoTpZEBVx1CoUoUKoFVp1MHnpHzRpSFpcHAXXgoM/i1UHF2ddHVwFQfAHxM3NSdFFSrwvKbSI8cLjfZx3z+G9+wChUWaa1TUOaHrVTCXiYia7KgZe4UMQ/YhgWGaWMSdJSXjW1z11U93FeJZ335/Vq+YsBvhE4llmmFXiDeLpzarBeZ84zIqySnxOPGbSBYkfua64/Ma54LDAM8NmOjVPHCYWCx2sdDArmhrxFHFU1XTKFzIuq5y3OGvlGmvdk78wlNNXlrlOawgJLGIJEkQoqKGEMqqI0a6TYiFF53EP/6Djl8ilkKsERo4FVKBBdvzgf/B7tlZ+csJNCsWB7hfb/hgBArtAs27b38e23TwB/M/Ald72VxrAzCfp9bYWPQL6toGL67am7AGXO0DkyZBN2ZH8tIR8Hng/o2/KAgO3QM+aO7fWOU4fgDTNKnkDHBwCowXKXvd4d7Bzbv/2tOb3Ay9kcozy2C0nAAAABmJLR0QA/wD/AP+gvaeTAAAACXBIWXMAAAsTAAALEwEAmpwYAAAAB3RJTUUH5QEIEBoNoDME2AAAAKBJREFUKM9jZDAw+89ABGAiRtHC2iriFAa4OhNWmBvoz8DHy0NYYYinG2E3GispMtiZmhBWGOvrTZyvA9xcCCuMdnJgkJeSJKwwzMudcIBLCQow+Dk7EVaYGuBLXBQGubkRVuhubMCgp6FGWGGUlxdxqSfA1ZmwQlgCIKgQlgDwKkROAHgVIicAvAqREwBOhegJAKdC9ASAFUg5uv0nBgAAnCk9i8O3r7cAAAAASUVORK5CYII=", "price": "$33.99", "product_name": "2.4GHz Wireless Gaming Headset for PS5, PS4 Fortnite & Call of Duty/FPS Gamers, PC,...", "rating": "4", "reviews": "9,011", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDAxNDY0NjY2MDM3MDI6Ojo6&url=%2Fdp%2FB0B4B2HW2N%2Fref%3Dsspa_dk_detail_0%3Fpsc%3D1%26pd_rd_i%3DB0B4B2HW2N%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwyhttps://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDAxNDY0NjY2MDM3MDI6Ojo6&url=%2Fdp%2FB0B4B2HW2N%2Fref%3Dsspa_dk_detail_0%3Fpsc%3D1%26pd_rd_i%3DB0B4B2HW2N%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwy"}, {"asin": "B0CLLJC6QC", "image_url": "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAoAAAAYCAYAAADDLGwtAAAJT3pUWHRSYXcgcHJvZmlsZSB0eXBlIGV4aWYAAHjarZhrkuM4DoT/8xR7BIIvkMfhM2JusMffD5Sruh5dMz0RW26bMi1RIDKRCbXb//3ruP/wF0rwLmWtpZXi+UsttdA5qP75a/dTfLqfz19/jfJ53snbRYGpyBifr/q6QDrz+dcFb6fL+Dzv6uuXUF8LyfvC9y/ane14fQyS+fDMS3ot1PZzUFrVj6GO10LzdeIN5fVO72E9g313nyaULK3MjWIIO0r09zM9EUR7S+yMz2cNdmTHIRZ3p9prMRLyaXtvo/cfE/QpyeWVS/c1++9HX5If+ms+fslleVuo/P4HyV/m4/ttwscbx9eRY/rTDyzUvm3n9T5n1XP2s7ueChktL0bdZMvbMpw4SHm8lxVeyjtzrPfVeFUIOYF8+ekHrylNAqgcJ0mWdDmy7zhlEmIKOyhjCBOgbK5GDS3Mi1iyl5ygscUVK5jNsF2MTIf3WOTet937TVi//BJODcJiwiU/vtzf/fhvXu6caSkSX588wQviCsZrwjDk7JOzAETOC7d8E/z2esHvP/AHqoJgvmmubLD78SwxsvziVrw4R87LjE8JidP1WoAUce9MMNA+iS8SsxTxGoKKkMcKQJ3IQ0xhgIDkHBZBhhRjCU4DJcO9uUblnhsyQmXTaBNA5Fiigk2LHbBSyvBHU4VDPceccs4la64ut9xLLKnkUooWE7muUZNmLapatWmvsaaaa6laa221t9AiGphbadpqa6334Do36qzVOb8zM8KII408ytBRRxt9Qp+ZZp5l6qyzzb7CiguZWGXpqqutvsVtlGKnnXfZuutuux+4duJJJ59y9NTTTn9HTV5l+/X1L1CTF2rhImXn6TtqzDrVtyXE5CQbZiAWkoC4GgIQOhhmvkpKwZAzzHwLFEUOBJkNG7fEEAPCtCXkI+/Y/ULuj3Bzuf4RbuGfkHMG3f8DOQd033H7DWrLtHlexJ4qtJz6SPWdQCKq62H0eshG5Vs3f/t57Gs3LbPp6MMSYntNGuduMpzkTb1Tair7TFwwJ9ZeWc+W3HZdh+iinsO99Ui0sdYef/1scUV1dnCeM/i9bZI+9iTrLWdJWQwiG1M2V/1hzL25LeS8s1Le9fje8LY0ds1JkNGQp+9z9NUP4XDLWTfJUkwglXWjTCWsoMO7HUePu7Ybcsqb6Lb2Nez7OmmxhaRnhnD0zsVV7nb26cVG3/cotU8XuCxP8l2Jw/K6a5zEcZPsfxojduHLE2Pe+ZzutsWcQu4ntUXadirsiXHsbZ7gZ1Zpx8OlvfYZMy2NkmfSsXxuAz5QLG05eNMOy5S0ICFrFFkoX8hplAlRoV9XHWoWc7RPNpRjh7hHEwHcCEeI3U0D69lTj7P/vCfW4pZhHgmTnOAVJwfLXu+57O3aTZ3Qvpwdz5wrlFj7oEbCqfFQsjO1HdagibC9AskRSNjik/oQT2E/xw1dEnYJg0JMhYrsPVbWAlI5PWsrii3bRQ2P3Fj/mPICnrreO+c61+7oURa2lZ89dE+b8Id7PXkcnx/kenblhlgWA3gRQcg4FDCtmOqQ0U/VBUH4ypdUqIZA8v2NsXRoKL22PV1ZaOE2nla5RDMDgro6BtSsiMk6uQhV1Utuq+VNfsrxe8KCRrpV4mSTrjeStWafnDVQzVdhhfCHW0Q7Ib8UN/YNEp4/5bsIp/7aXWvbquSggxLHJCida27fpp4ONTchFjbQDDWEYme9udLdBtQYq9FbAFPzcMCapJYR8qmYIfvEtY/WtfqQNijVEVpyYr5wyirzWN2YGgOsUUlNRFpb4U0tdKL7dgjQwHxVBjN6RmcHeMHoYbYgflovnYvuuUQPYeaYgCbV00MYZJ7S3Mm/EuFvNVZaieVqfORgHkMpge8RiG2tSUbz6du4Hl6QF5rPffjHsXYMpLZHh5NF5254tJDy6OFK2r4pYumlYjJzUVYnYo4xFfROmqJ8DRvg7YaP+4mYJvH0ynjKgLDD8n5Qz76OBzhqZWmngcE5m7AwfhswUJXRdAU3rOxG7GegjHNTuPs8FChQGMs8ycDGqsZLxGtb82vMOt2PQGiSXojKGNR15jCH3xs3wgN3iKOhTmktjJmc1YNCwqOJrShKmfetoWPPOygXhRnLqQl9W7VrHQE1pmlGOYB08xsOpmR/r+ggJTe92jLJidje4qMMWuixB09d69mTL/v7lt525D5viWi15jFJ0uMRyyofvz5zLEtjNjuvM7LNYVWKGBidD40WndvCyXyjKUAUClz2p7VaCKsBJrWQY6BPwHQGvobbCEmJbEV3kIGTsbPtkNtBzVNGKPypLIIeJDxldxQFNtKR0HnRxoy0CpoNqYTGC4XxgJwxDgwHg9RBRP6jfHxtH36Sk0MXtYUkoN9hOVi019UweH9CeRy30GddeaG7AdN6JS9moxnknGXtpZPgFZOaaiXkyBbiJCQQB8P0SkYyFt6WApdSZ5hqjduaJGSeZgxhb7Oi85pN/esLKPdFDX5TdrGJkC4ag4IJ07uWhy532Eovad8cZmN5YFswj/1ggrdZCFDulGw9yhYd6QxPO4kFk4dt5Du7zBIjnRf2fMQh1getXlas4HKOSZGm0TMPhkrJh7ox6skliJVXesxdUEHasT7naC+I6EZ+aPU+YxWIFC2W2+cg4eaoJcAgj+7CpaUOfFoPqbHusP8HWYbDw4+TMgjNl4cdMVkcJw3r+iQQ2+CJdvH0O/QRtlzluzD8UFUob0YPYuyNjnztTASCuYjDK5r5xm1tYQcU2zQWlNgONNgV96G8ympxoCH7kh9kxrLewCSa58KLWjKQmr9IYecbC/eIdbc2S7CckRp+HoyGh46r1Ak7afeFlfpp9oA+eXI/DkmlhiJFjB8jB6OW+fDooxP93YZBHMVwpC7RvGErav0EtbQ0UAwETtjQho7XrzxvyUqq0wL11Kzp70xWs7XxYEGjhbFsuM6WriGVgtCcq4141sJ1NYDvQWd6Hugv+2Z+EPdgyRrsATxWtyZ2kekyCvSmVaavrm92Vb+Uz2/KCWux/3dpNFrWBixuqJr8GSbRSBQQJCgE6bHZOebFZ8R6wJOWrWzTy/3WxDfaqvsIUUTuFC4t9OMkXiatPy14xPLCQ8w1wGrsJVTITrbbVjdmARUyRugIEscrdKb/1KS/d0R0kLQx/wOKrEbcxIRjWgAAAYVpQ0NQSUNDIHByb2ZpbGUAAHicfZE9SMNQFIVPU6VFKgp2KKKQoTpZEBVx1CoUoUKoFVp1MHnpHzRpSFpcHAXXgoM/i1UHF2ddHVwFQfAHxM3NSdFFSrwvKbSI8cLjfZx3z+G9+wChUWaa1TUOaHrVTCXiYia7KgZe4UMQ/YhgWGaWMSdJSXjW1z11U93FeJZ335/Vq+YsBvhE4llmmFXiDeLpzarBeZ84zIqySnxOPGbSBYkfua64/Ma54LDAM8NmOjVPHCYWCx2sdDArmhrxFHFU1XTKFzIuq5y3OGvlGmvdk78wlNNXlrlOawgJLGIJEkQoqKGEMqqI0a6TYiFF53EP/6Djl8ilkKsERo4FVKBBdvzgf/B7tlZ+csJNCsWB7hfb/hgBArtAs27b38e23TwB/M/Ald72VxrAzCfp9bYWPQL6toGL67am7AGXO0DkyZBN2ZH8tIR8Hng/o2/KAgO3QM+aO7fWOU4fgDTNKnkDHBwCowXKXvd4d7Bzbv/2tOb3Ay9kcozy2C0nAAAABmJLR0QA/wD/AP+gvaeTAAAACXBIWXMAAAsTAAALEwEAmpwYAAAAB3RJTUUH5QEIEBoNoDME2AAAAKBJREFUKM9jZDAw+89ABGAiRtHC2iriFAa4OhNWmBvoz8DHy0NYYYinG2E3GispMtiZmhBWGOvrTZyvA9xcCCuMdnJgkJeSJKwwzMudcIBLCQow+Dk7EVaYGuBLXBQGubkRVuhubMCgp6FGWGGUlxdxqSfA1ZmwQlgCIKgQlgDwKkROAHgVIicAvAqREwBOhegJAKdC9ASAFUg5uv0nBgAAnCk9i8O3r7cAAAAASUVORK5CYII=", "price": "$39.99", "product_name": "Wireless Gaming Headset, 7.1 Surround Sound, 2.4Ghz USB Gaming Headphones with Blue...", "rating": "4", "reviews": "895", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDAyMDI2MDcxNTU0MDI6Ojo6&url=%2Fdp%2FB0CLLJC6QC%2Fref%3Dsspa_dk_detail_1%3Fpsc%3D1%26pd_rd_i%3DB0CLLJC6QC%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwyhttps://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDAyMDI2MDcxNTU0MDI6Ojo6&url=%2Fdp%2FB0CLLJC6QC%2Fref%3Dsspa_dk_detail_1%3Fpsc%3D1%26pd_rd_i%3DB0CLLJC6QC%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwy"}, {"asin": "B09JFZC7D6", "image_url": "https://m.media-amazon.com/images/I/41kn-1bwKKL._AC_UF480,480_SR480,480_.jpg", "price": "$29.99", "product_name": "Bluetooth Cat Ear Headphones for Kids, Wireless & Wired Mode Foldable Headset with ...", "rating": "4", "reviews": "604", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDA2NjYxMDQ4MTk3MDI6Ojo6&url=%2Fdp%2FB09JFZC7D6%2Fref%3Dsspa_dk_detail_2%3Fpsc%3D1%26pd_rd_i%3DB09JFZC7D6%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwy"}, {"asin": "B0D3HV5M3Y", "image_url": "https://m.media-amazon.com/images/I/41MUVUXwcyL._AC_UF480,480_SR480,480_.jpg", "price": "$29.99", "product_name": "Wireless Gaming Headset for PC, PS4, PS5, Mac,Switch,2.4GHz USB Gaming Headset with...", "rating": "4", "reviews": "359", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDA2Njg1NTkyOTQ3MDI6Ojo6&url=%2Fdp%2FB0D3HV5M3Y%2Fref%3Dsspa_dk_detail_3%3Fpsc%3D1%26pd_rd_i%3DB0D3HV5M3Y%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwy"}, {"asin": "B0DJW2X8BX", "image_url": "https://m.media-amazon.com/images/I/41ofZctOgqL._AC_UF480,480_SR480,480_.jpg", "price": "$39.99", "product_name": "Wireless Gaming Headset for PS5, PS4, Elden Ring, PC, Mac, Switch, Bluetooth 5.3 Ga...", "rating": "5", "reviews": "534", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDA2NjEzMzE2MDUyMDI6Ojo6&url=%2Fdp%2FB0DJW2X8BX%2Fref%3Dsspa_dk_detail_4%3Fpsc%3D1%26pd_rd_i%3DB0DJW2X8BX%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwy"}, {"asin": "B0D69Z3QR4", "image_url": "https://m.media-amazon.com/images/I/41Rjxnr8aUL._AC_UF480,480_SR480,480_.jpghttps://m.media-amazon.com/images/I/216-OX9rBaL.png", "price": "$32.99", "product_name": "3D Stereo Wireless Gaming Headset for PC, PS4, PS5, Mac, Switch, Mobile | Bluetooth...", "rating": "4", "reviews": "400", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDA2NDc5NTcyNDY1MDI6Ojo6&url=%2Fdp%2FB0D69Z3QR4%2Fref%3Dsspa_dk_detail_5%3Fpsc%3D1%26pd_rd_i%3DB0D69Z3QR4%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwy"}, {"asin": "B0CNVHKVVL", "image_url": "https://m.media-amazon.com/images/I/41+mB9ThR+L._AC_UF480,480_SR480,480_.jpghttps://m.media-amazon.com/images/I/216-OX9rBaL.png", "price": "$35.99", "product_name": "2.4GHz Wireless Gaming Headset with Wired Mode, 5.4 Bluetooth Gaming Headphones for...", "rating": "4", "reviews": "494", "url": "https://www.amazon.com/sspa/click?ie=UTF8&spc=MTozNTk0MDk5MjAyMTI5MDgyOjE3Mzk5MDYyOTY6c3BfZGV0YWlsMjozMDA2NjQ2NDQxODA0MDI6Ojo6&url=%2Fdp%2FB0CNVHKVVL%2Fref%3Dsspa_dk_detail_6%3Fpsc%3D1%26pd_rd_i%3DB0CNVHKVVL%26pd_rd_w%3D55FTJ%26content-id%3Damzn1.sym.953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_p%3D953c7d66-4120-4d22-a777-f19dbfa69309%26pf_rd_r%3D3HYQN9N1DWJ06DEX71N8%26pd_rd_wg%3DoBtem%26pd_rd_r%3D6a03dfba-02b7-4e7d-b756-9adaa7f5ce50%26s%3Delectronics%26sp_csd%3Dd2lkZ2V0TmFtZT1zcF9kZXRhaWwy"}] | [{"five_star": "77%", "four_star": "8%", "one_star": "7%", "three_star": "5%", "two_star": "3%"}] |