Web scraping is an automated process of extracting vast amounts of online data for market research, competitor monitoring, and pricing strategies. When scraping, you must carefully extract the data without harming the website’s function.

This article delves into effective web scraping guidelines, the dos and don’ts that demand your attention. Adhering to these guidelines ensures you handle the data and scraping process with care, leading to compliance and the maximization of benefits.

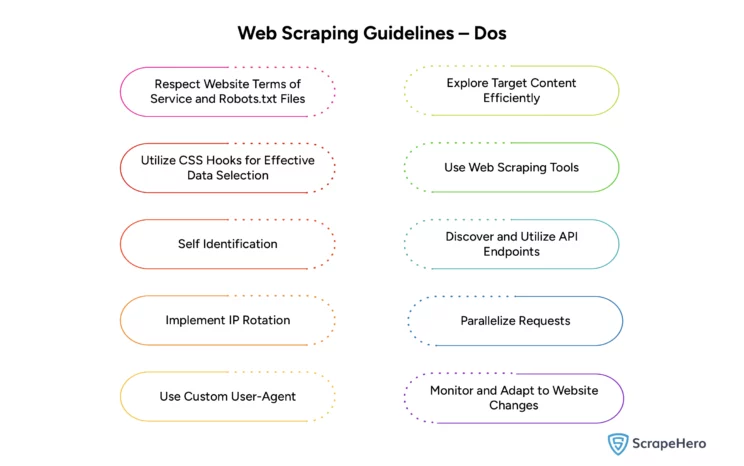

Web Scraping Guidelines – Dos

You must implement specific practices to ensure effective web scraping. Awareness of the issues during scraping will ensure you scrape websites by establishing a code of conduct.

1. Respect Website Terms of Service and Robots.txt Files

One of the best practices for web scraping that you should never fail to follow is respecting the target website’s Terms of Service and robots.txt files. These documents contain permissible actions for extracting data. The robots.txt file particularly mentions the website’s accessible parts to bots and rate limits for requests.

2. Utilize CSS Hooks for Effective Data Selection

CSS selectors are required to identify specific HTML elements for data extraction. They allow precise targeting and enhance the efficiency and accuracy of web scraping. Using CSS selectors reduces the possibility of errors in data extraction and ensures that only relevant data is gathered.

3. Self Identification

Self identification is one of the best practices for implementing web scraping. It is essential to identify the web crawler by including contact information in its header. This way, you can ensure transparency, and the website administrators can contact you if there are any issues with the crawler, which reduces the risk of being blocked.

4. Implement IP Rotation

It is essential to use IP rotation strategies to avoid detection and blocking. Most websites prevent scraping by blocking IPs that send too many requests. By rotating IPs through proxy services, the scraper mimics actual users and avoids blocks. Here’s a simple example using proxies in Python:

import requests

import random

urls = ["http://example.com"] # replace with your target URLs

proxy_list = ["54.37.160.88:1080", "18.222.22.12:3128"] # replace with your proxies

for url in urls:

proxy = random.choice(proxy_list)

proxies = {"http": f"http://{proxy}", "https": f"http://{proxy}"}

response = requests.get(url, proxies=proxies)

print(response.text)

5. Use Custom User-Agent

When web scraping, alter or rotate the User-Agent headers. This web scraping practice helps you avoid detection by web servers. Generally, websites block scrapers that contain generic or suspicious User-Agent strings. Using different User-Agent strings disguises the scraping activities as regular web traffic.

6. Explore Target Content Efficiently

One of the best practices for web scraping is to examine the website’s source code before scraping. Check whether there are JSON-structured data or hidden HTML inputs, which are easier and more stable data extraction points. Also, they are less prone to changes than other elements.

7. Use Web Scraping Tools

Employing web scraping tools such as ScrapeHero Crawlers from ScrapeHero Cloud can ensure you follow all the guidelines. Such tools automate and streamline the scraping process, handling large volumes of data more efficiently and accurately. You can use any tool that complies with the website’s scraping policies.

8. Discover and Utilize API Endpoints

APIs are more stable, efficient, and rule-compliant methods of accessing data. Official API endpoints or ScrapeHero APIs can be used instead of direct web scraping, which will also help avoid hitting rate limits imposed on web scraping.

9. Parallelize Requests

Instead of sending requests sequentially, it is always better to send multiple requests simultaneously to increase scraping efficiency. Parallelizing requests is one of the best web scraping techniques that can maximize the throughput of the scraping operations, especially when handling more extensive data sets.

# Example Python code for parallel requests using threading

from concurrent.futures import ThreadPoolExecutor

import requests

urls = ["http://example.com/page1", "http://example.com/page2"] # your target URLs

def fetch(url):

return requests.get(url).text

with ThreadPoolExecutor(max_workers=10) as executor:

results = list(executor.map(fetch, urls))

print(results)10. Monitor and Adapt to Website Changes

Websites may often change their structure, Terms of Service, or robots.txt policies. It is vital to monitor the changes in these target websites regularly. To prevent websites from blocking the scraping operations and ensure continued access to the data, you must adapt to these changes promptly.

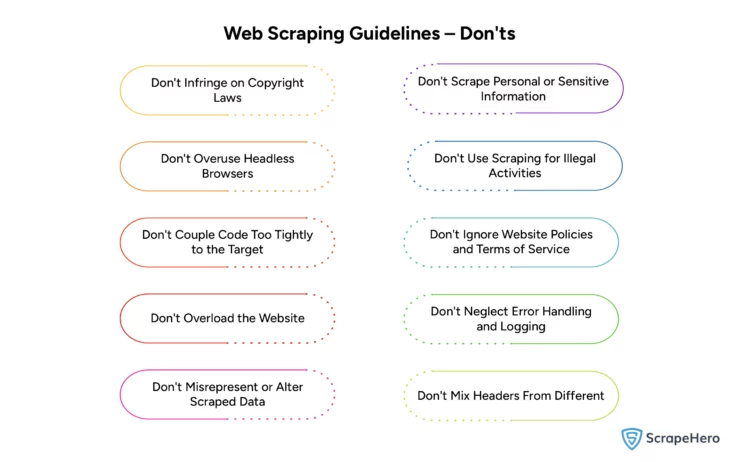

Web Scraping Guidelines – Don’ts

Web scraping has its challenges to overcome. There are certain don’ts that you must keep in mind while web scraping which, if followed, will ensure ethical and legal compliance.

1. Don’t Infringe on Copyright Laws

One vital web scraping guideline you should implement is ensuring that you are not violating copyright laws. This means that you only scrape data that is either publicly available or explicitly licensed for reuse. In most cases, factual data like names is not subject to copyright, but their presentation or original expression, like videos, is.

2. Don’t Overuse Headless Browsers

It would help if you used headless browsers like Selenium and Puppeteer for web scraping JavaScript-heavy sites. But try to use them only for some tasks. Since these tools consume significant resources, they can slow down web scraping. Access the data through more straightforward HTTP requests.

3. Don’t Couple Code Too Tightly to the Target

One of the best web scraping practices may be to avoid writing scraping code that is highly specific to the structure of a particular website. When the website changes, the code written should also be updated. When writing code, keep the basic crawling code separate from the parts that are specific to each website, like CSS selectors.

4. Don’t Overload the Website

When scraping, consider the website’s bandwidth and server load. Do not overload the site and degrade service for other users, as this may lead to IP blocking. To avoid this, limit the rate of requests, respect the site’s `robots.txt` directives, and only scrape during off-peak hours.

5. Don’t Misrepresent or Alter Scraped Data

The accuracy and integrity of the data extracted are crucial as these may be used for analysis or application. When the data is altered or misinterpreted, it can mislead the users, damaging our credibility and leading to legal consequences to maintain the accuracy of the data collected and frequently update the database.

6. Don’t Scrape Personal or Sensitive Information

Scraping sensitive or personal information without consent is unethical and illegal. Therefore, ensure that the extracted data is appropriately used and complies with privacy laws. Avoid scraping sensitive data such as social security numbers, personal addresses, or confidential business information unless explicitly permitted.

7. Don’t Use Scraping for Illegal Activities

Scraping should only be used for legitimate and ethical purposes. Never use the data extracted from websites through scraping for unlawful activities, which may result in severe legal penalties and damage your reputation. Rather than one of the web scraping tips, this should be considered a strict rule to follow.

8. Don’t Ignore Website Policies and Terms of Service

Before scraping, review and comply with the target website’s terms of service and privacy policies. Ignoring these policies can lead to legal issues and even bans from the website. Using the website’s API is usually safer and falls under ethical web scraping. Ensure that your scraping activities are always aligned with the website’s guidelines.

9. Don’t Neglect Error Handling and Logging

In web scraping, error handling and detailed logging are critical. This practice helps to identify and resolve issues during scraping and to understand the behavior of the web scraping setup over time. You must ensure that the scripts are robust against common issues such as network errors and unexpected data formats.

10. Don’t Mix Headers from Different Browsers

When configuring requests, make sure that the headers used match the characteristics of the browser you are pretending to be. If there are any mismatches in headers, anti-scraping technologies will be triggered. So, to avoid detection, maintain a library of accurate header sets corresponding to each user agent you use.

Here’s how you can maintain consistency by managing headers in web scraping :

# Example of managing headers consistently within a scraping session

header_sets = [

{"Accept-Encoding": "gzip, deflate, br", "Cache-Control": "no-cache", "User-Agent": "Mozilla/5.0 (iPhone ...)"},

{"Accept-Encoding": "gzip, deflate, br", "Cache-Control": "no-cache", "User-Agent": "Mozilla/5.0 (Windows ...)"}

]

# More header sets can be added as per the User-Agent profiles being used

urls = ["http://example.com"] # Target URLs

proxies = {"http": "http://yourproxy:port"} # Define your proxies here

for url in urls:

headers = random.choice(header_sets) # Select a consistent header set

response = requests.get(url, headers=headers, proxies=proxies)

print(response.text)

Web Scraping Guidelines-Dos and Don’ts-Summary

|

Dos of Web Scraping

|

Don’ts of Web Scraping

|

|

Respect Website Terms of Service and Robots.txt Files |

Don’t Infringe on Copyright Laws |

|

Utilize CSS Hooks for Effective Data Selection |

Don’t Overuse Headless Browsers |

|

Self Identification |

Don’t Couple Code Too Tightly to the Target |

|

Implement IP Rotation

|

Don’t Overload the Website |

|

Use Custom User-Agent |

Don’t Misrepresent or Alter Scraped Data

|

|

Explore Target Content Efficiently |

Don’t Scrape Personal or Sensitive Information |

|

Use Web Scraping Tool |

Don’t Use Scraping for Illegal Activities

|

|

Discover and Utilize API Endpoints |

Don’t Ignore Website Policies and Terms of Service |

|

Parallelize Requests

|

Don’t Neglect Error Handling and Logging |

|

Monitor and Adapt to Website Changes

|

Don’t Mix Headers From Different Browsers |

Wrapping Up

Following some web scraping best practices helps ensure that all the scraping activities are responsible, ethical, and legal. When it comes to web scraping on a large scale, it can become much more complicated. In such cases, you may require services from an industry leader, ScrapeHero.

Over the past decade, we have been able to provide data extraction services to a diverse client base that includes Global Financial Corporation, Big 4 Accounting Firms, and US Healthcare Companies.

ScrapeHero web scraping services can navigate the complexities of data extraction using advanced techniques. Without compromising on the legality and ethics of web scraping, we do it all for you on a massive scale.

Frequently Asked Questions

Yes, web scraping can harm a website if done irresponsibly. In most cases, it overloads the website’s server with too many requests, causing slowdowns or crashes.

Yes, websites can block web scraping. If websites detect bots, they can block the IP addresses of scrapers. They can also set rules in their robots.txt file restricting automated access.

You can use efficient data targeting techniques such as CSS selectors or APIs to manage the request rates, thus minimizing server load and avoiding IP bans.

If the scraping activity respects the website’s terms of service and does not harm its functionality for others, then it is considered ethical web scraping.

The legality of web scraping depends on the jurisdiction. However, it can be said to be illegal if it violates copyright laws, breaches a website’s Terms of Service, or involves accessing protected data without permission.

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data