Data extraction is the process of gathering data from different sources, cleaning it, and preparing it for analysis.

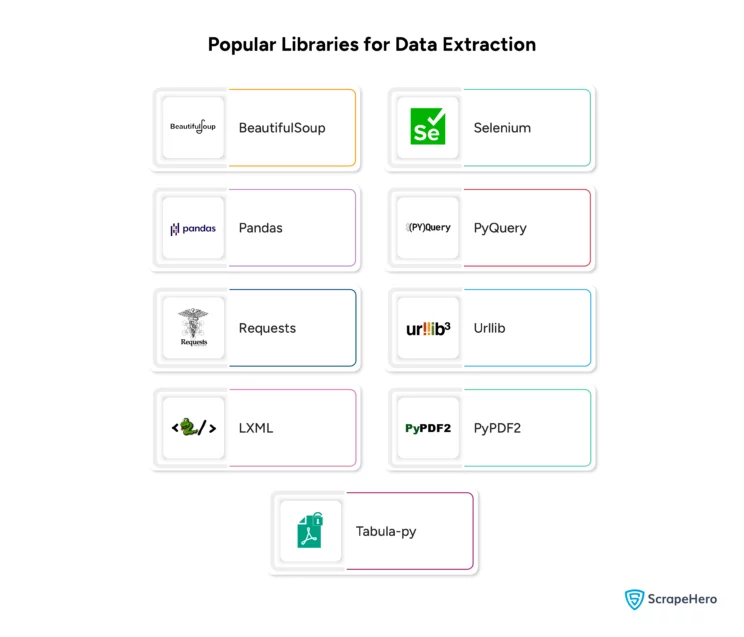

Python has a rich set of libraries designed specifically for data extraction. These libraries can be used individually or in combination, depending on the requirements of the task.

In this blog, let’s explore some popular Python libraries for data extraction, along with their features, pros, and cons.

Data Extraction vs. Web Crawling vs. Web Scraping

Before we begin, let’s understand the basic differences between web crawling, web scraping, and data extraction, terms that are often used interchangeably.

Data extraction is the process of retrieving specific data from various sources and storing it in a structured format for further analysis.

Web crawling is the process of systematically browsing websites to discover and index their pages; search engines use crawlers to keep their databases up to date.

Web scraping is an automated method of collecting data from websites: bots fetch web pages and extract the information on them into a structured format.

Web scraping is, hence, a combination of web crawling and data extraction.
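To make the distinction concrete, here is a minimal sketch that uses only Python’s standard library (`html.parser` and `urllib.parse`). The crawling half collects the links a crawler would visit next; the extraction half keeps the page’s headings. The page content and URLs are hypothetical, and a real scraper would also fetch each discovered URL.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageParser(HTMLParser):
    """Collects links (crawling) and <h1> text (extraction) from one HTML page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []      # URLs a crawler would queue next
        self.headings = []   # data an extractor would keep
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            href = dict(attrs).get('href')
            if href:
                # Resolve relative links against the page URL
                self.links.append(urljoin(self.base_url, href))
        elif tag == 'h1':
            self._in_h1 = True
            self.headings.append('')

    def handle_endtag(self, tag):
        if tag == 'h1':
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.headings[-1] += data


# Hypothetical page content standing in for a downloaded page
html = """
<h1>Product catalog</h1>
<a href="/page/2">Next page</a>
<a href="https://example.com/about">About</a>
"""

parser = PageParser('https://example.com/page/1')
parser.feed(html)
print(parser.links)     # crawling: where to go next
print(parser.headings)  # extraction: what to keep
```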

Need to know the tools used to extract data? Then, read our article on free and paid data extraction tools and software.

Note: ETL (extract, transform, load) tools and frameworks are used to clean and consolidate data from multiple sources, including databases, files, APIs, data warehouses and data lakes, external partner data, and website data.
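As a minimal sketch of the ETL idea using only the standard library: records are extracted from a CSV source and a JSON source, transformed into one consistent shape, and loaded into an in-memory SQLite table. The sources, field names, and table name are hypothetical.

```python
import csv
import io
import json
import sqlite3

# Extract: two hypothetical sources that use different field names
csv_source = io.StringIO("name,price\nWidget,9.99\nGadget,24.50\n")
json_source = '[{"title": "Gizmo", "cost": "5"}]'

rows = [{'name': r['name'], 'price': r['price']} for r in csv.DictReader(csv_source)]
rows += [{'name': r['title'], 'price': r['cost']} for r in json.loads(json_source)]

# Transform: normalize names and convert prices to floats
records = [(r['name'].strip().lower(), float(r['price'])) for r in rows]

# Load: write the consolidated records into a SQLite table
conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE products (name TEXT, price REAL)')
conn.executemany('INSERT INTO products VALUES (?, ?)', records)
conn.commit()

print(conn.execute('SELECT name, price FROM products ORDER BY price').fetchall())
```

Dedicated ETL tools add scheduling, monitoring, and connectors on top of this same extract, transform, load sequence.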

Best Data Extraction Libraries in Python

These are some of the popular Python data extraction libraries. They cover a wide range of needs, from web scraping to handling different file formats.

1. BeautifulSoup

BeautifulSoup is a versatile library for parsing HTML and XML documents. It builds a parse tree from the page source, which makes it a powerful tool for extracting data from HTML.

Features of BeautifulSoup

- It has a simple and intuitive API

- It supports various parsers, including html.parser, lxml, and html5lib

- It can navigate parse trees easily

Pros of BeautifulSoup

- It is easy to use and learn

- It has good documentation

Cons of BeautifulSoup

- It is slower than other libraries such as LXML

- It is limited to parsing HTML and XML

Data Extraction Using BeautifulSoup

Here is a simple Python script demonstrating data extraction using the BeautifulSoup library:

```python
# Import necessary libraries
import requests
from bs4 import BeautifulSoup

# Define the URL of the webpage to scrape
url = 'https://example.com'

# Send a GET request to the webpage (the timeout prevents hanging indefinitely)
response = requests.get(url, timeout=10)
response.raise_for_status()  # Stop if the server returned an error status

# Parse the webpage content with BeautifulSoup
soup = BeautifulSoup(response.content, 'html.parser')

# Extract specific data (e.g., all the headings)
headings = soup.find_all('h1')  # You can change 'h1' to other tags like 'h2', 'p', etc.

# Print the extracted headings
for heading in headings:
    print(heading.get_text(strip=True))
```
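Because the example above depends on a live page, here is an offline sketch that parses an inline HTML snippet instead. It shows two ways of navigating the parse tree: CSS selectors through `select()` and `select_one()`, and dictionary-style attribute access on tags. The markup and class names are hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical product listing standing in for a downloaded page
html = """
<ul id="products">
  <li class="product"><a href="/widget">Widget</a> <span class="price">$9.99</span></li>
  <li class="product"><a href="/gadget">Gadget</a> <span class="price">$24.50</span></li>
</ul>
"""

soup = BeautifulSoup(html, 'html.parser')

products = []
# select() takes a CSS selector and returns every matching tag
for item in soup.select('#products li.product'):
    link = item.find('a')                  # first <a> inside this <li>
    price = item.select_one('span.price')  # first matching <span>
    products.append({
        'name': link.get_text(strip=True),
        'url': link['href'],               # attribute access, like a dict
        'price': float(price.get_text(strip=True).lstrip('$')),
    })

print(products)
```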

You can also learn web scraping using BeautifulSoup from ScrapeHero articles.

2. Pandas

Pandas is a Python library primarily used for data analysis and manipulation. It is also commonly used to read data from file formats such as CSV, Excel, and JSON.

Features of Pandas

- It supports reading from and writing to CSV, Excel, SQL, JSON, and other formats

- It has powerful data structures like DataFrame and Series

- It offers an extensive range of data manipulation functions

Pros of Pandas

- It’s a versatile library

- It gives excellent performance for data manipulation

Cons of Pandas

- It can be memory-intensive with large datasets

- It has a steeper learning curve for beginners

Data Extraction Using Pandas

Here is a simple Python script demonstrating data extraction from a CSV file using the Pandas library:

```python
import pandas as pd

# Define the path to the CSV file
csv_file_path = 'path/to/your/file.csv'

# Read the CSV file into a DataFrame
df = pd.read_csv(csv_file_path)

# Display the first few rows of the DataFrame
print(df.head())
```
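Since Pandas can be memory-intensive with large datasets, one common approach is to read a CSV file in chunks with the `chunksize` parameter and aggregate as you go. The sketch below uses an in-memory CSV so it runs as-is; the column names are hypothetical, and in practice you would pass a file path instead.

```python
import io

import pandas as pd

# Hypothetical CSV data; replace io.StringIO(...) with a file path in practice
csv_data = io.StringIO(
    "region,sales\n"
    "north,100\n"
    "south,250\n"
    "north,50\n"
    "east,75\n"
    "south,25\n"
)

totals = pd.Series(dtype='float64')

# Read 2 rows at a time so only one chunk is held in memory
with pd.read_csv(csv_data, usecols=['region', 'sales'], chunksize=2) as reader:
    for chunk in reader:
        chunk_totals = chunk.groupby('region')['sales'].sum()
        # Add this chunk's totals to the running totals (missing regions count as 0)
        totals = totals.add(chunk_totals, fill_value=0)

print(totals.sort_index())
```

For real files, a chunk size in the tens of thousands of rows is a more typical starting point; the tiny value here just makes the chunking visible.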

3. Requests

Requests is a simple and elegant HTTP library for Python designed to make sending HTTP/1.1 requests easier.

Features of Requests

- It simplifies making HTTP requests

- It supports HTTP methods such as GET, POST, PUT, and DELETE

- It handles cookies and sessions

Pros of Requests

- It has a simple and elegant API

- It is widely used and well-documented

Cons of Requests

- It is limited to HTTP/1.1

- It does not support asynchronous requests

Data Extraction Using Requests

Here is a simple Python script that uses the Requests library to fetch JSON data from an API and then process it:

```python
import requests

# Define the URL of the API endpoint
api_url = 'https://api.example.com/data'

# Send a GET request to the API
response = requests.get(api_url, timeout=10)

# Check if the request was successful
if response.status_code == 200:
    # Parse the JSON data (assumed here to be a list of objects)
    data = response.json()

    # Extract specific information (e.g., names and ages)
    for item in data:
        name = item.get('name')
        age = item.get('age')
        print(f'Name: {name}, Age: {age}')
else:
    print(f'Failed to retrieve data. Status code: {response.status_code}')
```
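For repeated API calls, a `requests.Session` reuses connections and persists headers and cookies across requests, and an `HTTPAdapter` configured with urllib3’s `Retry` can retry transient failures. The sketch below sets up such a session; the retry settings, User-Agent string, and endpoint are assumptions to adapt. It only builds and inspects a request, so it runs without network access.

```python
import requests
from requests.adapters import HTTPAdapter
from urllib3.util.retry import Retry


def make_session(retries=3):
    """Create a Session that retries transient errors (settings are assumptions)."""
    session = requests.Session()
    # Headers set here are sent with every request made through the session
    session.headers.update({'User-Agent': 'my-scraper/1.0'})
    retry = Retry(
        total=retries,
        backoff_factor=0.5,  # wait longer (exponentially) between successive retries
        status_forcelist=[429, 500, 502, 503, 504],
    )
    adapter = HTTPAdapter(max_retries=retry)
    session.mount('https://', adapter)
    session.mount('http://', adapter)
    return session


session = make_session()

# Build (but do not send) a request to see how params and headers are applied
request = requests.Request('GET', 'https://api.example.com/data', params={'page': 2, 'limit': 50})
prepared = session.prepare_request(request)
print(prepared.url)
print(prepared.headers['User-Agent'])

# To actually send it: response = session.send(prepared, timeout=10)
```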

4. LXML

The LXML library provides a fast, convenient API for parsing XML and HTML.

Features of LXML

- It supports fast and efficient parsing

- It supports XPath and XSLT

- It can handle large files

Pros of LXML

- It is fast and efficient

- It has powerful features for XML/HTML processing

Cons of LXML

- It has a more complex API than other libraries

- It depends on the C libraries libxml2 and libxslt, which you may need to install separately if no prebuilt wheel is available for your platform

Data Extraction Using LXML

Here is a simple Python script that uses the LXML library to fetch a webpage and extract data from its HTML:

```python
from lxml import html
import requests

# Define the URL of the webpage to scrape
url = 'https://example.com'

# Send a GET request to the webpage
response = requests.get(url, timeout=10)

# Parse the webpage content with lxml
tree = html.fromstring(response.content)

# Extract specific data (e.g., the text of all the headings)
headings = tree.xpath('//h1/text()')  # You can change 'h1' to other tags like 'h2', 'p', etc.

# Print the extracted headings
for heading in headings:
    print(heading)
```
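For large XML files, lxml’s `etree.iterparse()` streams the document element by element instead of loading it all into memory. The sketch below reads an in-memory XML feed; the `<product>` structure is hypothetical, and in practice you would pass a file path. Clearing each element after use keeps memory usage flat.

```python
import io

from lxml import etree

# Hypothetical XML feed; pass a file path instead of BytesIO for real files
xml_data = io.BytesIO(b"""<catalog>
  <product><name>Widget</name><price>9.99</price></product>
  <product><name>Gadget</name><price>24.50</price></product>
</catalog>""")

products = []
# An event fires each time a closing </product> tag has been parsed
for _, elem in etree.iterparse(xml_data, events=('end',), tag='product'):
    # findtext() returns the text of the first matching child element
    products.append((elem.findtext('name'), float(elem.findtext('price'))))
    # Free memory: clear this element and drop already-processed siblings
    elem.clear()
    while elem.getprevious() is not None:
        del elem.getparent()[0]

print(products)
```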

5. Selenium

Selenium is a tool for automating web browsers. It can navigate web pages and extract data rendered by JavaScript.

Features of Selenium

- It controls web browsers programmatically

- It supports multiple browsers, such as Chrome, Firefox, and Safari

- It can handle dynamic content rendered by JavaScript

How do you scrape a dynamic website? Read our article on web scraping dynamic websites.

Pros of Selenium

- It can interact well with JavaScript-heavy websites

- It supports running in headless mode

Cons of Selenium

- It is slower than non-browser-based scrapers

- It requires a browser and a matching driver, although Selenium 4.6 and later can download the driver automatically via Selenium Manager

Data Extraction Using Selenium

Here is a simple Python script that uses the Selenium library to automate a web browser and extract data from a webpage:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options
from webdriver_manager.chrome import ChromeDriverManager  # pip install webdriver-manager

# Set up Chrome options
chrome_options = Options()
chrome_options.add_argument("--headless")  # Run in headless mode

# Set up the Chrome driver
service = Service(ChromeDriverManager().install())
driver = webdriver.Chrome(service=service, options=chrome_options)

try:
    # Define the URL of the webpage to scrape
    url = 'https://example.com'

    # Open the webpage
    driver.get(url)

    # Extract specific data (e.g., all the headings)
    headings = driver.find_elements(By.TAG_NAME, 'h1')  # You can change 'h1' to other tags like 'h2', 'p', etc.

    # Print the extracted headings
    for heading in headings:
        print(heading.text)
finally:
    # Close the browser, even if an error occurred
    driver.quit()
```

6. PyQuery

PyQuery is a jQuery-like library for parsing HTML documents that makes navigating, searching, and modifying the DOM easier.

Features of PyQuery

- It provides a jQuery-like API for Python

- It supports CSS selectors

- It is built on top of LXML

Pros of PyQuery

- It is familiar to jQuery users

- It is built on a fast parser (LXML)

Cons of PyQuery

- It is limited to parsing HTML and XML documents

- It has a smaller community and less extensive documentation

Data Extraction Using PyQuery

Here is a simple Python script that uses the PyQuery library to fetch and parse HTML content from a webpage:

```python
from pyquery import PyQuery as pq
import requests

# Define the URL of the webpage to scrape
url = 'https://example.com'

# Send a GET request to the webpage
response = requests.get(url, timeout=10)

# Parse the HTML content with PyQuery
doc = pq(response.text)

# Extract specific data (e.g., all the headings)
headings = doc('h1')  # You can change 'h1' to other tags like 'h2', 'p', etc.

# Print the extracted headings
for heading in headings.items():
    print(heading.text())
```

7. Urllib

Urllib is a package in Python’s standard library for working with URLs, such as opening and reading them.

Features of Urllib

- It is part of Python’s standard library

- It supports opening and reading URLs

- It can handle basic authentication and cookies

Pros of Urllib

- It requires no additional installation

- It is simple to use

Cons of Urllib

- It is less user-friendly than Requests

- It offers fewer high-level conveniences than Requests, such as built-in JSON decoding and connection pooling

Data Extraction Using Urllib

Here is a simple Python script that uses the urllib library to fetch HTML content from a webpage and then parses it with BeautifulSoup:

```python
import urllib.request
from bs4 import BeautifulSoup

# Define the URL of the webpage to scrape
url = 'https://example.com'

# Send a request to the webpage and read the HTML content;
# the with-block closes the connection afterwards
with urllib.request.urlopen(url, timeout=10) as response:
    html_content = response.read()

# Parse the HTML content with BeautifulSoup
soup = BeautifulSoup(html_content, 'html.parser')

# Extract specific data (e.g., all the headings)
headings = soup.find_all('h1')  # You can change 'h1' to other tags like 'h2', 'p', etc.

# Print the extracted headings
for heading in headings:
    print(heading.get_text(strip=True))
```
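urllib can also send query parameters and custom headers: `urllib.parse.urlencode()` builds the query string and `urllib.request.Request` carries the headers. The sketch below assumes a hypothetical JSON search endpoint and User-Agent string; it builds the request offline, and the `fetch_json()` helper (also hypothetical) shows how you would send it and decode the JSON body.

```python
import json
import urllib.error
import urllib.parse
import urllib.request


def build_request(base_url, params):
    """Return a GET Request with an encoded query string and a custom User-Agent."""
    query = urllib.parse.urlencode(params)  # spaces become '+', e.g. 'q=python+books'
    return urllib.request.Request(
        f'{base_url}?{query}',
        headers={'User-Agent': 'my-scraper/1.0'},  # hypothetical User-Agent
    )


def fetch_json(request, timeout=10):
    """Send the request and decode a JSON response body (needs network access)."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return json.loads(response.read().decode('utf-8'))
    except urllib.error.HTTPError as err:
        print(f'Failed to retrieve data. Status code: {err.code}')
        return None


req = build_request('https://api.example.com/search', {'q': 'python books', 'page': 2})
print(req.full_url)
```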

8. PyPDF2

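PyPDF2 is a library for reading PDF files and extracting text and data from them. Note that PyPDF2 is no longer maintained; development has moved back to the pypdf package, which keeps a compatible PdfReader interface.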

Features of PyPDF2

- It extracts text from PDF files

- It can split, merge, and rotate PDF pages

- It can access PDF metadata

Pros of PyPDF2

- It is a simple and lightweight library

Cons of PyPDF2

- It offers limited support for complex PDF structures

- It can become slow with large PDFs

Data Extraction Using PyPDF2

Here is a simple Python script that uses the PyPDF2 library to extract text from a PDF file:

```python
import PyPDF2

# Define the path to the PDF file
pdf_file_path = 'path/to/your/file.pdf'

# Open the PDF file in read-binary mode
with open(pdf_file_path, 'rb') as file:
    # Create a PDF reader object
    reader = PyPDF2.PdfReader(file)

    # Iterate through each page and extract text
    for page_num, page in enumerate(reader.pages, start=1):
        # Extract text from the page (may be empty for scanned, image-only pages)
        text = page.extract_text()

        # Print the extracted text
        print(f"Page {page_num}:\n{text}\n")
```

9. Tabula-py

Tabula-py is a simple Python wrapper for tabula-java that reads and extracts tables from PDF files.

Features of Tabula-py

- It can convert PDF tables into pandas DataFrames

- It has a simple API

- It works well with both local and remote PDFs

Pros of Tabula-py

- It is easy to use

- It is effective for tabular data extraction

Cons of Tabula-py

- It requires a Java runtime

- It is limited to extracting tables

Data Extraction Using Tabula-py

Here is a simple Python script that uses the Tabula-py library to extract tables from a PDF file.

First, install tabula-py using pip:

```bash
pip install tabula-py
```

Here is the Python code to extract data using Tabula-py:

```python
import tabula

# Define the path to the PDF file
pdf_file_path = 'path/to/your/file.pdf'

# Extract tables from the PDF file (returns a list of DataFrames)
tables = tabula.read_pdf(pdf_file_path, pages='all')

# Print the extracted tables
for i, table in enumerate(tables):
    print(f"Table {i + 1}:\n")
    print(table)
    print("\n")
```
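`tabula.read_pdf()` returns a list of pandas DataFrames, one per detected table, so the results can be cleaned and combined with ordinary Pandas code. The sketch below uses a hypothetical helper, `combine_tables`, that merges tables sharing the same columns (for example, one table split across pages) and tags each row with its source table. It runs on stand-in DataFrames, so it needs neither Java nor a PDF.

```python
import pandas as pd


def combine_tables(tables):
    """Concatenate tables whose columns match the first table's columns.

    Tables with a different layout are skipped; 'source_table' records
    which table (1-based) each row came from.
    """
    if not tables:
        return pd.DataFrame()
    columns = list(tables[0].columns)
    matching = [
        table.assign(source_table=i)
        for i, table in enumerate(tables, start=1)
        if list(table.columns) == columns
    ]
    return pd.concat(matching, ignore_index=True)


# Stand-ins for tabula.read_pdf(pdf_file_path, pages='all')
tables = [
    pd.DataFrame({'Item': ['Widget', 'Gadget'], 'Qty': [3, 5]}),
    pd.DataFrame({'Item': ['Gizmo'], 'Qty': [2]}),   # continuation on the next page
    pd.DataFrame({'Note': ['Totals exclude tax']}),  # unrelated table, skipped
]

combined = combine_tables(tables)
print(combined)
```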

For a better understanding, read our essential guide and checklist for data extraction services.

You can also read in detail about various data visualization libraries and data manipulation libraries used in Python in our articles.

Wrapping Up

Choose Python libraries for data extraction according to the type of extraction task.

Each library has unique strengths and weaknesses; choosing the right ones will save you time and effort.

Large-scale data extraction, however, comes with several challenges. In such cases, a reliable data partner like ScrapeHero can handle your data needs.

As a fully managed, enterprise-grade web scraping service provider, ScrapeHero offers custom solutions that deliver hassle-free data.

Frequently Asked Questions

How do you extract data from a database using Python?

To extract data from a database using Python, establish a connection using a database-specific library such as sqlite3 or psycopg2, then execute SQL queries through a cursor to retrieve and process the data.
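Here is a minimal sketch of those steps with the built-in sqlite3 module. It creates an in-memory database with a hypothetical `customers` table so it runs as-is. With psycopg2 the pattern is similar, but the connection arguments differ and queries use `%s` placeholders instead of `?`.

```python
import sqlite3

# Connect (':memory:' creates a temporary database; use a file path for a real one)
conn = sqlite3.connect(':memory:')
cursor = conn.cursor()

# Hypothetical sample data so the example is self-contained
cursor.execute('CREATE TABLE customers (name TEXT, city TEXT, orders INTEGER)')
cursor.executemany(
    'INSERT INTO customers VALUES (?, ?, ?)',
    [('Alice', 'Boston', 5), ('Bob', 'Denver', 2), ('Carol', 'Boston', 8)],
)
conn.commit()

# Execute a parameterized query through the cursor and fetch the results
cursor.execute(
    'SELECT name, orders FROM customers WHERE city = ? ORDER BY orders DESC',
    ('Boston',),
)
rows = cursor.fetchall()

for name, orders in rows:
    print(f'{name}: {orders} orders')

conn.close()
```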
