Amazon is a significant e-commerce leader, which makes it a critical source for collecting and analyzing vast amounts of data. However, scraping data from Amazon can be challenging.

Amazon’s anti-scraping techniques, implemented to protect its data, make scraping much more difficult. In this article, we discuss the various Amazon web scraping challenges you may have to face while trying to scrape its pages.

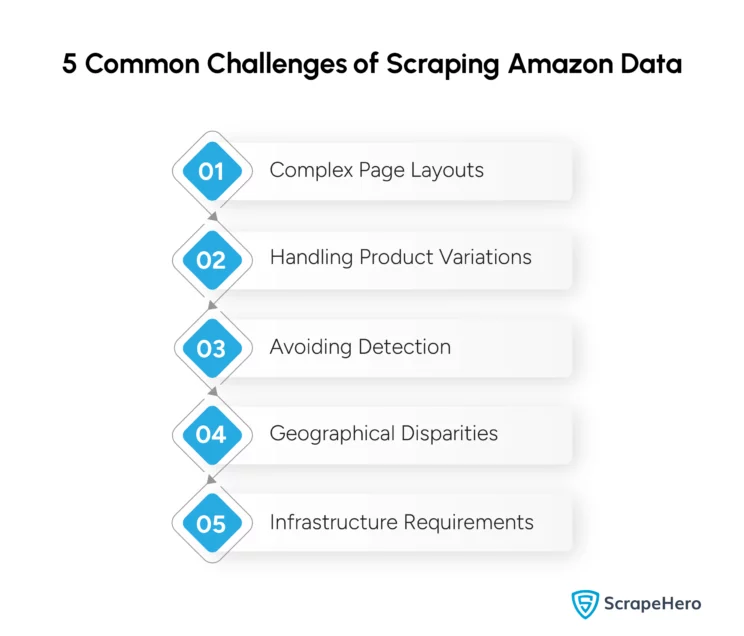

Common Challenges of Scraping Amazon

Scraping data from Amazon, including product details, pricing information, and customer reviews, can be a complex task. Here, we outline some of the common Amazon web scraping challenges you may encounter when scraping these types of data.

1. Complex Page Layouts

Amazon’s website is complex and dynamic, making it difficult to extract data. It uses a variety of product page templates, frequently updating them, which makes the process a bit more complicated. Since Amazon employs JavaScript for dynamic content loading, downloading HTML pages does not ensure complete data acquisition. This variability also applies to product layouts, attributes, and HTML tags.

Furthermore, Amazon’s templates may undergo several redesigns and vary by geographic market, which disrupts the web scrapers that rely on specific HTML or CSS selectors. These variations lead to numerous scraping errors and exceptions, and the scraper has to be designed to handle such changes.

2. Handling Product Variations

Amazon’s product pages feature variants of the same product, such as different sizes and colors. These variants are challenging for web scrapers as they are in diverse formats across the site.

It is difficult to scrape reviews and ratings from Amazon as the ratings and reviews aggregate across the product variants. Changes are also made to how best seller rank information is displayed. The format and count of best seller rank assignments also change frequently, further complicating web scraping data from Amazon.

3. Avoiding Detection

One of the major Amazon scraping challenges is the detection of bots. Amazon employs various methods, such as CAPTCHAs and IP blacklisting, for detecting and blocking scrapers. It detects bots when continuous or large numbers of page requests from the same IP address.

Amazon checks for valid User-Agent strings and either blocks or serves different content to non-standard User-Agents. It also checks for specific HTTP headers that browsers generally send with their requests. When Amazon identifies suspicious activity, it prompts a CAPTCHA verification to determine whether the user is a human or a bot. These CAPTCHAs can interrupt web scraping.

4. Geographical Disparities

Across various country-specific platforms, Amazon’s website features and product availability also vary, which complicates web scraping. For example, if Amazon is accessed from a foreign country, it adjusts its displays to show only items that can be shipped to the user’s location.

These geographical disparities, which are one of the major Amazon web scraping challenges, apply to product listings, search results, and product detail pages. Even though Amazon allows users to change their location during their first browsing session, coding this into a scraper is only sometimes feasible.

5. Infrastructure Requirements

A robust technical infrastructure is required for efficient large-scale web scraping from Amazon. Therefore, high-end cloud storage platforms with extensive memory resources and exceptional network capabilities are needed to handle and store data.

Further, more advanced machine learning algorithms and specialists to manage them are required for Amazon web scraping. Also, to streamline and facilitate data operations like querying, exporting, and deduplication databases should be used. Most small-scale enterprises cannot meet the infrastructure requirements needed to improve Amazon’s web scraping activities’ speed and reliability.

Practical Strategies to Prevent Getting Blocked While Scraping Amazon

To scrape a website without getting blocked, navigating the anti-scraping measures on websites is essential, and this applies to Amazon, too. Mentioned are some effective strategies that can help you prevent getting blocked while scraping Amazon.

-

Resolve CAPTCHA

You can use third-party tools to solve CAPTCHAs, which involve handling various CAPTCHAs, like captcha, reCAPTCHA v2, and image-based CAPTCHAs. Image CAPTCHAs might be difficult to solve as they involve a mix of letters, digits, or words, requiring more complex solutions.

-

Use IP Rotation

IP rotation can mitigate the challenges of scraping Amazon. You can either set up manual IP proxies or use residential IPs, which are more effective at mimicking user behavior. If Amazon’s security protocols identify the user as genuine, it is less likely to be blocked.

-

Additional Techniques

Implementing extra techniques can prevent your scraper from getting blocked while scraping Amazon.

- User-Agent Rotation: Using different user-agent strings helps prevent being identified based on browser signatures.

- Auto-Clear Cookies and Session Management: Clear cookies regularly and manage session data to help you identify as a normal user.

- Headless Browsers: When dealing with dynamic content, use tools like Puppeteer or Selenium to automate browsers and mimic human actions.

- Rate Limiting and Respect for Robots.txt: Introduce delays between requests and adhere to Amazon’s robots.txt guidelines, reducing the chances of getting blocked by the site.

-

Best Practices and Legal Compliance

You should follow responsible scraping practices and comply with the legality of web scraping.

- Utilize CAPTCHA Solving Services: Using CAPTCHA Solving Services is an effective solution, but this approach may raise ethical concerns.

- Legal Compliance: Always ensure that your scraping activities align with applicable laws and Amazon’s Terms of Service.

Wrapping Up

Amazon data scraping challenges occur due to the immense volume and complexity of the data. Moreover, scraping data from Amazon on a large scale requires specialized expertise. So for non-data-based enterprises, dealing with such large datasets without a proper team, like ScrapeHero, will be hectic.

In such situations, you can use ScrapeHero Cloud, which provides ready-made web scrapers and APIs for your data scraping needs. However, to handle millions of records, it is advisable to use ScrapeHero web scraping services, as we have a decade of experience in data extraction.

We can overcome the numerous obstacles in web scraping, ensuring high-quality service to our customers. We streamline your data acquisition process, allowing you to concentrate on your business and use the extracted data for competitive analysis, market trends, and customer insights.

Frequently Asked Questions

Amazon does not entirely ban web scraping, but it has taken measures to limit its scraping activities. Amazon’s Terms of Service does not allow automated data extraction without permission.

If there are unusual traffic patterns like high request rates from a single IP or repetitive access patterns, then Amazon identifies these as automated bots. As a detection mechanism, Amazon also uses CAPTCHAs and IP-blocking.

Yes. You can scrape Amazon reviews using Python or with ScrapeHero Amazon Review Scraper.

When you violate Amazon’s Terms of Service, you can face legal risks or account bans. Using data without proper authorization can also result in copyright infringement.

You can use officially sanctioned APIs by Amazon for practical and ethical web scraping. Respect rate limits and ensure compliance with Amazon’s Terms of Service. Managing session cookies and using rotating proxies can also minimize detection risks.

We can help with your data or automation needs

Turn the Internet into meaningful, structured and usable data