This article outlines a few methods to scrape Amazon product offers and third-party sellers. Scraping lets you export seller and offer data to Excel or other formats for easier access and use.

There are three methods to scrape the data:

- Scraping Amazon Product Offers and Sellers in Python or JavaScript

- Using the Amazon Product Offers and Third Party Sellers Scraper by ScrapeHero Cloud, a no-code tool

- Using Amazon Offer Listing API by ScrapeHero Cloud

Don’t want to code? ScrapeHero Cloud is exactly what you need.

With ScrapeHero Cloud, you can download data in just two clicks!

Amazon Product Offers and Sellers Scraper in Python/JavaScript

In this section, we will guide you on how to scrape Amazon product offers and sellers using either Python or JavaScript. We will utilize the browser automation framework called Playwright to emulate browser behavior in our code.

One of the key advantages of this approach is its ability to bypass common blocks often put in place to prevent scraping. However, familiarity with the Playwright API is necessary to use it effectively.

You could also use Python Requests, LXML, or Beautiful Soup to build an Amazon seller and product scraper without using a browser or a browser automation library. However, bypassing the anti-scraping mechanisms that way can be challenging and is beyond the scope of this article.
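As a rough illustration of the browserless route, the sketch below parses a static HTML snippet with Python's built-in html.parser, swapped in here for LXML or Beautiful Soup so that it runs without extra packages. The sample markup is invented for the example; only the aod-offer and aod-offer-heading ids are taken from the selectors used in the Playwright script in this article. Fetching the real page without being blocked is not covered.

```python
from html.parser import HTMLParser


class OfferHeadingParser(HTMLParser):
    """Collect the text inside every element whose id is 'aod-offer-heading'.

    Assumption: the markup has no unclosed void tags (such as <br>) inside
    the heading, because nesting is tracked with a simple depth counter.
    """

    def __init__(self):
        super().__init__()
        self.depth = 0        # greater than 0 while inside a heading element
        self.current = []     # text fragments of the heading being read
        self.headings = []    # cleaned heading texts, one per offer

    def handle_starttag(self, tag, attrs):
        if self.depth:
            self.depth += 1
        elif dict(attrs).get("id") == "aod-offer-heading":
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if self.depth:
            self.depth -= 1
            if self.depth == 0:
                # Collapse whitespace the same way clean_data does
                self.headings.append(" ".join("".join(self.current).split()))

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


# Hypothetical sample markup, not copied from a real Amazon page
SAMPLE_HTML = """
<div id="aod-offer"><div id="aod-offer-heading"><h5> New </h5></div></div>
<div id="aod-offer"><div id="aod-offer-heading"><h5>Used - <span>Very Good</span></h5></div></div>
"""

parser = OfferHeadingParser()
parser.feed(SAMPLE_HTML)
conditions = parser.headings
print(conditions)
```

With LXML or Beautiful Soup the same lookup would be a one-line selector query; the hard part of this route remains getting the HTML in the first place.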

Here are the steps to scrape Amazon product offers data using Playwright:

Step 1: Choose either Python or JavaScript as your programming language.

Step 2: Install Playwright for your preferred language:

Python

pip install playwright
# to download the necessary browsers
playwright install

JavaScript

npm install playwright@latest
# to download the necessary browsers, if they were not fetched during installation
npx playwright install

Step 3: Write your code to emulate browser behavior and extract the desired data from Amazon using the Playwright API. You can use the code provided below:

Python

import asyncio
import json

from playwright.async_api import async_playwright

url = "https://www.amazon.com/dp/B073JYC4XM/ref=olp-opf-redir?aod=1"


async def extract_data(page) -> list:
    """
    Parsing details from the offer listing page

    Args:
        page: webpage of the browser

    Returns:
        list: details of the product offers
    """
    # Initializing selectors and xpaths
    div_selector = "[id='aod-offer']"
    name_selector = "[id='aod-asin-title-text']"
    condition_selector = "[id='aod-offer-heading']"
    price_selector = "[class='a-offscreen']"
    seller_selector = "//a[@class='a-size-small a-link-normal' and @role='link']"
    delivery_selector = "//div[@id='aod-offer-shipsFrom']//span[contains(@class,'a-color-base')]"
    seller_rating_xpath = "//i[contains(@class,'a-star-mini')]"
    # This id is matched literally; "{iter}" is never substituted in this script
    rating_count_selector = "[id='seller-rating-count-{iter}']"

    # List to save the details of the offers
    amazon_offers_listings = []

    # Waiting for the page to finish loading
    await page.wait_for_selector(div_selector)
    name = await page.locator(name_selector).inner_text() if await page.locator(name_selector).count() else None
    name = clean_data(name)

    # Extracting the elements
    offer_cards = page.locator(div_selector)
    cards_count = await offer_cards.count()
    for index in range(cards_count):
        # Hovering the element to load the price
        inner_element = offer_cards.nth(index)
        await inner_element.hover()

        # Extracting necessary data
        condition = await inner_element.locator(condition_selector).inner_text() if await inner_element.locator(condition_selector).count() else None
        price = await inner_element.locator(price_selector).inner_text() if await inner_element.locator(price_selector).count() else None
        seller = await inner_element.locator(seller_selector).inner_text() if await inner_element.locator(seller_selector).count() else None
        delivery = await inner_element.locator(delivery_selector).inner_text() if await inner_element.locator(delivery_selector).count() else None
        rating_data = await inner_element.locator(rating_count_selector).inner_text() if await inner_element.locator(rating_count_selector).count() else None
        seller_rate_data = await inner_element.locator(seller_rating_xpath).get_attribute('class') if await inner_element.locator(seller_rating_xpath).count() else None

        # Removing extra spaces and unicode characters
        condition = clean_data(condition)
        price = clean_data(price)
        seller = clean_data(seller)
        delivery = clean_data(delivery)
        rating_data = clean_data(rating_data)
        seller_rate_data = clean_data(seller_rate_data)
        seller_rate = seller_percentage = rating_count = None

        # Extracting data from rating data
        if rating_data:
            data_list = rating_data.split()
            if 'positive' in data_list:
                ind = data_list.index('positive')
                seller_percentage = data_list[ind - 1] + ' positive'
            if 'ratings)' in data_list:
                ind = data_list.index('ratings)')
                # The token before 'ratings)' holds the count with a leading '('
                rating_count = data_list[ind - 1][1:] + ' ratings'

        # The star rating is encoded in a class name such as 'a-star-mini-4-5'
        if seller_rate_data:
            for data in seller_rate_data.split():
                if 'a-star-mini' in data:
                    seller_rate = data[12:].replace('-', '.')
                    break

        data_to_save = {
            "name": name,
            "condition": condition,
            "price": price,
            "seller": seller,
            "delivery": delivery,
            "seller_percentage": seller_percentage,
            "seller_rate": seller_rate,
            "rating_count": rating_count,
        }
        amazon_offers_listings.append(data_to_save)

    save_data(amazon_offers_listings, "Data.json")
    return amazon_offers_listings


async def run(playwright) -> None:
    # Initializing the browser and creating a new page.
    browser = await playwright.firefox.launch(headless=False)
    context = await browser.new_context()
    page = await context.new_page()
    await page.set_viewport_size({"width": 1920, "height": 1080})
    page.set_default_timeout(150000)

    # Navigating to the offer listing page
    await page.goto(url, wait_until="domcontentloaded")
    await extract_data(page)
    await context.close()
    await browser.close()


def clean_data(data: str) -> str:
    """
    Cleaning data by removing extra white spaces and Unicode characters

    Args:
        data (str): data to be cleaned

    Returns:
        str: cleaned string
    """
    if not data:
        return ""
    cleaned_data = " ".join(data.split()).strip()
    cleaned_data = cleaned_data.encode("ascii", "ignore").decode("ascii")
    return cleaned_data


def save_data(product_page_data: list, filename: str):
    """Converting a list of dictionaries to JSON format

    Args:
        product_page_data (list): details of each offer
        filename (str): name of the JSON file
    """
    with open(filename, "w") as outfile:
        json.dump(product_page_data, outfile, indent=4)


async def main() -> None:
    async with async_playwright() as playwright:
        await run(playwright)


if __name__ == "__main__":
    asyncio.run(main())

JavaScript

const { firefox } = require('playwright');
const fs = require('fs');

const url = "https://www.amazon.com/dp/B073JYC4XM/ref=olp-opf-redir?aod=1";

/**
 * Save data as list of dictionaries
 * as json file
 * @param {object} data
 */
function saveData(data) {
    let dataStr = JSON.stringify(data, null, 2)
    fs.writeFile("data.json", dataStr, 'utf8', function (err) {
        if (err) {
            console.log("An error occurred while writing JSON Object to File.");
            return console.log(err);
        }
        console.log("JSON file has been saved.");
    });
}

function cleanData(data) {
    if (!data) {
        return null;
    }
    // removing extra spaces and unicode characters
    let cleanedData = data.split(/\s+/).join(" ").trim();
    cleanedData = cleanedData.replace(/[^\x00-\x7F]/g, "");
    return cleanedData;
}

/**
 * The data extraction function used to extract
 * necessary data from the element.
 * @param {Locator} innerElement
 * @returns {Promise<object>} the extracted offer details
 */
async function extractData(innerElement) {
    // returns the inner text of the locator, or null if nothing matches
    async function getText(locator) {
        let count = await locator.count();
        if (count) {
            return await locator.innerText()
        }
        return null
    };

    // initializing xpath and selectors
    const conditionSelector = "[id='aod-offer-heading']";
    const priceSelector = "[class='a-offscreen']";
    const sellerSelector = "//a[@class='a-size-small a-link-normal' and @role='link']";
    const deliverySelector = "//div[@id='aod-offer-shipsFrom']//span[contains(@class,'a-color-base')]";
    const sellerRatingXpath = "//i[contains(@class,'a-star-mini')]";
    // this id is matched literally; "{iter}" is never substituted in this script
    const ratingCountSelector = "[id='seller-rating-count-{iter}']";

    let condition = await getText(innerElement.locator(conditionSelector));
    let price = await getText(innerElement.locator(priceSelector));
    let seller = await getText(innerElement.locator(sellerSelector));
    let delivery = await getText(innerElement.locator(deliverySelector));
    // the rating text carries the "positive" percentage
    let ratingData = await getText(innerElement.locator(ratingCountSelector));
    // the star rating is encoded in the class attribute of the icon
    const starIcon = innerElement.locator(sellerRatingXpath);
    let sellerRateData = (await starIcon.count()) ? await starIcon.getAttribute('class') : null;

    // cleaning data
    condition = cleanData(condition)
    price = cleanData(price)
    seller = cleanData(seller)
    delivery = cleanData(delivery)
    ratingData = cleanData(ratingData)
    sellerRateData = cleanData(sellerRateData)

    let sellerPercentage = null;
    let sellerRating = null;

    // Extracting data from rating data
    if (ratingData) {
        const dataList = ratingData.split(' ');
        const positiveIndex = dataList.indexOf('positive');
        if (positiveIndex !== -1) {
            sellerPercentage = dataList[positiveIndex - 1] + ' positive';
        }
    }
    if (sellerRateData) {
        const dataList = sellerRateData.split(' ');
        for (const data of dataList) {
            if (data.includes('a-star-mini')) {
                sellerRating = data;
                break;
            }
        }
        if (sellerRating) {
            sellerRating = sellerRating.slice(12).replace('-', '.');
        }
    }

    const extractedData = {
        'condition': condition,
        'price': price,
        'seller': seller,
        'delivery': delivery,
        'sellerRating': sellerRating,
        'sellerPercentage': sellerPercentage
    }
    return extractedData
}

/**
 * The main function initiates a browser object and handles the navigation.
 */
async function run() {
    // initializing browser and creating new page
    const browser = await firefox.launch({ headless: false });
    const context = await browser.newContext();
    const page = await context.newPage();
    await page.setViewportSize({ width: 1920, height: 1080 });
    page.setDefaultTimeout(120000);

    // initializing xpath and selectors
    const divSelector = "[id='aod-offer']";
    const nameSelector = "[id='aod-asin-title-text']";

    // Navigating to the offer listing page
    await page.goto(url, { waitUntil: 'domcontentloaded' });

    // Wait until the list of offers is loaded
    await page.waitForSelector(divSelector);

    // to store the extracted data
    let data = [];
    await page.waitForLoadState("load");
    await page.waitForTimeout(10);

    // Extract the product name if the element with the given selector exists
    let name = null;
    const nameElement = page.locator(nameSelector).first();
    if (await nameElement.count()) {
        name = await nameElement.innerText();
    }

    const offerCards = page.locator(divSelector);
    const offerCardsCount = await offerCards.count();

    // going through each listing element
    for (let index = 0; index < offerCardsCount; index++) {
        await page.waitForTimeout(2000);
        await page.waitForLoadState("load");
        // hovering the element to load the price
        const innerElement = offerCards.nth(index);
        await innerElement.hover();
        let dataToSave = await extractData(innerElement);
        dataToSave.name = name;
        data.push(dataToSave);
    };

    saveData(data);
    await context.close();
    await browser.close();
};

run();

This code shows how to scrape Amazon Product Offers and Sellers using the Playwright library in Python and JavaScript.

The corresponding scripts have two main functions, namely:

- run function: In the Python script, this function takes a Playwright instance as input and performs the scraping process. It launches a Firefox browser instance, opens the offer listing page for the product URL, and hands the page over for extraction. The extract_data function is then called to extract the offer details and store the data in a JSON file (Data.json in the Python script).

- extract_data function: This function takes a Playwright page object as input and collects the seller and offer details as a list of dictionaries. The details include each listing’s name, condition, price, seller’s name, delivery (ships-from) details, seller percentage, and seller rate.

Finally, the main function uses the async_playwright context manager to execute the run function. Running the script creates a JSON file containing the offer and seller details for the product.
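The rating fields are the least obvious part of the extraction, so here is a small standalone sketch of that step. It assumes the star rating arrives as a class name of the form a-star-mini-4-5 and that the rating text looks roughly like "(1,234 ratings) 95% positive over last 12 months"; both formats are inferred from the string handling in the script, and the helper names are made up for this example.

```python
import re
from typing import Optional, Tuple

STAR_PREFIX = "a-star-mini-"


def parse_star_class(class_attr: str) -> Optional[str]:
    """Turn a class string such as 'a-icon a-star-mini-4-5' into '4.5'."""
    for token in class_attr.split():
        if token.startswith(STAR_PREFIX):
            return token[len(STAR_PREFIX):].replace("-", ".")
    return None


def parse_rating_text(text: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (rating_count, positive_percentage) from the seller rating text.

    Either value is None when the assumed pattern is not present.
    """
    count = re.search(r"\(([\d,]+) ratings?\)", text)
    percent = re.search(r"(\d+)% positive", text)
    return (
        count.group(1) if count else None,
        percent.group(1) + "%" if percent else None,
    )


star = parse_star_class("a-icon a-icon-star-mini a-star-mini-4-5")
count, percent = parse_rating_text("(1,234 ratings) 95% positive over last 12 months")
print(star, count, percent)
```

Keeping this logic in pure functions makes it easy to check against sample strings without opening a browser.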

Step 4: Run your code and collect the scraped data from Amazon.
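If you want the results in a spreadsheet, the JSON output can be converted to CSV, which Excel opens directly. The following is a minimal sketch that assumes the file holds a list of flat dictionaries, as Data.json from the Python script does; the function name json_to_csv is just an illustrative choice.

```python
import csv
import json
from pathlib import Path


def json_to_csv(json_path: str, csv_path: str) -> int:
    """Convert a JSON list of flat dictionaries to CSV and return the row count."""
    rows = json.loads(Path(json_path).read_text(encoding="utf-8"))

    # Union of all keys in first-seen order, so no column is silently dropped
    fieldnames = list(dict.fromkeys(key for row in rows for key in row))

    with open(csv_path, "w", newline="", encoding="utf-8") as outfile:
        writer = csv.DictWriter(outfile, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)  # None values are written as empty cells
    return len(rows)


# Example call, once the scraper has produced its output file:
# json_to_csv("Data.json", "Data.csv")
```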

Using No-Code Amazon Product Offers and Sellers Scraper by ScrapeHero Cloud

The Amazon Product Offers and Seller Scraper by ScrapeHero Cloud is a convenient method for scraping seller details from Amazon. It provides an easy, no-code method for scraping data, making it accessible for individuals with limited technical skills.

This section will guide you through the steps to set up and use the Amazon Seller Scraper.

- Sign up or log in to your ScrapeHero Cloud account.

- Go to the Amazon Product Offers and Third Party Sellers Scraper by ScrapeHero Cloud in the marketplace.

- Add the scraper to your account. (Don’t forget to verify your email if you haven’t already.)

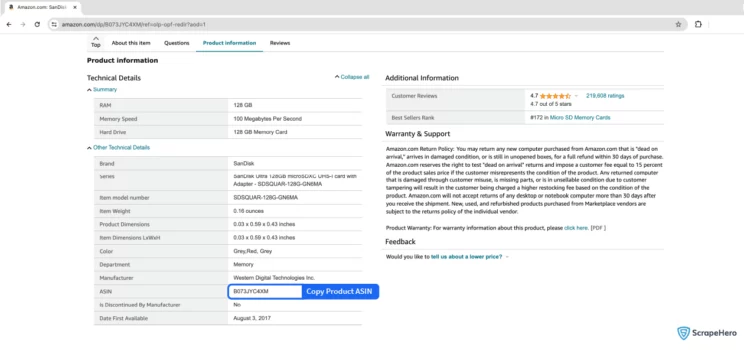

- Add the ASIN for a particular product to start the scraper. If it’s just a single query, enter it in the field provided and choose the number of pages to scrape.

- You can get the product ASIN from the product information section of a product listing page.

- To scrape results for multiple queries, switch to Advance Mode, and in the Input tab, add the ASINs to the SearchQuery field and save the settings.

- To start the scraper, click on the Gather Data button.

- The scraper will start fetching data for your queries, and you can track its progress under the Jobs tab.

- Once finished, you can view or download the data from the same tab.

- You can also export the seller data into an Excel spreadsheet from here. Click on Download Data, select “Excel,” and open the downloaded file using Microsoft Excel.
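If you already have a list of product URLs, the ASINs for the input field can often be pulled out of them programmatically. The sketch below assumes an ASIN is a 10-character uppercase alphanumeric code that follows /dp/ or /gp/product/ in the URL; the function names are illustrative, and offers_url simply mirrors the URL pattern used in the Playwright script earlier in this article.

```python
import re
from typing import Optional

# Assumed URL shapes: .../dp/<ASIN>... or .../gp/product/<ASIN>...
ASIN_IN_URL = re.compile(r"/(?:dp|gp/product)/([A-Z0-9]{10})(?:[/?]|$)")


def extract_asin(product_url: str) -> Optional[str]:
    """Return the ASIN found in a product URL, or None if no match is found."""
    match = ASIN_IN_URL.search(product_url)
    return match.group(1) if match else None


def offers_url(asin: str) -> str:
    """Build an offer listing URL in the same pattern as the script's url."""
    return f"https://www.amazon.com/dp/{asin}/ref=olp-opf-redir?aod=1"


urls = [
    "https://www.amazon.com/Some-Product/dp/B073JYC4XM?th=1",
    "https://www.amazon.com/gp/product/B073JYC4XM",
    "https://www.amazon.com/s?k=shoes",
]
asins = [extract_asin(u) for u in urls]
print(asins)
```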

Using Amazon Offer Listing API by ScrapeHero Cloud

The ScrapeHero Cloud Amazon Offer Listing API is an alternate tool for extracting seller data from Amazon. This user-friendly API enables those with minimal technical expertise to obtain product offer and seller data effortlessly from Amazon.

This section will walk you through the steps to configure and utilize the Amazon offer listing scraper API provided by ScrapeHero Cloud.

- Sign up or log in to your ScrapeHero Cloud account.

- Go to the Amazon offer listing scraper API by ScrapeHero Cloud in the marketplace.

- Click on the subscribe button.

Note: As this is a paid API, you must subscribe to one of the available plans to use the API.

- After subscribing to a plan, head over to the Documentation tab to get the necessary steps to integrate the API into your application.

Use cases of Amazon Seller Data

If you’re unsure as to why you should scrape Amazon Seller Data, here are a few use cases where this data would be helpful:

Brand Protection and Compliance Monitoring

Companies can scrape data to ensure that third-party sellers are compliant with brand guidelines and pricing policies. This is vital for maintaining brand integrity and avoiding market dilution or damage through unauthorized or misrepresented sales.
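As one possible way to run such a pricing check on the scraped offers, the sketch below flags offers priced under a minimum allowed price. It assumes each offer is a dictionary with "seller" and "price" keys, as in the scraper output, and that prices are US-dollar strings such as "$18.49"; the threshold and the sample offers are made up.

```python
from decimal import Decimal, InvalidOperation
from typing import Optional


def parse_price(price_text: str) -> Optional[Decimal]:
    """Convert a price string such as '$1,299.99' to a Decimal, or None if unparseable."""
    cleaned = price_text.replace("$", "").replace(",", "").strip()
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        return None
    return value if value.is_finite() else None


def offers_below_minimum(offers: list, minimum_price: Decimal) -> list:
    """Return the offers whose price parses and is lower than minimum_price."""
    flagged = []
    for offer in offers:
        price = parse_price(offer.get("price") or "")
        if price is not None and price < minimum_price:
            flagged.append(offer)
    return flagged


# Hypothetical sample data in the shape of the scraper output
sample_offers = [
    {"seller": "Seller A", "price": "$25.00"},
    {"seller": "Seller B", "price": "$18.49"},
    {"seller": "Seller C", "price": ""},
]
flagged = offers_below_minimum(sample_offers, Decimal("20.00"))
print([offer["seller"] for offer in flagged])
```

Offers with a missing or unparseable price are skipped rather than flagged, so they may deserve a separate manual look.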

Identifying Unauthorized Sellers

By monitoring third-party sellers, companies can identify unauthorized sellers who may be distributing counterfeit or grey-market versions of their products. This is crucial for protecting brand reputation and ensuring customer trust.
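A simple version of this check compares the scraped seller names against a list of authorized sellers. The sketch below assumes the scraper output format with a "seller" key and an allowlist you maintain yourself; matching is by case-insensitive name only, which is a simplification, and all names shown are invented.

```python
def find_unauthorized_sellers(offers: list, authorized_sellers: list) -> list:
    """Return a sorted list of seller names that are not on the allowlist."""
    authorized = {name.strip().casefold() for name in authorized_sellers}
    unauthorized = set()
    for offer in offers:
        seller = (offer.get("seller") or "").strip()
        # Offers without a seller name are ignored in this sketch
        if seller and seller.casefold() not in authorized:
            unauthorized.add(seller)
    return sorted(unauthorized)


# Hypothetical sample data
scraped_offers = [
    {"seller": "Official Brand Store"},
    {"seller": "Bargain Depot"},
    {"seller": "official brand store"},
    {"seller": ""},
]
unknown = find_unauthorized_sellers(scraped_offers, ["Official Brand Store"])
print(unknown)
```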

Warranty and Service Validity

Companies can track whether the products sold by third-party sellers are eligible for warranties and customer service. This ensures that only legitimate products are covered, protecting the company from fraudulent warranty claims.

Marketplace Dominance Analysis

By analyzing third-party seller data, companies can assess their market dominance and visibility on platforms like Amazon. This helps in strategizing to improve product placement and promotional efforts.

Geo-Specific Strategy Development

Scraping data can provide insights into how products are performing in different regions and under various sellers. This assists in developing tailored strategies for different geographic markets.

Frequently Asked Questions

What is the subscription fee for the Amazon Seller Scraper by ScrapeHero?

To know more about the pricing, visit the pricing page.

Is it legal to scrape Amazon seller data?

The legality of web scraping depends on the legal jurisdiction, i.e., laws specific to the country and the locality. Generally, web scraping is legal if you are scraping publicly available data.

Please refer to our Legal Page to learn more about the legality of web scraping.