Python HTML parsers play a vital role in web scraping. They help navigate and manipulate HTML code and convert it into a structured format.

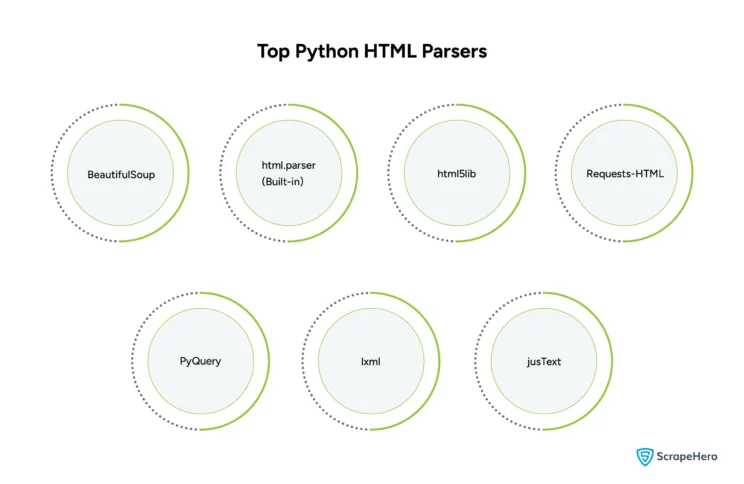

This article discusses in detail the top 7 Python HTML parsers that you could choose depending on the scope of your project.

What Is an HTML Parser?

An HTML parser is a markup processing tool that takes HTML input and breaks it down into individual elements.

These elements are then organized into a Document Object Model (DOM) tree representing the hierarchical layout of the HTML document.
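To make the idea of a tree concrete, here is a minimal sketch that uses only Python's standard library to turn a small HTML string into nested nodes and print the hierarchy. The `TreeBuilder` class, the `outline` helper, and the sample markup are illustrative names, not part of any library, and real parsers handle many more edge cases.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not stay open on the stack.
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class TreeBuilder(HTMLParser):
    """Builds a tiny DOM-like tree of nested dicts (illustrative only)."""

    def __init__(self):
        super().__init__()
        self.root = {"tag": "document", "children": [], "text": ""}
        self.stack = [self.root]  # the currently open elements

    def handle_starttag(self, tag, attrs):
        node = {"tag": tag, "children": [], "text": ""}
        self.stack[-1]["children"].append(node)
        if tag not in VOID_TAGS:
            self.stack.append(node)

    def handle_endtag(self, tag):
        # Close the innermost open element if it matches the end tag.
        if len(self.stack) > 1 and self.stack[-1]["tag"] == tag:
            self.stack.pop()

    def handle_data(self, data):
        self.stack[-1]["text"] += data.strip()


def outline(node, depth=0):
    """Return the tree as indented lines, one per element."""
    lines = ["  " * depth + node["tag"]]
    for child in node["children"]:
        lines.extend(outline(child, depth + 1))
    return lines


builder = TreeBuilder()
builder.feed("<html><body><h1>Title</h1><p>Hello <b>world</b></p></body></html>")
tree_lines = outline(builder.root)
print("\n".join(tree_lines))
```

Each level of indentation in the printed outline corresponds to one level of nesting in the document.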

HTML parsers facilitate the processing and extraction of specific content from websites. However, these parsers can vary significantly in performance, ease of use, and flexibility.

How To Do HTML Parsing in Python?

To parse HTML in Python, use a library such as BeautifulSoup. Install it, then fetch the HTML content using the Requests library.

Later, you can parse it with your chosen Python HTML parser and use methods provided by the library to navigate and extract data from the DOM.

The following sections of this article include a detailed explanation of parsing HTML with Python using different libraries.

What Is the Best HTML Parser in Python?

Choosing the best Python HTML parser depends entirely on your specific needs, such as the complexity of the web content and your application’s performance requirements.

For instance, you can use BeautifulSoup, which is ideal for beginners, even for complex scraping tasks.

But when you need high efficiency, fast processing, and advanced XML path capabilities, you should choose lxml.

Some of the popular and widely used Python HTML Parsers that you can consider using for web scraping are:

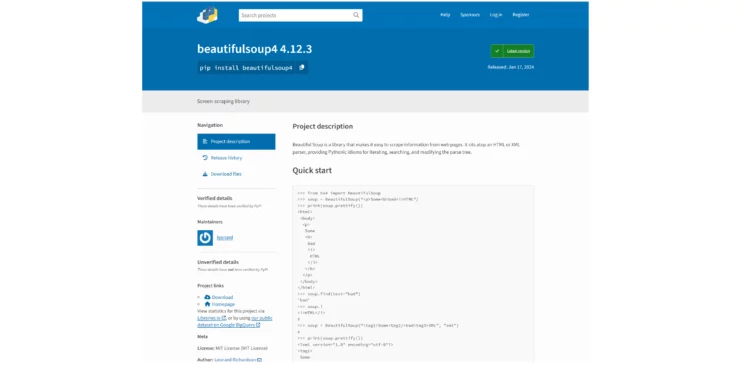

1. BeautifulSoup

BeautifulSoup is a popular Python library that offers a simple method to navigate, search, and modify the parse tree of HTML or XML files.

It is an excellent HTML parser for Python, ideal for web scraping, especially for beginners or projects where simplicity and quick development are critical.

How to Install BeautifulSoup

Here’s how you can install BeautifulSoup:

pip install beautifulsoup4

pip install lxml # or html5lib if you prefer
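Since the parser backend is optional, a script may want to check which one is actually installed before handing its name to BeautifulSoup. The sketch below is one possible way to do that with the standard library; the `pick_parser` helper and its preference order are assumptions made for illustration.

```python
from importlib.util import find_spec


def pick_parser():
    """Return the name of the first available BeautifulSoup backend."""
    # Preference order is an assumption: speed first, then leniency,
    # then the standard-library fallback that is always present.
    for module_name, parser_name in (("lxml", "lxml"), ("html5lib", "html5lib")):
        if find_spec(module_name) is not None:
            return parser_name
    return "html.parser"


parser_name = pick_parser()
print(f"Using parser: {parser_name}")
```

The returned string can then be passed as the second argument to `BeautifulSoup(...)`.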

Basic Usage of BeautifulSoup

Here’s a simple example of using BeautifulSoup for web scraping.

import requests

from bs4 import BeautifulSoup

# Fetch the HTML content

response = requests.get('https://example.com')

html_content = response.text

# Parse the HTML content

soup = BeautifulSoup(html_content, 'lxml') # or 'html5lib'

# Extract elements using BeautifulSoup methods

title = soup.title.text # gets the text within the <title> tag

print(f'Page Title: {title}')

# Find a specific element

first_paragraph = soup.find('p').text

print(f'First Paragraph: {first_paragraph}')
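On real pages a `<title>` or `<p>` tag may be missing, in which case `soup.title` or `soup.find('p')` is `None` and reading `.text` raises an error. The following is a more defensive sketch of the same idea. It parses an inline sample string (hypothetical markup) with the built-in `html.parser` backend so it runs without a network call or extra parser.

```python
from bs4 import BeautifulSoup

# Inline sample markup (hypothetical) so the sketch runs offline.
html_content = """
<html>
  <head><title>Sample Page</title></head>
  <body>
    <p class="intro">First paragraph.</p>
    <p>Second paragraph.</p>
    <a href="/about">About</a>
    <a>No link here</a>
  </body>
</html>
"""

soup = BeautifulSoup(html_content, "html.parser")

# soup.title is None when the tag is absent, so check before reading text.
title = soup.title.get_text(strip=True) if soup.title else None

# find_all() returns every match; select_one() takes a CSS selector.
paragraphs = [p.get_text(strip=True) for p in soup.find_all("p")]
intro = soup.select_one("p.intro")

# Tag.get() returns None instead of raising KeyError for a missing attribute.
links = [a.get("href") for a in soup.find_all("a") if a.get("href")]

print(title)
print(paragraphs)
print(links)
```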

Pros of BeautifulSoup

-

Ease of Use

BeautifulSoup’s methods make navigating, searching, and modifying the DOM tree easy.

-

Flexibility

It works well with different Python HTML parsers, so you can pick the one that best suits the document you are working with.

-

Good Documentation

It has clear documentation, which is excellent for beginners.

-

Community Support

It has strong community support, making it easier to find solutions to common problems.

Cons of BeautifulSoup

-

Performance

BeautifulSoup can be slower than other libraries like lxml when dealing with large documents.

-

Dependency on External Parsers

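It relies on an underlying parser. Python's built-in html.parser works out of the box, but faster or more lenient parsing requires external libraries such as lxml or html5lib.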

-

Limited to Static Content

It can only parse static content and does not handle JavaScript-rendered content.

Learn how to handle JavaScript-rendered content and scrape a dynamic site by reading our article on Scraping Dynamic Websites.

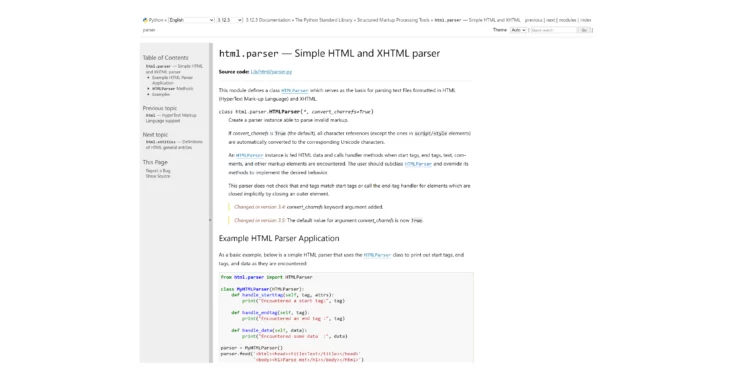

2. html.parser (Built-in)

html.parser is a built-in HTML parser in Python for parsing HTML documents.

Because html.parser ships with Python, it needs no additional external libraries such as BeautifulSoup or lxml.

How to Install html.parser

If Python is installed on your system, there’s no need to install anything else, as html.parser is part of Python’s standard library.

Basic Usage of html.parser

Here is a basic example of using html.parser to parse HTML content.

import requests

from html.parser import HTMLParser

class MyHTMLParser(HTMLParser):

    def handle_starttag(self, tag, attrs):

        print("Encountered a start tag:", tag)

    def handle_endtag(self, tag):

        print("Encountered an end tag :", tag)

    def handle_data(self, data):

        print("Encountered some data :", data)

# Fetch the HTML content

response = requests.get('https://example.com')

html_content = response.text

# Initialize the parser and feed it

parser = MyHTMLParser()

parser.feed(html_content)
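In practice you usually want to collect data rather than print every event. As a rough sketch, the subclass below gathers the `href` value of each link into a list. The `LinkCollector` name and the sample markup are made up for illustration.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags instead of printing events."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; tag and attribute
        # names arrive lowercased.
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


collector = LinkCollector()
collector.feed('<p><a href="/docs">Docs</a> and <A HREF="/blog">Blog</A></p>')
collector.close()
print(collector.links)
```

Keeping state on the parser instance is the usual pattern with html.parser, since the library reports events and does not build a tree for you.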

Pros of html.parser

-

Built-in

No additional installation is required if Python has already been installed.

-

Simplicity

Simple to use for basic parsing tasks.

-

Good for Learning

It is an excellent choice to understand the basics of HTML parsing.

Cons of html.parser

-

Performance

html.parser is slower and less efficient than Python HTML parsers like lxml.

-

Features

It lacks the advanced features and robust error-handling capabilities of libraries like BeautifulSoup or lxml.

-

Limited Capabilities

It may not handle malformed HTML as well as other Python HTML parsers.

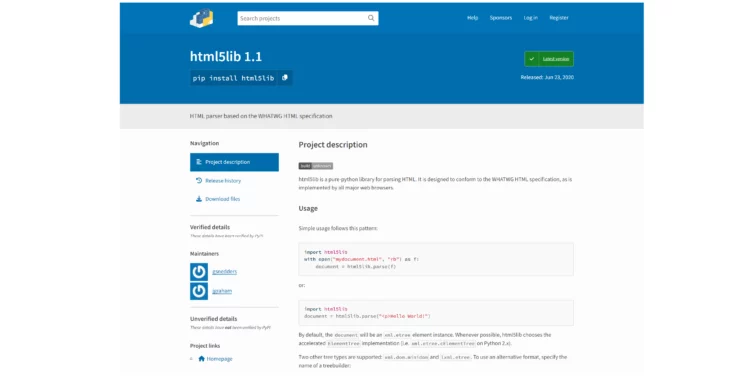

3. html5lib

html5lib is a valuable Python library for web scraping as it can handle malformed HTML, just like any modern web browser.

It is an excellent choice for parsing the HTML served by complex web applications that rely heavily on JavaScript, although html5lib itself does not execute JavaScript.

How to Install html5lib

Install html5lib using pip, Python’s package manager. It’s also recommended to install BeautifulSoup, as they work well together to provide powerful scraping capabilities.

pip install html5lib

pip install beautifulsoup4 # Optional but recommended for easier HTML handling

Basic Usage of html5lib

Here’s a simple example of how to use html5lib with BeautifulSoup to parse HTML and extract data.

import requests

from bs4 import BeautifulSoup

# Fetch the HTML content

response = requests.get('https://example.com')

html_content = response.text

# Parse the HTML content using html5lib

soup = BeautifulSoup(html_content, 'html5lib')

# Extract elements using BeautifulSoup methods

title = soup.title.text # Gets the text within the <title> tag

print(f'Page Title: {title}')

# Find a specific element

first_paragraph = soup.find('p').text

print(f'First Paragraph: {first_paragraph}')
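To see the browser-like repair in action, the short sketch below feeds html5lib a deliberately broken fragment (hypothetical markup) through BeautifulSoup and inspects the result. It assumes both `beautifulsoup4` and `html5lib` are installed.

```python
from bs4 import BeautifulSoup

# Deliberately broken markup: no <html> or <body>, and the <p> tags
# are never closed.
broken_html = "<p>one<p>two"

soup = BeautifulSoup(broken_html, "html5lib")

# html5lib follows the HTML5 parsing rules, so it adds the missing
# document structure and closes each paragraph.
paragraph_texts = [p.get_text() for p in soup.find_all("p")]

print(paragraph_texts)
print(soup.head is not None, soup.body is not None)
```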

Pros of html5lib

-

Robust Parsing

It can handle malformed HTML very well.

-

Flexible

It works well with BeautifulSoup and provides a flexible scraping solution.

-

Standard Compliance

Parses HTML documents according to HTML5 standards, which is crucial for modern web pages.

Cons of html5lib

-

Performance

html5lib is slower than some other Python HTML parsers like lxml.

-

Complexity

The parser itself is more complex, although it handles complex HTML well.

-

Resource Intensive

It is more resource-intensive, especially with large or complex HTML documents.

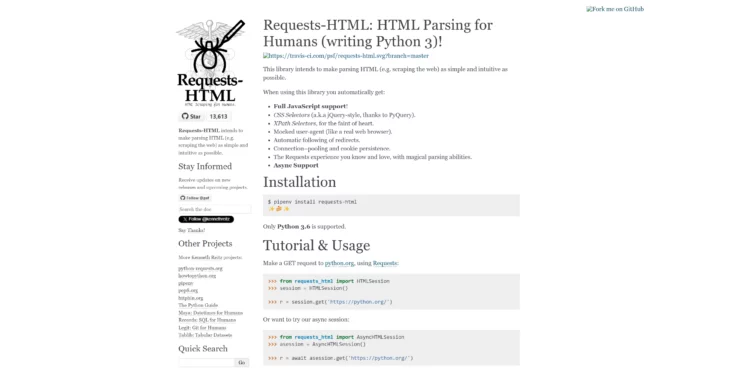

4. Requests-HTML

Requests-HTML is a Python library built on top of requests, providing capabilities for fetching web pages.

It helps in scraping as it includes features for parsing and manipulating HTML and support for JavaScript.

How to Install Requests-HTML

You can install requests-html using pip.

pip install requests-html

Basic Usage of Requests-HTML

Here’s how you can use `requests-html` to fetch a web page and then extract elements:

from requests_html import HTMLSession

# Create a session

session = HTMLSession()

# Fetch the webpage

r = session.get('https://example.com')

# Render JavaScript (optional, if the page uses JavaScript to load content)

r.html.render()

# Extract elements

title = r.html.find('title', first=True).text

print(f'Page Title: {title}')

# Extract all links

links = [link.attrs['href'] for link in r.html.find('a')]

print(links)

Pros of Requests-HTML

-

Integrated JavaScript Support

It can render JavaScript and access content on dynamic web pages.

-

Ease of Use

It simplifies the scraping process by combining fetching and parsing in a unified API.

-

Powerful Selectors

It supports modern CSS selectors and XPath, which provides flexibility when targeting HTML elements.

Cons of Requests-HTML

-

Performance

For complex websites, rendering JavaScript can be resource-intensive and slow.

-

Stability Issues

The JavaScript rendering can sometimes be unstable or inconsistent.

-

Dependency on Chromium

Since it relies on a Chromium backend for JavaScript rendering, it requires more setup and deployment time than other libraries.

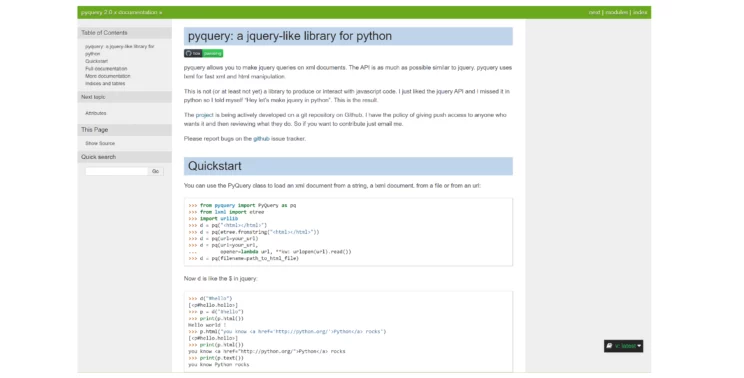

5. PyQuery

PyQuery is a Python library that allows you to make jQuery queries on XML documents. It is a convenient tool for web scraping as it parses HTML and extracts information.

How to Install PyQuery

Install PyQuery via pip, Python’s package manager.

pip install pyquery

Basic Usage of PyQuery

Here’s an example to demonstrate how to use PyQuery to fetch and parse HTML content:

from pyquery import PyQuery as pq

# Fetch and parse HTML from a URL

d = pq(url='https://example.com')

# Use CSS selectors to find elements

title = d('title').text()

print(f'Page Title: {title}')

# Find all 'p' elements and print their text

paragraphs = d('p').text()

print(f'Paragraph Texts: {paragraphs}')

Pros of PyQuery

-

Familiar Syntax

PyQuery uses a syntax similar to jQuery, which makes it easy to adapt and use.

-

Efficient and Powerful

It can parse large documents and support complex queries and manipulations.

-

Flexible

It can work well for both HTML and XML and handle different documents.

Cons of PyQuery

-

Limited JavaScript Support

It doesn’t handle JavaScript and can only parse static HTML. That means it cannot access dynamic content loaded via JavaScript.

-

Learning Curve

There might be a learning curve to effectively use PyQuery for users who are not familiar with jQuery.

-

Less Popular

It is less popular than libraries like BeautifulSoup or lxml. So, it has fewer community resources and less extensive documentation.

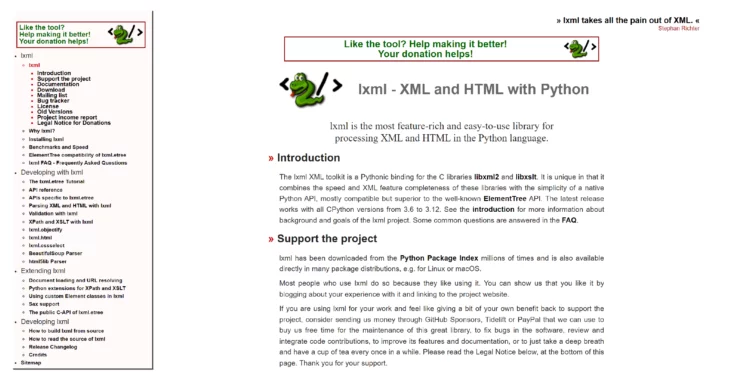

6. lxml

lxml is a feature-rich library for processing XML and HTML in Python. It supports XPath and XSLT, making it a popular choice for web scraping and data processing.

How to Install lxml

Install lxml via pip. It might require dependencies like libxml2 and libxslt if they are not already installed on your system.

pip install lxml

Basic Usage of lxml

Here is a simple example of using lxml to parse HTML and perform operations using XPath.

import requests

from lxml import etree

# Fetch the HTML content

response = requests.get('https://example.com')

html_content = response.content

# Parse the HTML

html = etree.HTML(html_content)

# Use XPath to extract elements

title = html.xpath('//title/text()')[0]

print(f'Page Title: {title}')

# Extract all links

links = html.xpath('//a/@href')

print(f'Links found: {links}')
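Because `xpath()` returns a list, indexing with `[0]` fails when nothing matches. A slightly more defensive variant might look like the sketch below, which also resolves relative links against a base URL. The sample markup and the `base_url` value are assumptions for illustration.

```python
from urllib.parse import urljoin

from lxml import etree

# Inline sample markup (hypothetical) so the sketch runs offline.
html_content = b"""
<html>
  <head><title> Sample Page </title></head>
  <body>
    <p class="intro">Welcome.</p>
    <a href="/about">About</a>
    <a href="contact">Contact</a>
  </body>
</html>
"""

html = etree.HTML(html_content)

# xpath() returns an empty list when nothing matches, so guard the index.
titles = html.xpath("//title/text()")
title = titles[0].strip() if titles else None

# Turn relative hrefs into absolute URLs (base_url is an assumed value).
base_url = "https://example.com/"
links = [urljoin(base_url, href) for href in html.xpath("//a/@href")]

# Predicates narrow a query to elements with a given attribute value.
intro = html.xpath('//p[@class="intro"]/text()')
missing = html.xpath("//h1/text()")

print(title)
print(links)
print(intro, missing)
```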

Pros of lxml

-

Performance

lxml is extremely fast, making it suitable for processing large datasets.

-

XPath and XSLT Support

It supports XPath and XSLT, which allows for sophisticated querying and transformation of XML and HTML documents.

Have a glance at the XPath cheat sheet used in web scraping.

-

Robust

It handles malformed HTML and adheres closely to XML and HTML standards.

Cons of lxml

-

Complexity

The API can be more complex than that of simpler libraries like BeautifulSoup.

-

Installation

Its installation may be tricky on some systems due to its dependencies on native code.

-

Error Handling

Its error messages can sometimes be cryptic, making debugging more challenging.

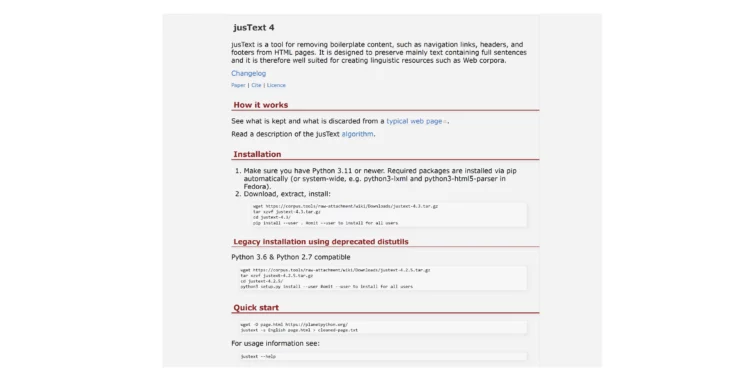

7. jusText

jusText is a Python library designed to remove boilerplate content from HTML pages, such as navigation links, headers, and footers.

It can extract meaningful or relevant textual content from a web page, which makes it particularly useful for web scraping.

How to Install jusText

You can install jusText via pip.

pip install justext

Basic Usage of jusText

Here’s a simple example of using jusText to extract the main text from an HTML page.

import requests

import justext

# Fetch the HTML content

response = requests.get('https://example.com')

html_content = response.content

# Get paragraphs with jusText

paragraphs = justext.justext(html_content, justext.get_stoplist("English"))

# Print paragraphs that are not considered boilerplate

for paragraph in paragraphs:

    if not paragraph.is_boilerplate:

        print(paragraph.text)

Pros of jusText

-

Effective Cleaning

It can remove irrelevant content from web pages, extracting clean, readable text.

-

Language Support

It supports multiple languages, improving its effectiveness across various sites.

-

Simple to Use

Since its API is straightforward, it is easy to integrate into web scraping workflows.

Cons of jusText

-

Limited Flexibility

Primarily focused on text extraction, it doesn’t provide functionality for more nuanced HTML parsing or data extraction.

-

Dependent on Heuristics

It relies on heuristics rather than structural analysis. So its performance can vary depending on the structure of the web pages.

-

Not for Complex Data Extraction

It is not suitable for tasks requiring structured data extraction like tables or lists.

Wrapping Up

Python offers a wide range of HTML parsers, such as BeautifulSoup, to handle varied web scraping needs. Knowing which Python HTML parser to use is essential for complex web scraping tasks.

A fully managed enterprise-grade web scraping service provider like ScrapeHero can assist you in making this decision and meeting your data-related needs.

We provide customized web scraping services across industries, helping businesses to use data for decision-making and strategic planning.

Frequently Asked Questions

Yes. HTML parsers like BeautifulSoup and html5lib are part of Python’s extensive library ecosystem, and each is designed for different web scraping requirements.

You can use the BeautifulSoup library to parse a local HTML file in Python. To do this, you have to open the file with Python’s built-in open() function and then parse its contents by creating a BeautifulSoup object with the file’s data.
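As a rough illustration of that approach, the sketch below writes a small sample file to a temporary directory and parses it. The file name `page.html` and its contents are made up for the example, and in your own code you would open an existing file instead.

```python
import tempfile
from pathlib import Path

from bs4 import BeautifulSoup

with tempfile.TemporaryDirectory() as tmp_dir:
    # Create a sample file so the sketch is self-contained.
    html_path = Path(tmp_dir) / "page.html"
    html_path.write_text(
        "<html><head><title>Local Page</title></head>"
        "<body><p>Hello from disk.</p></body></html>",
        encoding="utf-8",
    )

    # BeautifulSoup accepts an open file handle as well as a string.
    with open(html_path, encoding="utf-8") as f:
        soup = BeautifulSoup(f, "html.parser")

local_title = soup.title.get_text()
local_paragraph = soup.find("p").get_text()
print(local_title, "-", local_paragraph)
```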

To parse HTML using XPath in Python, you can use the lxml library, which supports XPath queries to navigate through HTML documents.

To parse an HTML table in Python, use BeautifulSoup to extract the table data from HTML, and Python Pandas to convert this data into a structured DataFrame.
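One way this could look is sketched below, assuming `beautifulsoup4` and `pandas` are installed. The table contents are invented sample data, and the sketch assumes a simple table with one header row and no merged cells.

```python
import pandas as pd
from bs4 import BeautifulSoup

# Invented sample table so the sketch runs offline.
html_content = """
<table>
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

soup = BeautifulSoup(html_content, "html.parser")
table = soup.find("table")

# Header cells become column names.
headers = [th.get_text(strip=True) for th in table.find_all("th")]

# Every row that contains <td> cells becomes one record.
rows = [
    [td.get_text(strip=True) for td in tr.find_all("td")]
    for tr in table.find_all("tr")
    if tr.find_all("td")
]

df = pd.DataFrame(rows, columns=headers)

# Scraped cells are strings, so convert numeric columns explicitly.
df["Price"] = df["Price"].astype(float)

print(df)
```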
