Google Shopping, formerly known as Google Product Search, Google Products, and Froogle, is an online platform by Google that makes online shopping more efficient for consumers and businesses. It streamlines the process of finding a wide array of products, and you can scrape Google Shopping to compare product details such as price, availability, reviews, and ratings.

Google Shopping also offers an advertising solution, Product Listing Ads (PLAs), which lets businesses showcase their products in Google search results. You might already be familiar with scraping Google Maps, Google Careers, and Google Reviews. This article focuses on scraping Google Shopping results.

Why Scrape Google Shopping?

Google Shopping is worth scraping because its listings have a high conversion rate. Scraping large amounts of e-commerce data helps enterprises make informed decisions on marketing strategies and track competitors. Below are some of the main reasons to scrape Google Shopping.

Market Research

You can scrape Google Shopping results for market research, as the data provides a deep understanding of current market trends. A detailed analysis of products can lead to a better product marketing strategy for any business. Tracking competitors and keeping up with trends also helps position a product more effectively.

Price Monitoring

Google Shopping price monitoring lets businesses track and compare their competitors’ product prices across different e-commerce sites. This makes Google Shopping results an efficient basis for a competitive pricing strategy that helps a business stay ahead of the competition and attract more customers.

Product Research

Apart from price monitoring, the scraped results can be used for product research. Product listings, the performance of different products, sales data, and other product data can be tracked so that companies can determine their product placement in the market.

Google Shopping Page Structure

A Google Shopping Results Page generally includes product name, product image, price, product link, merchant name, reviews, and ratings. Understanding the Google Shopping page structure is crucial to creating a Google Shopping scraper. Distinct HTML elements contain all this information, and with a suitable web scraping tool or library, they can be extracted.

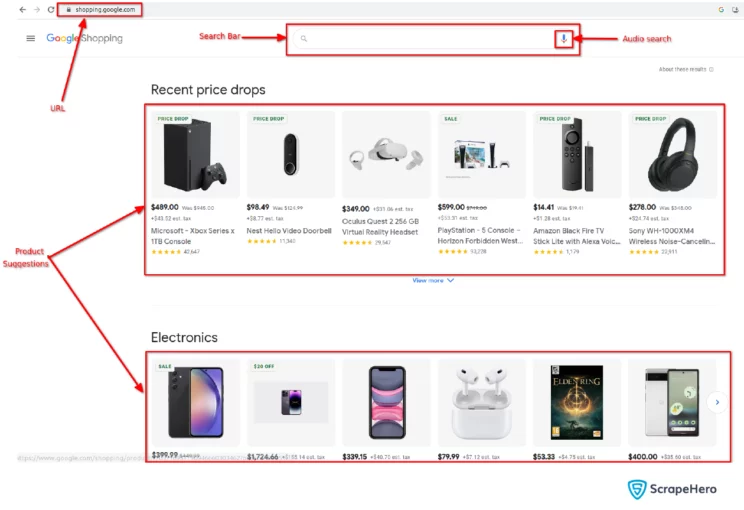
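To make the idea of extracting fields from distinct HTML elements concrete, here is a minimal sketch using LXML and XPath. The markup and class names in `SAMPLE_HTML` are invented for illustration and are not Google's real markup, and `extract_product` is a hypothetical helper.

```python
from lxml import html

# Hypothetical markup: these class names are made up for illustration
# and do not reflect the real Google Shopping page.
SAMPLE_HTML = """
<div class="product">
  <h3 class="title">Example Laptop 14</h3>
  <span class="price">$499.00</span>
  <a class="merchant" href="https://shop.example.com/laptop-14">Example Shop</a>
</div>
"""


def extract_product(page_source: str) -> dict:
    """Pull a few fields out of the sample markup with XPath."""
    tree = html.fromstring(page_source)
    title = tree.xpath('//h3[@class="title"]/text()')
    price = tree.xpath('//span[@class="price"]/text()')
    link = tree.xpath('//a[@class="merchant"]/@href')
    # xpath() returns a list, so guard against missing elements
    return {
        "title": title[0] if title else None,
        "price": price[0] if price else None,
        "link": link[0] if link else None,
    }


print(extract_product(SAMPLE_HTML))
```

Each XPath query returns a list, which is why every field falls back to `None` when its element is absent.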

Search/Home Page

The Search/Home page lists recent and trending products from different categories. You can use the search bar to find the products you want. A typical Google Shopping home page may look similar to the image below. Note that product suggestions vary from user to user.

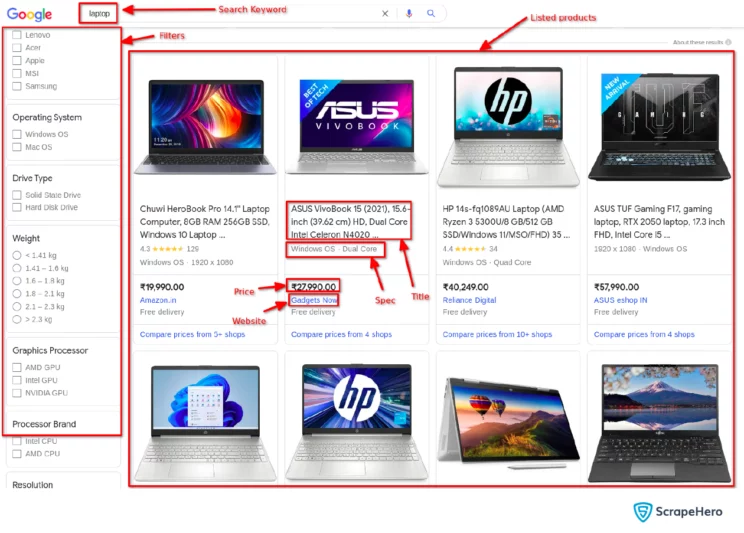

Listing Page

Searching for a keyword in the search bar takes you to the listing page, where all the products matching the keyword are listed. The listing page usually includes the following sections:

- Resultant Products: These are the search results that are listed according to the search keyword. Every listed product in this section contains the following data:

  - Image Thumbnail: A small image icon of the product.

  - Title: The title of the product.

  - Rating: The customer rating of the product.

  - Rating Count: The number of customers that rated this product.

  - Price: The sale price of this product.

  - Short Specification: The major specifications of this product.

  - Website: The actual website where the product is listed.

  - Price Comparison: A hyperlink that leads to the price comparison of this product on different websites.

- Filter Bar: With the filter bar, you can sort or filter the search results according to your requirements. Different categories will have different filter options.

The image below shows a typical listing page for the search keyword "laptop".

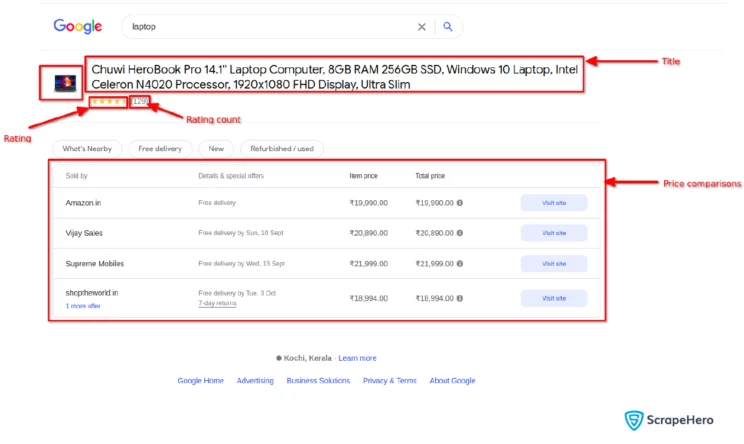
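The per-product fields on the listing page map naturally onto a small record type. The sketch below is one possible way to model them. `ListingItem` and its field names are hypothetical and do not appear in the scraper code in this article.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class ListingItem:
    """One search result on the listing page (hypothetical model)."""

    title: str
    price: str
    website: str
    # Fields that may be missing for some products default to None
    image_thumbnail: Optional[str] = None
    rating: Optional[float] = None
    rating_count: Optional[int] = None
    short_specification: Optional[str] = None
    price_comparison_url: Optional[str] = None


# Sample values are invented for illustration
item = ListingItem(
    title="Example Laptop 14",
    price="$499.00",
    website="shop.example.com",
    rating=4.5,
    rating_count=120,
)

# asdict() turns the record into a plain dictionary, ready for csv.DictWriter
row = asdict(item)
print(row)
```

Giving the optional fields a default of `None` keeps the record usable when a listing lacks a rating or a specification line.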

Product Page

From the listing page, you can click a specific product to open its product page. It contains more detailed information about the product, which is given below.

- Image: The image of the product.

- Title: The title of the product.

- Rating: The customer rating of the product.

- Rating Count: The number of customers that rated this product.

- Price Comparison Table: A table that helps in Google Shopping price monitoring and compares the product prices from different websites.

A typical product page is as shown below:

Steps To Scrape Google Shopping

Different tools can be used to scrape Google Shopping. This tutorial builds a Google Shopping scraper with the Python Requests module for handling HTTP requests and LXML for parsing the response and extracting data.

Follow the steps below to build and run the scraper:

Installing Dependencies

    $ pip install requests
    $ pip install lxml

Importing the Required Packages

    # For CSV file handling
    import csv

    # For cleaning the data
    import re

    # For sending HTTP requests
    import requests

    # For parsing the response
    from lxml import html

Initializing proxies

If you are using proxies, define them here. In this tutorial, the dictionary is left empty.

    # Defining proxies
    proxies = {
        # "http": "http://:",
        # "https": "http://:"
    }
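Requests expects the proxies dictionary to map a URL scheme to a proxy URL. As a rough sketch of how such a dictionary could be assembled, the hypothetical helper `build_proxies` below builds one from a host, a port, and optional credentials. The host name and credentials are placeholders, not real values.

```python
from typing import Optional
from urllib.parse import quote


def build_proxies(
    host: str,
    port: int,
    username: Optional[str] = None,
    password: Optional[str] = None,
) -> dict:
    """Build a proxies dictionary in the shape Requests accepts."""
    credentials = ""
    if username and password:
        # Percent-encode credentials so characters like "@" do not break the URL
        credentials = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    proxy_url = f"http://{credentials}{host}:{port}"
    # The same proxy is used here for both plain and TLS traffic
    return {"http": proxy_url, "https": proxy_url}


# Placeholder values for illustration only
print(build_proxies("proxy.example.com", 8080, "user", "p@ss"))
```

The resulting dictionary can be passed to `requests.get` through its `proxies` argument.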

Defining the Headers for the Requests

    # Defining the HTTP headers for the request
    headers = {
        "authority": "www.google.com",
        "accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
        "accept-language": "en-GB,en-US;q=0.9,en;q=0.8",
        "referer": "https://shopping.google.com/",
        "sec-ch-ua": '"Chromium";v="110", "Not A(Brand";v="24", "Google Chrome";v="110"',
        "sec-ch-ua-arch": '"x86"',
        "sec-ch-ua-bitness": '"64"',
        "sec-ch-ua-full-version": '"110.0.5481.177"',
        "sec-ch-ua-full-version-list": '"Chromium";v="110.0.5481.177", "Not A(Brand";v="24.0.0.0", "Google Chrome";v="110.0.5481.177"',
        "sec-ch-ua-mobile": "?0",
        "sec-ch-ua-model": '""',
        "sec-ch-ua-platform": '"Linux"',
        "sec-ch-ua-platform-version": '"5.14.0"',
        "sec-ch-ua-wow64": "?0",
        "sec-fetch-dest": "document",
        "sec-fetch-mode": "navigate",
        "sec-fetch-site": "same-site",
        "upgrade-insecure-requests": "1",
        "user-agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/110.0.0.0 Safari/537.36",
    }

Creating a Function To Save the Data to a CSV File

    def save_as_csv(data: list):
        """
        Save the extracted data as a CSV file.

        Args:
            data (list): Data as a list of dictionaries
        """
        # Column (field) names for the CSV file
        fieldnames = ["title", "image_url", "rating", "website", "item_price", "url"]

        # Opening a CSV file to save the data
        with open("outputs.csv", mode="w", newline="", encoding="utf-8") as file:
            writer = csv.DictWriter(file, fieldnames=fieldnames)

            # Writing the header row (column names)
            writer.writeheader()

            # Iterating through each product's data
            for row in data:
                # Writing the data in a row
                writer.writerow(row)
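To see what the resulting file looks like without touching the disk, the sketch below writes a sample row to an in-memory buffer using the same column names. The helper `rows_to_csv_text` and the sample row, including its URL, are made up for illustration.

```python
import csv
import io

# Same column names as the CSV-saving function in this article
fieldnames = ["title", "image_url", "rating", "website", "item_price", "url"]


def rows_to_csv_text(rows: list) -> str:
    """Render a list of dictionaries as CSV text in memory."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Invented sample row; None stands for a field that could not be extracted
sample_rows = [
    {
        "title": "Example Laptop 14",
        "image_url": None,
        "rating": "4.5 out of 5",
        "website": "Example Shop",
        "item_price": "$499.00",
        "url": "https://www.google.com/shopping/product/1",
    }
]

csv_text = rows_to_csv_text(sample_rows)
print(csv_text)
```

A field stored as `None` ends up as an empty cell, so rows with missing data still line up with the header.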

Creating a Function To Extract Product URLs From Listing Page

    def parse_listing_page(input_search_term: str):
        """
        Build the listing page request from the input search
        keyword, send it, and extract the product URLs from
        the response.

        Args:
            input_search_term (str): The search keyword that we
                want to search for

        Returns:
            list: List of extracted product URLs
        """
        # Listing page URL and query parameters
        # (passing params lets Requests URL-encode the search term)
        url = "https://www.google.com/search"
        params = {"q": input_search_term, "safe": "off", "tbm": "shop"}

        # Sending the request to the URL
        response = requests.get(url=url, params=params, headers=headers, proxies=proxies)

        # Parsing the response
        tree = html.fromstring(response.content)

        # Extracting product URLs
        product_urls = tree.xpath('//a[@class="xCpuod"]/@href')
        return product_urls
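A search term that contains spaces or characters such as `&` has to be percent-encoded before it goes into the query string. The sketch below shows one way to do that with the standard library. `build_listing_url` is a hypothetical helper, and the query parameters are the ones used in the listing page URL above.

```python
from urllib.parse import urlencode


def build_listing_url(search_term: str) -> str:
    """Return the listing page URL with the search term safely encoded."""
    # urlencode escapes spaces, "&", and other reserved characters
    query = urlencode({"q": search_term, "safe": "off", "tbm": "shop"})
    return f"https://www.google.com/search?{query}"


print(build_listing_url("gaming laptop"))
print(build_listing_url("pens & pencils"))
```

Without the encoding step, the `&` in the second search term would be read as the start of a new query parameter.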

Creating a Function To Extract the Data From Product Page

    def parse_product_page(product_urls: list):
        """
        Accept a list of product URLs, send a request to each
        one, collect the extracted data in a list, and return it.

        Args:
            product_urls (list): List of product URLs

        Returns:
            list: Data as a list of dictionaries
        """
        # Initializing an empty list to save the extracted data
        data = []

        # Iterating through each product URL
        for product_url in product_urls:
            # Generating the product URL
            url = f"https://www.google.com{product_url}"

            # Sending the request
            response = requests.get(url=url, headers=headers, proxies=proxies)

            # Parsing the response
            tree = html.fromstring(response.content)

            # Extracting title and image URL
            title = tree.xpath("//span[contains(@class, 't__title')]/text()")
            image_url = tree.xpath(
                "//div[@id='sg-product__pdp-container']//img[contains(@alt, 'View product image #1')]/@src"
            )
            if not image_url:
                # Falling back to the main image when the first XPath finds nothing
                image_url = tree.xpath(
                    "//div[@id='sg-product__pdp-container']//div[contains(@class, 'main-image')]/img/@src"
                )

            # Extracting the rows of the price comparison table
            price_table = tree.xpath(
                "//table[contains(@id, 'online-sellers-grid')]/tbody/tr"
            )

            # Extracting rating
            rating = tree.xpath(
                '//a[@href="#reviews"]/div[contains(@aria-label, "out of")]/@aria-label'
            )

            # Iterating through each row (one row per seller)
            for row in price_table:
                # Extracting the website name; skipping rows without one
                website = row.xpath("./td[1]//a/text()")
                if not website:
                    continue

                # Extracting the price on that website
                item_price = row.xpath("./td[3]/span/text()")
                item_price = item_price[0] if item_price else None

                # Creating a dictionary of data and appending it to the list
                data_to_save = {
                    "title": title[0] if title else None,
                    "image_url": image_url[0] if image_url else None,
                    "rating": rating[0] if rating else None,
                    "website": website[0] if website else None,
                    "item_price": item_price,
                    "url": url,
                }
                data.append(data_to_save)

        return data
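The extracted prices are plain strings, so comparing sellers needs a cleaning step first. The following is a minimal sketch, assuming prices formatted like `$1,299.00` with a comma as the thousands separator. `parse_price` and `cheapest_offer` are hypothetical helpers that are not part of the scraper above.

```python
import re
from typing import Optional


def parse_price(price_text: Optional[str]) -> Optional[float]:
    """Convert a price string such as "$1,299.00" to a float."""
    if not price_text:
        return None
    # Assumption: digits with optional thousands commas and a decimal point
    match = re.search(r"\d[\d,]*(?:\.\d+)?", price_text)
    if not match:
        return None
    return float(match.group().replace(",", ""))


def cheapest_offer(rows: list) -> Optional[dict]:
    """Return the row with the lowest parsable price, or None."""
    priced = [(parse_price(row.get("item_price")), row) for row in rows]
    # Drop rows whose price is missing or could not be parsed
    priced = [(price, row) for price, row in priced if price is not None]
    if not priced:
        return None
    return min(priced, key=lambda pair: pair[0])[1]


# Invented sample rows in the same shape as the scraped data
offers = [
    {"website": "Shop A", "item_price": "$1,299.00"},
    {"website": "Shop B", "item_price": "$999.99"},
    {"website": "Shop C", "item_price": None},
]
print(cheapest_offer(offers))
```

Prices in other locales, for example with a comma as the decimal separator, would need a different pattern.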

Combining the Functions

    if __name__ == "__main__":
        input_search_term = "laptop"
        product_urls = parse_listing_page(input_search_term)
        data = parse_product_page(product_urls)
        save_as_csv(data)

Put all the code together to see the output. Refer to the following link for the complete code.

https://github.com/scrapehero-code/Google-Shopping/blob/main/google_shopping.py

Wrapping Up

The Google Shopping scraper is a powerful tool that assists enterprises in gaining insight into products and trends in the market, thus leading to data-driven decisions for scaling businesses. Target markets, consumers, competitors, etc. can be studied from the extracted data.

ScrapeHero, a reputed enterprise-grade data scraping service provider, offers various services related to data scraping. Check out our scrapers for scraping various Google services. Let’s connect if you are looking for a reliable data scraping service provider for customized services ranging from data extraction to robotic process automation to alternative data.