Picture this: it’s 11 PM, and your customer tries to order ice cream for a late-night binge, only to see the app display “sold out.” You lose the sale and, more importantly, the customer’s trust.

In the world of quick commerce (Q-commerce), where delivery times are counted in minutes and customer expectations are sky-high, every second counts.

Quick commerce isn’t a passing trend. It’s a rapidly growing market.

According to Market Research.com, the market reached $111 billion in 2024 and is expected to hit $353 billion by 2030, a compound annual growth rate (CAGR) of 21.3%.

This fast-paced industry demands real-time data, including dynamic pricing, stock availability, and hyperlocal variations, all of which can change in the blink of an eye.

Quick commerce web scraping is the critical practice that enables businesses to keep pace with this ever-evolving landscape. By extracting structured data from platforms like Blinkit, Zepto, and Instamart, web scraping helps provide real-time insights needed to stay competitive.

Building and maintaining scrapers for these platforms is hard, though. Outsourcing to a web scraping service like ScrapeHero gets you the data you need without the hassle. Want to scrape Q-commerce data the easiest way?

Continue reading to explore the key aspects of quick commerce web scraping, including the types of data collected, the platforms that can be scraped, the potential challenges, and the strategies that can be employed to extract actionable data efficiently.

Hop on a free call with our experts to gauge how web scraping can benefit your business. Is web scraping the right choice for you?

Types of Data that Quick Commerce Web Scraping Can Gather

Quick commerce web scraping enables the extraction of structured data from quick commerce platforms. Here are some key types of data that can be gathered through quick commerce web data extraction.

Product Catalog

A core component of any quick commerce platform is its product catalog. Web scraping can gather comprehensive details about products, including:

- Product names

- Prices

- Availability status (in stock, out of stock, limited quantity)

- Discounts and promotions

- Product descriptions

- Images and product specifications

Delivery ETA (Estimated Time of Arrival)

Real-time data on delivery times is crucial for both customers and businesses. Quick commerce platforms often provide estimated delivery times for products depending on the location. Web scraping can extract:

- Delivery windows

- Estimated arrival times based on the user’s location

- Availability of time slots for delivery

Store-Level Differences (Location-Based Catalog)

Many quick commerce platforms feature different catalogs based on geographic location. Products available in one area may not be available in another due to local stock variations. Scraping can capture:

- Geo-specific product listings

- Price differences by location

- Stock availability based on the zip code or delivery area

SKU IDs and Categories

Each product on a quick commerce platform is often associated with an SKU (Stock Keeping Unit) and categorized into various product types. Web scraping can gather:

- Unique SKU IDs

- Product categories (e.g., snacks, beverages, household goods)

- Subcategories and variants (e.g., different sizes, flavors, or packaging)

Promotional Offers and Flash Sales

Flash sales, time-sensitive offers, and limited-time discounts are a common feature of quick commerce platforms. Web scraping can track:

- Active promotional campaigns (e.g., discounts, BOGO deals)

- Flash sale timelines (start and end times)

- Category-specific promotions
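To keep these data types together, each scraped observation can be stored as one record. The sketch below is a hypothetical Python schema, assuming Python 3.9+; field names such as `sku_id`, `list_price`, and `eta_minutes` are illustrative, not any platform's actual field names. Storing one row per SKU, location, and timestamp makes location-based and time-based comparisons straightforward.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from enum import Enum
from typing import Optional


class Availability(Enum):
    IN_STOCK = "in_stock"
    LIMITED = "limited"          # e.g. "Only 2 left"
    OUT_OF_STOCK = "out_of_stock"


@dataclass
class ProductSnapshot:
    """One observation of one SKU at one location at one moment (hypothetical schema)."""
    platform: str                    # e.g. "blinkit", "zepto", "instamart"
    sku_id: str                      # the platform's unique SKU identifier
    name: str
    category: str                    # e.g. "snacks", "beverages"
    subcategory: Optional[str]
    variant: Optional[str]           # size, flavor, or packaging
    price: Decimal                   # selling price after any discount
    list_price: Optional[Decimal]    # price before discount, if shown
    availability: Availability
    location: str                    # ZIP code or delivery area used for the request
    eta_minutes: Optional[int]       # delivery ETA shown for that location
    promotions: list[str] = field(default_factory=list)
    scraped_at: str = ""             # ISO 8601 timestamp

    @property
    def discount_pct(self) -> Optional[Decimal]:
        """Discount relative to the list price, rounded to one decimal place."""
        if self.list_price is None or self.list_price <= 0:
            return None
        return ((self.list_price - self.price) / self.list_price * 100).quantize(Decimal("0.1"))
```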

Key Quick Commerce Platforms and Their Scraping Hurdles

Quick commerce platforms such as Zepto, Blinkit, and Swiggy Instamart are changing the way people shop by offering fast delivery, often within minutes.

Overview of Major Q-Commerce Platforms

Zepto: Known for its hyperlocal grocery delivery, Zepto promises delivery within minutes across major urban centers.

It focuses on essentials, offering a streamlined shopping experience for urban dwellers.

Zepto’s platform is mobile-first, with an interface optimized for quick and easy ordering. It operates in select cities and continues to expand its user base.

Blinkit (formerly Grofers): Blinkit is one of the most popular quick commerce platforms, offering grocery and essentials delivery within minutes.

Blinkit operates primarily in urban areas, and its integration with Zomato has further solidified its place in the market.

Its app offers a smooth, intuitive interface designed for ease of use. The platform is predominantly mobile-driven, although it also has a web interface.

Want to monitor Blinkit in real time? Try this enterprise-grade Blinkit scraping API to extract product details, ZIP codes, and search results from blinkit.com.

Instamart: As part of the Swiggy ecosystem, Instamart caters to fast grocery delivery. It’s available in multiple cities and provides a wide range of products from groceries to household essentials.

The mobile app is the primary point of interaction for most users, though some data may also be available through its website.

Quick Commerce Web Scraping: Mobile App vs. Web

One of the most important aspects of scraping data from quick commerce platforms is understanding the difference between their mobile apps and web interfaces.

- Mobile Apps: Most Q-commerce platforms are mobile-first, meaning they prioritize their mobile applications for user interaction.

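Mobile apps typically load data through backend API calls (usually returning JSON) and render it dynamically, sometimes with JavaScript-based frameworks such as React Native. Because there is often no HTML page to parse, traditional scraping methods (e.g., simple HTML scraping) may not work; scrapers instead need to work with the underlying API traffic.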

- Web Platforms: In contrast, web platforms may have a simpler, more structured design, where data like product catalogs, stock levels, and pricing are often more directly accessible through the HTML code.

However, many quick commerce platforms now use AJAX or JavaScript frameworks, similar to mobile apps, which can make scraping more difficult.

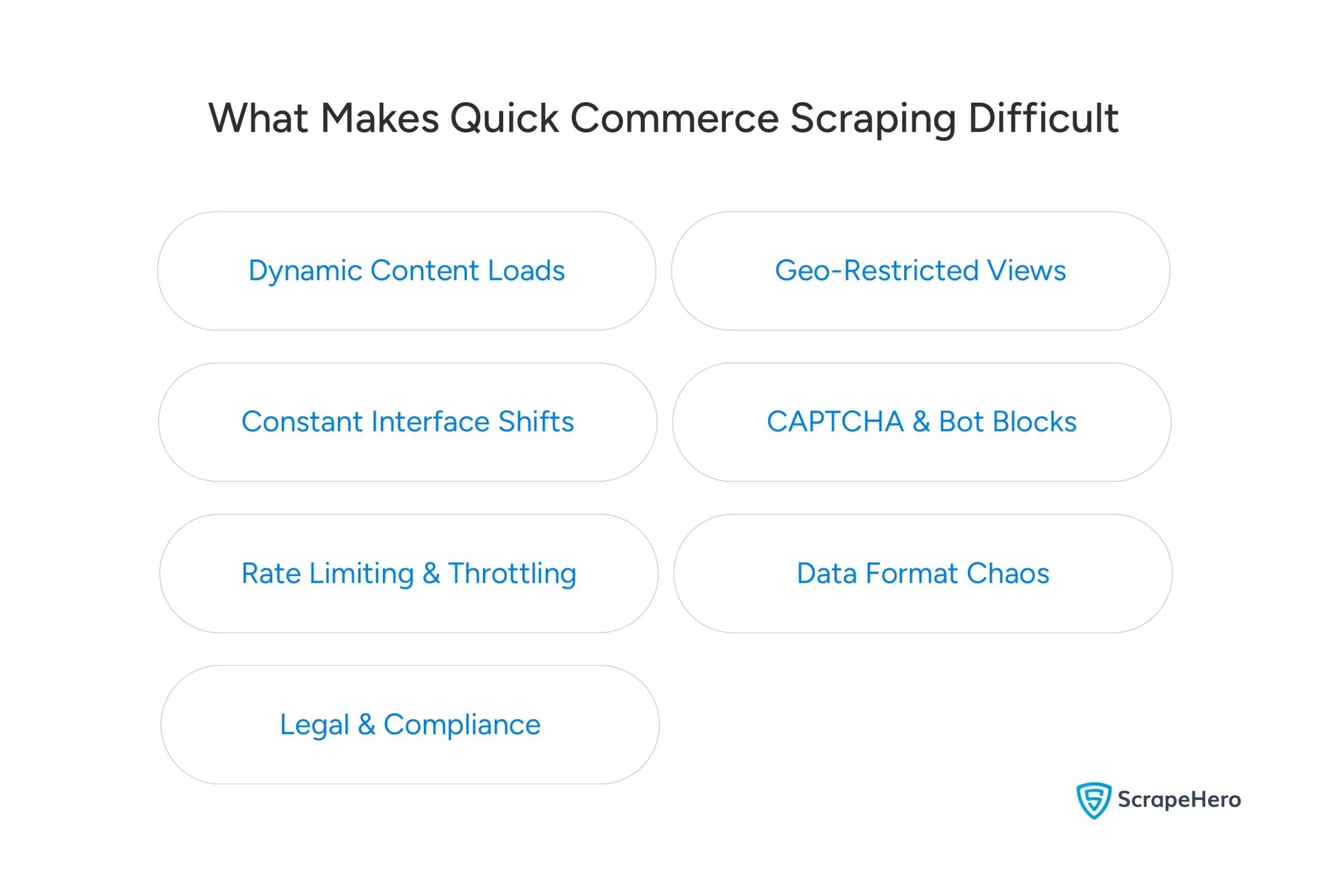

Hurdles to Scraping Quick Commerce Data

Quick commerce data scraping sounds simple, until the hurdles appear.

Instant-delivery platforms use advanced protections and ever-changing interfaces, so DIY scrapers often end up with broken workflows and data gaps.

Below are the core difficulties that arise when web scraping for Q-commerce platforms without professional support.

- JavaScript-Rendered Content

- Geo-Restricted Data

- Frequent UI and API Changes

- CAPTCHAs and Bot Deterrents

- Rate Limits and Throttling

- Data Normalization and Inconsistency

- Scaling Across Regions and Categories

- Legal and Compliance Risks

JavaScript-Rendered Content

Many quick commerce platforms load product lists, prices, and stock levels via JavaScript. A basic HTTP request won’t see that data. Scrapers need a headless browser or API reverse-engineering to capture the full page state.
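When the catalog arrives as JSON from a background API call, capturing that response is often easier than parsing the rendered page. Below is a minimal sketch using Playwright's Python sync API. The URL fragment `/api/products` and the product keys (`id`, `name`, `price`) are hypothetical, because each platform names these differently; the real endpoint has to be found by inspecting network traffic in the browser's developer tools.

```python
from typing import Any, Iterator

# Hypothetical: keys that mark a dict as a product record in the captured JSON.
PRODUCT_KEYS = ("id", "name", "price")


def iter_product_dicts(payload: Any) -> Iterator[dict]:
    """Recursively yield every dict in a JSON payload that carries all PRODUCT_KEYS."""
    if isinstance(payload, dict):
        if all(key in payload for key in PRODUCT_KEYS):
            yield payload
        for value in payload.values():
            yield from iter_product_dicts(value)
    elif isinstance(payload, list):
        for item in payload:
            yield from iter_product_dicts(item)


def capture_catalog_json(url: str, api_fragment: str = "/api/products") -> list[dict]:
    """Load `url` in headless Chromium and collect products from matching JSON responses."""
    # Imported lazily so iter_product_dicts() works without Playwright installed.
    from playwright.sync_api import sync_playwright

    products: list[dict] = []

    def on_response(response) -> None:
        # Only inspect JSON responses whose URL contains the (hypothetical) API fragment.
        content_type = response.headers.get("content-type", "")
        if api_fragment in response.url and "json" in content_type:
            try:
                products.extend(iter_product_dicts(response.json()))
            except Exception:
                pass  # skip bodies that fail to parse or are no longer available

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.on("response", on_response)
        page.goto(url, wait_until="networkidle")
        browser.close()
    return products
```

Walking the payload recursively, rather than hard-coding a path such as `data.items`, keeps the parser working when the platform nests its product list differently from one page type to the next.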

Geo-Restricted Data

Quick commerce platforms tailor pricing and availability to specific locations. Scraping from one IP returns only that area’s view. A broad scraper must rotate proxies across multiple cities or ZIP codes. Failing to simulate local requests yields incomplete insights.

Frequent UI and API Changes

Apps can push updates as often as several times a week. HTML structures shift, CSS classes are renamed, and API endpoints get rearranged. Homegrown scripts break with each change, and continuous maintenance becomes a full-time job.
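A common safeguard against silent breakage is to validate each scraped batch and raise an alert when a field suddenly stops being filled, which usually means a selector or API field was renamed. The sketch below is a minimal version; the field names in `REQUIRED_FIELDS` and the 90% threshold are illustrative assumptions.

```python
from collections.abc import Iterable

REQUIRED_FIELDS = ("sku_id", "name", "price", "availability")  # hypothetical schema


def field_fill_rates(records: list[dict], fields: Iterable[str] = REQUIRED_FIELDS) -> dict[str, float]:
    """Share of records in which each field is present and non-empty."""
    total = len(records)
    if total == 0:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / total
        for f in fields
    }


def detect_drift(records: list[dict], min_fill_rate: float = 0.9) -> list[str]:
    """Return the fields whose fill rate fell below the threshold in this batch."""
    rates = field_fill_rates(records)
    return [f for f, rate in rates.items() if rate < min_fill_rate]
```

Running `detect_drift` after every crawl turns a layout change into an immediate, specific alert (for example `["price"]`) instead of a gap discovered weeks later in a dashboard.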

CAPTCHAs and Bot Deterrents

Platforms deploy CAPTCHAs, rate limits, and behavior-analysis tools to block automated access. Simple scrapers can be flagged and blocked. Overcoming these measures demands smart request pacing, CAPTCHA-solving services, and stealth techniques.

For a deeper dive, see our guide to six methods for bypassing CAPTCHAs while web scraping.

Rate Limits and Throttling

Instant-delivery apps limit the number of requests an IP address can make per minute. Excess traffic leads to HTTP 429 (Too Many Requests) errors or temporary bans.
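A scraper that respects these limits slows down instead of retrying immediately. The sketch below works with a `requests.Session` (or any object with a compatible `get` method). It honors the `Retry-After` header on 429 and 503 responses and otherwise falls back to exponential backoff with random jitter. The retry count, base delay, and cap are assumptions to tune per site, and only the seconds form of `Retry-After` is handled, not the HTTP-date form.

```python
import random
import time
from typing import Callable, Optional

RETRYABLE = (429, 503)


def backoff_delay(attempt: int, retry_after: Optional[str],
                  base: float = 1.0, cap: float = 60.0) -> float:
    """Seconds to wait before retry number `attempt` (0-based)."""
    if retry_after is not None and retry_after.strip().isdigit():
        return min(float(retry_after), cap)  # the server said how long to wait
    # Exponential backoff with "full jitter": a random wait in [0, base * 2**attempt].
    return random.uniform(0, min(cap, base * 2 ** attempt))


def get_with_backoff(session, url: str, max_retries: int = 5,
                     sleep: Callable[[float], None] = time.sleep, **kwargs):
    """GET `url`, retrying on 429/503; returns the last response either way."""
    for attempt in range(max_retries + 1):
        response = session.get(url, timeout=30, **kwargs)
        if response.status_code not in RETRYABLE or attempt == max_retries:
            return response
        sleep(backoff_delay(attempt, response.headers.get("Retry-After")))
```

The jitter spreads retries from many workers over time, so they do not all hit the server again at the same instant.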

Data Normalization and Inconsistency

Prices may appear as “$120,” “120 dollars,” or “120.00” depending on category or update. Stock statuses might read “In Stock,” “Only 2 left,” or “Out of Stock.” Normalizing and standardizing these variations is crucial for accurate analytics.
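Here is a minimal normalization sketch for the variations above. It assumes prices arrive as free-text strings in a single currency and that stock labels come from a small, known vocabulary. The label mapping is illustrative and would need to be extended per platform.

```python
import re
from decimal import Decimal, InvalidOperation
from typing import Optional

# Matches the first number in a price string, e.g. "$120", "120 dollars", "₹1,299.50".
_PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def normalize_price(raw: str) -> Optional[Decimal]:
    """Convert a free-text price into a Decimal with two decimal places."""
    match = _PRICE_RE.search(raw)
    if not match:
        return None
    try:
        return Decimal(match.group().replace(",", "")).quantize(Decimal("0.01"))
    except InvalidOperation:
        return None


def normalize_stock(raw: str) -> tuple[str, Optional[int]]:
    """Map a stock label to (status, units_left_if_known)."""
    text = raw.strip().lower()
    if "out of stock" in text or "sold out" in text:
        return "out_of_stock", 0
    limited = re.search(r"only\s+(\d+)\s+left", text)
    if limited:
        return "limited", int(limited.group(1))
    if "in stock" in text:
        return "in_stock", None
    return "unknown", None
```

Using `Decimal` rather than `float` avoids rounding surprises when prices are later compared or summed. Returning `"unknown"` instead of guessing keeps new, unmapped labels visible.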

Scaling Across Regions and Categories

Covering dozens of cities and hundreds of SKUs multiplies complexity. Each combination requires its own crawl schedule and error handling. DIY setups struggle to maintain coverage and performance at scale.
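One way to keep this manageable is to treat every location and category pair as an independent job. That way, a failure in one combination is retried on its own instead of stalling the whole crawl. Below is a minimal sketch built on the standard library's `concurrent.futures`. `fetch_category` is a hypothetical stand-in for the real per-job scraper, and the worker and retry counts are assumptions.

```python
import itertools
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable

Job = tuple[str, str]  # (location, category)


def run_crawl(
    locations: list[str],
    categories: list[str],
    fetch_category: Callable[[str, str], list[dict]],
    max_workers: int = 8,
    max_attempts: int = 3,
) -> tuple[dict[Job, list[dict]], list[Job]]:
    """Run one job per (location, category); return results and the jobs that kept failing."""

    def run_job(job: Job) -> list[dict]:
        for attempt in range(1, max_attempts + 1):
            try:
                return fetch_category(*job)
            except Exception:
                if attempt == max_attempts:
                    raise  # give up on this job only; other jobs keep running
        return []  # only reached if max_attempts < 1

    jobs = list(itertools.product(locations, categories))
    results: dict[Job, list[dict]] = {}
    failed: list[Job] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(run_job, job): job for job in jobs}
        for future in as_completed(futures):
            job = futures[future]
            try:
                results[job] = future.result()
            except Exception:
                failed.append(job)  # candidates for the next scheduled run
    return results, sorted(failed)
```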

Legal and Compliance Risks

Yes, there can be legal consequences if you don’t scrape ethically. Here’s a guide to understand the A to Z of the legal side of web scraping: Is Web Scraping Legal? A Guide to Ethical Web Scraping.

Terms of service often forbid automated scraping. Violating them can lead to IP bans or legal warnings. A compliant approach uses ethical scraping practices, respects robots.txt, and negotiates data-access agreements when possible.
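Checking robots.txt before crawling can be automated with Python's standard-library `urllib.robotparser`. Here is a minimal sketch. The rules and the user-agent string are made-up examples. Passing this check does not, on its own, make a crawl compliant with a site's terms of service.

```python
from urllib.robotparser import RobotFileParser


def build_robot_checker(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that was already fetched from the site's /robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


EXAMPLE_ROBOTS = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

checker = build_robot_checker(EXAMPLE_ROBOTS)
agent = "ExampleResearchBot/1.0"  # hypothetical user-agent string
print(checker.can_fetch(agent, "https://example.com/products/milk"))  # True
print(checker.can_fetch(agent, "https://example.com/checkout/cart"))  # False
print(checker.crawl_delay(agent))                                     # 5
```

The `Crawl-delay` value, when present, is a natural input for the request pacing a scraper uses for that site.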


Tackling these challenges demands specialized tooling, skilled engineers, and dedicated monitoring. That’s why many brands turn to web scraping service experts like ScrapeHero, teams that handle the tough technical and legal work so data remains reliable and uninterrupted.

Scraping Strategies for Quick Commerce Web Scraping

To effectively scrape quick commerce data from platforms like Zepto, Blinkit, and Swiggy Instamart, businesses need advanced scraping strategies that can handle the dynamic and often challenging nature of these sites.

Quick commerce platforms often rely on JavaScript-heavy content, mobile-first interfaces, and anti-bot measures, which makes data extraction more complex.

Below are the key scraping strategies used for quick commerce web scraping.

1. Headless Browsers (e.g., Puppeteer/Playwright)

Headless browsers are a critical tool for scraping quick commerce platforms because they allow dynamic content rendering without requiring a visible user interface.

Tools like Puppeteer and Playwright are often used to simulate real user interactions within a headless browser, making it possible to scrape data from JavaScript-rendered pages that load content dynamically. These browsers can:

- Render JavaScript and capture data after it is processed by the platform.

- Click buttons, scroll through pages, and interact with elements as a human user would.

- Go beyond the static HTML to scrape data that appears only after user interaction, such as product listings, stock levels, and pricing updates.

Headless browsing is essential for extracting real-time pricing and stock information from web interfaces, including mobile web versions, built with complex JavaScript frameworks like React or Vue.js.
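Here is a minimal sketch of this approach using Playwright's Python sync API. It loads a listing page, scrolls until no new product cards appear (for infinite-scroll layouts), and then reads the rendered cards. The CSS selectors such as `[data-testid='product-card']` are hypothetical placeholders; real selectors must be taken from the target page and will change over time. The scroll distance and wait time are assumptions.

```python
from typing import Optional

# Hypothetical selectors: replace with the ones used by the target page.
CARD_SELECTOR = "[data-testid='product-card']"
NAME_SELECTOR = "[data-testid='product-name']"
PRICE_SELECTOR = "[data-testid='product-price']"


def first_text(locator) -> Optional[str]:
    """Return the trimmed text of the first match, or None if nothing matched."""
    return locator.first.inner_text().strip() if locator.count() else None


def scrape_listing(url: str, max_scrolls: int = 20) -> list[dict]:
    """Render a JavaScript-heavy listing page and extract each card's name and price."""
    from playwright.sync_api import sync_playwright  # lazy import; needs `pip install playwright`

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.goto(url, wait_until="domcontentloaded")
        page.wait_for_selector(CARD_SELECTOR, timeout=15_000)  # wait for JS to render cards

        previous = -1
        for _ in range(max_scrolls):
            count = page.locator(CARD_SELECTOR).count()
            if count == previous:
                break  # no new cards loaded since the last scroll
            previous = count
            page.mouse.wheel(0, 4000)    # scroll down to trigger lazy loading
            page.wait_for_timeout(1500)  # give new content time to render

        items = [
            {"name": first_text(card.locator(NAME_SELECTOR)),
             "price": first_text(card.locator(PRICE_SELECTOR))}
            for card in page.locator(CARD_SELECTOR).all()
        ]
        browser.close()
    return items
```

The raw `price` strings returned here would still need the normalization step described under data normalization before analysis.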

2. IP Rotation & Residential Proxies

Quick commerce platforms often implement anti-bot measures such as rate limiting, CAPTCHAs, and IP blocking to prevent excessive scraping. IP rotation and residential proxies are essential for overcoming these obstacles while scraping efficiently.

- IP Rotation: By rotating between multiple IP addresses, businesses can avoid triggering platform defenses that block a single IP address after too many requests. This technique ensures that requests appear to come from different users, making it harder for the platform to detect scraping behavior.

- Residential Proxies: Unlike data center proxies, residential proxies use real user IP addresses provided by Internet Service Providers (ISPs). These proxies are much harder to detect and block because they appear as genuine residential users.

Scraping services often use residential proxies to ensure that requests are seen as legitimate user activity, thereby bypassing IP bans and throttling.

Want to take a look at the different types of proxy servers that you can select to avoid potential financial losses and achieve the desired outcome?

IP rotation and residential proxies enable businesses to scrape large volumes of data over long periods without running into technical roadblocks, ensuring consistent data collection from multiple locations and platforms.
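Here is a minimal round-robin rotation sketch. Each request takes the next proxy in the pool, and a proxy that gets blocked is benched for a cooldown period. The proxy URLs are placeholders and the five-minute cooldown is an assumption. Many commercial residential-proxy providers expose their own rotating endpoint, which would replace this logic.

```python
import time
from collections import deque
from typing import Callable, Optional


class ProxyPool:
    """Round-robin proxy rotation with a cooldown for proxies that get blocked."""

    def __init__(self, proxies: list[str], cooldown_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._queue = deque(proxies)
        self._benched: dict[str, float] = {}  # proxy -> time it may be used again
        self._cooldown = cooldown_s
        self._clock = clock

    def next_proxy(self) -> Optional[str]:
        """Return the next usable proxy, or None if every proxy is cooling down."""
        for _ in range(len(self._queue)):
            proxy = self._queue[0]
            self._queue.rotate(-1)  # move it to the back to keep round-robin order
            if self._benched.get(proxy, 0.0) <= self._clock():
                return proxy
        return None

    def report_blocked(self, proxy: str) -> None:
        """Bench a proxy after a 403/429 response or a CAPTCHA page."""
        self._benched[proxy] = self._clock() + self._cooldown
```

With `requests`, the chosen proxy would be passed as `proxies={"http": proxy, "https": proxy}` on each call. When `next_proxy()` returns `None`, the scraper should pause rather than fall back to a blocked address.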

3. Using Location Emulation or Spoofing for Hyperlocal Content

Quick commerce platforms often tailor their product offerings, prices, and delivery slots based on the customer’s geographic location.

To extract relevant hyperlocal data, businesses need to simulate various locations to get insights into how the platform’s data changes by area. This can be achieved through location emulation or spoofing.

- Location Emulation: Scraping services use tools like GPS spoofing or proxy rotation with geo-targeting to simulate requests from different locations. This allows businesses to scrape price variations or stock availability that are specific to certain cities, regions, or even neighborhoods.

- Spoofing Delivery Slots: Quick commerce platforms often offer different delivery timeframes based on location. By emulating a location within a specific region, businesses can gather hyperlocal insights into available delivery slots and time-sensitive offers.

This strategy is particularly useful for businesses that want to localize their pricing, optimize inventory, or customize marketing campaigns based on specific geographic regions.
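On the web versions of these platforms, location emulation can be done at the browser level. This differs from the GPS spoofing and geo-targeted proxies mentioned above. Playwright's `browser.new_context()` accepts `geolocation`, `permissions`, `locale`, and `timezone_id` options. The sketch below assumes Python and Playwright, and its coordinates are approximate city-center values used only for illustration. Many platforms also ask for a delivery address or ZIP code in the UI, which this sketch does not handle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    name: str
    latitude: float
    longitude: float
    timezone_id: str = "Asia/Kolkata"
    locale: str = "en-IN"


# Approximate coordinates, for illustration only.
LOCATIONS = [
    Location("Bengaluru", 12.9716, 77.5946),
    Location("Mumbai", 19.0760, 72.8777),
]


def context_options(loc: Location) -> dict:
    """Keyword arguments for Playwright's browser.new_context() that emulate a location."""
    return {
        "geolocation": {"latitude": loc.latitude, "longitude": loc.longitude},
        "permissions": ["geolocation"],  # let the page read the emulated position
        "timezone_id": loc.timezone_id,
        "locale": loc.locale,
    }


def snapshot_per_location(url: str, locations: list[Location]) -> dict[str, str]:
    """Load the same page once per emulated location and return the rendered HTML."""
    from playwright.sync_api import sync_playwright  # lazy import

    pages = {}
    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        for loc in locations:
            context = browser.new_context(**context_options(loc))  # isolated cookies/storage
            page = context.new_page()
            page.goto(url, wait_until="networkidle")
            pages[loc.name] = page.content()
            context.close()
        browser.close()
    return pages
```

Each location gets a fresh context so cookies and saved addresses from one city do not leak into the next. In practice, the emulated coordinates are usually paired with a proxy in the same region so that the IP and the reported position agree.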

How Will You Benefit from Quick Commerce Web Scraping?

Quick commerce web scraping provides significant benefits that directly impact business success.

These benefits stem from the real-time, actionable data gathered through scraping.


By pulling price, stock, and trend data from instant-delivery apps every few minutes, you feed your team’s decisions with accurate, timely information.

You reorder best-sellers before they vanish. You tweak prices when rivals launch flash discounts. In a market where minutes can cost thousands, that real-time edge keeps your customers happy and your sales growing.

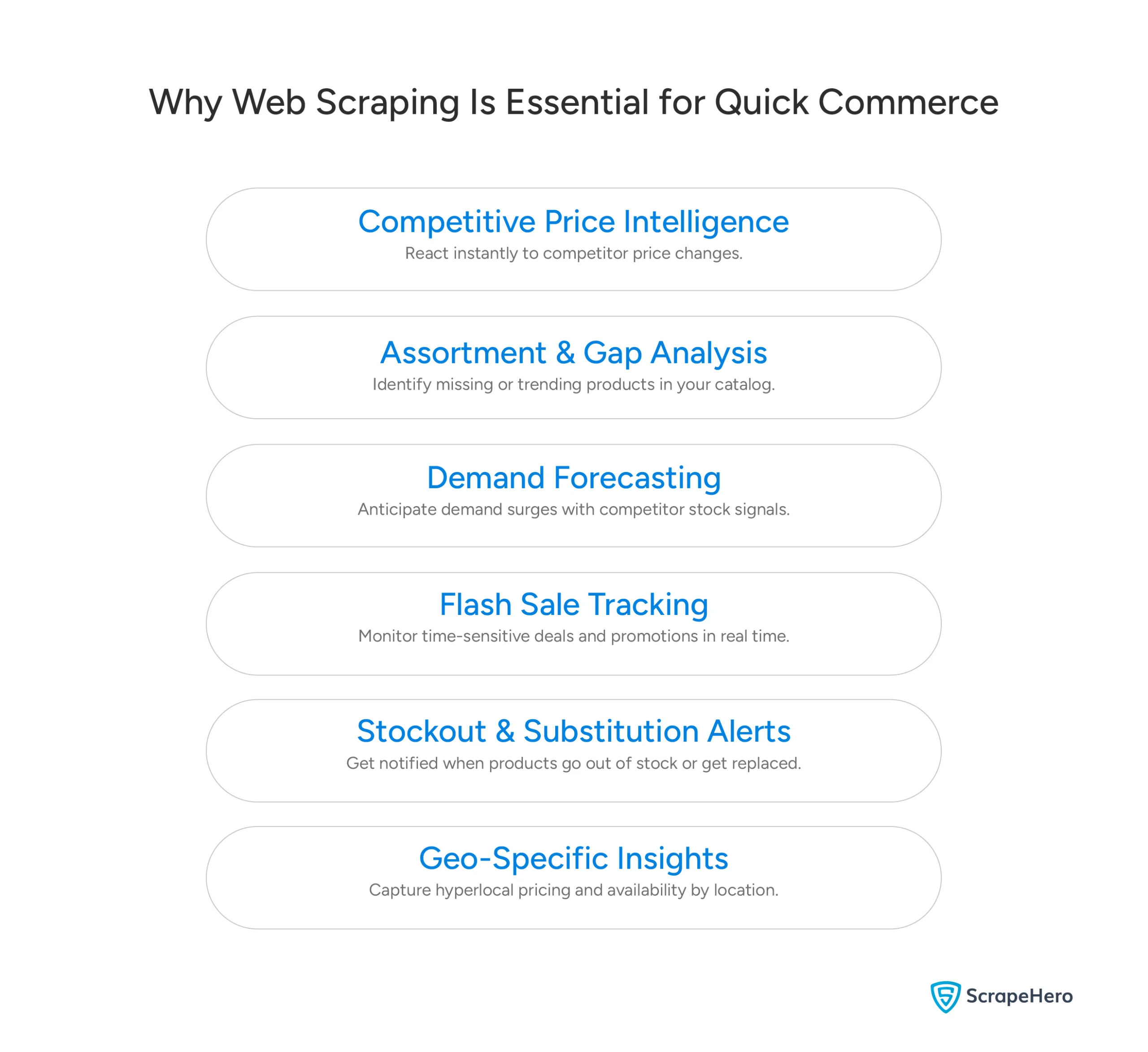

Here’s why it’s indispensable:

- Competitive Price Intelligence

- Assortment & Gap Analysis

- Demand Forecasting

- Flash Sale Tracking

- Stockout & Substitution Alerts

- Geo-Specific Insights

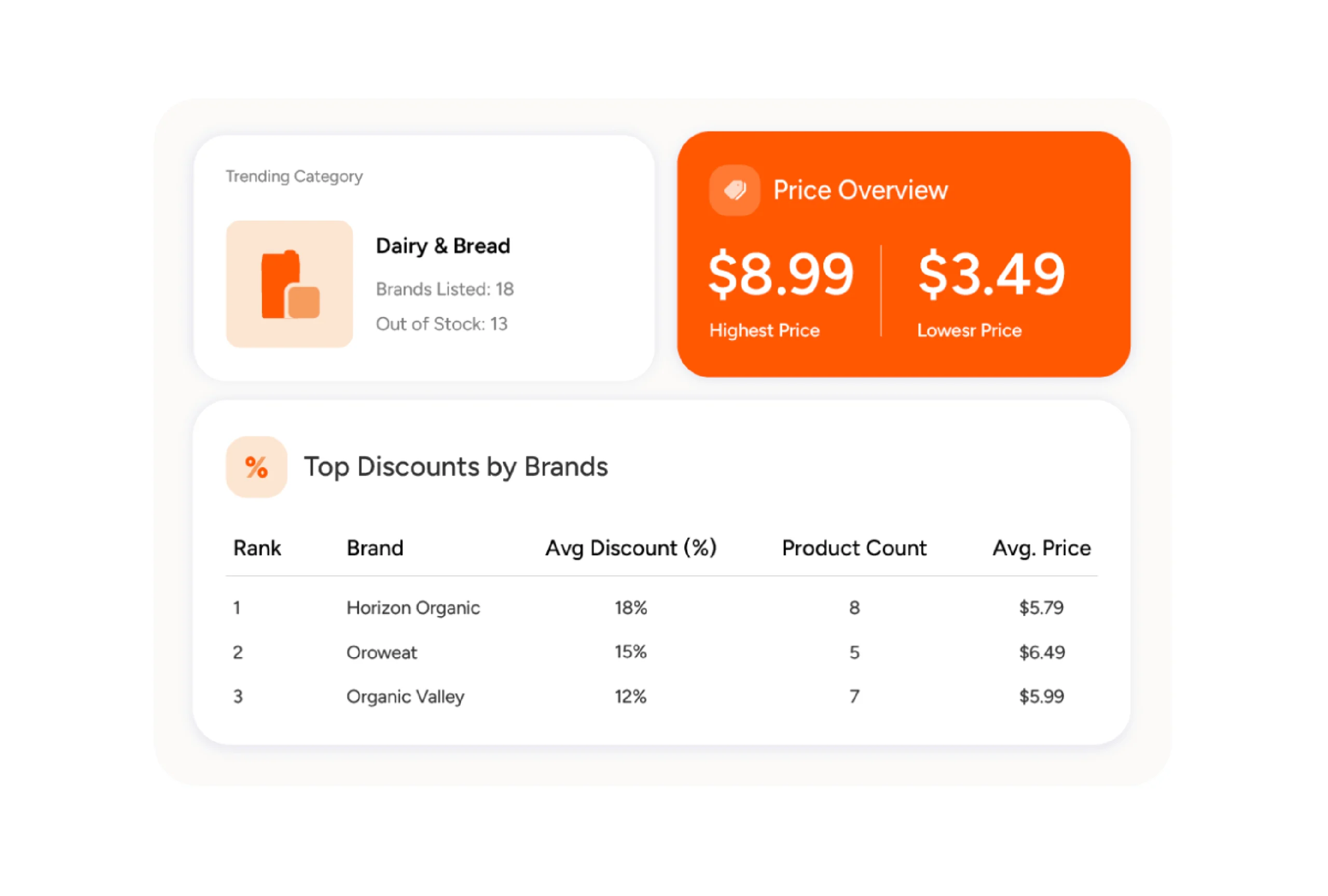

Competitive Price Intelligence through Quick Commerce Web Scraping

Quick commerce web scraping enables businesses to track real-time pricing across platforms like Blinkit, Zepto, and Instamart.

(Given above is a sample representation of how quick commerce data, such as price ranges, stockouts, and brand discounts, can be visualized for actionable insights.)
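At its simplest, price intelligence means lining up the same SKU across platforms and flagging where you are being undercut. Below is a minimal sketch. It assumes products have already been matched to a shared SKU key (itself a nontrivial step) and that prices have been normalized to `Decimal`. The platform names and prices in the usage are made up.

```python
from decimal import Decimal


def undercut_report(own: dict[str, Decimal],
                    competitors: dict[str, dict[str, Decimal]]) -> list[dict]:
    """For each SKU, find the cheapest competitor and how far below our price it sits."""
    report = []
    for sku, our_price in own.items():
        offers = {platform: prices[sku]
                  for platform, prices in competitors.items() if sku in prices}
        if not offers:
            continue  # no competitor lists this SKU
        platform, best = min(offers.items(), key=lambda kv: kv[1])
        if best < our_price:
            report.append({
                "sku": sku,
                "our_price": our_price,
                "cheapest_platform": platform,
                "cheapest_price": best,
                "gap_pct": ((our_price - best) / our_price * 100).quantize(Decimal("0.1")),
            })
    # Largest gaps first, so pricing teams see the most exposed SKUs at the top.
    return sorted(report, key=lambda row: row["gap_pct"], reverse=True)
```

For example, with our price for `"MILK-1L"` at `Decimal("68")` and `"platform_a"` selling it at `Decimal("64")`, the report lists a gap of `Decimal("5.9")` percent.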

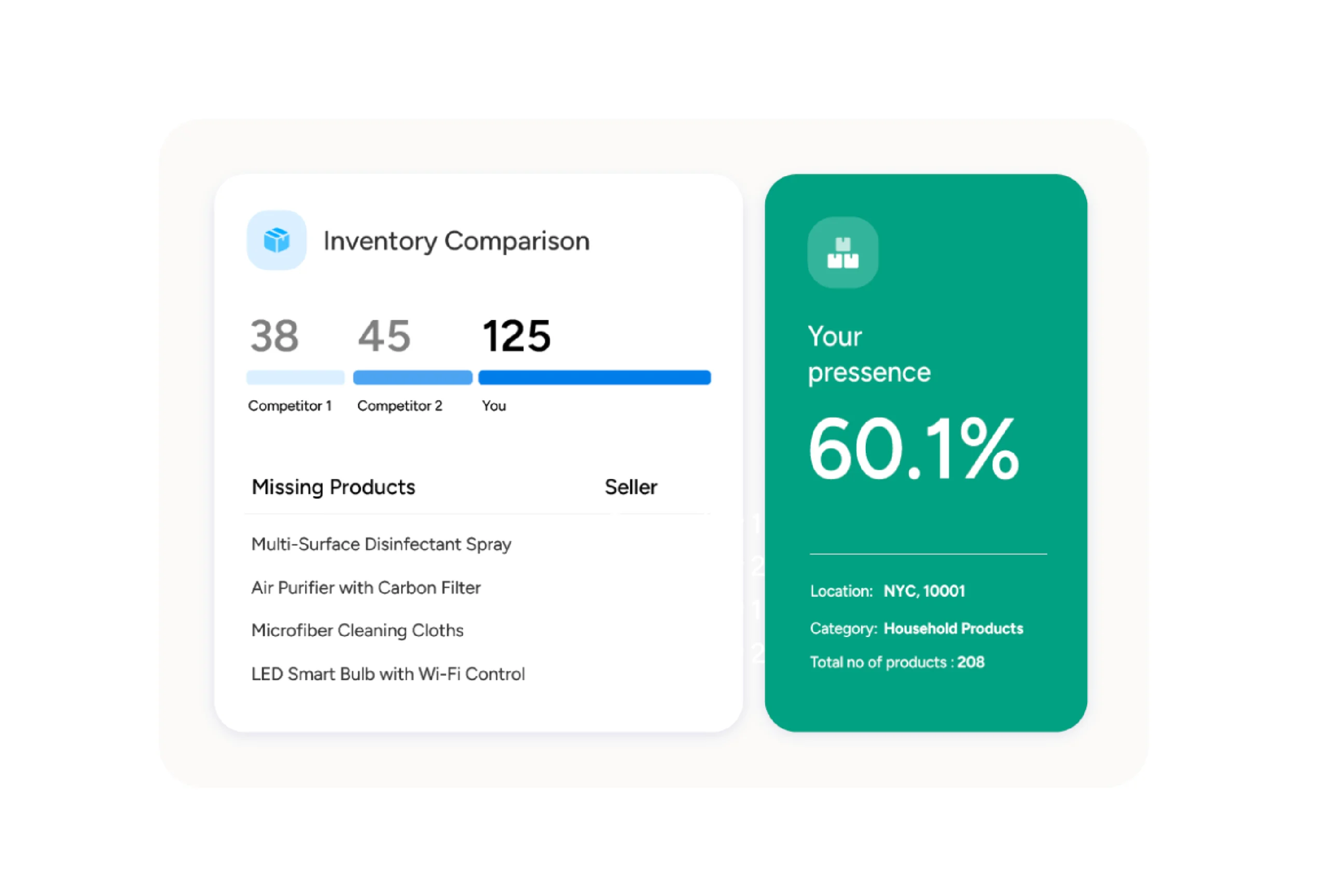

Assortment & Gap Analysis

Using quick commerce web scraping, businesses can analyze competitors’ product assortments and identify gaps in their own offerings.

For instance, in our analysis of the Personal Care category on Blinkit, we found that over 10,000 products, more than 1 in 5 listings, were out of stock. That’s a significant chunk of unavailable inventory, and without scraping this data, it’s easy to miss assortment gaps or overestimate availability.

That’s critical for planning new listings and avoiding lost sales.
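A figure like this comes from a simple aggregation over scraped listings. The sketch below computes the out-of-stock rate per category. The records are made-up sample data, not the Blinkit analysis cited above, and the `category` and `availability` keys are assumptions about how the scraped records are stored.

```python
from collections import defaultdict


def out_of_stock_share(listings: list[dict]) -> dict[str, float]:
    """Fraction of listings per category whose availability is 'out_of_stock'."""
    totals: dict[str, int] = defaultdict(int)
    missing: dict[str, int] = defaultdict(int)
    for item in listings:
        totals[item["category"]] += 1
        if item["availability"] == "out_of_stock":
            missing[item["category"]] += 1
    return {cat: missing[cat] / totals[cat] for cat in totals}


sample = [
    {"category": "personal-care", "availability": "out_of_stock"},
    {"category": "personal-care", "availability": "in_stock"},
    {"category": "personal-care", "availability": "in_stock"},
    {"category": "personal-care", "availability": "in_stock"},
    {"category": "snacks", "availability": "in_stock"},
]
print(out_of_stock_share(sample))  # {'personal-care': 0.25, 'snacks': 0.0}
```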

Forecast Demand, Optimize Inventory

Quick commerce web scraping makes demand forecasting more accurate by supplying real-time data on customer preferences.

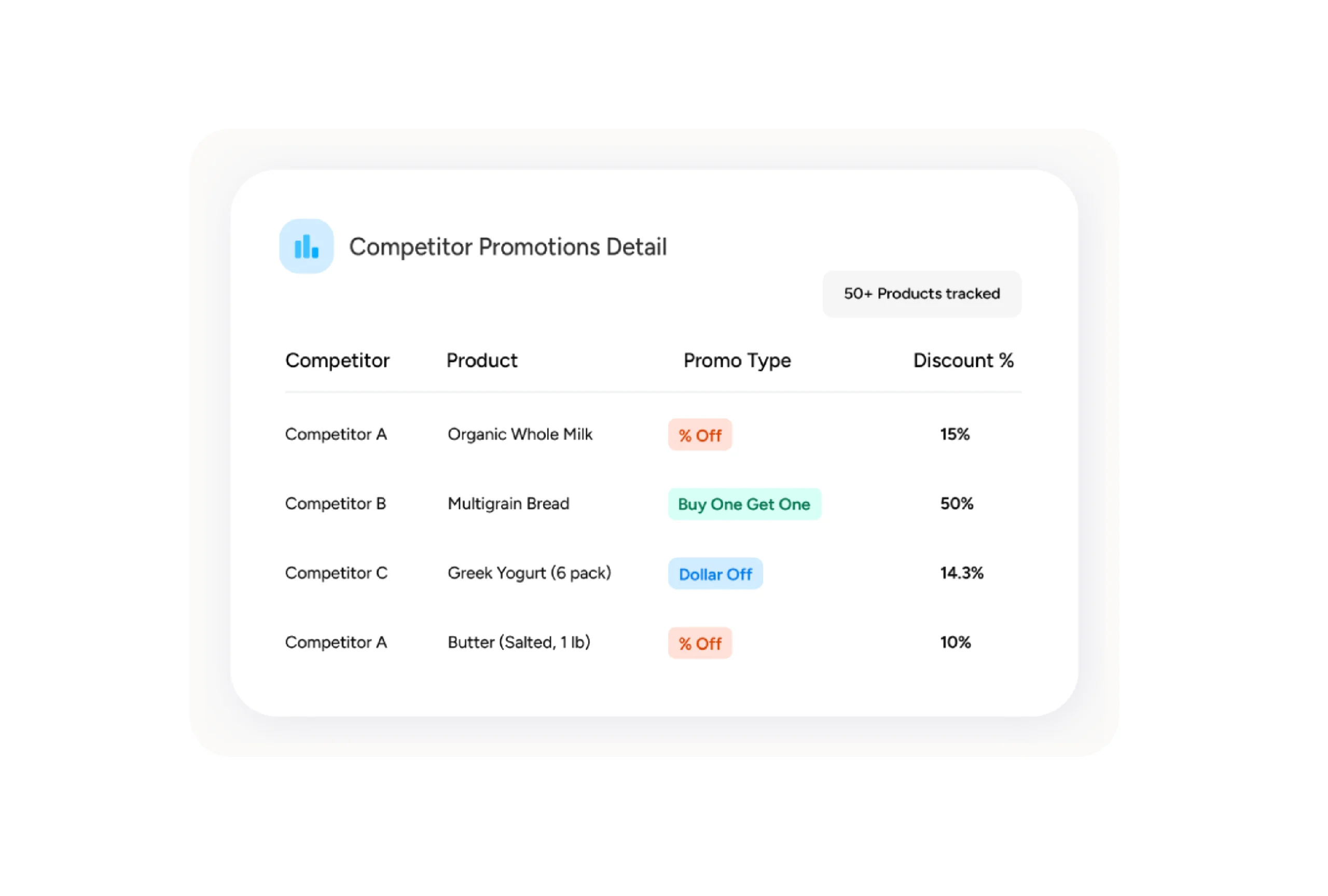

Flash Sales Tracking

Q-commerce apps thrive on urgency: limited-time offers, BOGO deals, and app-only drops. Quick commerce data scraping lets you catch limited‑time discounts the moment they go live.
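Catching a flash sale as it goes live usually comes down to diffing consecutive snapshots. Below is a minimal sketch. It assumes each snapshot maps a SKU to its current selling price and list price, and it uses an arbitrary 15% threshold for illustration.

```python
from decimal import Decimal

Snapshot = dict[str, tuple[Decimal, Decimal]]  # sku -> (selling_price, list_price)


def new_deals(previous: Snapshot, current: Snapshot,
              min_discount_pct: Decimal = Decimal("15")) -> list[str]:
    """SKUs whose discount crossed the threshold since the previous snapshot."""

    def discount(price: Decimal, list_price: Decimal) -> Decimal:
        return (list_price - price) / list_price * 100 if list_price > 0 else Decimal(0)

    alerts = []
    for sku, (price, list_price) in current.items():
        now = discount(price, list_price)
        before = discount(*previous[sku]) if sku in previous else Decimal(0)
        # Alert only on the transition, not on deals that were already running.
        if now >= min_discount_pct > before:
            alerts.append(sku)
    return sorted(alerts)
```

Comparing against the previous snapshot, instead of alerting on every discounted item, means each deal triggers one alert when it starts rather than on every crawl while it runs.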

Stockouts and Substitution Alerts

Quick commerce web scraping is essential for monitoring stock levels and receiving alerts on stockouts or substitutions.
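Here is a minimal sketch of such an alert. It compares two availability snapshots, reports SKUs that have just gone out of stock, and suggests in-stock items from the same category as substitutes. The snapshot shape is an assumption. Real substitution logic would also weigh brand, pack size, and price.

```python
def stockout_alerts(previous: dict[str, dict], current: dict[str, dict],
                    max_suggestions: int = 3) -> dict[str, list[str]]:
    """Map each newly out-of-stock SKU to in-stock SKUs from the same category.

    Snapshots map sku -> {"category": str, "in_stock": bool}.
    """
    alerts = {}
    for sku, item in current.items():
        was_in_stock = previous.get(sku, {}).get("in_stock", False)
        if was_in_stock and not item["in_stock"]:
            alerts[sku] = sorted(
                other for other, info in current.items()
                if other != sku and info["in_stock"] and info["category"] == item["category"]
            )[:max_suggestions]
    return alerts
```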

Geo-Specific Pricing and Availability

Quick commerce web scraping allows businesses to gather geo-specific data from various platforms, which is essential for tailored marketing and pricing strategies.
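One simple geo-specific metric is the spread between a SKU's cheapest and most expensive location. Below is a minimal sketch. It assumes observations have already been scraped per ZIP code and normalized to `Decimal`; the sample values in the usage are made up.

```python
from collections import defaultdict
from decimal import Decimal


def price_spread_by_location(observations: list[tuple[str, str, Decimal]]) -> dict[str, dict]:
    """observations: (sku, zip_code, price). Returns min/max price per SKU and where each occurs."""
    by_sku: dict[str, dict[str, Decimal]] = defaultdict(dict)
    for sku, zip_code, price in observations:
        by_sku[sku][zip_code] = price  # a later observation for the same ZIP overwrites earlier ones
    summary = {}
    for sku, prices in by_sku.items():
        low_zip = min(prices, key=prices.get)
        high_zip = max(prices, key=prices.get)
        summary[sku] = {
            "min": (low_zip, prices[low_zip]),
            "max": (high_zip, prices[high_zip]),
            "spread": prices[high_zip] - prices[low_zip],
        }
    return summary
```

For example, an item observed at `Decimal("180")` in one ZIP code and `Decimal("195")` in another yields a spread of `Decimal("15")`. That is the kind of gap a location-specific pricing or promotion decision would start from.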

Who Puts This Data to Work? Real-World Use Cases

The data extracted through quick commerce web scraping offers businesses the ability to gain real-time, actionable insights that can be applied across various industries.

Here are some key use cases where this data can make a significant impact:

1. Market Intelligence Firms

Market intelligence firms rely heavily on real-time data to provide competitive insights and industry forecasts. Quick commerce web scraping allows these firms to:

- Track competitor activities: Scraping real-time product data, pricing, stock levels, and promotions from platforms like Zepto, Blinkit, and Instamart helps market intelligence firms monitor competitor strategies and pricing models.

- Analyze market trends: By gathering product information from multiple quick commerce platforms, these firms can identify emerging trends, popular products, and shifting consumer preferences.

- Conduct market analysis: Firms can aggregate and analyze scraped data to deliver detailed reports and recommendations to businesses on market entry, product assortment, and regional performance.

2. Pricing Engines for Competing Platforms

Quick commerce web scraping plays a critical role in pricing optimization. Businesses with pricing engines use the data scraped from quick commerce platforms to:

- Monitor competitor prices: Real-time pricing data from multiple platforms helps businesses adjust their own prices dynamically, ensuring they remain competitive.

- Adjust pricing in real-time: As promotions or price changes happen on competitor platforms, scraping data allows businesses to immediately react with dynamic pricing strategies.

- Manage pricing strategy for multiple platforms: With the ability to track prices across various quick commerce platforms, businesses can ensure consistent pricing across channels, increasing transparency and customer trust.

3. FMCG Brands Tracking Placement

Fast-moving consumer goods (FMCG) brands benefit significantly from quick commerce web scraping as they strive to maintain product visibility and manage inventory on multiple platforms. Scraping helps FMCG brands:

- Track product placement and visibility: Scraping data on where their products appear on various platforms allows FMCG brands to identify if their products are being pushed down the search results or buried under competing brands.

- Monitor stock levels and product availability: Real-time data scraping helps FMCG brands track whether their products are in stock across different locations and if they are featured in key promotions or flash sales.

- Analyze competitor placement strategies: By scraping competitor products, FMCG brands can gain insights into their placement and ensure their own products are displayed prominently.

4. Retail Analytics Firms

Retail analytics firms gather and analyze large volumes of data to help businesses optimize their retail operations, from inventory management to customer engagement. By using quick commerce web scraping, retail analytics firms can:

- Track product availability and stockouts: Scraping real-time data enables retail analysts to track when a product is out of stock and identify inventory gaps across various platforms, helping businesses improve their supply chain management.

- Analyze consumer behavior and trends: Data such as customer reviews, ratings, and product popularity helps analysts forecast demand and make better decisions about product assortments.

- Provide pricing and promotional insights: By scraping competitor pricing and promotional activity, retail analysts help businesses adapt their strategies to maintain market leadership.

5. Hyperlocal Delivery Aggregators

Quick commerce web scraping is particularly useful for hyperlocal delivery aggregators who need to track real-time product data across various platforms in specific regions. These aggregators rely on scraped data to:

- Monitor local pricing and availability: By scraping product data based on geographical location, aggregators can determine the best prices and product availability in real-time, helping customers find what they need faster.

- Optimize delivery routes: By scraping data on product availability and delivery times, aggregators can optimize delivery routes based on inventory levels and customer demand in specific neighborhoods or areas.

- Track local demand patterns: Aggregators can gain insights into which products are in high demand in specific regions, allowing them to tailor their service offerings and provide customers with real-time recommendations.

What are the Advantages of Letting an Expert Scrape Quick Commerce Data for You?

While DIY web scraping for quick commerce is possible, it comes with challenges that can be overwhelming. Letting an expert handle the scraping process has several key advantages, ensuring that data is accurate, timely, and actionable.

- Expert Knowledge and Experience

- Real-Time, Accurate Data

- Efficient Scaling Across Regions

- Ongoing Maintenance & Monitoring

- Handling Legal and Compliance Issues

- Time and Resource Efficiency

- Customization and Flexibility

Expert Knowledge and Experience

Scraping quick commerce platforms requires a deep understanding of how these platforms work, including how they load data, manage locations, and handle user interactions.

Experts bring this experience, ensuring that scrapers run smoothly across web and app platforms, and that complex barriers like JavaScript rendering, CAPTCHAs, and geo-restrictions are handled effectively.

Why worry about expensive infrastructure, resource allocation, and complex websites when ScrapeHero can scrape for you at a fraction of the cost? Go the hassle-free route with ScrapeHero.

Real-Time, Accurate Data

Web scraping experts set up systems to pull data in real time, ensuring up-to-the-minute insights on pricing, stock levels, and promotions. This allows you to make fast, data-driven decisions and avoid the problems caused by outdated or inaccurate data.

Efficient Scaling Across Regions

When managing multiple regions, categories, and thousands of SKUs, scalability is crucial. Experts know how to scale scraping efforts across multiple cities and categories, ensuring you get comprehensive data without the risk of overload or inefficiency.

Ongoing Maintenance & Monitoring

Scraping quick commerce platforms requires constant monitoring. Experts handle routine updates to scrapers, ensuring they continue to work as app and site layouts change. With ongoing maintenance, you avoid the headaches of broken scripts or missing data.

Handling Legal and Compliance Issues

Experienced professionals understand the legal complexities of scraping. They respect robots.txt, stay within the boundaries of terms of service, and use ethical scraping methods. This minimizes the risk of IP bans or legal complications.

Time and Resource Efficiency

Building and maintaining a DIY scraper takes time and technical expertise. By outsourcing to an expert, you free up internal resources to focus on higher-priority tasks, like analyzing the data and making strategic decisions.

Customization and Flexibility

Experts can tailor scraping solutions to meet specific business needs. Whether it’s scraping a specific set of products or tracking competitor promotions across different platforms, experts can create a solution that’s customized for your goals.

When your business depends on real-time, hyperlocal data to fulfill orders in minutes, you need more than generic scraping tools. Here’s why choosing an experienced web scraping service like ScrapeHero gives you an edge.

Advantages of Partnering With ScrapeHero for Web Scraping in Quick Commerce

ScrapeHero is a full-service data provider with decades of experience. By choosing us, you’re not just getting data; you’re getting highly specialized services designed to address the specific challenges of quick commerce.

- End-to-End Managed Service

We handle everything, from data extraction and cleansing to delivery, so your team can focus on strategy rather than maintenance.

- Enterprise-Scale Infrastructure

Our distributed crawling network processes millions of pages per day at thousands of pages per second, handles complex JavaScript/AJAX sites and CAPTCHAs, and self-heals when sites change.

- Unmatched Data Quality

AI-powered validation plus manual QA ensures 99% accuracy. Automated alerts notify you the moment a site’s structure shifts, so you never miss critical updates.

- Rapid, Responsive Support

With a 98% customer retention rate and average response times under one hour during business hours, our experts are ready to troubleshoot or optimize your project at a moment’s notice.

- Tailored Q-Commerce Solutions

We’ve built specialized workflows for platforms like Blinkit, Zepto, and Swiggy Instamart, capturing geo-targeted pricing, stock levels, and flash-sale data.

- Flexible Delivery & Integration

Receive data in your choice of format (JSON, CSV, database dumps, or real-time APIs) and integrate seamlessly with S3, Azure, Snowflake, or your in-house systems.

- Advanced Anti-Blocking Techniques

Our team stays ahead of IP blacklisting, rate limits, and bot defenses using rotating proxies, headless browsers, and intelligent request patterns, so your data flow remains uninterrupted.

- Custom AI & Analytics Add-Ons

Beyond scraping, we can build bespoke AI models using your data, perform sentiment analysis on reviews, develop demand-forecasting algorithms, or create competitor-price-prediction engines for deeper insights.

- Compliance & Ethical Standards

We respect robots.txt, site terms, and data privacy regulations. As a conduit for publicly available data, we don’t store or resell sensitive information, so you can trust both the legality and integrity of your data pipeline.

Get the quick commerce data you need and turn it into actionable strategies with the ScrapeHero web scraping service. Get in touch with us today.

FAQ

Publicly visible data such as product names, prices, discounts, stock status, delivery slot availability, and category listings.

Yes, with techniques like ZIP code simulation and rotating proxies, you can capture geo-specific pricing, delivery options, and stock levels.

Scraping publicly available data is generally legal, but it must be done ethically and in compliance with site terms and local data laws. Learn more in our web scraping legal guide.

It depends on your goals, but for real-time pricing, stock, and promotion tracking, scraping intervals often range from every 5 minutes to hourly.

Data can be delivered via APIs, S3, CSVs, or databases, depending on your setup, and plugged into pricing engines, BI dashboards, or inventory management systems.

Yes. Scrapers can monitor and flag time-sensitive offers, BOGO deals, and discounts the moment they go live.

Using distributed IPs, headless browsers, smart request pacing, and anti-CAPTCHA tools helps prevent detection and ensure uninterrupted scraping.

In-app data only tells you about your own performance. Web scraping gives you competitive intelligence, such as pricing, assortment, and stock visibility across rivals, which in-app tools can’t provide.

DIY scrapers require constant updates, infrastructure, and legal awareness. A provider like ScrapeHero offers fully managed, scalable, and compliant solutions that save time and reduce risk.