Did you know that most of the data collected from the web is unstructured, making it difficult to analyze directly?

This creates a need for efficient normalization and standardization techniques that maximize data usability.

In this article, we discuss normalization and standardization in scraped data to ensure consistency and quality for deeper insights.

Before that, let’s have a quick look at the concept of data normalization and data standardization.

What is Data Normalization?

Data normalization is a technique that adjusts and scales data values to fit within a specific range, commonly between 0 and 1.

The primary goal of normalization is to ensure that different datasets are compared on a uniform scale, especially when dealing with features that have varying units or magnitudes.

For instance, you can use normalization to convert all prices scraped from various e-commerce websites into a consistent range for straightforward comparisons.

What is Data Standardization?

Data standardization is the process of transforming the scraped data so that it has a mean of zero and a standard deviation of one.

This standardization of data retains the original relationships within the dataset and ensures that no single variable dominates due to differing scales.

For example, you can standardize review ratings scraped from various e-commerce websites by converting them to a common scale, which simplifies analysis.

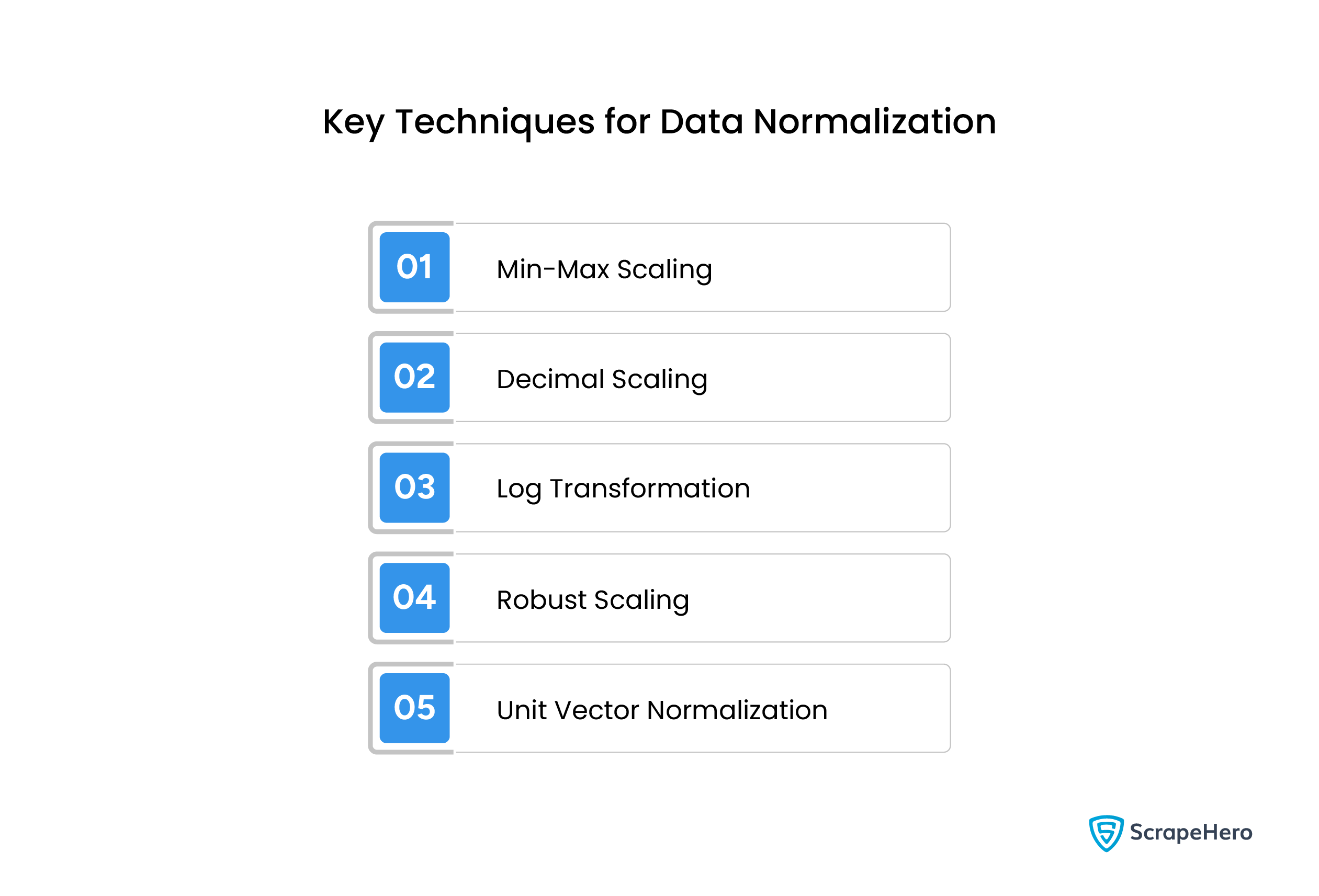

Data Normalization Techniques

Here are some popular data normalization techniques to scale all values in a dataset to a uniform range.

1. Min-Max Scaling

Min-max scaling is a normalization technique that rescales data within a defined range, usually between 0 and 1, preserving the relationships among values without distorting the dataset’s distribution.

For example, min-max scaling would transform a dataset containing ages ranging from 18 to 65 into a proportional range of 0 to 1, maintaining their relative differences.
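As a minimal sketch of the formula behind min-max scaling, x' = (x - min) / (max - min), the snippet below rescales a few ages between 18 and 65. The sample ages and variable names are illustrative assumptions, not data from a real scrape.

```python
# Min-max scaling: x' = (x - min) / (max - min)
# The ages below are made-up sample values for illustration.
ages = [18, 25, 40, 65]

lowest, highest = min(ages), max(ages)
value_range = highest - lowest  # assumed non-zero: the ages are not all equal

scaled_ages = [(age - lowest) / value_range for age in ages]

for age, scaled in zip(ages, scaled_ages):
    print(f"{age} -> {scaled:.3f}")
```

The youngest age maps to 0 and the oldest to 1. If every value were identical, the range would be zero, so real code would need to handle that case separately.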

2. Decimal Scaling

Decimal scaling normalizes data, such as geographic coordinates or monetary figures, by moving the decimal point according to the largest absolute value in the dataset.

Values like 123, 4567, and 89012 would be transformed into 0.00123, 0.04567, and 0.89012, respectively, using decimal scaling, making them more manageable for analysis.
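The example above can be sketched in a few lines of plain Python. This is only an illustration that assumes whole-number inputs of at least 1; the variable names are hypothetical.

```python
# Decimal scaling: x' = x / 10**j, where j is the smallest integer
# that brings the largest absolute value below 1.
values = [123, 4567, 89012]

max_abs = max(abs(v) for v in values)
# Assumption: whole numbers >= 1, so j equals the digit count of max_abs.
j = len(str(int(max_abs)))

scaled_values = [v / 10**j for v in values]
print(j, scaled_values)
```

Here the largest value has five digits, so every value is divided by 10 to the power of 5.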

3. Log Transformation

Log transformation is a technique that applies a logarithmic function to data, compressing large variations.

It is especially effective for datasets with exponential growth patterns, because converting values to their logarithmic equivalents makes them easier to analyze.
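A short sketch using Python's built-in math module shows the compression effect. The page-view counts are hypothetical, and the choice of base 10 is an assumption made for readability; other bases work the same way.

```python
import math

# Hypothetical page-view counts that grow tenfold at each step.
page_views = [10, 100, 1_000, 10_000, 100_000]

# Base-10 log turns multiplicative growth into evenly spaced steps.
log_views = [math.log10(v) for v in page_views]

# log1p(x) = ln(1 + x) is a common variant when the data can contain zeros.
log1p_views = [math.log1p(v) for v in [0] + page_views]

print(log_views)
print(log1p_views)
```

Values that span four orders of magnitude end up one unit apart, which is easier to plot and compare. Note that a plain logarithm is undefined for zero and negative values.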

4. Robust Scaling

Robust scaling is a method that normalizes data using the median and the interquartile range (IQR).

It is ideal for datasets with a few very high or low values because it keeps outliers from dominating the overall data distribution.
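The following sketch applies the formula (x - median) / IQR with Python's statistics module. The sample values are made up for illustration, and the "inclusive" quartile method is an assumption chosen because it interpolates linearly between data points; scikit-learn's RobustScaler applies the same median and IQR idea to dataframes.

```python
import statistics

# Illustrative values with one extreme outlier.
values = [1, 2, 3, 4, 100]

median = statistics.median(values)
# quantiles(..., n=4) returns the three quartile cut points (Q1, Q2, Q3).
q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
iqr = q3 - q1

robust_scaled = [(v - median) / iqr for v in values]
print(median, iqr, robust_scaled)
```

The outlier remains large after scaling, but it no longer influences how the other values are centered and spread.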

5. Unit Vector Normalization

Unit vector normalization is a technique that transforms data by dividing each value in a vector by the vector’s magnitude (its Euclidean length).

It is commonly used where vectorized data like [3, 4] needs normalization to [0.6, 0.8] to prevent large values from dominating the analysis.
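The [3, 4] example can be reproduced with a few lines of Python. This sketch assumes the Euclidean (L2) norm as the magnitude.

```python
import math

vector = [3, 4]

# Euclidean (L2) norm: sqrt(3**2 + 4**2) = 5
magnitude = math.sqrt(sum(x * x for x in vector))

unit_vector = [x / magnitude for x in vector]
print(unit_vector)
```

The resulting vector points in the same direction as the original but has a length of 1.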

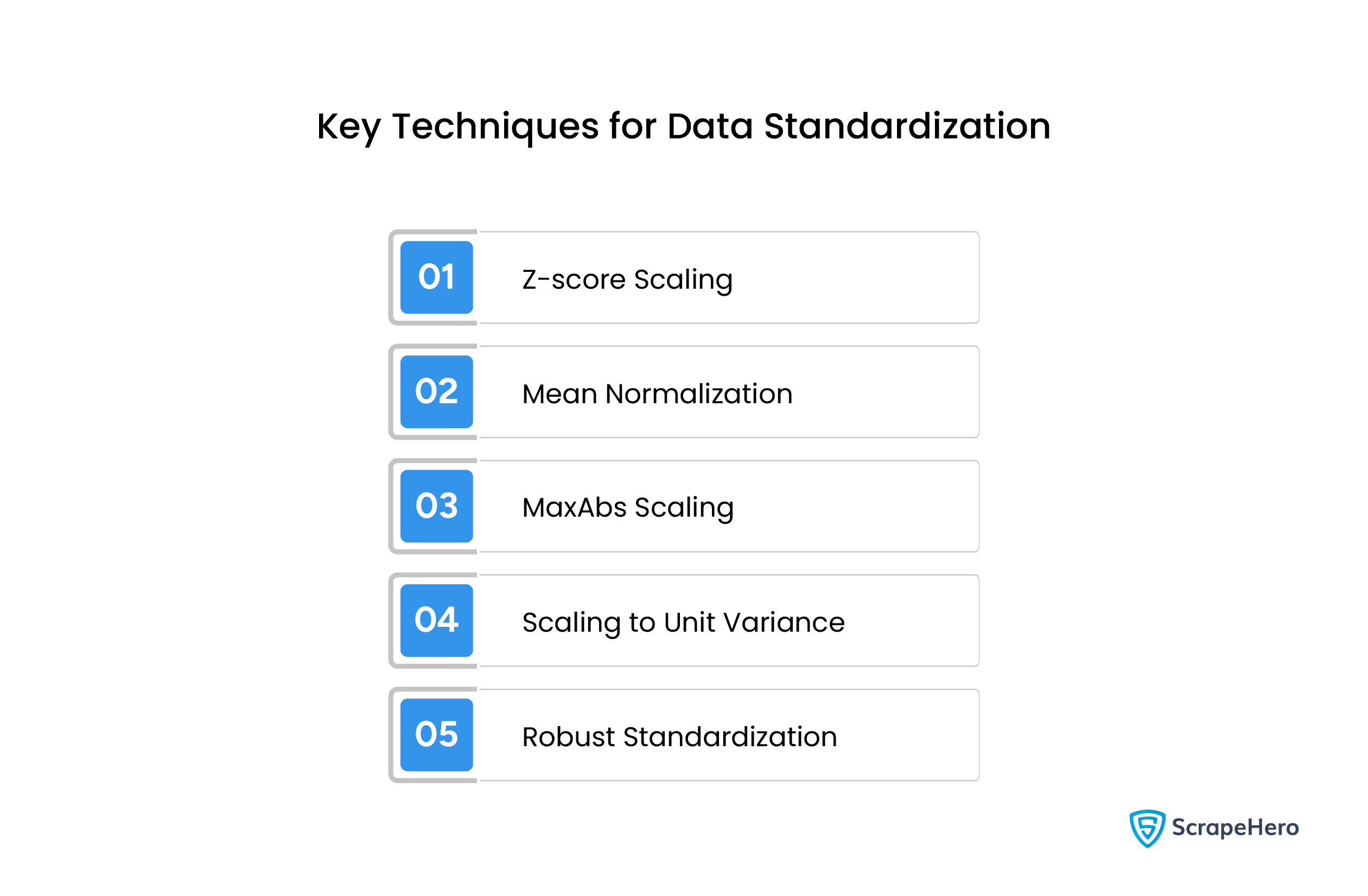

Data Standardization Techniques

Here are key methods to standardize data from web scraping so that datasets are centered and scaled for algorithms that are sensitive to varying feature magnitudes.

1. Z-score Scaling

Z-score scaling is a technique that standardizes data by subtracting the mean from each value and dividing by the standard deviation, so that the result has a mean of zero and a standard deviation of one.

It is helpful for datasets with features on varying scales because it centers and scales all features, making them comparable.
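As a rough sketch, the z-score formula z = (x - mean) / std can be written with Python's statistics module. The prices are sample values, and using the population standard deviation (dividing by n) is an assumption made here because scikit-learn's StandardScaler does the same.

```python
import statistics

# Sample prices used for illustration.
prices = [200, 300, 150, 400]

mean = statistics.mean(prices)
# Population standard deviation (divides by n, not n - 1).
std = statistics.pstdev(prices)

z_scores = [(p - mean) / std for p in prices]
print([round(z, 3) for z in z_scores])
```

Prices below the mean of 262.5 get negative scores and prices above it get positive ones.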

2. Mean Normalization

Mean normalization is a method that transforms data by subtracting the mean of the dataset from each data point and dividing by the range of the dataset.

It is ideal for datasets without significant outliers and provides a straightforward adjustment that centers the data around zero.
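A minimal sketch of mean normalization, x' = (x - mean) / (max - min), is shown below. The prices are sample values chosen for illustration.

```python
import statistics

prices = [200, 300, 150, 400]

mean = statistics.mean(prices)
value_range = max(prices) - min(prices)  # assumed non-zero

mean_normalized = [(p - mean) / value_range for p in prices]
print(mean_normalized)
```

The results are centered on zero and always fall between -1 and 1, since no value can be further from the mean than the full range.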

3. MaxAbs Scaling

MaxAbs scaling is a technique that normalizes data by dividing each value by the maximum absolute value in the dataset.

It is effective for sparse datasets because zero values stay zero, which preserves the sparsity of the data, and it is often used for datasets with varying magnitudes but similar distributions.
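The sketch below illustrates MaxAbs scaling on a small, mostly-zero list. The values are hypothetical; for dataframes, scikit-learn offers a MaxAbsScaler class built on the same idea.

```python
# Illustrative sparse feature: most entries are zero.
values = [0, -4, 0, 0, 2, 0, 8]

max_abs = max(abs(v) for v in values)  # assumed non-zero
max_abs_scaled = [v / max_abs for v in values]

print(max_abs_scaled)
```

Because the data is only divided and never shifted, every zero remains zero and the signs of the other values are kept.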

4. Scaling to Unit Variance

Scaling to unit variance is a method that divides each data value by the dataset’s standard deviation.

It creates a dataset where all features have unit variance and is helpful in scenarios where the variance of features needs to be equal.
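The following sketch divides a small sample by its standard deviation and checks the resulting variance. The values are illustrative, and the population standard deviation is an assumption of this example.

```python
import statistics

values = [2, 4, 4, 4, 5, 5, 7, 9]

std = statistics.pstdev(values)  # population standard deviation
unit_variance = [v / std for v in values]

print(statistics.pvariance(unit_variance))  # variance after scaling
print(statistics.mean(unit_variance))       # mean is rescaled, not zeroed
```

Unlike z-score scaling, this method does not subtract the mean, so the data is not centered on zero.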

5. Robust Standardization

Robust standardization is an effective technique that adjusts data using the median and interquartile range (IQR) instead of the mean and standard deviation.

It is particularly effective for datasets where extreme values, like the 100 in [1, 2, 3, 100], might skew the mean or standard deviation, and it produces a more balanced dataset.

Steps to Normalize and Standardize Data in Python

Data normalization and data standardization are essential for analyzing and modeling data.

Using Python libraries such as pandas and scikit-learn, you can perform these tasks efficiently. Below is a step-by-step guide:

1. Setting Up Python Environment

Ensure that pandas and scikit-learn are installed and imported. Pandas is used for handling dataframes, while MinMaxScaler and StandardScaler from scikit-learn provide easy-to-use tools for normalization and standardization.

import pandas as pd

from sklearn.preprocessing import MinMaxScaler, StandardScaler

2. Data Normalization (Min-Max Scaling)

Normalization is beneficial when data values have varying scales but need to be compared on an equal footing.

# Example dataset

data = {'Price': [200, 300, 150, 400]}

df = pd.DataFrame(data)

# Applying Min-Max Scaling

scaler = MinMaxScaler()

df['Normalized_Price'] = scaler.fit_transform(df[['Price']])

print(df)

Here, the prices (200, 300, 150, 400) are scaled to a range of 0 to 1. The minimum value in the dataset (150) becomes 0, the maximum (400) becomes 1, and 200 and 300 become 0.2 and 0.6.

3. Data Standardization (Z-score Scaling)

Standardization is beneficial for machine learning algorithms sensitive to feature scales.

# Applying Z-score Scaling

std_scaler = StandardScaler()

df['Standardized_Price'] = std_scaler.fit_transform(df[['Price']])

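print(df)

As you can see, each price is now expressed relative to the average price (262.5) and the spread of the column. For example, 150 becomes about -1.17 and 400 becomes about 1.43, which makes the values comparable with other standardized features.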

Why Should You Consider ScrapeHero Web Scraping Service?

Data normalization and data standardization are essential techniques that ensure that datasets are prepared for practical analysis.

If the underlying data is inaccurate, it can propagate errors, leading to wrong analyses and decisions.

A web scraping service like ScrapeHero can help you gather accurate, well-structured data, which is crucial for effective data normalization and standardization.

We can offer you scalable, custom scraping solutions that are entirely tailored to your unique business needs, saving you time and reducing your effort.

Frequently Asked Questions

Yes, you can. While normalization adjusts range, standardization adjusts for mean and variance.

Before normalization or standardization, you need to make seasonal and trend adjustments to time series data.

Tools like scikit-learn or statsmodels in Python are helpful for this.

Using StandardScaler from scikit-learn in Python, you can standardize scraped data so that it has a mean of zero and a standard deviation of one.

Data cleaning includes handling missing values, removing duplicates, and detecting outliers.

After cleaning the data, you can apply normalization or standardization based on the analysis needs.

Data normalization and standardization ensure data consistency, improve analysis accuracy, and make the data compatible with various analytical tools.