CAPTCHAs are a popular method of identifying and blocking bots, so they are a major threat to web scraping. Is there a way to skip the CAPTCHA? Yes.

This article discusses some efficient ways to bypass CAPTCHAs while web scraping.

What are CAPTCHAs?

CAPTCHAs (Completely Automated Public Turing tests to Tell Computers and Humans Apart) are security checks that pop up to block various activities, including web scraping.

They block bots and other automated programs by challenging the user with a problem that, ideally, only humans can solve.

Common types of CAPTCHAs include Text-based CAPTCHA, Image-based CAPTCHA, and Audio-based CAPTCHA.

Some anti-bot protection services like Cloudflare also use CAPTCHAs to prevent bots from entering the sites.

Interested in learning the technologies used for bot detection when web scraping? Then, you can read the article on how websites detect bots.

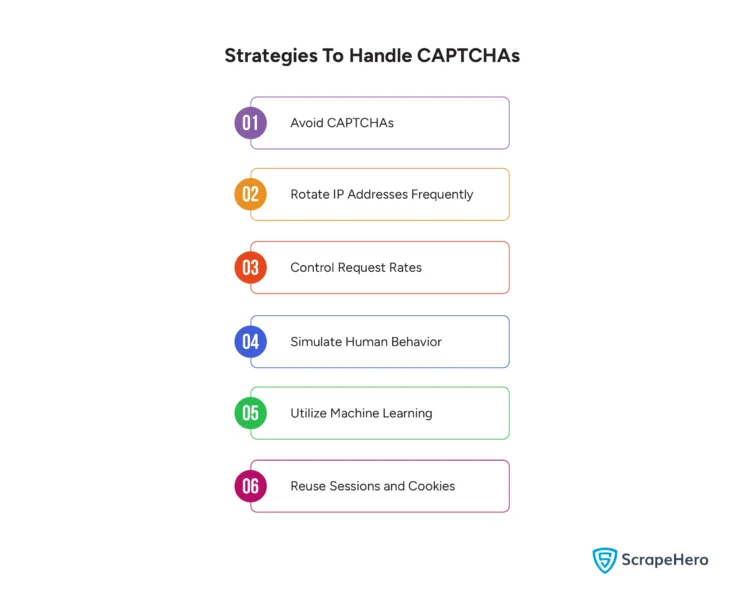

Strategies To Bypass CAPTCHAs While Web Scraping

Bypassing CAPTCHAs while web scraping is challenging because they are designed to prevent automated access.

Still, there are some efficient ways to deal with CAPTCHAs:

- Avoid CAPTCHAs

- Rotate IP Addresses Frequently

- Control Request Rates

- Simulate Human Behavior

- Utilize Machine Learning

- Reuse Sessions and Cookies

1. Avoid CAPTCHAs

At times, rather than bypassing CAPTCHAs, it is wise to avoid them.

- Identify Non-CAPTCHA Pages: Not all pages on a website have CAPTCHAs. You can scrape relevant information from such pages.

- Use APIs: If the website offers APIs, it is advisable to use them. This will ensure that you get the necessary data and do not have to deal with CAPTCHAs.

- Scrape During Off-Peak Hours: When traffic is lower, websites may be less likely to serve CAPTCHAs.
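To find out which pages are CAPTCHA-free, one option is to check each response for common CAPTCHA markers before parsing it. The sketch below is a heuristic, not a reliable detector: the function name is hypothetical, and the marker list (the widget class names used by reCAPTCHA, hCaptcha, and Cloudflare Turnstile) is an assumption that you would extend for the sites you scrape.

```python
# Class names that commonly appear in pages embedding a CAPTCHA widget.
# This list is an illustrative assumption, not an exhaustive rule set.
CAPTCHA_MARKERS = ("g-recaptcha", "h-captcha", "cf-turnstile")


def looks_like_captcha(html: str) -> bool:
    """Heuristically decide whether an HTML page contains a CAPTCHA widget."""
    lowered = html.lower()
    return any(marker in lowered for marker in CAPTCHA_MARKERS)
```

Pages for which `looks_like_captcha` returns `False` can go to your parser directly, while the rest can be skipped or retried later.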

2. Rotate IP Addresses Frequently

Websites often track how many requests arrive from a single IP address, so rotating IP addresses makes your traffic harder to flag as automated.

- Use Proxies: It is best to rotate proxies and distribute your requests across multiple IP addresses to reduce the chances of triggering CAPTCHAs.

- Residential Proxies: Residential proxies route traffic through IP addresses assigned to real households, so they are less likely to be flagged than data center proxies.
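Proxy rotation can be as simple as cycling through a pool in round-robin order. The following is a minimal sketch; the `ProxyRotator` class and the proxy URLs are placeholders invented for illustration, and a production setup would usually also drop proxies that stop working.

```python
from itertools import cycle

# Placeholder proxy URLs; replace them with proxies you are allowed to use.
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]


class ProxyRotator:
    """Hand out proxies from a pool in round-robin order."""

    def __init__(self, proxy_urls):
        if not proxy_urls:
            raise ValueError("at least one proxy URL is required")
        self._pool = cycle(proxy_urls)

    def next_proxies(self):
        """Return a scheme-to-proxy mapping for the next proxy in the pool."""
        proxy = next(self._pool)
        return {"http": proxy, "https": proxy}
```

The returned dictionary has the shape that the requests library accepts, so a call could look like `requests.get(url, proxies=rotator.next_proxies(), timeout=10)`, where `rotator = ProxyRotator(PROXIES)`.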

Here’s an interesting article on how to scrape websites without getting blocked.

3. Control Request Rates

You can manage the request rates to avoid being identified and blocked as a bot.

- Throttle Requests: To avoid triggering rate-limiting mechanisms and CAPTCHAs, make requests at a slower, human-like pace.

- Randomized Delays: You can also introduce random delays between requests, mimicking human browsing behavior.
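Throttling and randomized delays can be combined in one small helper that sleeps for a random interval before each request. This is a sketch under assumptions: the `Throttle` class is hypothetical, and the default bounds of 2 to 6 seconds are arbitrary values that you should tune for the target site.

```python
import random
import time


class Throttle:
    """Wait a random interval between consecutive requests."""

    def __init__(self, min_delay=2.0, max_delay=6.0, sleep=time.sleep):
        if min_delay < 0 or max_delay < min_delay:
            raise ValueError("expected 0 <= min_delay <= max_delay")
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._sleep = sleep  # injectable so the class can be tested without waiting

    def wait(self):
        """Sleep for a random duration and return the number of seconds slept."""
        delay = random.uniform(self.min_delay, self.max_delay)
        self._sleep(delay)
        return delay
```

Calling `throttle.wait()` before every request keeps the pace slow and irregular, which is harder to fingerprint than a fixed interval.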

4. Simulate Human Behavior

Mimicking human browsing behavior is an efficient way to avoid CAPTCHAs during web scraping.

- Browser Automation Tools: To simulate human interactions, you can use tools such as Selenium or Puppeteer. These tools render JavaScript and can interact with pages that show CAPTCHAs, although they do not solve CAPTCHAs on their own.

- Headless Browsers: You can also run browsers in headless mode, without a GUI, and still interact with the webpage as a regular user would.
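One small part of simulating human behavior is typing at an uneven pace instead of filling a field instantly. The helper below is an illustrative sketch: `type_like_human` is a hypothetical name, the pause bounds are assumptions, and `element` can be any object with a `send_keys()` method, such as a Selenium `WebElement`.

```python
import random
import time


def type_like_human(element, text, min_pause=0.05, max_pause=0.25, sleep=time.sleep):
    """Send text one character at a time with a random pause after each key.

    `element` is any object exposing send_keys(), for example a Selenium WebElement.
    """
    for char in text:
        element.send_keys(char)
        # Pause for a random fraction of a second, as a person typing would
        sleep(random.uniform(min_pause, max_pause))
```

With Selenium, this might be called as `type_like_human(driver.find_element(By.NAME, "q"), "web scraping")`, where the field name `q` is only an example.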

5. Utilize Machine Learning

You can also use machine learning models to auto-detect and bypass CAPTCHAs.

- OCR Tools: Using Optical Character Recognition (OCR) tools like Tesseract, you can solve simpler CAPTCHAs like a text CAPTCHA.

- Custom Models: You can develop a custom machine learning model for recognizing and solving CAPTCHAs, although this method is quite complex and resource-intensive.
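For a simple text CAPTCHA, the OCR route might look like the sketch below. It assumes the Tesseract binary plus the `pytesseract` and Pillow packages are installed. The function names and the threshold of 140 are illustrative choices, and distorted or noisy CAPTCHAs will usually need more preprocessing than this.

```python
import re


def clean_ocr_text(raw: str) -> str:
    """Keep only letters and digits, dropping whitespace and OCR noise."""
    return re.sub(r"[^A-Za-z0-9]", "", raw)


def solve_text_captcha(image_path: str, threshold: int = 140) -> str:
    """Binarize the image, run Tesseract on it, and return the cleaned text."""
    # Imported here so clean_ocr_text() stays usable without the OCR stack installed
    from PIL import Image
    import pytesseract

    image = Image.open(image_path).convert("L")  # convert to grayscale
    # Pixels brighter than the threshold become white, the rest black
    binary = image.point(lambda px: 255 if px > threshold else 0)
    # --psm 7 tells Tesseract to treat the image as a single line of text
    raw = pytesseract.image_to_string(binary, config="--psm 7")
    return clean_ocr_text(raw)
```

Calling `solve_text_captcha("captcha_image.png")` returns the recognized characters, which can then be typed into the CAPTCHA input field.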

6. Reuse Sessions and Cookies

Reusing sessions and cookies reduces how often a website challenges you with a CAPTCHA again.

- Session Management: You should maintain and reuse sessions to avoid frequently hitting CAPTCHA pages.

- Cookies and Tokens: You can also use cookies and tokens from a logged-in session if the website offers access to authenticated users.
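Session reuse comes down to keeping cookies between requests and between runs. The sketch below uses only the Python standard library so that it has no dependencies. The function names are hypothetical, and with the requests library a `requests.Session()` object plays a similar role within a single run.

```python
import http.cookiejar
import urllib.request


def open_session(cookie_file):
    """Return (opener, jar), loading cookies saved by an earlier run if present."""
    jar = http.cookiejar.LWPCookieJar(cookie_file)
    try:
        # ignore_discard=True also restores session cookies that have no expiry
        jar.load(ignore_discard=True)
    except FileNotFoundError:
        pass  # first run: nothing has been saved yet
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def save_session(jar):
    """Write the current cookies to the file the jar was created with."""
    jar.save(ignore_discard=True)
```

Requests made with `opener.open(url)` send and store cookies automatically, and calling `save_session(jar)` at the end of a run lets the next run start with the same cookies.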

How Do You Bypass CAPTCHAs Using Puppeteer?

To bypass CAPTCHAs using Puppeteer in Python, you can use Pyppeteer, an unofficial Python port of Puppeteer, and integrate an external CAPTCHA-solving service API with it.

pip install pyppeteer requests

import asyncio

import requests
from pyppeteer import launch


async def solve_captcha_with_service(captcha_image_url):
    """Ask the CAPTCHA-solving service for a solution; return '' on failure."""
    api_key = 'YOUR_CAPTCHA_SERVICE_API_KEY'
    api_url = 'https://api.captchaservice.com/solve'  # Example API endpoint
    try:
        # requests is blocking, so run it in a worker thread (Python 3.9+)
        response = await asyncio.to_thread(
            requests.post,
            api_url,
            json={'apiKey': api_key, 'imageUrl': captcha_image_url},
            timeout=30,
        )
        response.raise_for_status()
        return response.json()['solution']  # Assuming the API returns the CAPTCHA solution
    except (requests.RequestException, KeyError, ValueError) as e:
        print(f'Error solving CAPTCHA: {e}')
        return ''  # An empty string tells the caller that solving failed


async def bypass_captcha():
    browser = await launch(headless=False)  # Launch the browser
    try:
        page = await browser.newPage()
        # Navigate to the page with CAPTCHA
        await page.goto('URL_OF_THE_PAGE_WITH_CAPTCHA')
        # Example: Assuming there's a CAPTCHA solving service API
        captcha_response = await solve_captcha_with_service('CAPTCHA_IMAGE_URL')
        if not captcha_response:
            return  # Nothing to submit without a solution
        # Fill the CAPTCHA response in the form field
        await page.type('#captchaInput', captcha_response)
        # Submit the form or continue with your task
        await page.click('#submitButton')
        # Continue with your automation tasks after bypassing CAPTCHA
    finally:
        await browser.close()  # Close the browser even if a step fails


asyncio.run(bypass_captcha())

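To integrate with a CAPTCHA-solving service, replace URL_OF_THE_PAGE_WITH_CAPTCHA with the actual URL of the page containing the CAPTCHA, and CAPTCHA_IMAGE_URL with the URL of the CAPTCHA image. The selectors #captchaInput and #submitButton are placeholders as well and must match the form on the target page.

Also, adapt the solve_captcha_with_service function to your CAPTCHA-solving service's API. The endpoint (api_url), the request fields, and the response format shown here are only examples.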

How Do You Bypass CAPTCHAs Using Selenium?

Here’s a basic example of bypassing CAPTCHAs using Selenium with a CAPTCHA-solving service like 2Captcha:

import time

import requests
from selenium import webdriver
from selenium.webdriver.common.by import By

API_KEY = "YOUR_2CAPTCHA_API_KEY"

# Initialize Selenium WebDriver
driver = webdriver.Chrome()
try:
    # Navigate to the website
    driver.get("https://example.com")

    # Locate CAPTCHA element (this will vary based on the website)
    captcha_element = driver.find_element(By.ID, "captcha_image_id")

    # Save the CAPTCHA exactly as rendered; downloading its src URL again
    # may return a different image than the one shown in the browser
    captcha_element.screenshot("captcha_image.png")

    # Upload the image to 2Captcha
    with open("captcha_image.png", "rb") as f:
        response = requests.post(
            "https://2captcha.com/in.php",
            data={"key": API_KEY, "method": "post"},
            files={"file": f},
            timeout=30,
        )
    if not response.text.startswith("OK|"):
        raise RuntimeError(f"2Captcha upload failed: {response.text}")
    captcha_id = response.text.split("|", 1)[1]

    # Poll until the CAPTCHA is solved instead of sleeping for a fixed time
    captcha_solution = None
    for _ in range(24):
        time.sleep(5)
        response = requests.get(
            "https://2captcha.com/res.php",
            params={"key": API_KEY, "action": "get", "id": captcha_id},
            timeout=30,
        )
        if response.text.startswith("OK|"):
            captcha_solution = response.text.split("|", 1)[1]
            break
        if response.text != "CAPCHA_NOT_READY":
            raise RuntimeError(f"2Captcha error: {response.text}")
    if captcha_solution is None:
        raise TimeoutError("CAPTCHA was not solved in time")

    # Enter CAPTCHA solution into the form
    driver.find_element(By.ID, "captcha_input_id").send_keys(captcha_solution)

    # Submit the form
    driver.find_element(By.ID, "submit_button_id").click()
finally:
    driver.quit()  # Close the browser even if a step fails

Wrapping Up

Solving CAPTCHAs can be challenging, as they have evolved over the years to resist modern tools.

To overcome these challenges, you may require advanced technology and tailored services, such as proxy services and CAPTCHA-solving services, which can add up in cost.

Choosing ScrapeHero, a fully managed enterprise-grade web scraping service provider, can significantly reduce your time and cost.

Our robust solutions for web scraping are designed to navigate these challenges, providing you with a cost-effective and time-saving solution.

ScrapeHero web scraping services can ensure seamless data extraction that meets your specific requirements.

Our experts and advanced technology can efficiently handle CAPTCHAs and ensure smooth web scraping operations, maintaining compliance with legal and ethical standards.

Frequently Asked Questions

Yes. To skip the CAPTCHA, use browser automation tools like Puppeteer combined with a CAPTCHA-solving service.

The legality of bypassing CAPTCHAs depends on the intended use and the website’s terms of service regarding restricted content access.

To bypass reCAPTCHA with Python requests, you have to use a CAPTCHA-solving service, which is quite challenging. So, it is always advisable to consult a data service provider like ScrapeHero to address all web scraping issues.
