Tripadvisor is a popular site for comparing hotel prices and other hotel details, and it lists a huge number of hotels. Scraping Tripadvisor data can therefore give you insights into your competitors, which you can use to improve the amenities and features of your hotels or to set competitive prices.

Tripadvisor is a dynamic website that uses JavaScript to display some of its content, so the requests library, which does not execute JavaScript, is not ideal for scraping it. Instead, you can scrape a dynamic website with Python by using an automated browser such as Selenium.

In this tutorial, you will learn how to scrape Tripadvisor data using Selenium and lxml. Both are external Python libraries, and you must install them separately.

Set Up the Environment for Tripadvisor Data Scraping

Before scraping Tripadvisor, you must set up the environment. This tutorial uses Python, so you need Python and the necessary packages installed on your system to run the code.

The code uses Python Selenium to load the hotel page and get its source code, and then uses lxml to parse that source and extract the necessary data.

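pip, the Python package manager, can install both Selenium and lxml:

```shell
pip install selenium lxml
```

The code drives Google Chrome through webdriver.Chrome(), so Chrome must also be installed. Recent Selenium releases (4.6 and later) can download a matching ChromeDriver automatically.

Data Extracted from Tripadvisor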

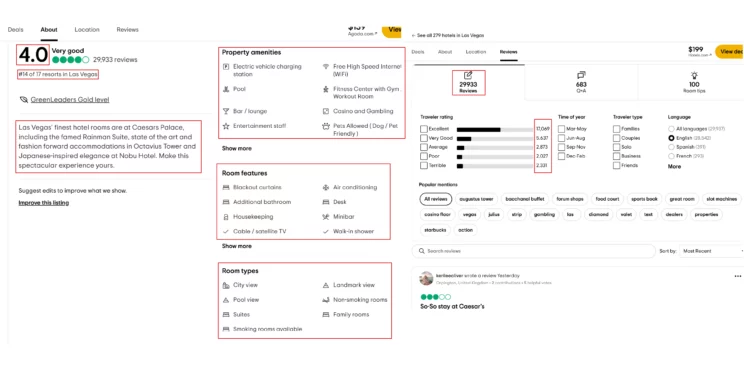

This tutorial shows you how to scrape the following details from a Tripadvisor hotel page:

- Name

- Address

- Rating breakdown (Excellent, Good, Average, Poor, Terrible)

- Review count

- Overall rating

- Amenities

- Room features

- Room types

- Official description

- Rank

- Hotel URL

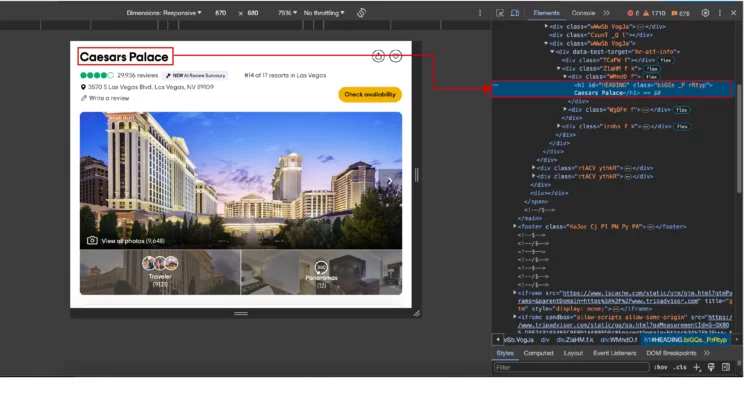

To find the XPaths for these details, you must analyze the HTML of the Tripadvisor hotel page, for example with your browser's developer tools.

Tripadvisor Data Scraping: The Code

You can now start writing the code. The first step is to write the import statements, which let you use functions and classes from libraries such as lxml and Selenium.

Besides Selenium and lxml, the code uses the json and argparse modules and the sleep() function from the time module, all from Python's standard library.

Here are all the libraries and modules imported into this code:

- lxml to parse the source code of the web page

- json to write the extracted data to a JSON file

- argparse to read arguments from the command line

- sleep() from time to pause the program for a set number of seconds before moving on to the next step

- webdriver, By, and Keys from Selenium to control the browser, locate elements, and send keystrokes to the page

```python
from lxml import html
import json
import argparse
from selenium import webdriver
from selenium.webdriver.common.keys import Keys
from selenium.webdriver.common.by import By
from time import sleep
```

This code has three defined functions:

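- clean() removes unnecessary spaces from the extracted text.

- process_request() takes the URL as its argument, loads the page in a browser, parses the page source with lxml, and passes the result to process_page().

- process_page() takes the parsed page, extracts the details using XPaths, and returns the data.

Finally, the main block calls process_request() with the URL given on the command line and writes the returned data to a JSON file.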
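The tutorial uses clean() without showing its definition. The sketch below is one possible version, not the original: it assumes clean() receives the list of text nodes returned by parser.xpath() and returns a single string with runs of whitespace collapsed to single spaces.

```python
def clean(text_nodes):
    """Join XPath text nodes into one string with single spaces.

    Hypothetical reconstruction: assumes the input is a list of strings,
    as returned by an lxml xpath() query ending in text().
    """
    # Join all text nodes, split on any whitespace (spaces, tabs, newlines),
    # and rejoin with single spaces so the extra whitespace disappears.
    return " ".join(" ".join(text_nodes).split())


# Text nodes with stray spaces and newlines
print(clean(["  Caesars ", "\n Palace  "]))  # prints "Caesars Palace"
```

Because split() with no arguments also discards leading and trailing whitespace, an empty XPath result simply becomes an empty string.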

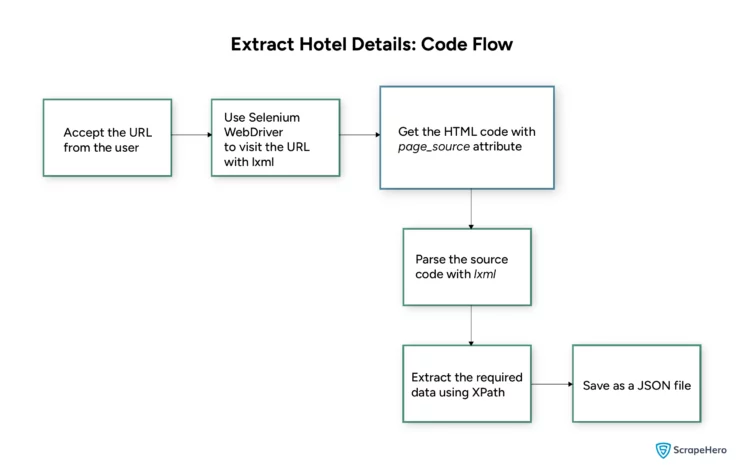

Here is the flowchart showing the code logic:

process_requests() takes the URL and visits a webpage using Selenium WebDriver.

```python
def process_request(url):
    # Start a Chrome session controlled by Selenium and open the hotel page
    driver = webdriver.Chrome()
    driver.get(url)
```

The function then waits for the page to load, scrolls to the bottom of the page by sending the End key, and gets the source code from Selenium's page_source attribute. Finally, process_request() parses the source with lxml and returns the result of process_page().

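```python
    # Wait for the JavaScript-rendered content to load
    sleep(4)
    # Scroll to the bottom of the page by sending the End key to the <html> element
    htmls = driver.find_element(By.TAG_NAME, "html")
    sleep(3)
    htmls.send_keys(Keys.END)
    sleep(10)
    # Read the source after scrolling so that content loaded during the scroll is included
    response = driver.page_source
    # Close the browser; the page source is already stored in response
    driver.quit()
    # Parse the HTML; url serves as the base URL for relative links
    parser = html.fromstring(response, url)
    return process_page(parser, url)
```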

process_page() takes the parsed page and the URL as arguments and extracts the data using XPaths.

Finding the XPaths can be challenging; you must analyze the web page's HTML and work out each XPath from the position and attributes of the element you need.

When you build an XPath, rely only on static attributes. Tripadvisor uses JavaScript to display certain elements, and these have dynamically generated class names.

These class names differ from hotel to hotel, so for such elements, building the XPath from the element's position is more reliable.

For example, if the data you need is the text inside a span, and that span sits inside a div nested in another div, you can use “//div/div/span/text()” to extract it.
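To see why position-based paths hold up better, the sketch below runs a class-based lookup and a positional lookup against two made-up snippets whose generated class names differ, as they might between two hotel pages. The markup, class names, and the helpers by_class() and by_position() are hypothetical. So that it runs without extra installs, it uses the standard library's xml.etree.ElementTree instead of lxml; ElementTree supports only a subset of XPath and has no text(), so the sketch reads each element's .text. In this tutorial's lxml code, the positional query would be parser.xpath("//div/div/span/text()").

```python
import xml.etree.ElementTree as ET

# Two hypothetical renderings of the same section; only the generated
# class names differ, as they might between two hotel pages.
PAGE_A = '<html><body><div class="xK2pQ"><div class="aB9"><span>#14 of 17</span></div></div></body></html>'
PAGE_B = '<html><body><div class="Lm7wR"><div class="zT4"><span>#3 of 40</span></div></div></body></html>'


def by_class(markup):
    """Hypothetical: find the span through a class name copied from PAGE_A."""
    root = ET.fromstring(markup)
    return [span.text for span in root.findall(".//div[@class='aB9']/span")]


def by_position(markup):
    """Hypothetical: find the span by position, a span in a div inside another div."""
    root = ET.fromstring(markup)
    return [span.text for span in root.findall(".//div/div/span")]


for label, page in [("A", PAGE_A), ("B", PAGE_B)]:
    print(label, by_class(page), by_position(page))
```

The class-based lookup finds nothing on the second snippet, while the positional one works on both. The trade-off is that a positional path breaks if the nesting itself changes, so the XPaths still need maintenance.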

This code also stores each XPath in its own variable, so you can update it easily whenever the XPaths change.
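Going one step further than separate variables, a hypothetical extract_all() helper could keep the XPaths in one dictionary, run them all, and report the fields that matched nothing, which makes broken XPaths easy to spot after a site change. It is not part of the tutorial's code. Its query argument is assumed to be any callable that takes an XPath string and returns a list; the bound lxml method parser.xpath has that shape. So the example runs without a browser, it passes a stand-in lookup instead.

```python
def extract_all(query, xpaths):
    """Run every XPath in xpaths and note which ones matched nothing.

    Hypothetical helper. query: any callable that takes an XPath string
    and returns a list of results (for example, lxml's parser.xpath).
    """
    results = {}
    missing = []
    for field, xpath in xpaths.items():
        values = query(xpath)
        results[field] = values
        if not values:
            # An empty result often means the page layout has changed
            missing.append(field)
    return results, missing


# A subset of the tutorial's XPaths, keyed by field name
XPATHS = {
    'name': '//h1[@id="HEADING"]//text()',
    'rank': "//div[@id='ABOUT_TAB']/div/div/span/text()",
}

# Stand-in for parser.xpath: it "finds" the name but not the rank
fake_page = {XPATHS['name']: ['Caesars Palace']}
results, missing = extract_all(lambda xpath: fake_page.get(xpath, []), XPATHS)
print(results['name'], missing)  # prints: ['Caesars Palace'] ['rank']
```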

```python
def process_page(parser, url):
    # Keep every XPath in a variable so it can be updated in one place
    XPATH_NAME = '//h1[@id="HEADING"]//text()'
    XPATH_RANK = "//div[@id='ABOUT_TAB']/div/div/span/text()"
    XPATH_AMENITIES = '//div[contains(text(),"Property amenities")]/following::div[1]//text()'
    XPATH_ROOM_FEATURES = '//div[contains(text(),"Room features")]/following::div[1]//text()'
    # This XPath depends on a class name; update it if the description comes back empty
    XPATH_OFFICIAL_DESCRIPTION = '//div/div[@class="fIrGe _T"]/text()'
    XPATH_ROOM_TYPES = '//div[contains(text(),"Room types")]/following::div[1]//text()'
    XPATH_FULL_ADDRESS_JSON = "//div[@id='LOCATION']//span/text()"
    XPATH_RATING = "//div[@id='ABOUT_TAB']//text()"
    XPATH_USER_REVIEWS = "//div[@id='hrReviewFilters']//span/text()"
    XPATH_REVIEW_COUNT = "//span[@data-test-target='CC_TAB_Reviews_LABEL']//text()"
```

process_page() then passes each variable to the xpath() method to extract the corresponding data point, and it cleans the name, rank, and official description with the clean() function.

```python
    raw_name = parser.xpath(XPATH_NAME)
    raw_rank = parser.xpath(XPATH_RANK)
    amenities = parser.xpath(XPATH_AMENITIES)
    raw_room_features = parser.xpath(XPATH_ROOM_FEATURES)
    raw_official_description = parser.xpath(XPATH_OFFICIAL_DESCRIPTION)
    raw_room_types = parser.xpath(XPATH_ROOM_TYPES)
    raw_address = parser.xpath(XPATH_FULL_ADDRESS_JSON)

    # clean() removes unnecessary spaces from the extracted text
    name = clean(raw_name)
    rank = clean(raw_rank)
    official_description = clean(raw_official_description)

    # Drop newline-only text nodes, then join the room features with commas
    room_features = filter(lambda x: x != '\n', raw_room_features)
    address = raw_address[0]
    newRoomFeatures = ','.join(room_features).replace('\n', '')

    rating = parser.xpath(XPATH_RATING)
    review_counts = parser.xpath(XPATH_REVIEW_COUNT)
    userRating = parser.xpath(XPATH_USER_REVIEWS)

    # These indexes depend on the order of the text nodes on the page
    hotel_rating = rating[1]
    review_count = review_counts[0]
    ratings = {
        'Excellent': userRating[0],
        'Good': userRating[1],
        'Average': userRating[2],
        'Poor': userRating[3],
        'Terrible': userRating[4]
    }

    amenity_dict = {'Hotel Amenities': ','.join(amenities)}
    room_types = {'Room Types': ','.join(raw_room_types)}
```

The function stores the cleaned data in a dictionary and returns it.

```python
    data = {
        'address': address,
        'ratings': ratings,
        'amenities': amenity_dict,
        'official_description': official_description,
        'room_types': room_types,
        'rating': hotel_rating,
        'review_count': review_count,
        'name': name,
        'rank': rank,
        'highlights': newRoomFeatures,
        'hotel_url': url
    }
    return data
```

Finally, the main block reads the hotel URL from the command line with argparse, calls process_request(), and uses json.dump to write the extracted data to a JSON file.

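```python
if __name__ == '__main__':
    # Read the hotel URL from the command line
    parser = argparse.ArgumentParser()
    parser.add_argument('url', help='Tripadvisor hotel url')
    args = parser.parse_args()
    url = args.url

    scraped_data = process_request(url)
    if scraped_data:
        print("Writing scraped data")
        # ensure_ascii=False writes non-ASCII characters as-is, so open the file as UTF-8
        with open('tripadvisor_hotel_scraped_data.json', 'w', encoding='utf-8') as f:
            json.dump(scraped_data, f, indent=4, ensure_ascii=False)
```

To run the code, pass the hotel URL as the script's only command-line argument. Here is the JSON data it extracted for Caesars Palace in Las Vegas: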

```json
{
    "address": "3570 S Las Vegas Blvd, Las Vegas, NV 89109",
    "ratings": {
        "Excellent": "17,067",
        "Good": "5,637",
        "Average": "2,871",
        "Poor": "2,026",
        "Terrible": "2,328"
    },
    "amenities": {
        "Hotel Amenities": "Electric vehicle charging station,Free High Speed Internet (WiFi),Pool,Fitness Center with Gym / Workout Room,Bar / lounge,Casino and Gambling,Entertainment staff,Pets Allowed ( Dog / Pet Friendly ),Valet parking,Paid private parking on-site,Wifi,Paid internet,Hot tub,Pool / beach towels,Adult pool,Outdoor pool,Heated pool,Fitness / spa locker rooms,Sauna,Coffee shop,Restaurant,Breakfast available,Breakfast buffet,Snack bar,Swimup bar,Poolside bar,Evening entertainment,Nightclub / DJ,Airport transportation,Car hire,Taxi service,Business Center with Internet Access,Conference facilities,Banquet room,Meeting rooms,Photo copier / fax In business center,Spa,Body wrap,Couples massage,Facial treatments,Foot bath,Foot massage,Full body massage,Hand massage,Head massage,Makeup services,Manicure,Massage,Massage chair,Neck massage,Pedicure,Salon,Waxing services,24-hour security,Baggage storage,Concierge,Convenience store,Currency exchange,Gift shop,Outdoor furniture,Shops,Sun deck,Sun loungers / beach chairs,ATM on site,Butler service,24-hour check-in,24-hour front desk,Express check-in / check-out,Dry cleaning,Laundry service"
    },
    "official_description": "Las Vegas' finest hotel rooms are at Caesars Palace, including the famed Rainman Suite, state of the art and fashion forward accommodations in Octavius Tower and Japanese-inspired elegance at Nobu Hotel. Make this spectacular experience yours.",
    "room_types": {
        "Room Types": "City view,Landmark view,Pool view,Non-smoking rooms,Suites,Family rooms,Smoking rooms available"
    },
    "rating": "4.0",
    "review_count": "29916",
    "name": "Caesars Palace",
    "rank": "#14 of 17 resorts in Las Vegas",
    "highlights": "Blackout curtains,Air conditioning,Additional bathroom,Desk,Housekeeping,Minibar,Cable / satellite TV,Walk-in shower,Room service,Safe,Telephone,VIP room facilities,Wardrobe / closet,Clothes rack,Iron,Private bathrooms,Tile / marble floor,Wake-up service / alarm clock,Flatscreen TV,On-demand movies,Radio,Bath / shower,Complimentary toiletries,Hair dryer",
    "hotel_url": "https://www.tripadvisor.com/Hotel_Review-g45963-d91762-Reviews-Caesars_Palace-Las_Vegas_Nevada.html"
}
```

Limitations of the Code

This code can extract the hotel details successfully. However, if you run it too frequently, Tripadvisor will make you solve CAPTCHAs. Handling them requires a CAPTCHA solver, which this tutorial does not cover.

Therefore, this code for Tripadvisor data scraping is unsuitable for large-scale projects.

You must also update the XPaths whenever Tripadvisor changes its website structure, which means analyzing the web page again to find the new ones.
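The code indexes the XPath results directly (rating[1], review_counts[0], raw_address[0], and userRating[0] through userRating[4]), so if Tripadvisor changes its layout and an XPath stops matching, the run fails with an IndexError instead of saving the data it did find. A hypothetical pair of helpers, item_at() and rating_breakdown(), can return None for missing values instead. They are not part of the original code, and rating_breakdown() assumes, as the original dictionary does, that the counts appear in the order Excellent, Good, Average, Poor, Terrible.

```python
def item_at(values, index, default=None):
    """Return values[index], or default if the XPath returned too few items.

    Hypothetical helper: a safer stand-in for direct indexing such as rating[1].
    """
    return values[index] if -len(values) <= index < len(values) else default


def rating_breakdown(user_rating):
    """Map the review-filter counts to their labels, in page order.

    Hypothetical helper. Assumes, like the original code, that the counts
    appear as Excellent, Good, Average, Poor, Terrible. Missing counts become None.
    """
    labels = ['Excellent', 'Good', 'Average', 'Poor', 'Terrible']
    return {label: item_at(user_rating, i) for i, label in enumerate(labels)}


# With all five counts present, the result matches the original dictionary
print(rating_breakdown(['17,067', '5,637', '2,871', '2,026', '2,328']))
# If the XPath stops matching, every value is None instead of raising IndexError
print(rating_breakdown([]))
```

Inside process_page(), you could then write hotel_rating = item_at(rating, 1) and ratings = rating_breakdown(userRating); a None in the saved JSON points to the XPath that needs updating.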

Wrapping Up

This tutorial gives you a starting point for scraping data from Tripadvisor. However, remember that you must add code to bypass anti-scraping measures. You must also watch Tripadvisor's website for structural changes and update the XPaths accordingly; otherwise, your code will stop working.

Of course, you can avoid all this hassle if you use the Tripadvisor Scraper from ScrapeHero Cloud. It is an affordable, no-code web scraper available for free. With a few clicks, you can get Tripadvisor reviews and details of hotels, destinations, and more.

However, if you need to scrape something specific, you can try ScrapeHero Services. Leave all the coding to us; we can build enterprise-grade web scrapers to gather the data you need, including travel, airline, and hotel data.

Frequently Asked Questions

Legality depends on the legal jurisdiction, that is, the laws specific to the country and the locality. Generally, scraping publicly available data is legal. Please refer to Legal Information to learn more about the legality of web scraping.

Reviews on Tripadvisor are also public, so you can scrape them using Python. However, you must first figure out the XPaths of the reviews.
