Big companies use web scraping to tap into live web data rather than relying on outdated reports. Instead of waiting for last week’s analysis, they monitor market shifts, news, and emerging trends in near real time. This ability to act quickly, powered by large-scale web scraping, gives them a competitive edge while others are still catching up with yesterday’s information.

This article explains how big companies use large-scale web scraping. We’ll discuss how it works, where it creates the most value, and what makes a strong, enterprise-grade approach to turning the web into a strategic asset.

What is Large-Scale Web Scraping?

Large-scale web scraping automates the collection of public web data at high speed and volume. It turns the live internet into a constantly updating database and produces real-time intelligence that manual research cannot match.

The Types of Large-Scale Web Scraping

Here are the 5 main approaches to large-scale web scraping:

- Vertical Scraping

- Horizontal Scraping

- Real-Time Scraping

- Historical or Archival Scraping

- Distributed Scraping

1. Vertical Scraping

Vertical scraping targets one website or platform. You collect everything you need. For example, you scrape every product detail, description, and review from a competitor’s whole catalog. As a result, you get a complete picture of what they sell.

2. Horizontal Scraping

Horizontal scraping is the wide scan. It pulls one data type from many sources. Need to track a product’s price? Scrape that exact field from fifty different websites. The result is a market-wide view of a single variable.

3. Real-Time Scraping

Real-time scraping provides a live pulse. It tracks key data like flash sales, stock shortages, and flight prices every few minutes. This data then feeds alerts and dynamic systems where reaction time is everything.
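As a rough illustration of how a fresh scrape might feed an alert, the sketch below compares two snapshots of the same items and reports price drops and stock-outs. The function name, the snapshot layout (`price`, `in_stock`), and the 10% threshold are assumptions made for this example, not part of any particular product.

```python
def detect_alerts(
    previous: dict[str, dict],
    current: dict[str, dict],
    drop_pct: float = 10.0,
) -> list[str]:
    """Compare two snapshots keyed by SKU and describe notable changes."""
    alerts = []
    for sku, now in current.items():
        before = previous.get(sku)
        if before is None:
            continue  # first sighting of this item, nothing to compare against
        if before["in_stock"] and not now["in_stock"]:
            alerts.append(f"{sku}: out of stock")
        if before["price"] > 0:
            drop = (before["price"] - now["price"]) / before["price"] * 100
            if drop >= drop_pct:
                alerts.append(f"{sku}: price down {drop:.0f}%")
    return alerts


# Hypothetical snapshots taken a few minutes apart.
previous = {
    "A": {"price": 100.0, "in_stock": True},
    "B": {"price": 50.0, "in_stock": True},
}
current = {
    "A": {"price": 80.0, "in_stock": True},
    "B": {"price": 50.0, "in_stock": False},
}
print(detect_alerts(previous, current))
```

In a live system the same comparison would run after every scrape cycle, with the alerts routed to whatever notification channel the team already uses.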

4. Historical or Archival Scraping

Historical or archival scraping looks backward to predict forward. You collect data over months or years. This builds reliable trends. How did a competitor’s ads change before a major product launch? How do summer travel prices shift each year? In essence, this reveals patterns that single snapshots miss.
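One simple way to surface a seasonal pattern from archived observations is to average a value by calendar month across several years. The sketch below assumes the archive is a list of `(date, price)` pairs; the function name and the sample figures are made up for illustration.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def monthly_average(observations: list[tuple[date, float]]) -> dict[int, float]:
    """Average price per calendar month (1-12), pooled across all years."""
    by_month = defaultdict(list)
    for day, price in observations:
        by_month[day.month].append(price)
    return {month: mean(prices) for month, prices in sorted(by_month.items())}


# Hypothetical archived fares collected over two years.
history = [
    (date(2022, 7, 1), 200.0),
    (date(2023, 7, 1), 240.0),
    (date(2023, 1, 15), 100.0),
]
print(monthly_average(history))
```

A single snapshot would show only one of these prices; the pooled view is what makes the summer premium visible.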

5. Distributed Scraping

Distributed scraping is the technical backbone for large-scale data collection. It routes requests through a network of proxy servers so that many requests can run simultaneously against multiple sources. Consequently, it delivers speed, spreads load so that no single site is overloaded, bypasses basic blocking, and provides the infrastructure needed to scale web data collection.
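The idea of running many requests in parallel through a proxy pool can be sketched with Python's standard `concurrent.futures` module. This is a minimal sketch under stated assumptions: the proxy addresses are placeholders, and `fake_fetch` stands in for a real HTTP call so the example runs offline.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import cycle
from typing import Callable

# Placeholder proxy endpoints; a real pool would come from a proxy provider.
PROXIES = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]


def assign_proxies(urls: list[str], proxies: list[str]) -> list[tuple[str, str]]:
    """Pair each URL with a proxy in round-robin order."""
    return list(zip(urls, cycle(proxies)))


def fetch_all(
    urls: list[str],
    fetch: Callable[[str, str], str],
    proxies: list[str] = PROXIES,
    max_workers: int = 4,
) -> dict[str, str]:
    """Fetch every URL concurrently; fetch(url, proxy) performs the request."""
    jobs = assign_proxies(urls, proxies)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with urls.
        bodies = pool.map(lambda job: fetch(*job), jobs)
        return dict(zip(urls, bodies))


def fake_fetch(url: str, proxy: str) -> str:
    """Offline stand-in for a real HTTP request."""
    return f"{url} via {proxy}"


pages = fetch_all(["u1", "u2", "u3"], fake_fetch)
print(pages)
```

Passing the fetch function in as a parameter keeps the concurrency logic separate from the HTTP client, so either can be swapped without touching the other.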

Why Big Companies Rely on Web Scraping Today

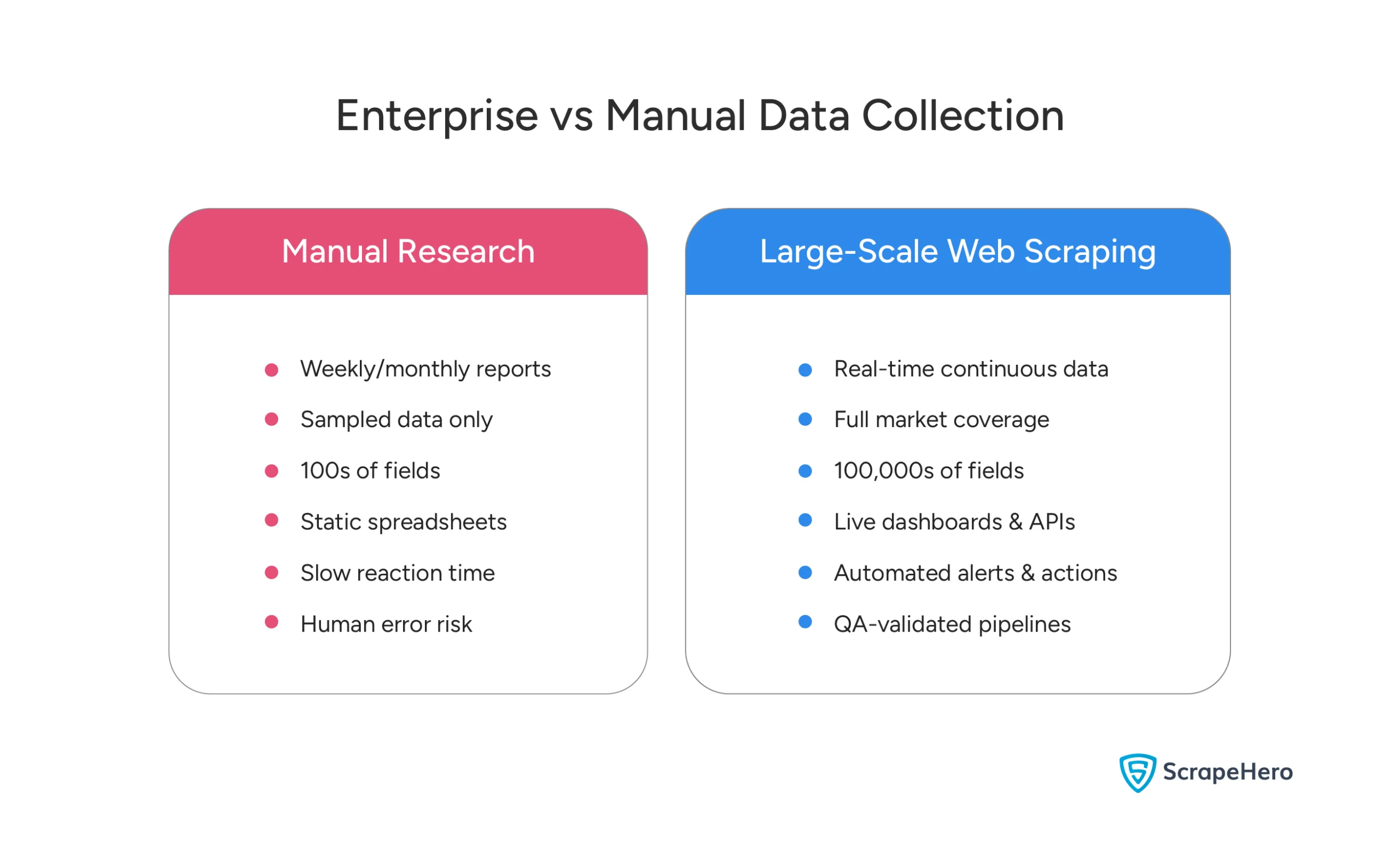

Large firms use web scraping to replace guesswork with real-time facts. It’s different from costly, slow manual research or old analyst reports. Instead, it delivers the data they need for practical intelligence at the moment they need it.

Scraping provides a live feed of market data. It shows what competitors are doing now and what customers actually say, not past hopes or future strategies. This is web scraping for competitive advantage in action: turning raw data into operational intelligence.

For large companies, scale matters. A small business might track ten items. In contrast, a global brand must monitor thousands across dozens of regions, all under tight deadlines. At that scale, a single error such as a mispriced item or a missed signal can be expensive. Scraping lets these companies collect data continuously, narrowing the gap between identifying a problem and fixing it.

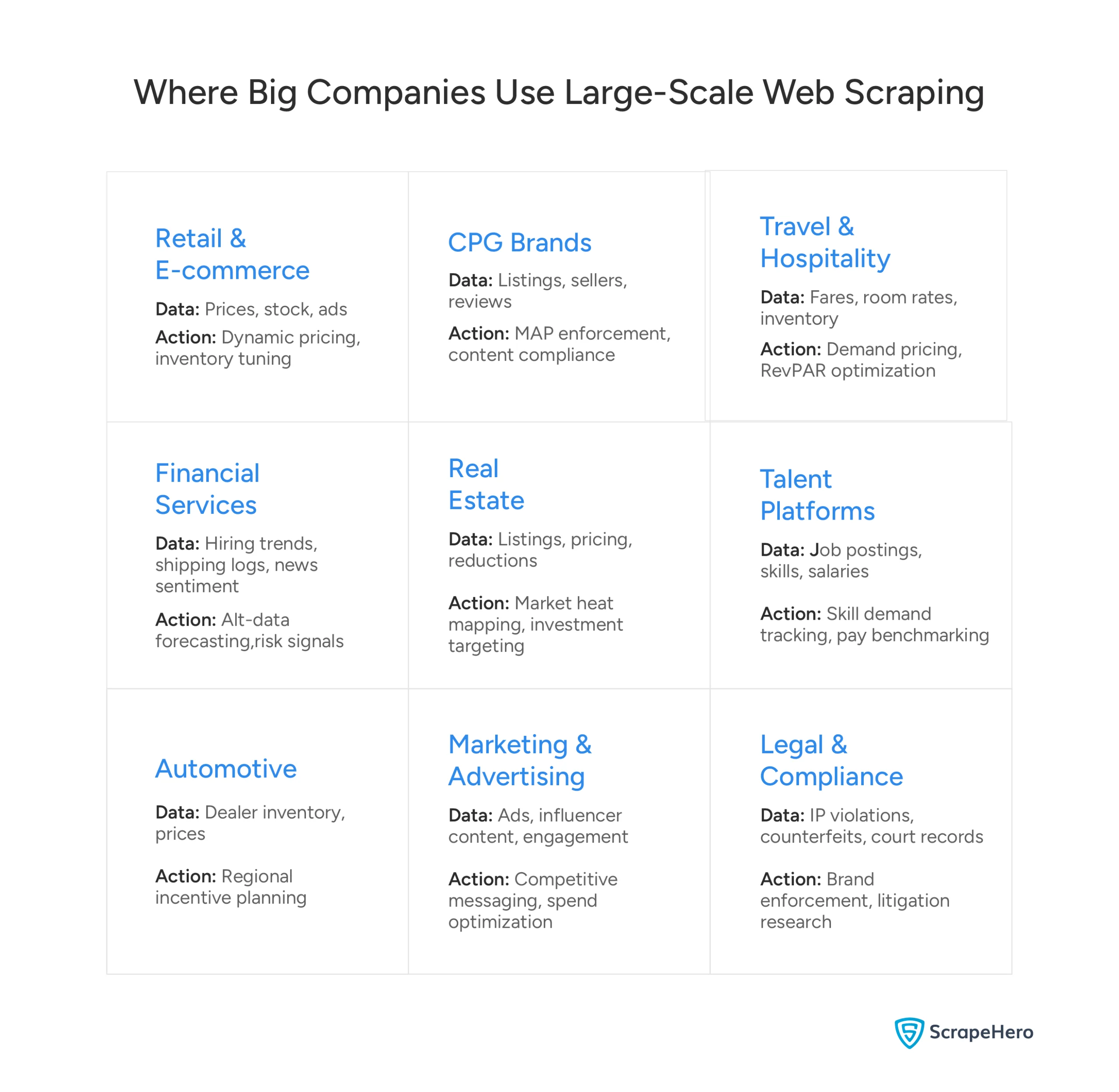

Real-World Use Cases of Web Scraping in Large Organizations

The idea is simple, but the profit comes from execution.

Here’s how top companies apply web scraping to solve specific, costly business problems. These corporate web scraping use cases demonstrate the real-world value of systematic data collection.

- Retail & E-commerce Industry

- CPG & Consumer Brands

- Travel & Hospitality

- Financial Services

- Real Estate

- Talent Acquisition

- Automotive Industry

- Marketing and Advertising

- Legal Firms

Retail & E-commerce Industry

Retail and e-commerce brands track competitor prices, stock levels, and ads across hundreds of sites. They do this every hour. The goal isn’t just to match a price. Instead, it’s to learn the market’s pricing rhythm. When does your competitor cut prices? How low do they go when clearing inventory?

This data feeds rules that adjust your prices instantly. Additionally, it spots gaps in your strategy. If everyone is out of an item, you have two choices: get more or raise your price. Either way, scraping gives you the signal to act now.
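A pricing rule of the kind described above could look something like the following sketch. The rule itself (match the lowest in-stock competitor, never go below a floor, add a small markup when every competitor is sold out) and all names and numbers are assumptions chosen for illustration; real repricing logic is usually far more involved.

```python
from dataclasses import dataclass


@dataclass
class CompetitorOffer:
    seller: str
    price: float
    in_stock: bool


def suggest_price(
    our_price: float,
    offers: list[CompetitorOffer],
    floor: float,
    scarcity_markup: float = 0.05,
) -> float:
    """Suggest a price from scraped competitor offers (illustrative rule only)."""
    available = [offer.price for offer in offers if offer.in_stock]
    if not available:
        # Everyone else is sold out: raise our price by the markup.
        return round(our_price * (1 + scarcity_markup), 2)
    # Otherwise match the cheapest available competitor, but respect the floor.
    return round(max(min(available), floor), 2)


# Hypothetical scraped offers for one product.
offers = [
    CompetitorOffer("shop-a", 18.50, True),
    CompetitorOffer("shop-b", 17.00, False),
]
print(suggest_price(20.00, offers, floor=15.00))
```

The out-of-stock branch covers only the "raise your price" option; the other option mentioned above, restocking, would be a signal to the supply team rather than a price change.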

CPG & Consumer Brands

CPG and consumer brands use web scraping to take action. Their main goals are:

- Protect prices: Find unauthorized sellers and stop discount violations.

- Guard reputation: Spot negative review trends and fix incorrect product details fast.

- Win visibility: Make sure their product listings are complete and easy to find online.

They use live data to defend their brand and sales. This approach shows how companies outpace competitors by proactively protecting their market position.


Travel & Hospitality

Travel and hospitality companies use web scraping to make smarter decisions.

Their key actions are:

- Track competitor prices: Monitor rival fares and room rates across all major booking sites in real time.

- Monitor demand signals: See how quickly inventory sells out during seasons, showing surges in demand.

- Optimize pricing models: Feed this live data into their systems to set dynamic prices and maximize revenue.

They use this data to replace guesswork with precise decisions. For instance, if competitors are raising prices and still selling out, a hotel can confidently raise its own rates. The decision is arithmetic driven by external data, and it directly pushes up revenue per available room.

Financial Services

Financial services firms use web scraping to find an edge. Their key actions are:

- Scrape for early signals: Track real-world data like shipping logs, company job openings, and news sentiment—not just financial reports.

- Turn signals into forecasts: Use this data in models. For example, negative product reviews can predict future warranty costs. Meanwhile, a hiring surge may signal a new business push.

- Act ahead of the market: Make investment decisions, adjust risk ratings, and check sentiment before official numbers confirm a trend.

In summary, they use this live, alternative data to make faster, better decisions. Competitive intelligence web scraping has become essential in financial services, where acting hours or days ahead of the market creates significant value.

Real Estate

The real estate industry runs on comparable data. Major platforms and investment firms scrape millions of property listings from thousands of broker sites. They don’t just collect asking prices. They track time on market, price cuts, and feature details.

This creates a live model of local supply and demand. An investor uses it to find cheaper markets. Similarly, a developer uses it to decide what kind of building to start. The whole business rests on this public listing data.

Talent Acquisition

Job and recruitment platforms use web scraping to build a strategic business. Their key actions are:

- Track skill demand: Analyze scraped job ads to identify which skills are suddenly trending.

- Benchmark compensation: Track salary data for specific job titles and locations to advise corporate clients on competitive pay.

- Map market growth: Spot emerging industries and roles to guide job seekers to high-opportunity areas.

- Sell actionable intelligence: Package the raw data into reports and insights for corporate and individual clients.

Automotive Industry

Automotive manufacturers sell cars, bikes, and more through dealers, so they scrape dealer websites to see which vehicles are actually sitting on the lots. Which models and colors are selling slowly in which city?

At the same time, they track pricing to see how much dealers are actually discounting. This tells them where to allocate their ad money and how to plan discounts. For example, if an SUV is too expensive in Texas compared to Florida, they can change the regional incentives. This data steers the sales strategy.

Marketing and Advertising

Modern marketing teams use web scraping for competitive intelligence. Their key actions are:

- Track competitor messaging: Scrape competitor ads and campaigns to analyze their themes and strategy.

- Monitor promotion and reaction: See which social media influencers are promoting products and how audiences respond.

- Analyze content strategy: Examine competitor blogs and content to understand their messaging focus.

- Identify market gaps: Use this intelligence to find weaknesses in competitor messages and anticipate broader trends.

- Optimize ad spend: Shift budget from guesswork to data-driven decisions, targeting what the market is missing.

They use real-time data to learn from the competition and make smarter, more effective marketing moves. This demonstrates how companies outpace competitors with data by staying informed about market trends and competitor strategies in real time.

Legal Firms

Legal and compliance teams actively use web scraping. Their key actions are:

- Enforce trademarks and copyrights: Scan the web for unauthorized use of logos, content, or intellectual property.

- Identify counterfeit goods: Monitor online marketplaces for fake products and false advertising claims.

- Ensure regulatory compliance: In regulated industries, they scan for unlicensed sellers or rule violators.

- Research litigation strategy: Scrape public case databases to analyze past verdicts, opposing counsel’s arguments, and find patterns in similar cases.

- Respond instantly: Issue takedown notices or cease-and-desist letters the day a violation is found to prevent major brand damage.

In essence, they use scraping to transform legal defense from a reactive cost into a proactive, real-time shield.

Standard Requirements for Large-Scale Web Scraping

Building a reliable, large-scale web scraping capability is a significant infrastructure investment. It requires a purpose-built web scraping architecture, because a failure at any point breaks the entire data pipeline and leads to lost intelligence and missed opportunities.

To build a successful large-scale web scraping operation, it’s essential to focus on several key processes that ensure data is collected, processed, delivered, and maintained effectively.

- Data Collection

- Data Processing & Quality

- Data Delivery

- Operations and Reliability

- Operational Oversight & Security

Data Collection

Data collection combines the technical access and execution needed to gather web data reliably. The essential components are:

- Professional Proxy Network: You need a rotating pool of clean IP addresses that mimic human traffic to make millions of requests without being blocked.

- Robust Scraping Systems: Your tools must render complex, JavaScript-heavy sites, click through pages, handle logins, bypass blockers, and navigate bot challenges such as CAPTCHAs.

- Constant Maintenance & Adaptation: You must monitor and quickly fix your systems when website code changes to prevent crashes and ensure non-stop data flow.
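The rotating proxy pool described above can be illustrated with a small retry loop that moves to the next proxy whenever a request is refused. This is a sketch, not a production client: the `Blocked` exception, the proxy addresses, and `demo_fetch` are hypothetical stand-ins so the example runs without network access.

```python
from itertools import cycle
from typing import Callable, Sequence


class Blocked(Exception):
    """Raised by a fetch function when the target site refuses the request."""


def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    fetch: Callable[[str, str], str],
    max_attempts: int = 3,
) -> str:
    """Try the request through successive proxies until one is not blocked."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = cycle(proxies)
    last_error = None
    for _ in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)
        except Blocked as err:
            last_error = err  # rotate to the next proxy and retry
    raise RuntimeError(f"{url}: blocked on all {max_attempts} attempts") from last_error


def demo_fetch(url: str, proxy: str) -> str:
    """Offline stand-in that pretends the first proxy is blocked."""
    if proxy == "http://proxy-a.example:8080":
        raise Blocked(proxy)
    return f"<html>{url}</html>"


proxies = ["http://proxy-a.example:8080", "http://proxy-b.example:8080"]
print(fetch_with_rotation("https://example.com/p/1", proxies, demo_fetch))
```

A real system would also add delays between retries and retire proxies that fail repeatedly; those details are left out to keep the rotation logic visible.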

Data Processing & Quality

Raw data is only the starting point. Its value comes from cleaning and structuring it into a consistent, usable format with defined fields such as SKU, price, and title. Missing fields or inconsistencies lead directly to flawed analysis, so you must enforce quality checks to keep the data consistent and reliable.
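A basic quality gate for the SKU, price, and title fields mentioned above might look like the sketch below. The specific checks (required fields, a positive numeric price, whitespace cleanup) and the function names are assumptions for illustration; real pipelines typically add many more rules.

```python
REQUIRED_FIELDS = ("sku", "price", "title")


def clean_record(raw: dict) -> dict:
    """Return a normalized record, or raise ValueError if a check fails."""
    for field in REQUIRED_FIELDS:
        value = raw.get(field)
        if value is None or not str(value).strip():
            raise ValueError(f"missing field: {field}")
    # Strip a dollar sign and thousands separators before parsing the price.
    price = float(str(raw["price"]).replace("$", "").replace(",", "").strip())
    if price <= 0:
        raise ValueError(f"non-positive price: {price}")
    return {
        "sku": str(raw["sku"]).strip().upper(),
        "price": price,
        "title": " ".join(str(raw["title"]).split()),  # collapse whitespace
    }


def split_valid(records: list[dict]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Separate records that pass the checks from those that fail, with reasons."""
    clean, rejected = [], []
    for raw in records:
        try:
            clean.append(clean_record(raw))
        except ValueError as err:
            rejected.append((raw, str(err)))
    return clean, rejected


# Hypothetical scraped rows: one usable, one missing its price.
rows = [
    {"sku": " ab-1 ", "price": "$1,299.00", "title": "  Blue   Widget "},
    {"sku": "cd-2", "price": "", "title": "No price"},
]
clean, rejected = split_valid(rows)
print(clean)
print(rejected)
```

Keeping the rejected rows with their reasons, rather than silently dropping them, makes it easier to notice when a site change starts breaking a field.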

Data Delivery

Data delivery must match your operational speed through standardized formats. These include real-time APIs for immediate access, scheduled JSON/CSV files for periodic updates, and direct data warehouse integration. In the end, the right method ensures teams receive intelligence at the pace required for decision-making.
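For the scheduled-file option, the same cleaned records can be serialized to JSON or CSV with Python's standard library. The sketch below is only an illustration of that step; the function names and sample record are invented, and writing the output to a file, bucket, or warehouse is left out.

```python
import csv
import io
import json


def to_json(records: list[dict]) -> str:
    """Serialize records as a JSON array with stable key order."""
    return json.dumps(records, indent=2, sort_keys=True)


def to_csv(records: list[dict], fields: list[str]) -> str:
    """Serialize records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical cleaned record ready for delivery.
records = [{"sku": "A1", "price": 9.99, "title": "Widget"}]
print(to_json(records))
print(to_csv(records, ["sku", "price", "title"]))
```

Fixing the column order in `fields` keeps downstream consumers from breaking when a record happens to arrive with its keys in a different order.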

Operations and Reliability

Operations and reliability are how you manage your data pipeline over time. Understanding how big companies use web scraping at scale means recognizing the operational discipline required to maintain consistent data flows.

Here’s what you should do:

- Track success rates, data freshness, and overall system health.

- Set up immediate alerts for when a target site changes and breaks your data collection.

- Create a process to diagnose and fix breaks within hours to maintain uptime.

- Ensure long-term data consistency to build trustworthy trends and reliable analysis.
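The first two points in the list above, tracking success rates and data freshness, can be sketched as a small health check. The thresholds (95% success, one hour of staleness) and the function name are assumptions for this example; each team would set its own limits.

```python
from datetime import datetime, timedelta, timezone


def health_problems(
    attempts: int,
    successes: int,
    last_success: datetime,
    now: datetime,
    min_success_rate: float = 0.95,
    max_age: timedelta = timedelta(hours=1),
) -> list[str]:
    """Return a list of human-readable problems; empty means healthy."""
    problems = []
    rate = successes / attempts if attempts else 0.0
    if rate < min_success_rate:
        problems.append(f"success rate {rate:.1%} is below target")
    if now - last_success > max_age:
        problems.append(f"no fresh data for {now - last_success}")
    return problems


# Hypothetical counters for one scraping job.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(health_problems(100, 90, now - timedelta(hours=2), now))
```

A non-empty result is what would trigger the immediate alert described above, for example when a target site changes its layout and the success rate drops.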

Operational Oversight & Security

For a technical capability to become a sustainable business operation, it must be built on operational oversight and legal compliance.

- Operate within legal and ethical rules by respecting robots.txt directives and the target site’s terms of service.

- Use rate limiting to control request speed and avoid damaging or overloading target websites.

- Enforce strict data security protocols to protect information both in transit and at rest.

- Establish operational oversight to transform the project from a technical tool into a trusted, stable business asset.
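The robots.txt and rate-limiting points above can be illustrated with Python's standard `urllib.robotparser` module and a small limiter class. This is a minimal sketch: the robots.txt content and the `example-bot` user agent are made up, `RateLimiter` is a hypothetical helper, and checking a site's terms of service remains a separate, manual step.

```python
import time
from urllib.robotparser import RobotFileParser


def parse_robots(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text that was already downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class RateLimiter:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval: float, clock=time.monotonic, sleep=time.sleep):
        self._min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None

    def wait(self) -> None:
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self._min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


# Hypothetical robots.txt content for an example site.
robots = parse_robots("User-agent: *\nDisallow: /private/\n")
limiter = RateLimiter(min_interval=0.1)
for url in ["https://example.com/products", "https://example.com/private/data"]:
    if robots.can_fetch("example-bot", url):
        limiter.wait()
        print("allowed:", url)  # a real scraper would issue the request here
    else:
        print("skipped:", url)
```

The clock and sleep functions are injectable so the limiter can be tested without real delays.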

Together, these enterprise web scraping practices keep your company’s scraping infrastructure running smoothly.

Why ScrapeHero is the Right Choice for Large-Scale Web Scraping

Your business choice is clear. Do you build a complex, maintenance-heavy web scraping system inside your company? Or outsource to a reliable web scraping service provider? The first option takes your engineers away from your core operations. On the other hand, the second option gives you immediate, reliable access to the data you need.

ScrapeHero offers a reliable web scraping service that handles the complexities of large-scale data collection, so you can focus on what matters most. We handle the infrastructure, data cleaning, and delivery, ensuring you receive clean, analysis-ready data.

With direct operational support, transparent reporting, and a commitment to legal and ethical operation, we reduce your risk. We transform web data into a trusted business asset.

The result is simple: your team focuses entirely on finding insights and taking action. Meanwhile, we guarantee the data behind those decisions is stable, fast, and accurate.

Final Thoughts

Let’s be clear about how big companies use web scraping. They aren’t just looking at their own sales. They’re analyzing the public web to gain insights into your business, market, and customers. Simply put, the companies that win are the ones that see this environment clearly, all the time.

Large-scale web scraping helps companies turn the internet’s noise into a clean, actionable signal to make better decisions.

However, building this system internally takes considerable time and effort. Fortunately, a specialized partner like ScrapeHero delivers it as a ready-to-use strategic tool. Competitive intelligence web scraping doesn’t have to be complex when you have the right partner handling the technical challenges.

Contact ScrapeHero to discuss your specific data needs. Let’s build your competitive edge on reliable, real-time data.