Is scraping possible without a dedicated server? Yes. Using AWS Lambda, you can build and run applications without managing servers.

This article covers web scraping with AWS Lambda, including an overview of serverless architecture and how to build a simple scraper.

What is Serverless Web Scraping?

As the name suggests, serverless web scraping is the process of data extraction without maintaining dedicated servers.

Serverless architecture lets you build and run applications without provisioning or managing servers yourself, and AWS Lambda is one platform you can use for this.

In serverless web scraping with AWS Lambda, your scraping code runs in response to events, such as a scheduled trigger, while AWS manages the underlying servers.

What are AWS and AWS Lambda?

Amazon Web Services (AWS) is a cloud computing platform that offers a wide range of cloud services. It allows you to build and deploy applications without the need to invest in physical infrastructure.

AWS Lambda is a serverless computing service provided by AWS. It runs your code in response to various events, such as HTTP requests arriving through API Gateway, without requiring you to manage servers.
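To make the event-driven model concrete, here is a minimal sketch of a handler that checks which kind of trigger invoked it. The helper name classify_event is hypothetical, and the sketch assumes the function is triggered either by an EventBridge scheduled rule or by API Gateway; other triggers would need their own checks.

```python
def classify_event(event: dict) -> str:
    """Guess which kind of trigger produced a Lambda event payload."""
    # EventBridge scheduled rules send "source": "aws.events".
    if event.get("source") == "aws.events":
        return "schedule"
    # API Gateway REST (proxy) events carry "httpMethod"; HTTP API (v2)
    # events carry the method under requestContext.http.method.
    if "httpMethod" in event or "http" in event.get("requestContext", {}):
        return "http"
    return "unknown"


def lambda_handler(event, context):
    trigger = classify_event(event)
    # A real handler would start the scrape here; this sketch only reports
    # which trigger fired.
    return {"statusCode": 200, "body": f"Triggered by: {trigger}"}
```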

Building a Serverless Web Scraper with AWS Lambda and Python

Ensure you have an AWS account and access to AWS Lambda, Amazon S3, and AWS IAM (Identity and Access Management).

Step 1: Create an IAM role that gives the Lambda function permission to access S3

- Go to the IAM management console

- Click Roles and then Create role

- Choose AWS service as the trusted entity type and Lambda as the use case

- Attach the AWSLambdaExecute and AmazonS3FullAccess policies

- Name the role LambdaS3AccessRole and create it
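If you prefer to script the console steps above, they could be sketched with Boto3 roughly as follows. The function names are hypothetical, and iam_client is assumed to be a client created with boto3.client("iam") under credentials that are allowed to create roles.

```python
import json

# Managed policies named in the steps above.
POLICY_ARNS = [
    "arn:aws:iam::aws:policy/AWSLambdaExecute",
    "arn:aws:iam::aws:policy/AmazonS3FullAccess",
]


def lambda_trust_policy() -> dict:
    """Trust policy that lets the Lambda service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def create_lambda_role(iam_client, role_name: str = "LambdaS3AccessRole") -> None:
    """Create the role and attach both managed policies.

    iam_client is assumed to be boto3.client("iam").
    """
    iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(lambda_trust_policy()),
    )
    for arn in POLICY_ARNS:
        iam_client.attach_role_policy(RoleName=role_name, PolicyArn=arn)
```

Passing the client in as a parameter keeps the sketch easy to try against a stub before running it on a real account.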

Step 2: Create a Lambda function

- Go to the AWS Lambda console

- Click Create function

- Select Author from scratch and enter a function name (serverlessWebScraper)

- Choose Python 3.x for the runtime

- Set the execution role to LambdaS3AccessRole

- Click Create function

Step 3: Add dependencies

1. Create a new directory on your local machine and install the required libraries into a package subdirectory:

pip install requests beautifulsoup4 --target ./package

2. Add your script (scraper.py) to the package directory so that it is included in the zip file

3. Zip the contents of the directory:

cd package

zip -r ../deployment_package.zip .

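4. Upload the zip file to your Lambda function and set the function's handler to scraper.lambda_handler, since the default handler setting points to lambda_function.lambda_handler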
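The packaging and upload steps above can also be scripted. The following is a rough sketch using only the standard library for zipping; build_zip and upload_code are hypothetical helper names, and lambda_client is assumed to be boto3.client("lambda").

```python
import io
import zipfile
from pathlib import Path


def build_zip(package_dir: str) -> bytes:
    """Zip the contents of package_dir (not the directory itself)."""
    root = Path(package_dir)
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # Store paths relative to the package root so modules sit at
                # the top level of the archive, where Lambda looks for them.
                archive.write(path, path.relative_to(root).as_posix())
    return buffer.getvalue()


def upload_code(lambda_client, function_name: str, zip_bytes: bytes) -> None:
    """Replace the function's code with the given zip archive.

    lambda_client is assumed to be boto3.client("lambda").
    """
    lambda_client.update_function_code(
        FunctionName=function_name, ZipFile=zip_bytes
    )
```

A call such as upload_code(client, "serverlessWebScraper", build_zip("package")) would then mirror steps 3 and 4.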

Step 4: Implement the scraper

import boto3
import requests
from bs4 import BeautifulSoup

def lambda_handler(event, context):
    URL = "http://quotes.toscrape.com/"

    # Fetch the page and fail fast on network or HTTP errors
    page = requests.get(URL, timeout=10)
    page.raise_for_status()

    # Extract the text of every quote on the page
    soup = BeautifulSoup(page.content, "html.parser")
    quotes = soup.find_all("span", class_="text")
    quotes_text = [quote.get_text() for quote in quotes]

    # Initialize an S3 client using Boto3
    session = boto3.session.Session()
    s3 = session.client('s3')

    # Save each quote to S3 as a separate text file
    for idx, quote in enumerate(quotes_text):
        filename = f"quote_{idx}.txt"
        s3.put_object(Bucket='your-bucket-name', Key=filename, Body=quote.encode('utf-8'))

    return {
        'statusCode': 200,
        'body': f"Successfully scraped and uploaded {len(quotes_text)} quotes."
    }
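The scraper above writes one S3 object per quote, which means one PUT request per quote. As a possible variation, and not part of the original script, all quotes from a run could be stored in a single timestamped JSON object. The helper name build_batch_object and the quotes/ key prefix are assumptions made for illustration.

```python
import json
from datetime import datetime, timezone
from typing import List, Optional, Tuple


def build_batch_object(
    quotes: List[str], now: Optional[datetime] = None
) -> Tuple[str, bytes]:
    """Return an S3 key and a UTF-8 JSON body holding every quote."""
    now = now or datetime.now(timezone.utc)
    # Hypothetical key layout: one JSON file per run, named by UTC timestamp.
    key = f"quotes/{now:%Y-%m-%dT%H-%M-%SZ}.json"
    body = json.dumps({"count": len(quotes), "quotes": quotes}, ensure_ascii=False)
    return key, body.encode("utf-8")


# Inside lambda_handler, the upload loop could then become:
#   key, body = build_batch_object(quotes_text)
#   s3.put_object(Bucket='your-bucket-name', Key=key, Body=body)
```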

Step 5: Test the function

- Configure a test event in the Lambda console from the dropdown near the Test button

- Click Test to execute the function
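The same test can be run from a script instead of the console. This is a minimal sketch that assumes lambda_client is boto3.client("lambda") and that the function was named serverlessWebScraper as in Step 2; invoke_scraper is a hypothetical helper name.

```python
import json


def invoke_scraper(lambda_client, function_name: str = "serverlessWebScraper") -> dict:
    """Invoke the function synchronously and decode its JSON result.

    lambda_client is assumed to be boto3.client("lambda").
    """
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",  # wait for the function to finish
        Payload=json.dumps({}).encode("utf-8"),  # empty test event
    )
    # The result arrives as a stream that has to be read and parsed.
    return json.loads(response["Payload"].read())
```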

The Benefits of Web Scraping Using AWS Lambda

Web scraping using AWS Lambda allows you to run code without thinking about servers or clusters. Some of its benefits include:

1. Integration with AWS Ecosystem

Lambda integrates with other AWS services such as Amazon S3, Amazon DynamoDB, and CloudWatch, which simplifies the architecture for complex scraping tasks.

2. Quick Deployment

Lambda provides an easy deployment process that lets developers quickly deploy changes to their scraping code.

3. Built-in Fault Tolerance

AWS Lambda runs functions across multiple Availability Zones and automatically retries failed asynchronous invocations, so scraping functions stay available without manual intervention.

4. Event-driven Execution

Lambda functions in AWS can streamline workflows by automatically starting web scraping in response to events like S3 updates or DynamoDB changes.
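As an illustration of event-driven execution, suppose a scrape should start whenever a file of target URLs is uploaded to S3. The sketch below only extracts the bucket and key from the S3 notification; the helper name uploaded_objects is hypothetical, and what the scraper does with each object is left out.

```python
from urllib.parse import unquote_plus


def uploaded_objects(event: dict) -> list:
    """Extract (bucket, key) pairs from an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        s3_info = record.get("s3")
        if not s3_info:
            continue  # not an S3 record
        bucket = s3_info["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(s3_info["object"]["key"])
        pairs.append((bucket, key))
    return pairs
```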

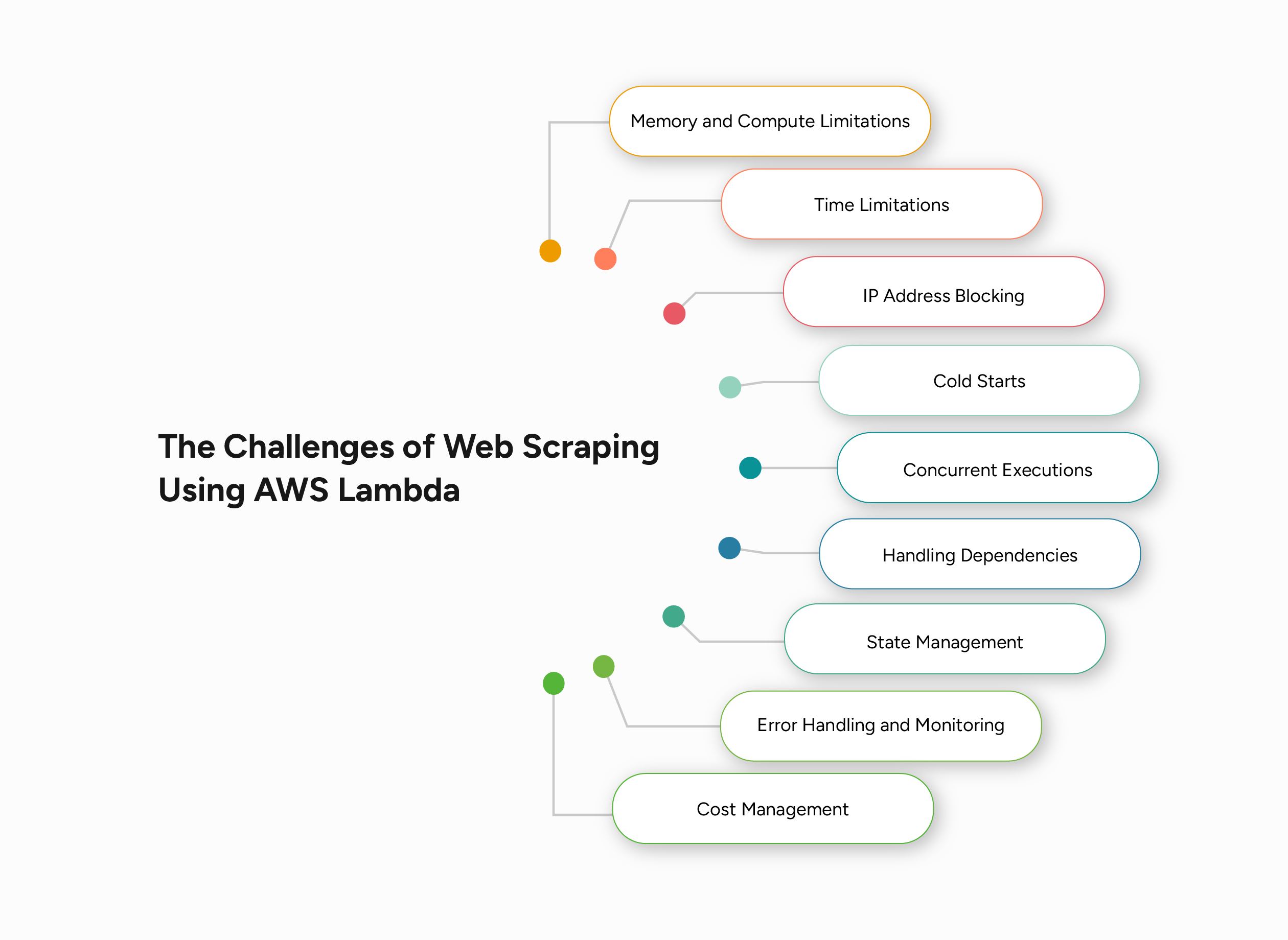

The Challenges of Web Scraping Using AWS Lambda

Along with its benefits, using AWS Lambda for web scraping comes with some significant challenges. These include:

- Time Limitations

- Memory and Compute Limitations

- IP Address Blocking

- Cold Starts

- Concurrent Executions

- Handling Dependencies

- State Management

- Error Handling and Monitoring

- Cost Management

1. Time Limitations

AWS Lambda functions have a maximum execution time of 15 minutes, which is a significant constraint for long-running web scraping tasks.

You can work around this limit by splitting the work across multiple Lambda invocations, but each invocation incurs costs.
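One way to split the work, sketched here under the assumption that the job is a list of URLs, is to stop before the timeout and return the unprocessed URLs for a follow-up invocation. The Lambda context object provides get_remaining_time_in_millis(); the names scrape_within_budget, scrape_one, and safety_ms are hypothetical.

```python
def scrape_within_budget(urls, context, scrape_one, safety_ms=30_000):
    """Scrape URLs until the remaining execution time drops below safety_ms.

    urls is a list, and scrape_one is any callable that scrapes one URL.
    Returns (results, leftover_urls) so that the caller can hand the
    leftovers to a follow-up invocation.
    """
    results = []
    for index, url in enumerate(urls):
        # get_remaining_time_in_millis() is provided by the Lambda context.
        if context.get_remaining_time_in_millis() < safety_ms:
            return results, list(urls[index:])
        results.append(scrape_one(url))
    return results, []
```

How the leftovers are passed on (a queue, a second invoke call, or saved state) is a separate design choice that this sketch does not cover.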

2. Memory and Compute Limitations

If your web scraping tasks need high computational power or large amounts of memory for data processing, Lambda's limits on memory and computing power can be a challenge.

Allocating more memory also gives a function more CPU power, but you pay more for the memory you allocate.

3. IP Address Blocking

Lambda does not inherently rotate IP addresses, so you can expect web servers to block your requests.

To mitigate IP blocking, you have to route requests through proxy services or AWS services like NAT Gateway, which again adds costs.
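If you use a proxy service, rotation can be as simple as cycling through a list of proxy endpoints. The sketch below builds the mappings that the requests library accepts through its proxies argument; the endpoint URLs are placeholders and proxy_rotation is a hypothetical helper name.

```python
from itertools import cycle


def proxy_rotation(proxy_urls):
    """Yield requests-style proxy mappings, cycling through proxy_urls forever."""
    for proxy in cycle(proxy_urls):
        yield {"http": proxy, "https": proxy}


# Placeholder endpoints; substitute the ones from your proxy provider.
PROXIES = ["http://proxy-a.example.com:8080", "http://proxy-b.example.com:8080"]
rotation = proxy_rotation(PROXIES)

# Inside the handler, each request would take the next mapping, for example:
#   requests.get(URL, proxies=next(rotation), timeout=10)
```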

4. Cold Starts

A cold start is the delay that occurs when a Lambda function is invoked after being idle and a new execution environment has to be initialized.

Cold starts are a problem for time-sensitive scraping tasks. To reduce them, you can keep functions warm by scheduling regular invocations.
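A keep-warm invocation should return quickly rather than run a full scrape. The sketch below assumes the scheduled rule sends a payload containing a "warmup" key; that key and the run_scraper placeholder are hypothetical, not a Lambda convention.

```python
def run_scraper(event):
    """Placeholder for the real scraping logic."""
    return {"statusCode": 200, "body": "scraped"}


def lambda_handler(event, context):
    # "warmup" is a hypothetical key set by the scheduled keep-warm rule.
    if event.get("warmup"):
        # Return immediately so the ping costs as little time as possible.
        return {"statusCode": 200, "body": "warm"}
    return run_scraper(event)
```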

5. Concurrent Executions

The number of concurrent executions of Lambda functions is limited. Exceeding these limits can affect the performance and reliability of your scraping operation.

Keep in mind that AWS charges are based on the number of Lambda function invocations and their execution time, so requesting higher concurrency limits and running more functions in parallel can involve additional costs.

6. Handling Dependencies

Managing and packaging dependencies for Lambda is challenging, especially when using external libraries.

Larger deployment packages make deployments more complex and cold starts longer.

7. State Management

One of the significant challenges of Lambda functions is that they are stateless. This means they do not maintain a state between executions.

So, you need to implement state management using external services like Amazon S3 or DynamoDB for storage and data transfer.
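For example, a scraper that pages through a site could keep a small checkpoint in S3 so that the next invocation knows where to resume. This is a sketch only: the key name, the next_page field, and the function names are assumptions, and s3_client is assumed to be boto3.client("s3").

```python
import json

STATE_KEY = "scraper/state.json"  # hypothetical object key


def load_state(s3_client, bucket: str) -> dict:
    """Read the checkpoint, or start fresh if none exists yet."""
    try:
        response = s3_client.get_object(Bucket=bucket, Key=STATE_KEY)
    except s3_client.exceptions.NoSuchKey:
        return {"next_page": 1}
    return json.loads(response["Body"].read())


def save_state(s3_client, bucket: str, state: dict) -> None:
    """Write the checkpoint back for the next invocation."""
    s3_client.put_object(
        Bucket=bucket, Key=STATE_KEY, Body=json.dumps(state).encode("utf-8")
    )
```

A handler would call load_state at the start, scrape from state["next_page"], and call save_state before returning.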

8. Error Handling and Monitoring

Lambda is a distributed environment, so monitoring and handling errors is complex. Errors often affect downstream components.

In such situations, you may have to use AWS monitoring tools like CloudWatch for logging, monitoring, and alerts.
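Output written through Python's logging module in a Lambda function ends up in CloudWatch Logs, so a scraper can record failures per URL instead of aborting the whole run. The following is a minimal sketch; scrape_all and scrape_one are hypothetical names.

```python
import logging

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)


def scrape_all(urls, scrape_one):
    """Scrape each URL independently so one failure does not stop the rest."""
    succeeded, failed = [], []
    for url in urls:
        try:
            succeeded.append(scrape_one(url))
        except Exception:
            # logger.exception records the traceback alongside the message.
            logger.exception("Scrape failed for %s", url)
            failed.append(url)
    logger.info("Finished: %d succeeded, %d failed", len(succeeded), len(failed))
    return {"succeeded": succeeded, "failed": failed}
```

Returning the failed URLs makes it possible to retry them later or to raise an alert when the list is not empty.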

9. Cost Management

Cost management is a significant issue with AWS Lambda, especially as usage scales.

A growing number of function invocations and unexpected usage of other AWS services can lead to significant costs.
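Since charges depend on the number of invocations and their execution time, a rough estimate can be computed before scaling up. The sketch below takes the per-request and per-GB-second rates as parameters because they vary by region and change over time; it ignores any free tier and the cost of other AWS services, and estimate_monthly_cost is a hypothetical helper.

```python
def estimate_monthly_cost(
    invocations: int,
    avg_duration_s: float,
    memory_mb: int,
    price_per_request: float,
    price_per_gb_second: float,
) -> float:
    """Estimate Lambda charges as request cost plus compute (GB-second) cost."""
    request_cost = invocations * price_per_request
    # Compute is billed on duration multiplied by allocated memory in GB.
    gb_seconds = invocations * avg_duration_s * (memory_mb / 1024)
    return request_cost + gb_seconds * price_per_gb_second
```

The rates to pass in should come from the current AWS Lambda pricing page for your region.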

ScrapeHero’s Web Scraping Service

AWS Lambda can offer an automated solution for web scraping. However, it has several drawbacks, especially the difficulty of managing costs and the additional resources needed to mitigate its challenges.

It is better to look for a cost-effective service that offers technical support and handles all the complexities of web scraping.

You need a provider that scales your web scraping tasks without your intervention and offers transparent pricing, in contrast to the variable costs of AWS Lambda.

So, it is wise to entrust your scraping tasks to a dedicated web scraping service provider like ScrapeHero.

We are a fully managed enterprise-grade web scraping service and provide custom services, from large-scale web crawling to alternative data for extensive financial analysis.

Frequently Asked Questions

Yes, you can run a website on AWS Lambda when you pair it with a service such as Amazon API Gateway to handle HTTP requests.

Serverless functions for web scraping are cloud-based functions that can run scrapers without a dedicated server infrastructure.

AWS Lambda has several limitations. Its maximum execution time is 15 minutes, and it also has memory limits and cold start delays for new requests.

You can package the Selenium WebDriver and other binaries, like ChromeDriver, with your Lambda function to set up AWS Lambda for web scraping with Python and Selenium.

You must also use a headless browser configuration to execute the scraping tasks.
