The web scraping market is projected to reach $3.52 billion by 2037.

Companies now see data as their most important tool for success.

However, this rapid growth has also increased the risk of outsourcing web scraping to unreliable providers. Good providers deliver solutions that help businesses grow, while bad ones make big promises and then cause expensive problems.

Selecting the wrong web scraping partner can put your company at risk. You could face legal trouble, data leaks, operational disruptions, and missed opportunities potentially worth millions of dollars.

Knowing what to look for helps you tell risky vendors from reliable partners, so you can choose a provider that protects your business rather than one that exposes it. This article outlines 12 key warning signs that can help protect you from the risks of outsourcing web scraping.

What Are the Biggest Risks of Outsourcing Web Scraping Services?

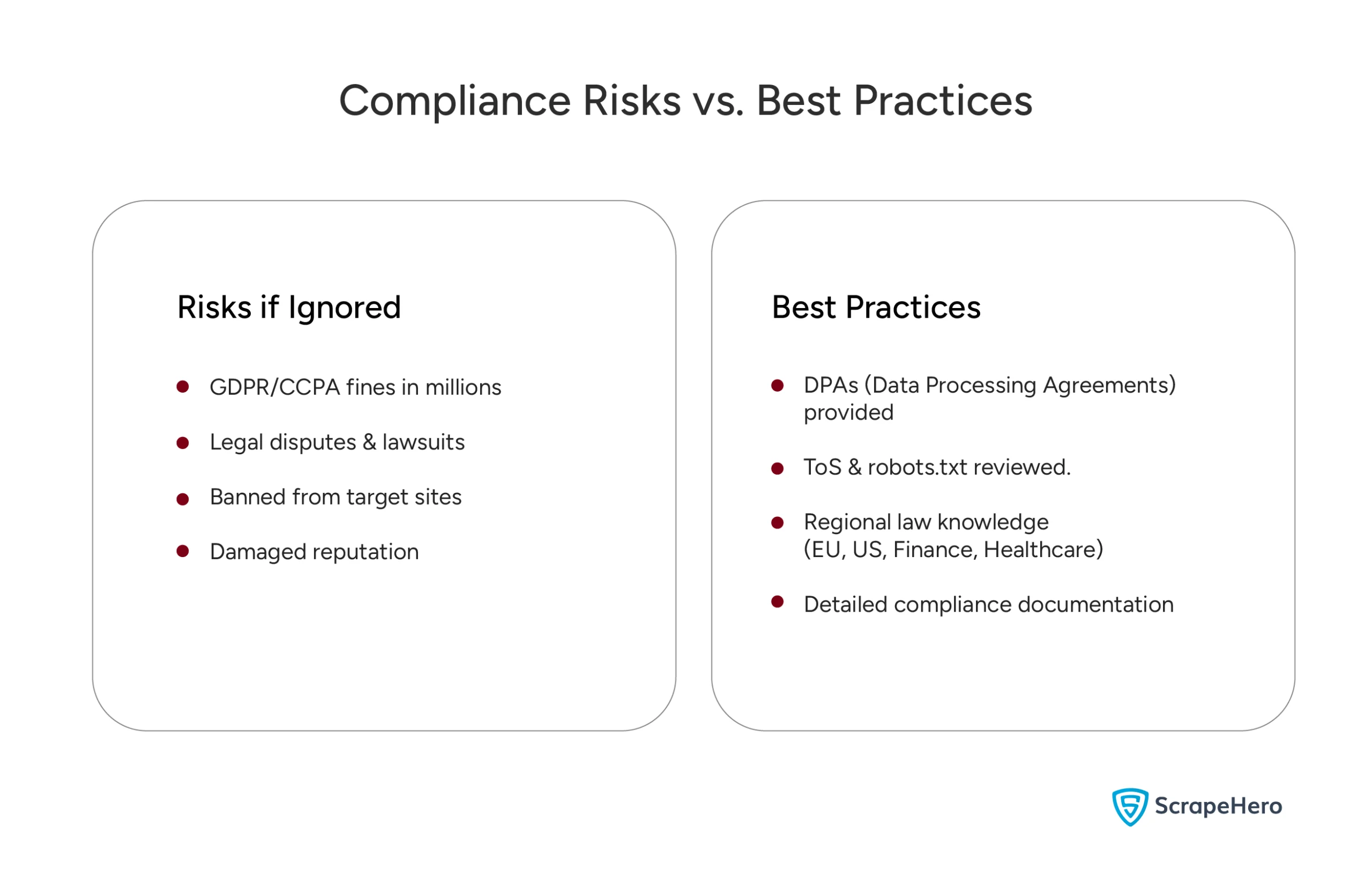

The major risks include legal compliance violations, data security breaches, poor data quality, and service disruptions. Companies working with inadequate providers can face GDPR fines, copyright lawsuits, and the fallout from data breaches.

Technical failures also break data pipelines, and hidden costs can inflate your budget.

Understanding these risks in detail is crucial before you select any web scraping partner.

1. Lack of Legal and Compliance Expertise

No warning sign is more significant than a provider who struggles with the complex laws surrounding web scraping. The rules have changed significantly: privacy laws such as GDPR and CCPA impose requirements that many services overlook. Be wary of any provider that cannot clearly explain its compliance plan.

In contrast, reputable providers supply comprehensive documentation covering robots.txt rules, terms-of-service reviews, and privacy-law compliance. They also offer Data Processing Agreements (DPAs) as standard practice.

Above all, avoid providers that ignore legal compliance or dismiss web scraping as a “legal gray area.” Expert providers understand how laws differ by country and are familiar with the rules governing specific industries, such as healthcare and finance.

2. Inadequate Infrastructure and Scalability Planning

Enterprise-scale web scraping requires robust systems that can handle vast amounts of data while maintaining consistent performance. Unfortunately, many providers rely on setups that break under heavy load.

Warning signs of inadequate infrastructure include vague descriptions of the technical setup and a lack of concrete metrics. In particular, a provider that cannot supply architecture diagrams, explain its backup servers, or outline its data-handling steps likely has weak scraping systems.

Similarly, the absence of a written disaster recovery plan or of clear recovery-time targets should immediately disqualify a provider. Top-tier providers, by contrast, run distributed infrastructure across multiple data centers, with automatic failover and documented business continuity plans.

3. Weak Data Quality and Validation Processes

Raw scraped data is often messy and inconsistent, and how a provider handles data quality is what separates beginners from experts.

Providers who can’t explain their data-cleaning rules pose a considerable risk. Expert services apply standardized normalization steps, use robust methods to detect duplicates, and cross-verify data against multiple sources. They also commit to accuracy targets and closely track error rates.

Providers without automated monitoring for site changes will also deliver broken data feeds. Because websites change frequently, expert services use real-time monitoring with failure alerts and automated remediation.
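To make “data-cleaning rules” concrete, here is a minimal Python sketch of the kind of normalization, validation, and deduplication step you might ask a provider to walk you through. It assumes a hypothetical product feed with `title`, `price`, and `url` fields; the field names, the URL-based duplicate key, and the error-rate definition are illustrative choices, not any specific provider’s method.

```python
import re
from dataclasses import dataclass

# Hypothetical schema for illustration; real feeds will have different fields.
REQUIRED_FIELDS = ("title", "price", "url")


@dataclass
class QualityReport:
    clean: list
    rejected: list
    duplicates: int

    @property
    def error_rate(self) -> float:
        """Share of all input records that failed validation."""
        total = len(self.clean) + len(self.rejected) + self.duplicates
        return len(self.rejected) / total if total else 0.0


def normalize(record: dict) -> dict:
    """Collapse whitespace in string fields and parse price strings to floats."""
    out = {k: re.sub(r"\s+", " ", v).strip() if isinstance(v, str) else v
           for k, v in record.items()}
    if isinstance(out.get("price"), str):
        digits = re.sub(r"[^\d.]", "", out["price"])  # "$1,299.00" -> "1299.00"
        try:
            out["price"] = float(digits)
        except ValueError:  # empty or malformed, e.g. "N/A"
            out["price"] = None
    return out


def is_valid(record: dict) -> bool:
    """Reject records with missing required fields or a negative price."""
    if any(record.get(f) in (None, "") for f in REQUIRED_FIELDS):
        return False
    return record["price"] >= 0


def dedup_key(record: dict) -> str:
    """Simplified duplicate key: lowercased URL without a trailing slash."""
    return record["url"].lower().rstrip("/")


def clean_batch(records: list) -> QualityReport:
    seen = set()
    clean, rejected, duplicates = [], [], 0
    for raw in records:
        rec = normalize(raw)
        if not is_valid(rec):
            rejected.append(rec)
            continue
        key = dedup_key(rec)
        if key in seen:
            duplicates += 1
            continue
        seen.add(key)
        clean.append(rec)
    return QualityReport(clean, rejected, duplicates)


batch = [
    {"title": " Widget  A ", "price": "$1,299.00", "url": "https://shop.example/a/"},
    {"title": "Widget A", "price": "$1,299.00", "url": "https://shop.example/A"},
    {"title": "", "price": "$5", "url": "https://shop.example/b"},
]
report = clean_batch(batch)
print(len(report.clean), len(report.rejected), report.duplicates)  # 1 1 1
```

Lowercasing the whole URL is a deliberate simplification here; URL paths can be case-sensitive, so a production pipeline would normalize keys more carefully and often compare several fields, not just the URL.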
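One common way to catch a silent breakage after a site redesign is to watch how often each field comes back populated. The sketch below uses a hypothetical `FieldMonitor` with a per-field baseline fill rate and a 20-point tolerance, both illustrative values. It covers only the alerting half; the automated fix steps a provider would trigger on an alert are out of scope.

```python
from dataclasses import dataclass, field


@dataclass
class FieldMonitor:
    """Flags fields whose fill rate drops well below a baseline.

    A sudden drop (for example, 'price' going from 98% populated to 0%)
    is a typical symptom of a layout change breaking an extractor.
    """
    baseline: dict                 # hypothetical: field name -> expected fill rate (0..1)
    tolerance: float = 0.2         # illustrative: allowed absolute drop before alerting
    alerts: list = field(default_factory=list)

    def check(self, records: list) -> list:
        """Return new alert messages for this run; an empty run counts as 0% for every field."""
        n = len(records)
        new_alerts = []
        for name, expected in self.baseline.items():
            filled = sum(1 for r in records if r.get(name) not in (None, ""))
            rate = filled / n if n else 0.0
            if expected - rate > self.tolerance:
                new_alerts.append(f"{name}: fill rate {rate:.0%} vs baseline {expected:.0%}")
        self.alerts.extend(new_alerts)
        return new_alerts


monitor = FieldMonitor(baseline={"title": 1.0, "price": 0.98})
run = [{"title": "A", "price": None}, {"title": "B", "price": ""}]
for alert in monitor.check(run):
    print("ALERT", alert)  # in practice, route to email, chat, or paging
```

Fill rates are only one signal; checking value formats (for example, that prices still parse as numbers) catches breakages where a field is populated but wrong.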

4. Limited Security Measures and Data Protection

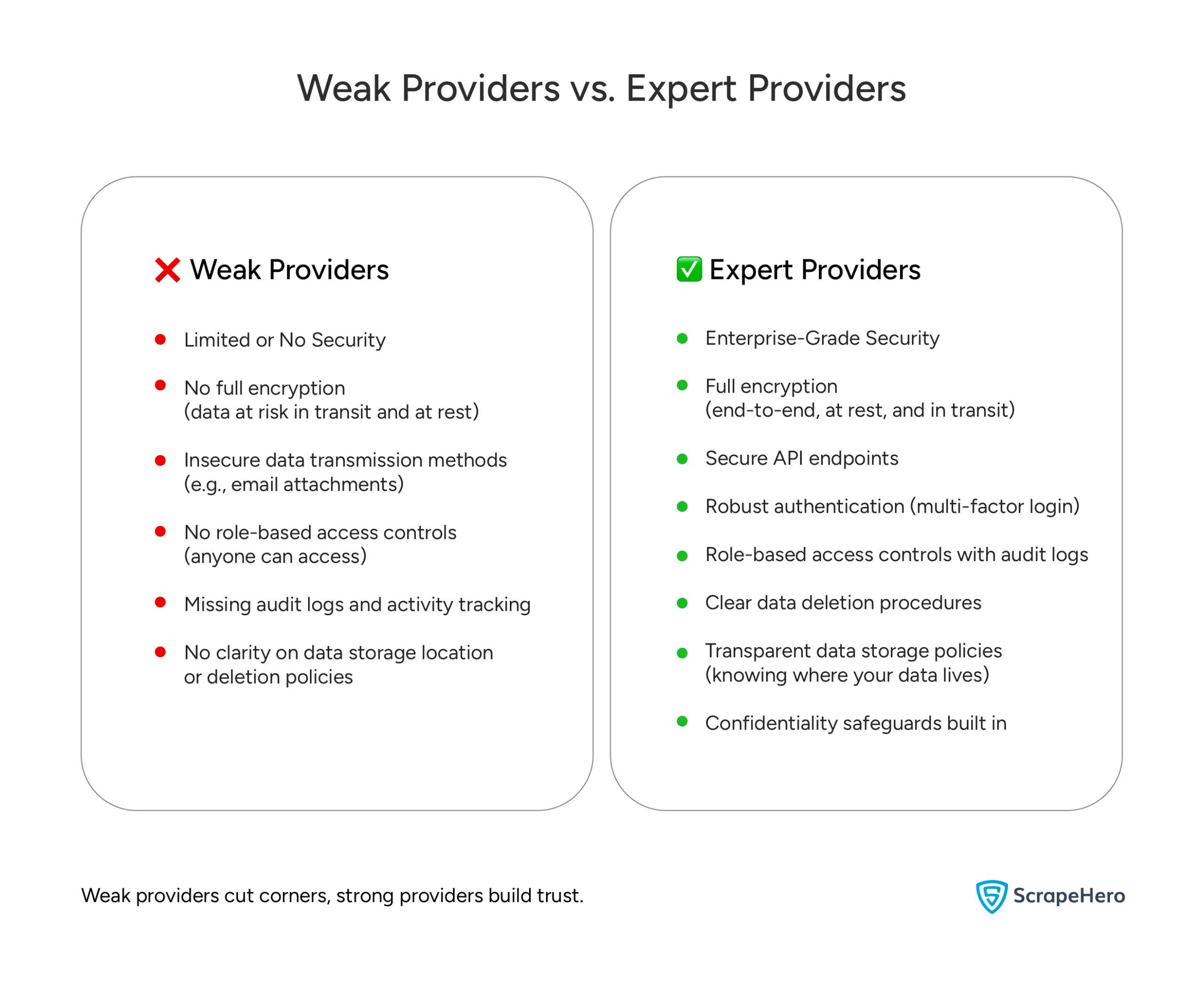

Enterprise data requires strong security, but many providers treat security as an optional expense.

Key warning signs of poor data security include missing encryption and insecure data transmission. Expert providers, in comparison, encrypt data in transit and at rest, use secure API endpoints, and enforce robust authentication with multi-factor verification.

Furthermore, providers without role-based access controls, audit logs, and granular user management cannot guarantee data safety. Reputable services, by contrast, have clear data-deletion procedures, specify where data is stored, and offer confidentiality measures as needed.

5. Unrealistic Pricing Models and Hidden Costs

Cheap web scraping providers often hide their true costs or deliver subpar results. Reliable web scraping requires significant infrastructure investment and skilled teams, both of which are expensive. Be cautious of low prices that come without clearly defined service limits.

Reputable providers, in contrast, publish clear pricing breakdowns of their operational costs and state setup costs and additional fees up front.

Expert providers also demonstrate return on investment with real-world examples, showing the total cost advantage over building in-house based on a comprehensive assessment of the resources and skills required.

6. Poor Communication and Project Management

Providers without established communication guidelines, dedicated project managers, or transparent problem-solving processes will frustrate your team and slow projects down. In contrast, expert providers offer comprehensive project tracking, detailed progress reports, and thorough documentation.

Signs of poor communication and project management include no real-time dashboards, no regular status updates, and rigid processes. Services that cannot adopt flexible methodologies or manage change effectively will fail to meet the needs of large companies.

These web scraping red flags are often overlooked but can have a severe impact on project success.

7. Insufficient Technical Expertise

Modern web scraping requires advanced skills that many providers lack. JavaScript-heavy sites with robust anti-scraping protection demand top-notch expertise.

Technical risks include limited experience with single-page applications, weak handling of anti-scraping measures, and poor CAPTCHA handling. Moreover, providers that rely on basic, uncustomized tools can’t cope with modern web architectures.

A lack of AI-assisted extraction, browser automation, or machine learning integration also reveals technical limitations. Expert providers, in contrast, support a wide range of data formats, real-time data delivery, and easy integration with business intelligence tools. Identifying these web scraping service red flags early can save your project from technical failure.

8. Lack of Industry-Specific Experience

Generic solutions rarely fit industry-specific needs. Providers without industry expertise cannot meet your specialized data requirements, regulatory obligations, or the custom approaches your use case demands.

Here are a few industry-specific risks:

E-commerce & Retail:

- Can’t track inventory in real time across sites

- Misses live price changes and lacks competitor monitoring

- Poor product listing extraction with missing fields

- No experience with major platforms (Amazon, Shopify, etc.)

Real Estate:

- Unfamiliar with MLS rules, requirements, and restrictions

- Can’t extract property photos, floor plans, or listing details

- Lacks knowledge of local real estate regulations

- No experience with major platforms (Zillow, Realtor.com, etc.)

Financial Services:

- No knowledge of SEC, FINRA, or banking regulations

- Can’t handle financial data APIs correctly

- Lacks experience with trading platforms and market data

- No understanding of data licensing requirements for financial information

Travel & Hospitality:

- Poor handling of live pricing and availability data

- Can’t scrape booking sites with complex, multi-step user flows

- Lacks experience with travel search engines

- No knowledge of airline and hotel data usage restrictions

One-size-fits-all approaches cannot provide specialized field mapping and validation. The best providers, meanwhile, offer insight into your industry and its competitors, along with data-driven recommendations that help you achieve your business goals.

9. Inadequate Support and Maintenance Services

Web scraping requires ongoing maintenance because sites change frequently. Limited support hours, no dedicated technical support team, or slow response times will leave you stranded at critical moments.

Incomplete documentation, inadequate training, and weak handover procedures also indicate unprofessional work.

Expert providers, on the other hand, don’t just fix problems after they are reported. They implement proactive monitoring with automated alerts and safeguards that keep failures from affecting your data pipeline.

10. Questionable References and Track Record

Providers that cannot share verifiable case studies or put you in touch with current clients likely lack significant industry experience. Likewise, a lack of clear information about the team, company history, or financial stability raises concerns about the company’s long-term viability.

Negative online reviews, absence from industry networks, or a lack of industry recognition may also suggest that a provider does not meet the standards of larger companies. These web scraping service red flags often point to deeper operational issues.

11. Inflexible Contract and Exit Strategies

Complex terms, lengthy contracts with no pilot option, or missing exit clauses signal a lack of flexibility that could hurt your business. Similarly, vague data ownership rights or difficult data export processes can lock you into unfavorable terms.

Expert providers, by contrast, offer clear exit procedures, comprehensive data migration guidelines, and assistance with the transition.

12. Lack of Innovation and Future-Proofing Capabilities

Providers that lack a clear technology roadmap, investment in AI/ML capabilities, or an ongoing innovation budget often deliver outdated solutions that quickly fall behind the evolving needs of enterprises. They are also often unprepared for emerging privacy regulations and the legal risks that come with them.

Likewise, a provider that cannot demonstrate solid integrations with leading data platforms or business intelligence tools is poorly aligned with the market, which limits the strategic value of the data you receive.

Understanding these web scraping service risks helps businesses make informed decisions about their data partners.

Your Action Plan

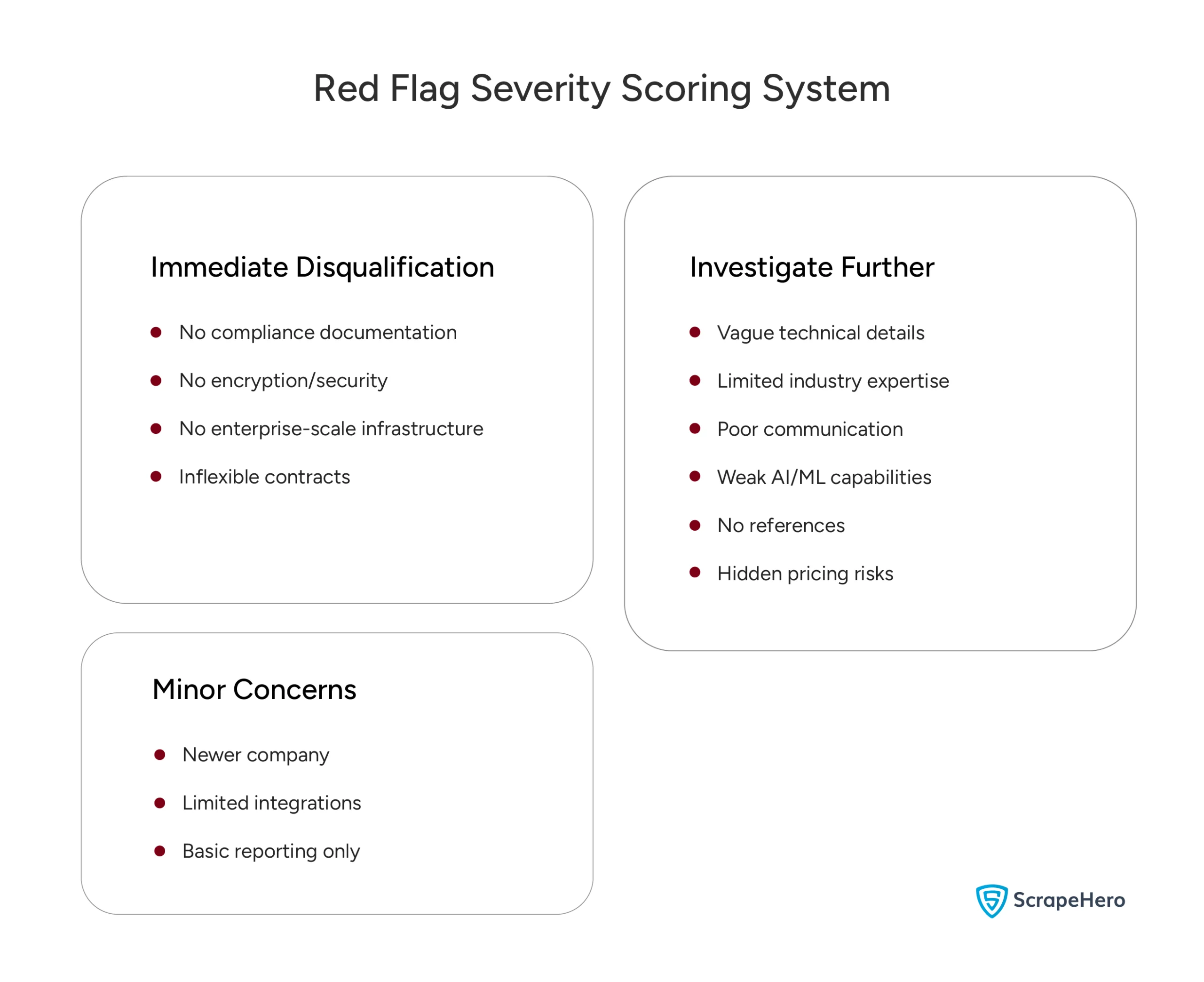

Start with a structured, step-by-step review that includes technical and compliance checks, and assign each finding a warning score so you can flag high-priority and medium-level concerns.

Here’s a red flag severity scoring system:

Immediate Disqualification:

- Cannot provide compliance documentation

- Missing data encryption or security protocols

- Unable to demonstrate enterprise-scale infrastructure

- Refuses flexible contract terms

Investigate Further:

- Vague technical explanations without specifics

- Limited industry-specific experience

- Poor communication or slow response times during evaluation

- Missing AI/ML or advanced technical capabilities

- Unclear pricing structure with potential hidden costs

- No verifiable enterprise client references

Minor Concerns:

- Newer company without an extensive track record

- Limited integration options with business tools

- Basic reporting capabilities without advanced analytics
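If you want to apply this scoring system consistently across several vendors, a small checklist script can help. In this sketch the flag labels are hypothetical shorthand for the list items above, and the 3/2/1 point weights are an assumption used only to rank vendors within the same tier; the verdict itself follows the most severe flag observed.

```python
from enum import Enum


class Severity(Enum):
    DISQUALIFY = 3   # illustrative weights, used only for ranking
    INVESTIGATE = 2
    MINOR = 1


# Hypothetical shorthand labels for the severity tiers listed above.
RED_FLAGS = {
    "no_compliance_docs": Severity.DISQUALIFY,
    "no_encryption": Severity.DISQUALIFY,
    "no_enterprise_infra": Severity.DISQUALIFY,
    "rigid_contract": Severity.DISQUALIFY,
    "vague_technical_answers": Severity.INVESTIGATE,
    "limited_industry_experience": Severity.INVESTIGATE,
    "slow_communication": Severity.INVESTIGATE,
    "no_advanced_capabilities": Severity.INVESTIGATE,
    "unclear_pricing": Severity.INVESTIGATE,
    "no_enterprise_references": Severity.INVESTIGATE,
    "short_track_record": Severity.MINOR,
    "limited_integrations": Severity.MINOR,
    "basic_reporting": Severity.MINOR,
}


def assess(observed: set) -> tuple:
    """Return (verdict, score) for one vendor given the red flags observed."""
    unknown = observed - set(RED_FLAGS)
    if unknown:
        raise ValueError(f"unknown red flags: {sorted(unknown)}")
    tiers = [RED_FLAGS[flag] for flag in observed]
    score = sum(t.value for t in tiers)
    if Severity.DISQUALIFY in tiers:
        return "disqualify", score
    if Severity.INVESTIGATE in tiers:
        return "investigate further", score
    if tiers:
        return "minor concerns", score
    return "no red flags", score


print(assess({"unclear_pricing", "limited_integrations"}))  # ('investigate further', 3)
```

Keeping the verdict tied to the highest tier means a single disqualifying flag can never be outweighed by an otherwise clean record.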

Making the Right Choice with ScrapeHero

Ultimately, selecting a web scraping provider is a crucial decision that can significantly affect your competitive edge for years to come. Poor data quality leads to bad decisions, compliance failures create legal exposure, and unstable service halts operations.

By recognizing these risks of outsourcing web scraping early, you can avoid the common challenges that lead to project failures and financial losses.

At ScrapeHero, we protect our partners from the risks discussed in this article, with capabilities ranging from compliance and data security to advanced AI-powered scraping. With over a decade of experience, ScrapeHero is a trusted data partner for leading businesses across various industries in the US.

Ready to evaluate your web scraping options without risk? Contact us today, and our experts will show you how ScrapeHero’s web scraping services can accelerate your data strategy while protecting your team from the costly mistakes that derail major projects.

FAQs

Check the robots.txt file, terms of service, and privacy policy. Examine the website’s structure and test on a small scale. Make sure you have the necessary legal clearance where required and appropriate data storage in place.
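The robots.txt check can be automated with Python’s standard `urllib.robotparser` module. This sketch parses an example robots.txt instead of fetching a live one; the rules and the `ExampleBot/1.0` user agent are made up for illustration. It covers only robots.txt, so the terms-of-service and legal review still need a human.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration. Against a live site you would instead
# call rp.set_url("https://<site>/robots.txt") and rp.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been retrieved."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


rp = build_parser(ROBOTS_TXT)
agent = "ExampleBot/1.0"  # hypothetical user-agent string
for url in ("https://example.com/products/123", "https://example.com/checkout/cart"):
    print(url, "allowed" if rp.can_fetch(agent, url) else "disallowed")
print("crawl delay:", rp.crawl_delay(agent))  # seconds requested between requests, or None
```

Here the product page is allowed, the checkout path is disallowed, and the site asks for 5 seconds between requests.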

Respect server resources and avoid overloading websites. Don’t collect personal data without consent. Consider the impact on the website’s business model. Be transparent about data collection and follow fair use principles.
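Respecting server resources usually means spacing out requests to the same site. Below is a minimal per-host throttle, assuming a fixed minimum delay; the 2-second default is an arbitrary example, and a site’s published Crawl-delay is a better value when one exists. The `PoliteThrottle` name is hypothetical, and the clock and sleep functions are injectable so the behavior can be tested without real waiting.

```python
import time
from urllib.parse import urlsplit


class PoliteThrottle:
    """Enforces a minimum delay between requests to the same host."""

    def __init__(self, min_delay: float = 2.0, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay      # assumed default, in seconds
        self._clock = clock
        self._sleep = sleep
        self._last = {}                 # host -> time of the last request

    def wait(self, url: str) -> float:
        """Block until it is polite to contact url's host; return seconds waited."""
        host = urlsplit(url).netloc
        now = self._clock()
        last = self._last.get(host)
        waited = 0.0
        if last is not None:
            waited = max(0.0, self.min_delay - (now - last))
            if waited:
                self._sleep(waited)
        self._last[host] = now + waited  # when this request is actually sent
        return waited


if __name__ == "__main__":
    throttle = PoliteThrottle(min_delay=1.0)
    for url in ["https://example.com/a", "https://example.com/b"]:
        waited = throttle.wait(url)
        print(f"{url}: waited {waited:.1f}s")  # a real fetch would go here
```

Tracking delays per host lets a crawler work on several sites in parallel without hammering any single one.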

Common legal issues include copyright infringement, terms-of-service violations, and breaches of data protection laws. Computer fraud laws, contract claims, and other intellectual property concerns can also apply. Note that laws vary by country.