Public web data is easy to collect. You can access product listings, reviews, and search pages openly. But many high-value business signals live behind logins.

Platforms like LinkedIn, Instagram, and X require an active account. You need one to see profiles, posts, comments, engagement metrics, and marketplace activity. The data is visible, but it’s not public.

This is where enterprise teams run into problems. Manual tracking doesn’t scale. Native exports are shallow. APIs come with access limits, high costs, or long approval cycles. Yet decisions around brand, demand, and competition still depend on these signals.

The real challenge isn’t just about access. It’s about knowing how to collect login-gated data responsibly through ethical web scraping practices. You need to do this without triggering legal exposure, account bans, or operational risk.

This article explains how enterprises should approach data collection from login-restricted platforms. We’ll cover the legal, ethical, and operational considerations you need to work within closed environments.

Go the hassle-free route with ScrapeHero

Why worry about expensive infrastructure, resource allocation and complex websites when ScrapeHero can scrape for you at a fraction of the cost?

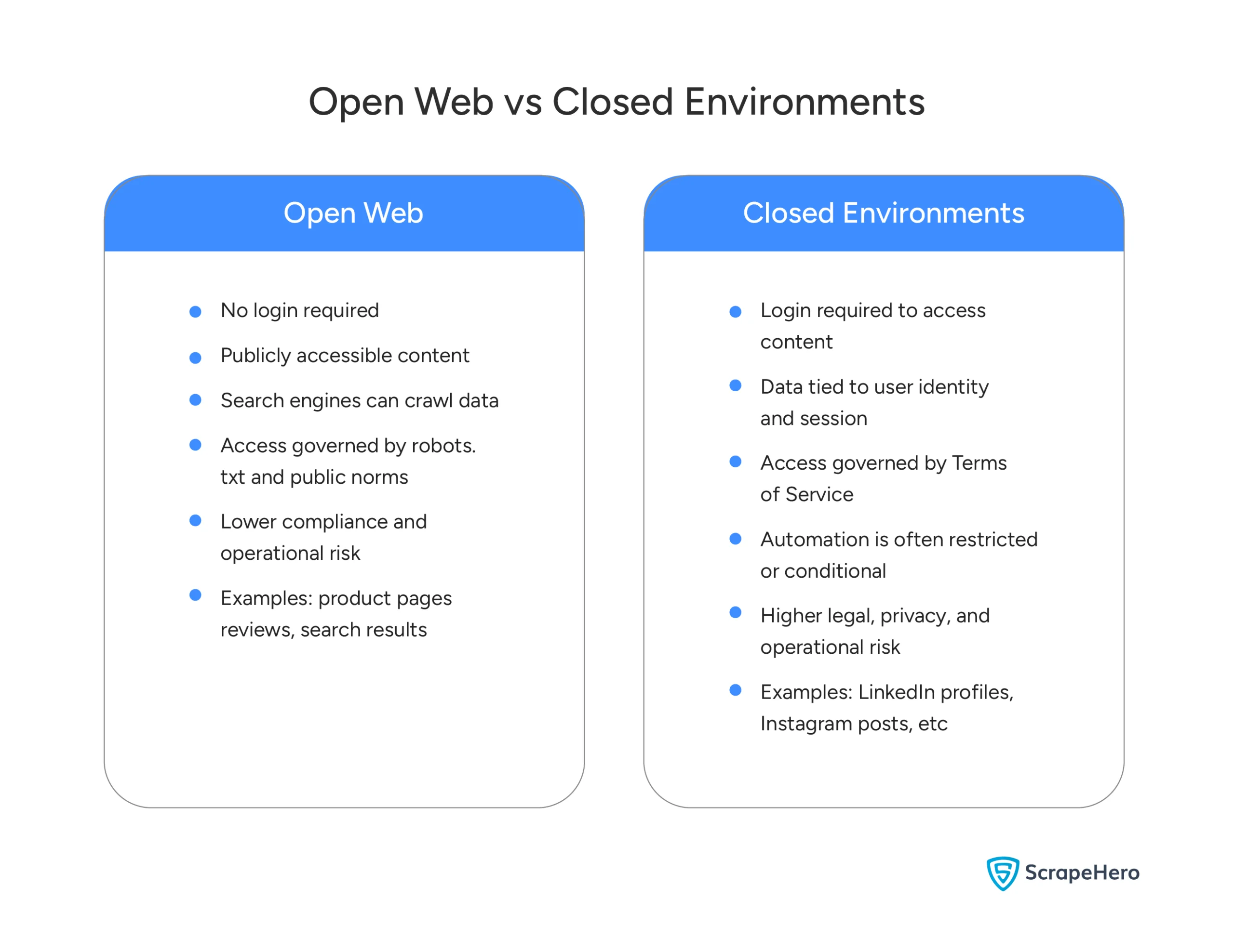

What “Closed Environments” Means in Web Scraping

Understanding what makes an environment “closed” is the first step. It helps you collect this data responsibly without crossing contractual or compliance lines.

A closed environment is any platform where you need to log in to access data. That’s it. No hidden systems. No confidential databases. Platforms like LinkedIn, Instagram, X, Facebook, and many B2B portals fall into this category. You can view profiles, posts, engagement metrics, ads, or marketplace activity only after you log in as a user. Your access is tied to an identity, a session, and the platform’s rules.

This is different from the open web. On the open web, anyone can access content without an account. Search engines can crawl it. There’s no login barrier. In closed environments, you can see the data, but there are conditions. You’re allowed to view the data as a user. But how you can collect, store, and reuse that data is governed by terms of service and usage policies.

This distinction matters for enterprises. Many of today’s most important business questions are answered inside login-gated platforms. This includes brand perception, competitor hiring, and influencer reach.

Why Enterprises Scrape Closed Environments

Enterprises don’t look at closed environments out of curiosity. They do it because critical business signals live there. But many platforms lack APIs that support real enterprise use cases. Others restrict access, cap volume, or strip historical depth. When data is only available through logged-in interfaces, teams fall back to manual collection. This quickly fails at scale.

That creates three common pressures.

- Decisions require current data

- Manual workflows break at scale

- Visibility is fragmented across teams

1. Decisions require current data

Pricing moves, competitor actions, hiring trends, and brand conversations change daily. Manual exports arrive after the window to act has closed.

2. Manual workflows break at scale

Teams copy data into spreadsheets, miss updates, and introduce errors. The real cost isn’t just time. It’s decisions made on partial or outdated signals.

3. Visibility is fragmented across teams

Sales, marketing, compliance, and strategy each track the same platforms in isolation. No one sees the full picture. This drives enterprises toward direct access to login-gated data.

The goal is simple: replace guesswork with consistent, repeatable access to the data the business already relies on.

Why Ethical Scraping Matters More in Closed Ecosystems

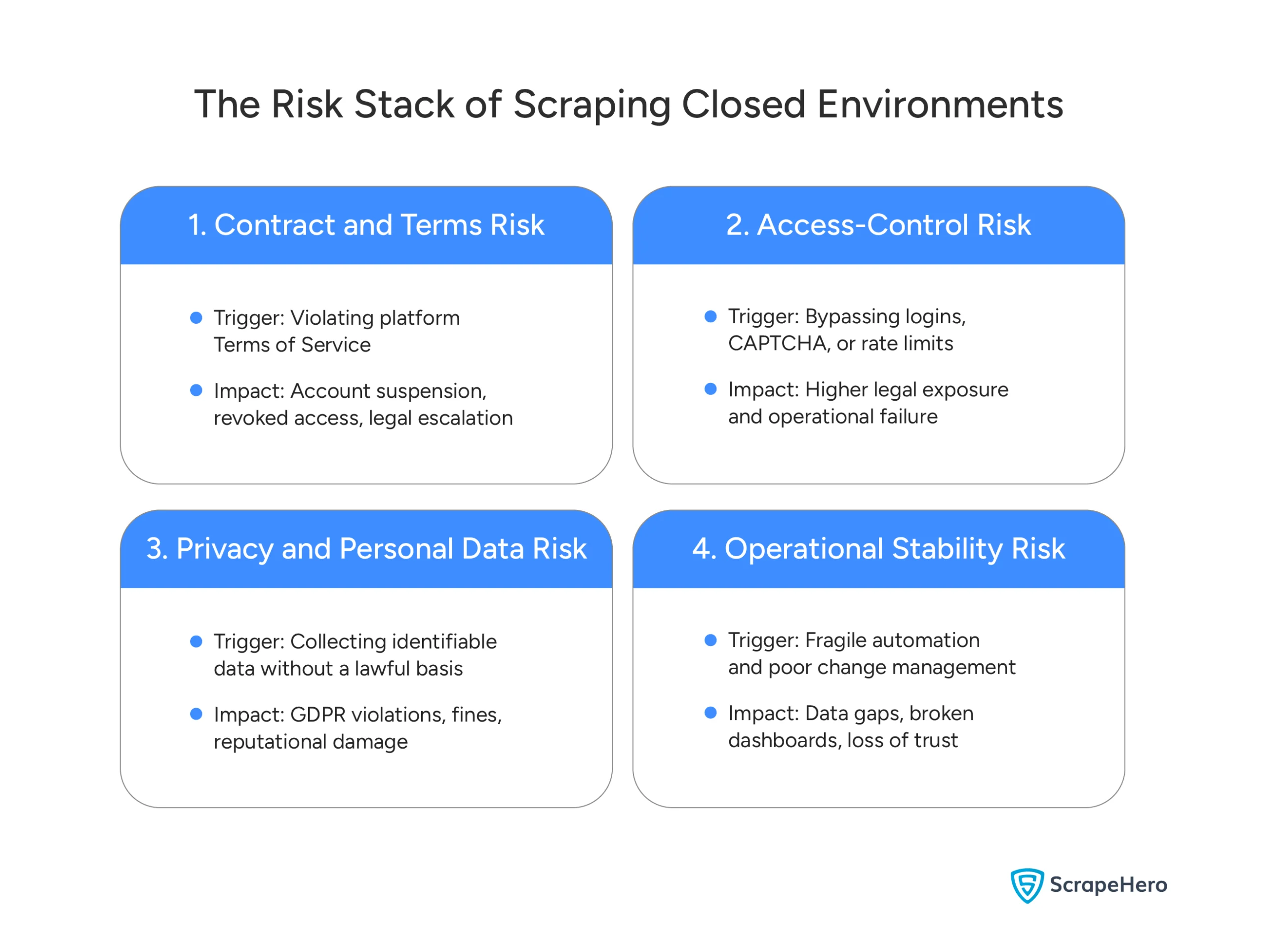

For business leaders, clarity matters. Collecting data from login-gated platforms isn’t inherently risky. Risk comes from how the data is accessed, handled, and governed. Many teams assume the danger lies in the data itself. But most issues stem from ignored platform rules, weak controls, or poor execution. The same dataset can be low-risk or high-risk depending on the approach taken.

Let’s break down the four specific risk areas you should understand before moving forward.

- Terms of Service and Contract Risk

- Access-Control and Anti-Circumvention Risk

- Privacy and Personal Data Risk

- Operational Risk

1. Terms of Service and Contract Risk

When you log into platforms like LinkedIn or Instagram, you agree to their terms of service. This matters more than most teams realize. For closed environments, access is governed by contractual rules, not just visibility. Even if you can see the data, automated collection may be restricted by these terms.

Risk often arises not from “illegal scraping” but from breaching these terms. If automation violates usage limits or data-sharing clauses, the issue is contractual, not technical.

For enterprises, this can lead to account suspension, loss of access, or legal escalation. It disrupts teams that rely on these platforms for sales, hiring, or marketing intelligence.

The solution is awareness, not avoidance. Before you collect data, understand what the terms allow and restrict. This review should happen before data extraction, not after something breaks.

2. Access-Control and Anti-Circumvention Risk

Closed environments rely on access controls such as logins, CAPTCHAs, and rate limits. These controls are deliberate signals of expected user behavior, not random obstacles. Ignoring them increases risk.

The key distinction is intent. Collecting data through a standard, authenticated session is fundamentally different from bypassing safeguards designed to block automation. Bypassing these controls creates much higher legal and operational exposure.
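One way to make "respect the controls" concrete is to build it into the collector itself: throttle requests to a fixed budget, and treat a challenge as a signal to stop rather than something to solve or route around. This is a minimal sketch, not any platform's documented behavior; the rate values, the status codes treated as challenges, the "captcha" text check, and the `ChallengeEncountered` name are all hypothetical assumptions.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


class ChallengeEncountered(Exception):
    """Raised when the platform shows a CAPTCHA or login challenge; the job halts instead of bypassing it."""


@dataclass
class TokenBucket:
    rate_per_sec: float  # sustained request rate (assumed; set it from the platform's published limits)
    capacity: int        # largest burst allowed
    clock: Callable[[], float] = time.monotonic
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = float(self.capacity)
        self.last = self.clock()

    def try_acquire(self) -> bool:
        """Return True if a request may be sent now, consuming one token."""
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate_per_sec)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def check_response(status: int, body: str) -> None:
    # Hypothetical heuristic: these responses mean "stop and involve a human", not "try harder".
    if status in (401, 403) or "captcha" in body.lower():
        raise ChallengeEncountered(f"access control hit (status {status}); halting for review")
```

The design choice is that the only reaction to a challenge is an exception that ends the run, so no code path exists for circumventing it.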

3. Privacy and Personal Data Risk

Closed platforms often contain personal user data. This brings privacy regulations like GDPR into scope, especially in the EU. If data can identify an individual, you need a lawful basis for collecting and using it. You also need controls on storage, access, and retention. Visibility alone doesn’t justify collection.

Risk often increases when teams collect more data than necessary. The fix is governance. Limit what you collect, secure it properly, and define clear retention policies. When handled correctly, data from closed environments can support decisions without creating compliance exposure.
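As one illustration of "secure it properly", direct identifiers such as profile handles can be replaced with keyed tokens before data reaches analysts. The sketch below uses HMAC-SHA256 from Python's standard library; the record field names are hypothetical. Note that pseudonymized data generally still counts as personal data under GDPR, so this reduces exposure but does not remove the need for a lawful basis.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a stable keyed token.

    The same input and key always give the same token, so records can still be
    joined and counted without storing the raw handle downstream.
    """
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, key: bytes) -> dict:
    # Hypothetical field names: keep only what the analysis needs, tokenize the author,
    # and drop everything else (bios, locations, free text).
    # The key should come from a secret store, never from source code.
    return {
        "author_token": pseudonymize(record["author_handle"], key),
        "post_date": record["post_date"],
        "engagement": record["engagement"],
    }
```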

4. Operational Risk

Even after legal and privacy risks are addressed, operational risk remains. Closed environments evolve constantly, and data systems must adapt in real time.

Why systems break

Closed platforms frequently change how access works. Login flows update, sessions expire, and rate limits shift, causing previously stable automation to fail without warning.

- Login flows change without notice

- Sessions expire or reset unexpectedly

- Rate limits tighten or fluctuate

- What works today may fail tomorrow
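When rate limits tighten, the safest automated response is to slow down, not push through. A common pattern is to honor the server's `Retry-After` header when present and otherwise back off exponentially with random jitter, then stop after a few attempts. This is a sketch under stated assumptions: the base delay, cap, and attempt limit are illustrative, and the HTTP-date form of `Retry-After` is deliberately not parsed here.

```python
import random
from typing import Optional


def next_delay(attempt: int, retry_after: Optional[str] = None,
               base: float = 2.0, cap: float = 300.0,
               rng: Optional[random.Random] = None) -> float:
    """Seconds to wait before retry `attempt` (0-based) after a 429 or 503 response."""
    if retry_after is not None:
        try:
            # Honor the server's Retry-After value when it is given in seconds.
            return min(cap, max(0.0, float(retry_after)))
        except ValueError:
            pass  # HTTP-date form is not handled in this sketch; fall back to backoff
    if rng is None:
        rng = random.Random()
    ceiling = min(cap, base * (2 ** attempt))
    # "Full jitter": a random delay in [0, ceiling] keeps workers from retrying in sync.
    return rng.uniform(0.0, ceiling)


def should_give_up(attempt: int, max_attempts: int = 5) -> bool:
    # Past this point, stop and alert a human instead of continuing to retry.
    return attempt >= max_attempts
```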

What failure looks like

When data collection is fragile, failures surface quickly and visibly. These breakdowns interrupt access and erode confidence across teams.

- Accounts get locked or restricted

- Data gaps appear in pipelines

- Dashboards stop updating

- Teams revert to manual work
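Data gaps are easier to catch when the pipeline checks for them itself rather than waiting for a dashboard to go stale. A minimal sketch, assuming each successful daily collection run is recorded by date (the daily granularity is an assumption):

```python
from datetime import date, timedelta
from typing import Iterable, List


def find_gaps(collected: Iterable[date], start: date, end: date) -> List[date]:
    """Return every day in [start, end] that has no successful collection run."""
    seen = set(collected)
    gaps = []
    day = start
    while day <= end:
        if day not in seen:
            gaps.append(day)
        day += timedelta(days=1)
    return gaps
```

Running a check like this after each job turns a silent gap into an explicit, dated alert that analysts can account for.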

The hidden cost

Operational failure consumes time and damages trust. The impact extends beyond engineering into analytics and leadership decision-making.

- Engineers spend time fixing breakages instead of improving systems

- Analysts question data accuracy and completeness

- Leadership doubts the reliability of insights

- Confidence in automation declines

What stability requires

Stability is not accidental. It requires disciplined operations, proactive oversight, and clear ownership.

- Continuous monitoring of access, sessions, and uptime

- Fallback plans for platform changes

- Defined ownership for maintenance and change management

For enterprises, operational discipline is what separates reliable data pipelines from systems that demand constant repair.
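Continuous monitoring and defined ownership can be combined in one simple check: every source records when it last returned valid data, along with who is accountable for fixing it. The sketch below is illustrative; the staleness thresholds, source names, and owner labels are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass(frozen=True)
class SourceStatus:
    name: str                 # hypothetical label, e.g. "competitor-company-pages"
    owner: str                # team or person accountable for maintenance
    last_success: datetime    # last run that returned valid data (timezone-aware)
    max_staleness: timedelta  # how old the data may get before the owner is notified


def stale_sources(sources: List[SourceStatus], now: Optional[datetime] = None) -> List[str]:
    """Return one alert message per source whose data is older than allowed."""
    if now is None:
        now = datetime.now(timezone.utc)
    alerts = []
    for s in sources:
        age = now - s.last_success
        if age > s.max_staleness:
            hours = age.total_seconds() / 3600
            alerts.append(f"{s.name}: no fresh data for {hours:.1f}h -> notify {s.owner}")
    return alerts
```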

Safer Alternatives to Scraping Closed Environments

Scraping is a powerful tool, but it shouldn’t always be the starting point for closed environments. In many cases, safer and more stable access paths exist. Identifying them early reduces long-term risk without sacrificing insight.

- Start with Official APIs

- Leverage Licensed Data and Partnerships

- Combine First-Party with Second-Party Data

1. Start with Official APIs

Begin with the most secure and sanctioned method. Official APIs are built for automation. They come with documented rate limits and offer predictable, programmatic access. While coverage may not be perfect, the compliance and legal risks are much lower.

Key benefits: Designed for scale, clear terms of use, and direct platform support.

2. Leverage Licensed Data and Partnerships

When APIs are limited, turn to formal commercial agreements. Many platforms and data providers offer licensed feeds or partnership programs for enterprise use. This path is often more efficient and cost-effective than maintaining brittle, in-house scraping solutions.

Consider:

- Licensed Data Feeds: Legally purchased access to paywalled or restricted data.

- Research Partnerships: Direct relationships with providers for curated data.

3. Combine First-Party with Second-Party Data

Get the most value from the data you already own. Enriched with second-party data, which trusted partners share with you under an agreement, your first-party data can often answer critical questions without requiring direct access to restricted systems.
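In practice, this enrichment is usually a join on a key both parties hold. A minimal sketch, assuming a shared company domain as the key (a hypothetical choice; your agreement defines what can be matched) and prefixing partner fields so their provenance stays visible:

```python
from typing import Dict, List


def enrich(first_party: List[dict], partner_rows: List[dict],
           key: str = "company_domain") -> List[dict]:
    """Left-join partner (second-party) attributes onto first-party records by a shared key."""
    partner_by_key: Dict[str, dict] = {row[key].lower(): row for row in partner_rows}
    enriched = []
    for rec in first_party:
        match = partner_by_key.get(rec[key].lower(), {})
        merged = dict(rec)
        for name, value in match.items():
            if name != key:
                # Prefix partner fields so downstream users know where each value came from.
                merged[f"partner_{name}"] = value
        enriched.append(merged)
    return enriched
```

Records without a partner match pass through unchanged, which keeps first-party coverage intact.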

But what happens when APIs, partnerships, and shared data still don’t provide the signals your business needs?

How to Safely Scrape Authenticated Data

When alternatives aren’t enough, governance becomes non-negotiable. Collecting data from login-gated platforms should never be an ad hoc decision made by a single team. Ethical web scraping in closed ecosystems needs structure, review, and accountability.

- Start with a Legal Review

- Document Business Purpose and Scope

- Enforce Strict Security Protocols

- Set Clear Retention and Deletion Rules

1. Start with a Legal Review

Confirm what the platform explicitly allows and restricts before any automation starts.

- Review terms of service, API agreements, and data usage policies

- Identify limits on automation, access, and data sharing

- Use this review as the foundation for compliant web scraping
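One way to make the review a real foundation rather than a document on a shelf is to have jobs refuse to start without it. This sketch assumes a hypothetical internal `LegalReview` record; the expiry date reflects an assumed policy that reviews are repeated because terms of service change.

```python
from dataclasses import dataclass
from datetime import date
from typing import FrozenSet


class ReviewRequired(Exception):
    pass


@dataclass(frozen=True)
class LegalReview:
    platform: str
    approved: bool
    reviewed_on: date
    valid_until: date              # assumed policy: reviews expire and are redone
    allowed_uses: FrozenSet[str]   # purposes the review actually covered
    automation_permitted: bool     # outcome of reading the ToS and API agreements


def assert_cleared(review: LegalReview, use: str, today: date) -> None:
    """Block automation unless a current, approved review covers this specific use."""
    if not review.approved or not review.automation_permitted:
        raise ReviewRequired(f"{review.platform}: automation not cleared by review")
    if today > review.valid_until:
        raise ReviewRequired(f"{review.platform}: review expired on {review.valid_until}")
    if use not in review.allowed_uses:
        raise ReviewRequired(f"{review.platform}: use '{use}' not covered by review")
```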

2. Document Business Purpose and Scope

Be explicit about why the data is needed and how it will be used.

- Clearly define the business objective

- Specify the exact data fields required

- Follow the principle of minimum necessary data collection
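A documented scope becomes enforceable when the pipeline uses it as an allowlist: fields not named in the scope are dropped before storage, and their names are reported so someone can decide whether the scope needs revisiting. A minimal sketch; the objective text and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class CollectionScope:
    objective: str                 # the documented business purpose
    allowed_fields: FrozenSet[str] # the exact fields that purpose requires


def apply_scope(record: Dict[str, Any], scope: CollectionScope) -> Tuple[Dict[str, Any], List[str]]:
    """Keep only in-scope fields; return the names of dropped fields for review."""
    kept = {k: v for k, v in record.items() if k in scope.allowed_fields}
    dropped = sorted(set(record) - scope.allowed_fields)
    return kept, dropped
```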

3. Enforce Strict Security Protocols

Treat access to login-gated data as a privileged operation.

- Restrict access to authorized personnel only

- Log all data access and usage for audit trails

- Securely manage credentials, tokens, and sessions to prevent leaks or misuse
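The three protocols above can be sketched together: credentials are read from the deployment environment rather than source code, secrets are redacted before they could reach a log, and every access attempt (allowed or denied) is written as a JSON line. The environment-variable name, user names, and dataset names are hypothetical; a production setup would typically use a dedicated secret manager.

```python
import json
import os
from datetime import datetime, timezone
from typing import Callable, Set


def load_credential(name: str) -> str:
    """Read a secret injected by the deployment (e.g. an env var), never from source code."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"credential {name} is not configured")
    return value


def redact(secret: str) -> str:
    # Show just enough to tell credentials apart in logs, never the full value.
    return secret[:2] + "***" if len(secret) > 4 else "***"


def record_access(sink: Callable[[str], None], user: str, authorized: Set[str],
                  action: str, dataset: str) -> None:
    """Log every access attempt as one JSON line, then deny unauthorized users."""
    allowed = user in authorized
    entry = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "dataset": dataset,
        "allowed": allowed,
    }
    sink(json.dumps(entry, sort_keys=True))
    if not allowed:
        raise PermissionError(f"{user} is not authorized for {dataset}")
```

Logging denied attempts as well as successful ones is what makes the trail useful in an audit.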

4. Set Clear Retention and Deletion Rules

Control how long data exists within your systems.

- Define retention timelines based on business need

- Establish secure deletion policies

- Reduce long-term legal and compliance exposure through lifecycle management
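Retention rules are easiest to enforce when they live next to the data as configuration, and every record is checked against them on a schedule. This sketch only decides what to keep and what to delete; the dataset names and day counts are hypothetical, and the deletion step itself depends on your storage system. Records from a dataset with no rule raise an error, so nothing is kept indefinitely by accident.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Tuple

# Hypothetical policy: days to keep each dataset, set by business need.
RETENTION_DAYS: Dict[str, int] = {"raw_pages": 30, "competitor_jobs": 365}


def split_by_retention(records: List[dict],
                       now: Optional[datetime] = None) -> Tuple[List[dict], List[dict]]:
    """Partition records into (keep, delete) using each dataset's retention window."""
    if now is None:
        now = datetime.now(timezone.utc)
    keep, delete = [], []
    for r in records:
        days = RETENTION_DAYS.get(r["dataset"])
        if days is None:
            # Fail loudly rather than silently keeping data with no rule.
            raise ValueError(f"no retention rule for dataset {r['dataset']!r}")
        if now - r["collected_at"] > timedelta(days=days):
            delete.append(r)
        else:
            keep.append(r)
    return keep, delete
```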

For enterprise leaders, governance is what turns a risky workaround into a controlled, auditable data operation.

When to Outsource Closed-Environment Data Collection

There comes a point where doing this in-house stops making sense. If you need data from multiple login-gated platforms, maintenance overhead grows fast. Internal teams spend more time managing sessions, handling breakages, and chasing data gaps than using the data itself.

Often, compliance is the tipping point. When privacy, access control, and auditability are non-negotiable, ad hoc scripts and shared credentials pose a risk. This is where specialized providers add real value.

For secure, compliant data collection, trust a managed web scraping service like ScrapeHero. A managed service helps you meet platform compliance requirements through responsible data scraping practices, including data minimization and secure delivery. Built-in change monitoring, quality checks, and comprehensive documentation keep the data reliable over time.

Beyond that, outsourcing also improves reliability. Dedicated teams track platform changes. They manage access responsibly. They deliver clean data through formats your systems already support.

The right partner doesn’t just collect data. They remove operational risk and protect your teams. They let you focus on decisions, not maintenance.

Final Thoughts

Closed environments offer some of the most valuable business signals available today. They also demand more discipline than the open web. The difference between a sound data strategy and a risky one comes down to intent, authorization, and control.

Platforms like LinkedIn or Instagram aren’t off-limits. But they are governed environments. Logging in changes the rules.

For enterprises, the safest path is clear. Use permissioned access where possible. Apply governance by default. Design for stability, not shortcuts. Done right, with ethical data extraction methods, closed-environment data becomes a reliable input for decision-making. Done poorly, it becomes a source of risk.

If your team is evaluating access to closed environments, ScrapeHero helps enterprises design ethical web scraping strategies without operational risk.

FAQs

Start by reviewing the platform’s terms of service and using official APIs or licensed data feeds whenever possible. For complex needs, partner with a professional managed web scraping service that implements strict governance controls, including legal review, data minimization, secure credential management, and clear retention policies.

Work with a professional web scraping provider that collects data through ethical practices. They ensure you only gather the minimum data needed for your specific business purpose while maintaining proper governance, legal oversight, and audit trails for all data access.

Use sanctioned access methods like official APIs, licensed data partnerships, or professionally managed web scraping services that respect platform terms of service. These providers ensure you have a lawful basis for collecting personal data, implement security protocols for credential management, and maintain documentation of your compliance framework.

Access data through official channels such as platform APIs, commercial data licensing agreements, or professionally managed web scraping services that comply with terms of service. These providers conduct legal reviews before automation begins, document your business purpose and scope, and implement governance controls to ensure ongoing compliance with privacy regulations and platform policies.