In web scraping projects, poor planning is often a greater threat than any technical challenge. You see this when teams jump in without a clear goal. Without a plan, teams might sign with the cheapest vendor or skip the service contract that holds the vendor to a deadline.

The result is usually the same: work stops, you have to fix things, and the data you receive is useless to your teams.

The good news is you can dodge all that noise with a concrete plan. When you draw the target early and set expectations that actually work, your project changes. This establishes the project as a consistent, measurable business activity rather than an uncertain technical experiment.

This article walks through 10 practical steps to scope your next web scraping project. It will help you align your teams, select the right partner, and deliver the exact data your business demands without operational disruptions.

Step 1: Start with the Outcome

Step 2: Write Down the Decisions

Step 3: Define the Exact Data You Need

Step 4: Choose One-Time or Regular Delivery

Step 5: Align Your Internal Teams

Step 6: Plan for Legal Compliance

Step 7: Pick the Right Vendor

Step 8: Define Service Contracts

Step 9: Plan the Data Delivery

Step 10: Set Up a Monitoring Process

Step 1. Start With the Outcome

The foundation of any successful web scraping project is a precise understanding of the business challenge it is designed to solve.

- Competitor Tracking: Are you trying to track every price your competitor lists?

- Catalog Mapping: Do you need to map product catalogs across fifty different stores?

- Market Audit: Are you auditing your market share for the quarter?

- Product Research: Are you collecting review quotes to reshape a product?

Each goal requires different data. The choice you make here shapes every step that follows.

What Counts as “Good Data”?

Good data is accurate, complete, consistent, and up to date.

Step 2. Write Down the Decisions Your Teams Need to Make

The next step is to systematically outline the specific decisions your teams will make with this data. Why is this important? Because once you grasp the decision, it becomes simple to define the specific data fields, the source websites, and the refresh rate you truly need.

How is this different from the Outcome?

The Outcome is your strategic goal. The Decisions are the tactical choices your teams will make using the data to achieve that goal.

This focus helps you avoid the single biggest mistake companies make: asking the vendor to “collect everything.” Collecting everything adds pointless cost. It unnecessarily delays data delivery and introduces a significant volume of irrelevant data that your teams will never use. When you keep your focus fixed on the outcome, you pay only for the data that actually pushes your business forward.

To see what this looks like in practice, here are examples of the specific decisions each outcome from Step 1 enables:

1. Outcome: Competitor Tracking

- Should you run a promotion on Product X this week?

- Which competitor’s sale should you match?

2. Outcome: Catalog Mapping

- Which retailer should you prioritize for your new product launch?

- Are your product descriptions and images consistent across all major online stores?

3. Outcome: Market Audit

- Did you gain or lose market share last quarter?

- Which new competitor in your market is growing the fastest?

4. Outcome: Product Research

- What is the one thing your customers hate most about current products?

- Which feature should you highlight most in your upcoming ad campaign?

- Should you fix the durability issue or add the requested new feature first?

Step 3. Define the Exact Data You Must Have

Once the outcome is clear, your next step is to decide which information to extract from the site. List the specific fields your analysts need. Understanding how to plan a web scraping project means being specific about your data requirements from the start.

For example, in an e-commerce setting, if you’re tracking competitor price changes, you often only need the product name, current price, active discounts, star rating, and stock status.
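One way to make that field list concrete is to write it down as a small schema that analysts and the vendor can both review. The sketch below is only an illustration: the class name `ProductRecord`, the field names, the types, and the sample values are all assumptions, not a standard or a ScrapeHero format.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class ProductRecord:
    """One scraped product row; field names here are illustrative only."""
    product_name: str
    current_price: float
    active_discount: Optional[str]  # None when no discount is running
    star_rating: Optional[float]    # None when the product has no reviews yet
    in_stock: bool


def field_names(record_type) -> list[str]:
    """Return the agreed field list, e.g. to paste into a vendor brief."""
    return [f.name for f in fields(record_type)]


# A made-up example row, just to show the shape of the data.
record = ProductRecord("Example Kettle", 34.99, "10% off", 4.5, True)
print(field_names(ProductRecord))
```

Writing the fields down this way forces early answers to small questions, such as whether a missing rating is allowed, before they turn into rework.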

Step 4. Decide Between One-Time or Regular Delivery

It is essential to define the project’s scope as either a one-time data delivery or a recurring process requiring ongoing updates. A one-time snapshot is fine for a market study or a catalog check. But if you need to monitor prices, stock levels, or competitor moves, you will need updates on a routine basis.

Web scraping project planning at this stage helps you determine the correct delivery frequency for your business needs. Daily, weekly, or monthly deliveries help you track changes without having to run a new project every time the need arises.

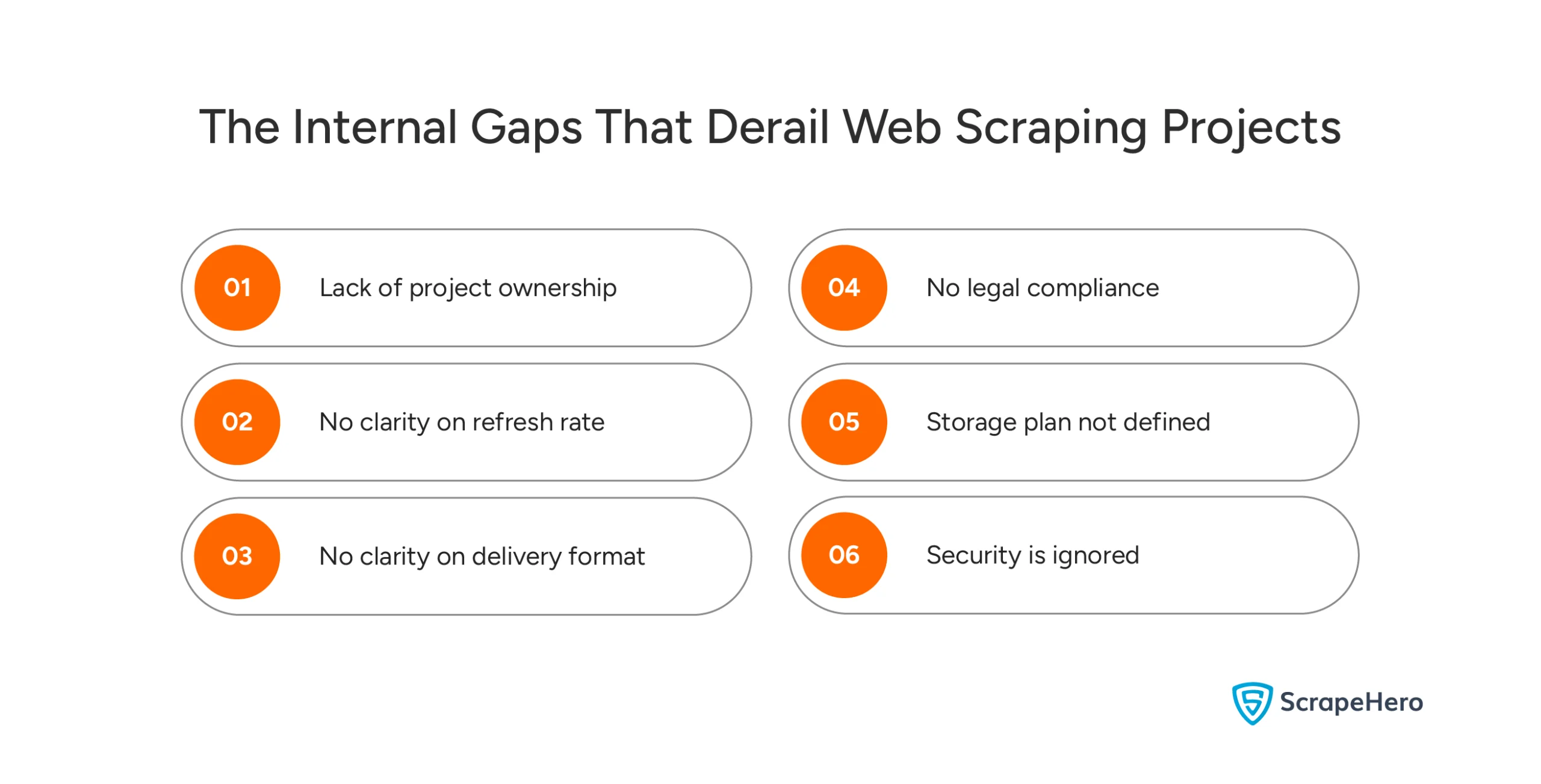

Step 5. Align Your Internal Teams

Do not speak to a single vendor before you align your internal teams. Every team has unique needs. Getting their input now prevents expensive, panic-driven changes later.

Effective web data extraction planning ensures that all teams are on the same page from day one. For example:

- BI & Analytics teams focus on data structure and delivery formats.

- The Product Management team requires specific technical specifications and standardized category names.

- Marketing is focused on actionable intelligence, such as pricing movements and review quotes.

- Competitor Intelligence needs broad site coverage for a complete market view.

- Security & IT are responsible for ensuring adherence to access rules, storage policies, and legal compliance.

Set up short talks with each team and ask these questions:

- What data do you actually need?

- How exactly will you use it?

- What file format works for you right now?

- How fresh does this data need to be?

- What security rules must we obey?

When you pull this information upfront, you prevent the slow spread of scope creep. You also avoid the awful last-minute requests that wreck timelines and budgets. Early alignment keeps the whole project clean, easy to predict, and far simpler to steer.

Step 6. Plan for Legal Compliance

Planning a web scraping project includes addressing compliance early. Collecting data from websites is not as simple as pulling information and using it. You must also deal with legal and ethical risks.

Respecting the law and each site's rules helps you avoid IP blocks, data privacy lawsuits, and other legal consequences.

Here is what you must pay attention to in the planning stage:

Look at the Website’s Terms of Service: Many websites explicitly prohibit scraping. Before you begin, read these terms to make sure you are not breaking any rules.

Mind the Data Privacy Laws: Depending on the type of data you collect, you must follow privacy rules such as GDPR in Europe or CCPA in California. For instance, scraping personal information exposes your business to severe legal trouble if you skip the correct steps.

Understand Rate Limits and IP Blocks: Websites often put a ceiling on how much data you can scrape at once to protect their servers. If you ignore these limits, you will find your IP address banned or your scraping job dead. Know how often you need the data, and build a strategy to manage rate limits.
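To give a rough idea of what "managing rate limits" can mean in practice, here is a minimal sketch of a throttle that enforces a pause between requests. The `Throttle` class, the `fetch_all` helper, and the `fetch` callable are hypothetical names invented for this example. The right delay depends on each site's own limits and terms, which this sketch does not know.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval_s, clock=time.monotonic, sleep=time.sleep):
        self.min_interval_s = min_interval_s
        self._clock = clock    # injectable so the logic can be tested without waiting
        self._sleep = sleep
        self._last = None      # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval_s has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval_s - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
        return now


def fetch_all(urls, fetch, throttle):
    """Call the caller-supplied `fetch(url)` for each URL, pausing between calls."""
    results = []
    for url in urls:
        throttle.wait()
        results.append(fetch(url))
    return results
```

A fixed pause is the simplest possible policy. A production system would typically also back off when a site starts returning errors, but that is beyond this sketch.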

This is why bringing in an expert web scraping service changes everything. A managed service like ScrapeHero not only provides the systems you need to pull data reliably, but also ensures you follow the rules at every turn. They grasp the deep details of website rules, data privacy, and how to scrape data without getting banned.

What GDPR and CCPA Mean for Your Web Scraping Project

GDPR stands for General Data Protection Regulation. CCPA stands for California Consumer Privacy Act.

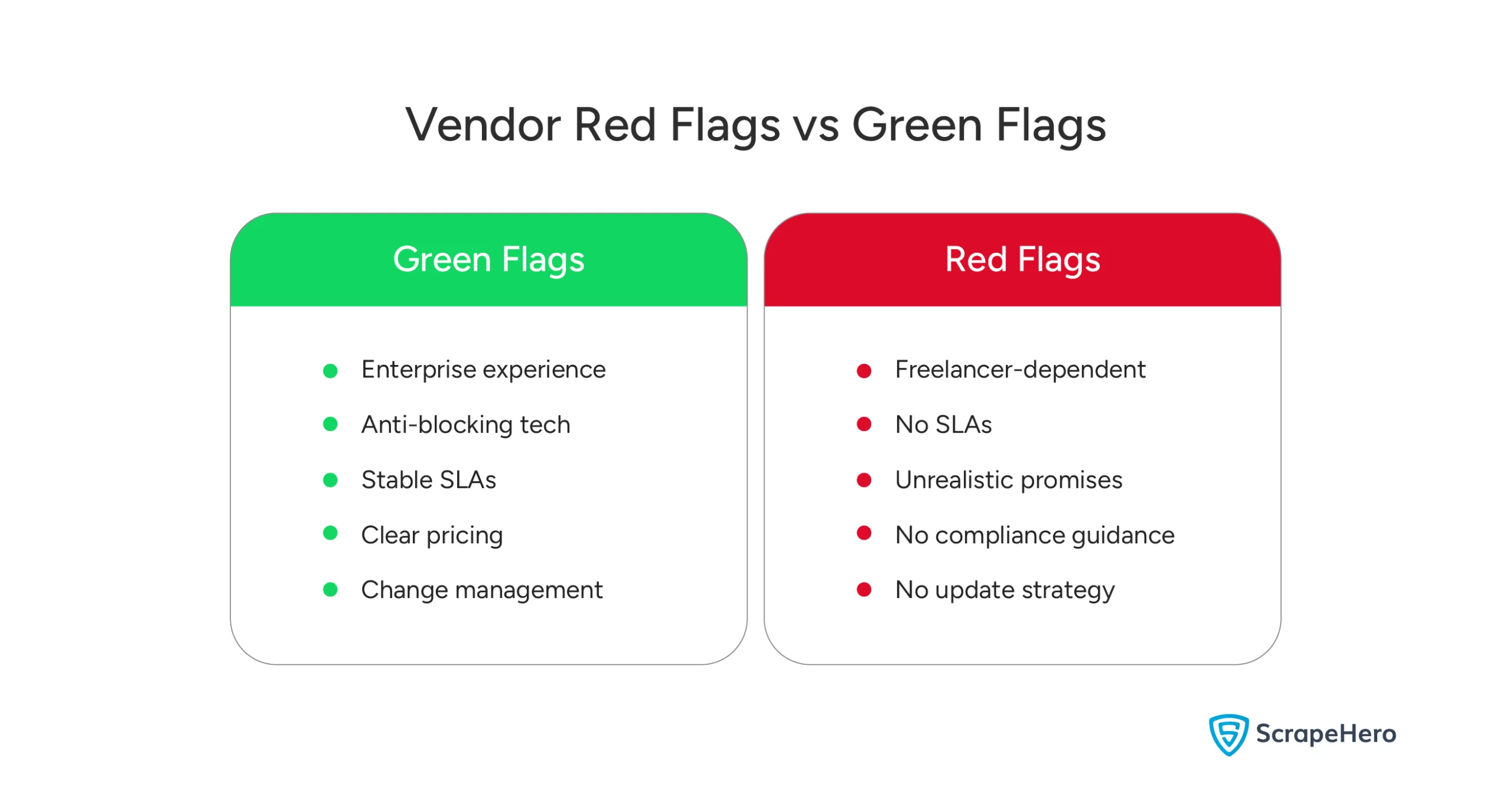

Step 7. Pick the Right Vendor

Selecting the right vendor is more critical to your project’s success than any technical decision. Web scraping project planning becomes significantly easier when you partner with the right team that understands enterprise requirements. Your ideal partner should meet the following key criteria:

- Technical Robustness: They must demonstrate the ability to navigate a vast range of websites. They need to handle complex, dynamic content and maintain seamless operations despite changes in site layout.

- Proven Enterprise Experience: A documented history of working with major companies is key. This proves they understand strict deadlines, corporate approval processes, and the need for rock-solid data consistency.

- Proactive Project Management: They must commit to transparent communication. They should provide regular, proactive updates that ensure clear visibility into progress without requiring constant follow-up.

- Transparent and Stable Pricing: You need a clear pricing model with no hidden costs. This ensures predictability and stability throughout the project.

Also, be aware of these critical red flags during vendor selection, as they represent significant long-term risks:

- Over-reliance on Low-Cost Freelancers: This often indicates a lack of institutional reliability and scalability, creating a risk of failure.

- Unrealistic Promises: Vague or overly ambitious commitments that lack a clear methodological foundation suggest a lack of experience or integrity.

- Absence of a Formal Service Contract: Operating without a contract leaves your project unprotected. It fails to define scope, service-level agreements (SLAs), liabilities, and data ownership.

- Undefined Security Protocols: A vendor that cannot clearly explain and document its security measures for data handling and access exposes your company to potential breaches.

- No Clear Maintenance Process: The lack of a coherent strategy for managing website changes results in future data disruptions and unplanned rework.

Choosing a vendor that shows these red flags may lower initial costs. However, it often leads to technical debt, security vulnerabilities, and costly rework later.

A managed service like ScrapeHero simply removes the risk. You get an expert team, a solid process, and a system built specifically to deliver reliable data. That means you get data delivered on time, clear timelines, and a project that does not wobble from start to finish.

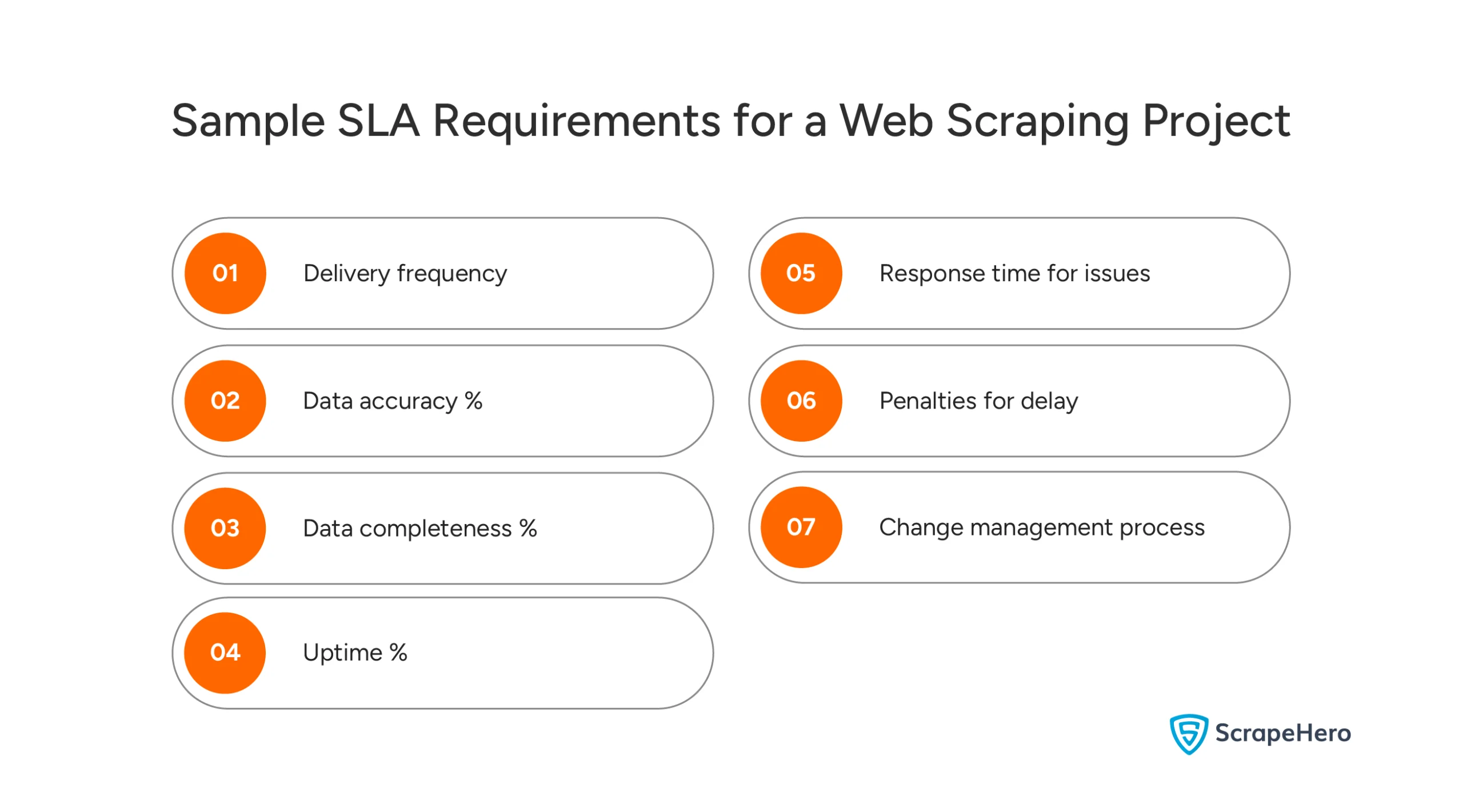

Step 8. Draft Service Contracts That Shield Your Project

Your service contract and SLA serve as the primary safeguard against project failure. Together, they must specify the delivery times, the level of data completeness you demand, and the accuracy standard your analysts rely on.

You must also define how often the vendor will send updates, the escalation path for issues, and exactly how problems will be fixed if the system breaks. These specific details kill all guesswork. They ensure everyone knows what “winning” looks like before the work even starts.
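Contract terms like these are easier to enforce when they can be checked mechanically. The sketch below shows one possible check of a delivery against two terms, completeness and deadline. `SlaTerms`, `check_delivery`, and the threshold values are hypothetical. Real numbers come from your own contract.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class SlaTerms:
    """Hypothetical SLA thresholds; real values come from your contract."""
    min_completeness: float  # share of expected records that must arrive, 0..1
    deadline: datetime       # latest acceptable delivery time


def check_delivery(terms, expected_records, delivered_records, delivered_at):
    """Return a list of human-readable SLA breaches (empty list = compliant)."""
    breaches = []
    completeness = delivered_records / expected_records if expected_records else 0.0
    if completeness < terms.min_completeness:
        breaches.append(
            f"completeness {completeness:.1%} below {terms.min_completeness:.1%}"
        )
    if delivered_at > terms.deadline:
        breaches.append(f"delivered {delivered_at - terms.deadline} late")
    return breaches


# Illustrative values only: 98% completeness, due Monday 09:00.
terms = SlaTerms(min_completeness=0.98, deadline=datetime(2024, 1, 8, 9, 0))
print(check_delivery(terms, 1000, 950, datetime(2024, 1, 8, 10, 0)))
```

Accuracy is harder to automate because it needs a trusted reference to compare against, so it is left out of this sketch.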

Step 9. Plan the Data Delivery

A solid web scraping strategy includes planning how data will be formatted and delivered to match your team’s workflow. This choice determines how quickly your teams can actually use the data.

Therefore, keep it dead simple and align with your current workflow. Formats such as CSV and Excel are widely preferred for their practicality. They enable easy access, sharing, and seamless integration into existing systems.

There is no magical, single best option. The right format is the one your team can use instantly without any extra steps or new software.
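As a small illustration of how little is needed for a CSV delivery, the sketch below writes rows with a fixed column order using Python's standard `csv` module. The column names in `FIELDNAMES` and the sample row are made up for the example.

```python
import csv
import io

# Illustrative column order; agree on the real one with your teams.
FIELDNAMES = ["product_name", "current_price", "stock_status"]


def to_csv(rows):
    """Serialize a list of dicts to CSV text with a fixed header order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


sample = [
    {"product_name": "Example Kettle", "current_price": 34.99, "stock_status": "in_stock"},
]
print(to_csv(sample))
```

Fixing the column order up front matters more than the format itself, because spreadsheets and import jobs tend to break when columns move between deliveries.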

Step 10. Set Up a Simple Monitoring and Review Process

Upon project initiation, it is essential to establish a streamlined monitoring process. This provides clear visibility into progress without requiring deep technical involvement. A qualified vendor will facilitate this through structured weekly reporting that details completed milestones, current initiatives, and upcoming priorities.

You must also request early data samples to confirm the structure, fields, and formatting before the complete data extraction begins. Error reports and change logs are equally vital. They show you exactly how the vendor is handling site changes, roadblocks, and other issues. They keep you aware of any risks without you having to ask for updates.
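Reviewing an early sample does not have to be manual. The sketch below checks a handful of sample rows for missing required fields and for values that should be numeric but are not. The function name `validate_sample` and the field names are assumptions chosen for this example.

```python
def validate_sample(rows, required_fields, numeric_fields=()):
    """Return a list of problems found in a sample; an empty list means none found."""
    problems = []
    for i, row in enumerate(rows, start=1):
        # A field counts as missing when it is absent, None, or an empty string.
        missing = [f for f in required_fields if row.get(f) in (None, "")]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
        for f in numeric_fields:
            value = row.get(f)
            if value in (None, ""):
                continue  # already reported above if the field is required
            try:
                float(value)
            except (TypeError, ValueError):
                problems.append(f"row {i}: {f} is not numeric ({value!r})")
    return problems


# Made-up sample rows: the second one has two deliberate problems.
sample_rows = [
    {"product_name": "Example Kettle", "current_price": "9.99"},
    {"product_name": "", "current_price": "$5"},
]
print(validate_sample(sample_rows, ["product_name", "current_price"], ["current_price"]))
```

A check like this catches structural problems in minutes, which leaves your review time for the questions only a person can answer, such as whether the right products were captured.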

Your purpose is to stay informed, not to control every mouse click. You do not need to check every single row or sit through hour-long review calls. You only need steady updates to confirm the work is pointed in the right direction.

When you give quick feedback on early samples and weekly summaries, you reduce the risk of major surprises later. Making minor corrections early stops major disruptions at the end.

A simple monitoring process makes the project boringly predictable. This ensures transparency and accuracy for you. It provides clarity for the vendor and delivers the expected data to your teams without unnecessary delays.

Why ScrapeHero Is the Right Partner for Your Web Scraping Project

ScrapeHero is built for companies that want reliable data without taking on technical risk. When you work with ScrapeHero, you’re not getting DIY tools or some freelancers. You’re getting a fully managed web scraping service that handles every part of the data pipeline. That includes extraction, cleaning, validation, and delivery. Your teams receive ready-to-use data without worrying about failures, quality issues, or maintenance.

Built for Enterprise Needs

For enterprise needs, this model is safer and far more predictable. ScrapeHero is designed to handle complex, dynamic, or high-volume websites that usually break DIY tools. It also delivers consistent data even when sites change suddenly or implement anti-scraping measures.

You also get enterprise-grade compliance, strong security practices, and SLAs that match your internal standards. Every project includes dedicated project management, responsive support, and clear communication so you always know what’s happening.

Scale Without Limits

Most importantly, ScrapeHero scales. Whether you need one site or hundreds, and thousands or millions of pages, the delivery stays stable. Choosing ScrapeHero means your teams spend less time managing vendors and more time using high-quality data to drive decisions. It’s the easiest way to get predictable outcomes and data you can trust.

Why Planning Is Essential for Web Scraping Projects

Planning your web scraping project the right way saves you from the problems that derail most web scraping initiatives. When you take the time to define your goals, align your teams, and choose a vendor that can actually deliver at scale, you reduce risk across every stage of the project. Successful web scraping project planning ensures your initiative delivers measurable value from start to finish.

If you want expert guidance as you map out your project, you can speak with our experts. They’ll help you clarify your scope, align your teams, and design a plan that gets you clean, accurate data without the usual headaches.

FAQs

Start with the business outcome and list only the data fields needed to support that decision. Set clear limits for site coverage, delivery frequency, and formatting to avoid scope creep.

Align all teams early, define data requirements, set refresh rates, and plan for compliance. Keep delivery formats simple so the data fits directly into existing workflows.

Pick tools or services that can handle dynamic sites, avoid blocking, and consistently deliver accurate data. For enterprise needs, a managed service is usually more reliable than DIY tools.

Set clear SLAs, ask for early samples, and review weekly updates. A simple, steady monitoring process prevents late-stage surprises and keeps the project predictable.