Google no longer allows you to access their search results without enabling JavaScript. That means you can not simply use HTTP requests based methods for Google search scraping.

However, there are a couple of solutions:

- Read-made scrapers such as the Google Search Results scraper on ScrapeHero Cloud

- Headless browsers such as Playwright

This tutorial discusses both of these methods.

With ScrapeHero Cloud, you can download data in just two clicks!Don’t want to code? ScrapeHero Cloud is exactly what you need.

Google Search Scraping: The No-Code Method

For a hassle-free method for Google Search data extraction, a no-code solution is often the best choice. You can use ScrapeHero Cloud’s Google Search Results Scraper. It eliminates the need for maintaining code, bypassing anti-bot measures, and handling infrastructure.

Steps:

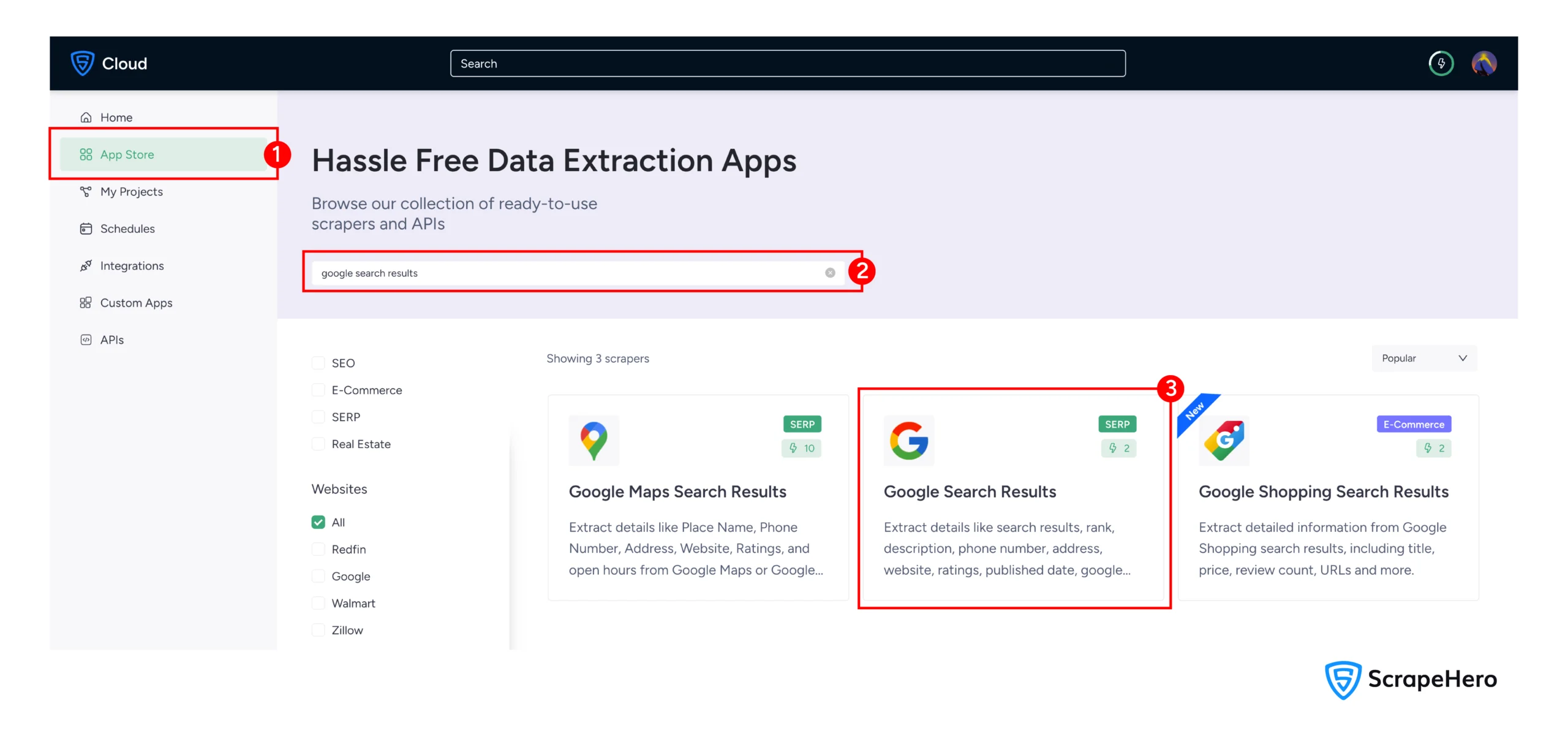

1. Log in to your ScrapeHero Cloud account

2. Navigate to the Google Search Results Scraper in the Scrapehero App store

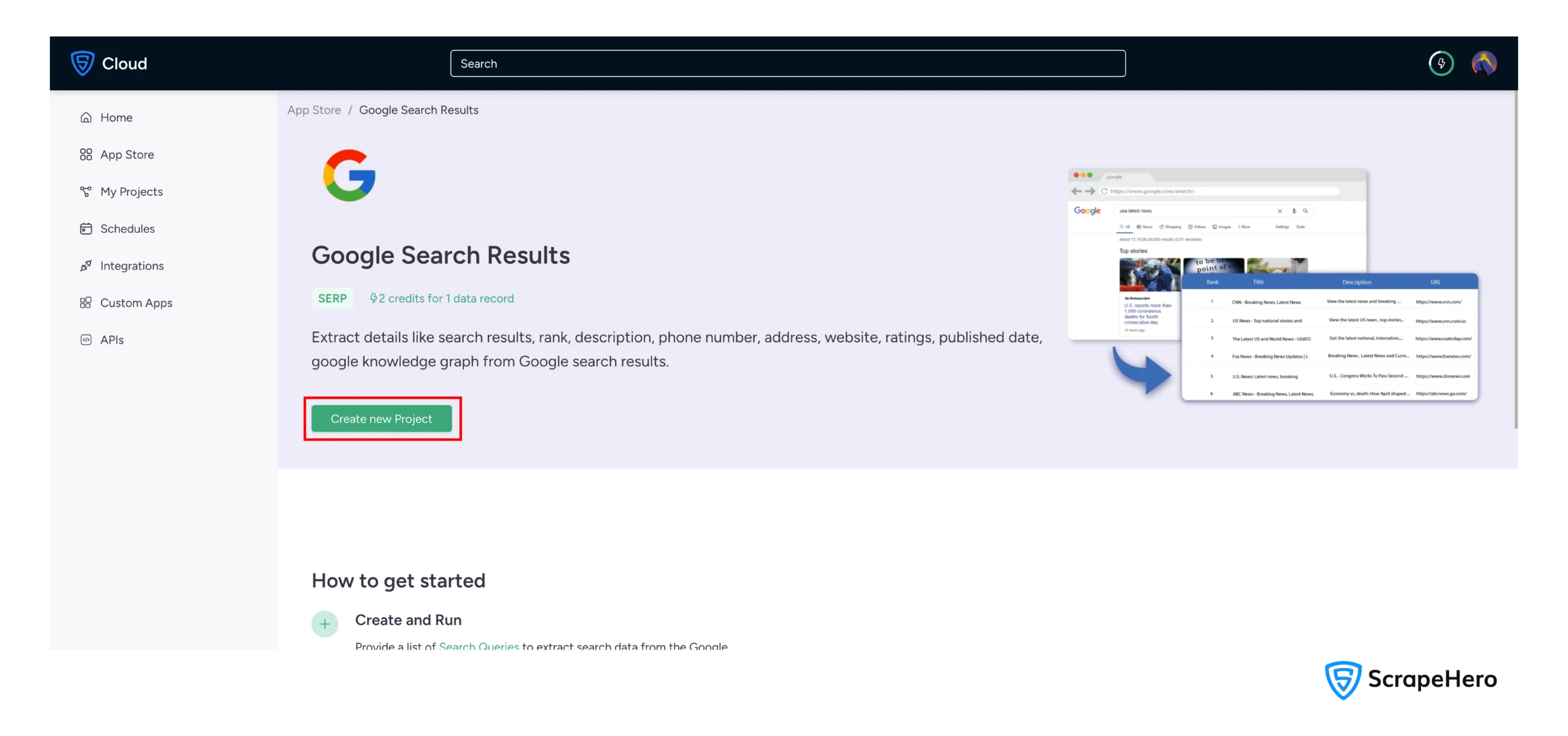

3. Click on “Create New Project”

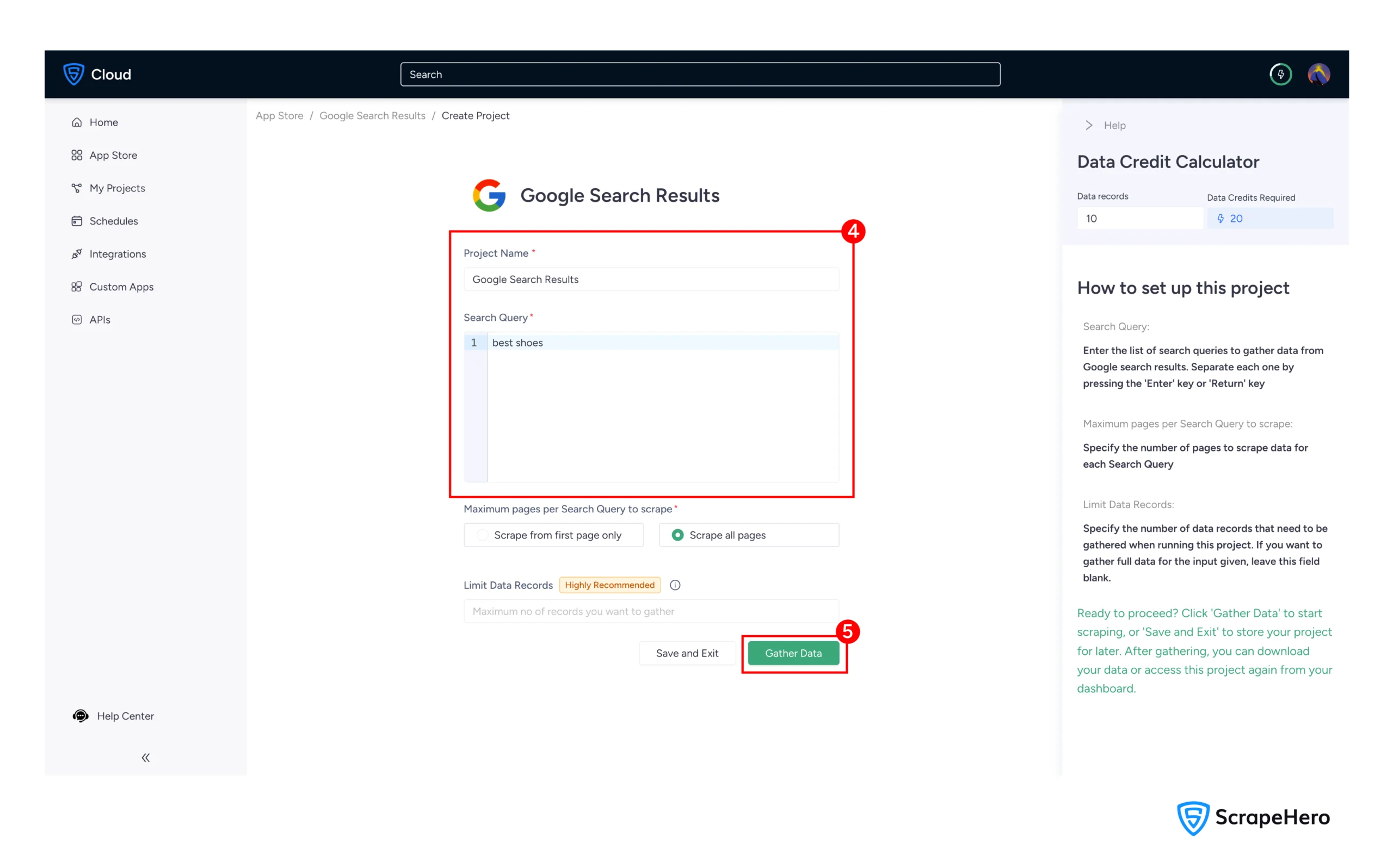

4. Enter project name and search queries

5. Click “Gather Data” to begin the data extraction process

Once complete, download your data in your preferred format (CSV, JSON, or Excel).

With ScrapeHero paid plans, you can also enjoy these features:

- Cloud storage integration: Automatically send your scraped data to cloud services like Google Drive, S3, or Azure.

- Scheduling: Run your scrapes daily, weekly, or monthly to monitor trends over time.

- API integration: Access your data programmatically via an API for use in your own applications and dashboards.

Google Search Scraping: The Code-Based Method

If you want more flexibility and don’t mind handling the coding and maintenance of a scraper, this detailed tutorial covers scraping Google search results with Python.

Setting Up the Environment

Before starting to write the code, you need to install the necessary Python library. The code uses Playwright, a modern browser automation library that can handle dynamic, JavaScript-heavy websites.

Install the Playwright library using PIP.

pip install playwright

Also install the Playwright browser.

playwright install

Data Scraped from Google Search Results

The provided code extracts specific data points from each result on the Google results page. Here’s what it collects and how:

- Title: The blue, clickable headline of the search result—extracted from the h3 element with the class LC20lb.

- URL: The full hyperlink address—retrieved from the href attribute of the a tag with the class zReHs.

- Domain: The root website address (e.g., example.com)—parsed from the full URL.

- Description: The snippet of text that summarizes the page content—taken from the div element with the class VwiC3b.

Writing The Code

If you want to get to work right away, here’s the complete code to scrape Google results page.

from playwright.sync_api import sync_playwright

import os, json

user_data_dir = os.path.join(os.getcwd(), "user_data")

if not os.path.exists(user_data_dir):

os.makedirs(user_data_dir)

with sync_playwright() as p:

user_agent="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"

context = p.chromium.launch_persistent_context(

headless=False,

user_agent=user_agent,

user_data_dir=user_data_dir,

viewport={'width': 1920, 'height': 1080},

java_script_enabled=True,

locale='en-US',

timezone_id='America/New_York',

permissions=['geolocation'],

# Mask automation

bypass_csp=True,

ignore_https_errors=True,

channel="msedge",

args=[

'--disable-blink-features=AutomationControlled',

'--disable-automation',

'--disable-infobars',

'--disable-dev-shm-usage',

'--no-sandbox',

'--disable-gpu',

'--disable-setuid-sandbox'

]

)

page = context.new_page()

page.set_extra_http_headers({

'Accept-Language': 'en-US,en;q=0.9',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',

'Accept-Encoding': 'gzip, deflate, br',

'Connection': 'keep-alive'

})

search_term = 'how to teach my cat to use laptop'

search_url = 'https://google.com'

page.goto(search_url)

page.wait_for_timeout(5000)

search_box = page.get_by_role('combobox',name='Search')

search_box.fill(f'{search_term}')

page.wait_for_timeout(1000)

search_box.press('Enter')

page.wait_for_selector('div.N54PNb')

for _ in range(5):

page.mouse.wheel(0, 1000)

page.wait_for_timeout(1000)

results = page.locator('div.N54PNb').all()

print("results fetched")

details = []

for result in results:

url = result.locator('a.zReHs').get_attribute('href')

domain = url.split('/')[2] if url else None

title = result.locator('h3.LC20lb').inner_text()

description = result.locator('div.VwiC3b').inner_text()

details.append(

{

'url':url,

'domain':domain,

'title':title,

'description':description

}

)

with open('search_results.json','w',encoding='utf') as f:

json.dump(details,f,ensure_ascii=False,indent=4)

Let’s break down this code step-by-step to understand how Google search scraping is implemented.

First, the script imports the necessary libraries:

- playwright is for browser automation

- os for handling file paths, and json for saving the data.

from playwright.sync_api import sync_playwright

import os, json

To make the browser instance appear more like a real user and potentially avoid blocks, the script creates a persistent user data directory. This allows the browser to save cookies and cache, creating a consistent session across runs.

user_data_dir = os.path.join(os.getcwd(), "user_data")

if not os.path.exists(user_data_dir):

os.makedirs(user_data_dir)

The sync_playwright context manager launches a Chromium browser with the configuration that helps you evade detection.

For instance, it runs in headed mode (headless=False), sets a realistic user agent, and uses the persistent context. The arguments (like –disable-blink-features=AutomationControlled) help mask automation indicators.

with sync_playwright() as p:

user_agent="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"

context = p.chromium.launch_persistent_context(

headless=False,

user_agent=user_agent,

user_data_dir=user_data_dir,

viewport={'width': 1920, 'height': 1080},

java_script_enabled=True,

locale='en-US',

timezone_id='America/New_York',

permissions=['geolocation'],

# Mask automation

bypass_csp=True,

ignore_https_errors=True,

channel="msedge",

args=[

'--disable-blink-features=AutomationControlled',

'--disable-dev-shm-usage',

'--no-sandbox',

'--disable-gpu',

'--disable-setuid-sandbox'

]

)

Next, the code creates a new page (tab) within the browser context and sets extra HTTP headers to further mimic a browser request from an English-speaking user.

page = context.new_page()

page.set_extra_http_headers({

'Accept-Language': 'en-US,en;q=0.9',

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',

'Accept-Encoding': 'gzip, deflate, br',

'Connection': 'keep-alive'

})The script now defines the search query and navigates to Google’s homepage. It uses Playwright’s get_by_role() to find the search box by its ARIA role and name, fill() to enter the search term, and press() to press the Enter key.

search_term = 'how to teach my cat to use laptop'

search_url = 'https://google.com'

page.goto(search_url)

page.wait_for_timeout(5000)

search_box = page.get_by_role('combobox',name='Search')

search_box.fill(f'{search_term}')

page.wait_for_timeout(1000)

search_box.press('Enter')

After initiating the search, the code waits for the results container (div.N54PNb) to appear. Google uses lazy-loading, so the code simulates scrolling using the mouse wheel five times and loads more results.

page.wait_for_selector('div.N54PNb')

for _ in range(5):

page.mouse.wheel(0, 10000)

page.wait_for_timeout(1000)

With the page fully scrolled, the script now locates all individual search result elements. It then loops through each result extracts these data points using the CSS selectors discussed earlier:

- Title

- URL

- Domain

- Description

And then it appends this data to a list.

results = page.locator('div.N54PNb').all()

print("results fetched")

details = []

for result in results:

url = result.locator('a.zReHs').get_attribute('href')

domain = url.split('/')[2] if url else None

title = result.locator('h3.LC20lb').inner_text()

description = result.locator('div.VwiC3b').inner_text()

details.append(

{

'url':url,

'domain':domain,

'title':title,

'description':description

}

)

Finally, the script writes the scraped data, stored in the details list, to a JSON file named search_results.json.

with open('search_results.json','w',encoding='utf') as f:

json.dump(details,f,ensure_ascii=False,indent=4)

Code Limitations

While this code is a functional example, consider these limitations for production use:

- Google frequently changes the CSS classes of its HTML elements (like N54PNb, LC20lb). A small change by Google will break the scraper.

- Despite the stealth measures, Google’s advanced anti-bot systems may still detect and block the automated browser, leading to CAPTCHAs or IP bans as the script doesn’t rotate IP addresses.

Wrapping Up: Why Use a Web Scraping Service

Building a custom scraper with Python and Playwright gives you a great learning experience and fine-grained control.

However, if your business relies on accurate, consistent, and large-scale Google Search data extraction, the maintenance overhead and risk of blocks are significant.

A professional web scraping service like ScrapeHero solves these problems by providing enterprise-grade data. We can handle proxy rotation, CAPTCHAs, and changes in Google’s layout, allowing you to focus on analyzing the data, not collecting it.

Connect with ScrapeHero to make data collection hassle free.

FAQs

This is a complex area and not legal advice. Generally, scraping publicly accessible data for fair use (e.g., analysis, research) may be permissible. However, always consult with a legal professional.

The most common reason is that Google has updated its HTML, and the CSS selectors (like div.N54PNb or h3.LC20lb) in the code doesn’t target the correct element. You now need to manually inspect the new Google Search Results Page and update the selectors accordingly.

To improve robustness, you should:

1. Implement random delays between actions

2. Rotate user agents and use a pool of residential proxies

3. Regularly monitor and update the CSS selectors.