Manual web scraping doesn’t scale. A change in a website’s HTML or a blocked IP can break your entire data pipeline.

Web scraping in a CI/CD pipeline can address this issue. By combining Python with tools like Docker and GitHub Actions, you can automate testing, deployment, and recovery.

This article walks you through how to automate web scraping in CI/CD step by step, helping you build a reliable system for continuous data extraction.

What Is a CI/CD Pipeline? Why Does It Matter?

Web scraping is fragile: sites often change their HTML, block IPs, or throttle requests. A CI/CD setup for web scraping catches failures early, makes it easy to roll back broken scrapers, and keeps extraction running with minimal human intervention.

Continuous Integration (CI) automatically checks your Python scraping code, for example by running tests, whenever changes are pushed.

Continuous Deployment (CD) then pushes tested, working code to production without requiring any manual steps. This means your scrapers are tested and deployed automatically.

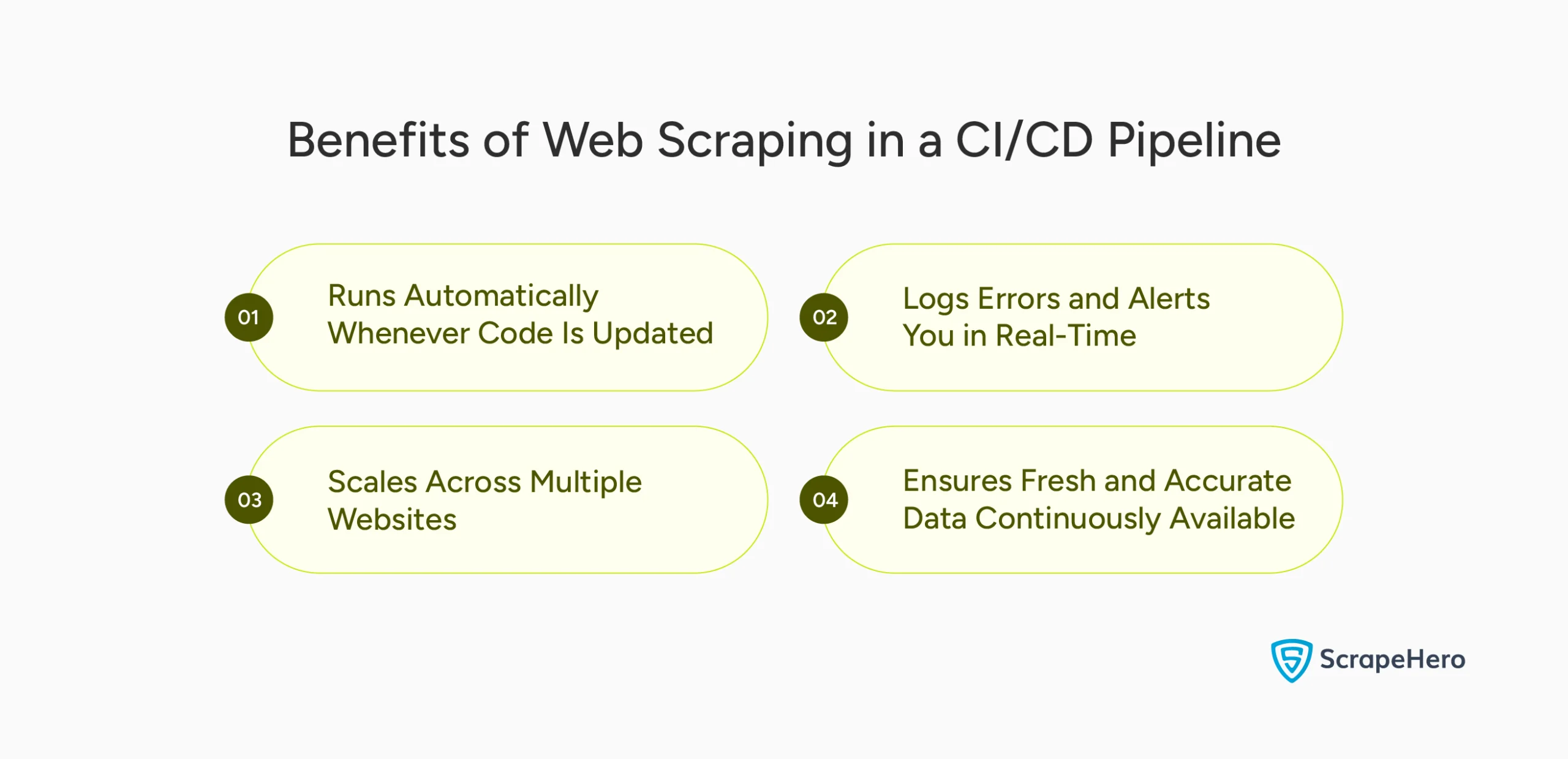
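To make the CI-then-CD ordering concrete, here is a toy Python model of the gate: deployment happens only if every check passes. It is a sketch, not how any CI tool is implemented; the stage names and the lint and selector_tests functions are hypothetical placeholders for real checks, which you would normally declare in your CI tool's configuration.

```python
from typing import Callable, List, Tuple

# A stage is a (name, check) pair; the check returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(ci_stages: List[Stage], deploy: Callable[[], None]) -> List[str]:
    """Run CI stages in order and deploy only if all of them pass."""
    log = []
    for name, check in ci_stages:
        if not check():
            # Stop at the first failure: broken code never reaches production.
            log.append(f"{name}: failed, deployment skipped")
            return log
        log.append(f"{name}: passed")
    deploy()
    log.append("deploy: done")
    return log


# Hypothetical checks standing in for real lint and selector tests.
def lint() -> bool:
    return True


def selector_tests() -> bool:
    return True


if __name__ == "__main__":
    stages = [("lint", lint), ("tests", selector_tests)]
    for line in run_pipeline(stages, deploy=lambda: print("Deploying scraper...")):
        print(line)
```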

What are the Benefits of Web Scraping in a CI/CD Pipeline?

In a CI/CD pipeline, your scrapers can stay accurate, reliable, and fast without constant manual work. Here’s how it helps:

- Runs Automatically Whenever Code Is Updated

- Logs Errors and Alerts You in Real-Time

- Scales Across Multiple Websites

- Keeps Data Fresh and Accurate

1. Runs Automatically Whenever Code Is Updated

Say you update a scraper to handle a new HTML structure. With CI/CD, as soon as you push the change to GitHub, the pipeline runs tests and deploys the updated scraper automatically.

No manual steps are involved, which means quicker updates and fewer deployment errors. This is especially useful when managing many scrapers across different sites.

Here’s an example:

When you push code, your CI/CD tool (like GitHub Actions) pulls your Python scraper and runs it.

```python
def run_scraper():
    print("Scraper running...")
    # Your actual scraping logic here


if __name__ == "__main__":
    run_scraper()
```

The CI/CD pipeline automatically calls this script every time you push code. You do not need to trigger it manually.

2. Logs Errors and Alerts You in Real-Time

If a website changes its layout and a selector breaks, the scraper fails. With a CI/CD setup, the failure is logged immediately, and you can get notified through email, Slack, or GitHub.

For example:

```python
import logging

logging.basicConfig(filename='errors.log', level=logging.ERROR)


def run_scraper():
    try:
        print("Scraping data...")
        # Simulate failure
        raise ValueError("Page layout changed")
    except Exception as e:
        # Record the error, then re-raise so the process (and CI job) fails
        logging.error(f"Error occurred: {e}")
        raise


if __name__ == "__main__":
    run_scraper()
```

With CI/CD, you can add a notification step to alert you when this script fails.
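One way to implement that notification in Python is to post a message to a Slack incoming webhook, which accepts a JSON body with a "text" field. This is a minimal sketch: the SLACK_WEBHOOK_URL environment variable name is an assumption (you would store the URL as a CI secret), and a ready-made notification action in your CI tool is an equally valid choice.

```python
import json
import os
import urllib.request


def build_alert(scraper: str, error: Exception) -> bytes:
    """Build a Slack incoming-webhook payload describing the failure."""
    payload = {"text": f"Scraper '{scraper}' failed: {error}"}
    return json.dumps(payload).encode("utf-8")


def send_alert(scraper: str, error: Exception) -> bool:
    """POST the alert if a webhook URL is configured; return True if sent."""
    url = os.environ.get("SLACK_WEBHOOK_URL")  # Assumed secret name
    if not url:
        return False  # No webhook configured, e.g. during local runs
    request = urllib.request.Request(
        url,
        data=build_alert(scraper, error),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return 200 <= response.status < 300
```

In the logging example, you could call `send_alert("books", e)` inside the `except` block before the `raise`, so you get the message and the job still fails.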

3. Scales Across Multiple Websites

Say you’re scraping 50 e-commerce sites for pricing data. Without CI/CD, deploying changes to each script would be a manual and chaotic process.

You can test and deploy all 50 scrapers in parallel with the help of a pipeline. You can even use Docker to containerize each one, keeping environments clean and consistent.

An example is:

```python
sites = ["https://site1.com", "https://site2.com"]


def scrape(url):
    print(f"Scraping {url}")
    # Add actual scraping logic here


if __name__ == "__main__":
    for site in sites:
        scrape(site)
```

You can scale this with CI/CD to run in parallel or on different schedules per site.
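Within a single job, you can also scrape several sites concurrently. The sketch below uses the standard library's ThreadPoolExecutor, since scraping is mostly waiting on the network. The scrape function is a placeholder that returns a dummy record instead of fetching anything, and max_workers=5 is an arbitrary choice.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Dict, List

sites = ["https://site1.com", "https://site2.com"]


def scrape(url: str) -> Dict[str, str]:
    """Placeholder: replace with real fetching and parsing for each site."""
    return {"url": url, "status": "ok"}


def scrape_all(urls: List[str], max_workers: int = 5) -> List[Dict[str, str]]:
    """Scrape URLs in parallel; one failing site does not stop the others."""
    results = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(scrape, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results.append(future.result())
            except Exception as exc:  # Record the failure and keep going
                results.append({"url": url, "status": f"error: {exc}"})
    return results


if __name__ == "__main__":
    for result in scrape_all(sites):
        print(result)
```

Results arrive in completion order, not input order. To run each site as its own CI job instead, GitHub Actions offers a matrix strategy that expands one job definition into several.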

4. Keeps Data Fresh and Accurate

Web data such as prices, inventory, and content can change quickly. A CI/CD pipeline lets you run scrapers on a schedule, such as hourly or daily, and can also trigger runs after each code update.

You can use a scheduler, such as Cron or APScheduler, to run the scraper periodically.

For example:

```python
import datetime

# Uses the APScheduler 3.x API
from apscheduler.schedulers.blocking import BlockingScheduler


def scrape():
    print(f"Scraping at {datetime.datetime.now()}")
    # Actual scraping code here


scheduler = BlockingScheduler()
scheduler.add_job(scrape, 'interval', hours=1)  # Runs every hour
scheduler.start()  # Blocks the process and keeps running jobs
```

Even outside CI/CD, Python can schedule recurring runs. You can combine this with CI/CD to auto-deploy updated versions.
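If you would rather not add APScheduler as a dependency, a plain loop built on time.sleep covers the basic case. A minimal sketch; the max_runs parameter is a hypothetical addition so the loop can stop (handy in tests). Unlike an interval trigger, it sleeps a fixed time after each run, so the schedule drifts by however long each scrape takes.

```python
import datetime
import time
from typing import Callable, Optional


def scrape() -> None:
    print(f"Scraping at {datetime.datetime.now()}")
    # Actual scraping code here


def run_every(job: Callable[[], None], interval_seconds: float,
              max_runs: Optional[int] = None) -> int:
    """Call job, sleep interval_seconds, repeat; return the number of runs."""
    runs = 0
    while max_runs is None or runs < max_runs:
        try:
            job()
        except Exception as exc:
            # Keep the schedule alive if a single run fails.
            print(f"Run failed: {exc}")
        runs += 1
        if max_runs is None or runs < max_runs:
            time.sleep(interval_seconds)
    return runs
```

Calling `run_every(scrape, interval_seconds=3600)` runs roughly hourly until the process is stopped.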

Step-by-Step: Build a Python-Based Web Scraping CI/CD Pipeline

Here’s a step-by-step process to build a Python-based web scraping CI/CD pipeline.

1. Choose Your Tools

Depending on the target site, you can choose from:

- requests + BeautifulSoup for static pages

- Selenium for JavaScript-heavy websites

- pandas or json for saving results
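For the saving step, the standard library's json and csv modules are enough for small jobs (pandas is the heavier alternative). A minimal sketch, assuming each result is a dict with the same keys; the save helper and the file names you pass it are illustrative.

```python
import csv
import io
import json
from typing import Dict, List


def to_json(records: List[Dict[str, str]]) -> str:
    """Serialize records as a pretty-printed JSON array."""
    return json.dumps(records, ensure_ascii=False, indent=2)


def to_csv(records: List[Dict[str, str]]) -> str:
    """Serialize records as CSV, using the first record's keys as the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def save(text: str, path: str) -> None:
    """Write serialized output to disk as UTF-8."""
    # newline="" keeps the CSV writer's \r\n line endings untranslated
    with open(path, "w", encoding="utf-8", newline="") as f:
        f.write(text)
```

For example, `save(to_json(records), "books.json")` or `save(to_csv(records), "books.csv")`.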

2. Create a Python Scraper

Let’s build a Python scraper that fetches book titles from books.toscrape.com using requests and BeautifulSoup.

It selects the <a> tag inside each product block's <h3>, reads the link's title attribute (which holds the full book title), and prints the titles as a list.

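```python
import requests
from bs4 import BeautifulSoup


def scrape_products():
    url = "https://books.toscrape.com/"
    # A timeout keeps a hung request from stalling the CI job
    response = requests.get(url, timeout=10)
    # Fail loudly on 4xx/5xx responses instead of parsing an error page
    response.raise_for_status()
    soup = BeautifulSoup(response.text, 'html.parser')

    books = []
    # The link text can be truncated; the title attribute has the full title
    for item in soup.select('article.product_pod h3 a'):
        books.append(item['title'])
    return books


if __name__ == "__main__":
    data = scrape_products()
    print(data)
```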
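Because live pages change, CI runs are more dependable if the selector logic can also be tested offline against a saved HTML snapshot. The sketch below is a dependency-free variant that mimics the article.product_pod h3 a selection with the standard library's html.parser; the TitleParser class and the inline fixture are illustrative assumptions. In practice you might keep BeautifulSoup and save a real copy of the page as the fixture.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class TitleParser(HTMLParser):
    """Collect title attributes of <a> tags inside article.product_pod h3."""

    def __init__(self) -> None:
        super().__init__()
        self.titles: List[str] = []
        self._in_product = False  # Inside <article class="product_pod">
        self._in_h3 = False       # Inside an <h3> within a product

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        attributes = dict(attrs)
        classes = (attributes.get("class") or "").split()
        if tag == "article" and "product_pod" in classes:
            self._in_product = True
        elif tag == "h3" and self._in_product:
            self._in_h3 = True
        elif tag == "a" and self._in_h3 and attributes.get("title"):
            self.titles.append(attributes["title"])

    def handle_endtag(self, tag: str) -> None:
        if tag == "article":
            self._in_product = False
        elif tag == "h3":
            self._in_h3 = False


def parse_titles(html: str) -> List[str]:
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return parser.titles


# Hypothetical snapshot; in a real repo this would be a saved copy of the page.
FIXTURE = """
<article class="product_pod">
  <h3><a href="book-1.html" title="Example Book One">Example Book...</a></h3>
</article>
<article class="product_pod">
  <h3><a href="book-2.html" title="Example Book Two">Example Book...</a></h3>
</article>
<h3><a title="Not a product">ignored</a></h3>
"""


def test_parse_titles() -> None:
    # Fails in CI if the parsing logic stops matching the snapshot.
    assert parse_titles(FIXTURE) == ["Example Book One", "Example Book Two"]
```

If this lives in a file named like test_scraper.py, running pytest in the workflow would collect test_parse_titles automatically.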

3. Create Dockerfile

You can use a Dockerfile to containerize your Python scraper. This ensures the scraper runs in the same clean, consistent environment on your machine, in CI/CD, or in the cloud.

```dockerfile
# Use an official Python image
FROM python:3.10

# Set working directory
WORKDIR /app

# Copy files
COPY . .

# Install dependencies
RUN pip install requests beautifulsoup4

# Run scraper
CMD ["python", "scraper.py"]
```

With this Dockerfile, you avoid most environment-related bugs and can deploy the scraper anywhere, including AWS, Google Cloud, or any other container-based service.

4. Set Up Git Repository

Git gives you version control and team collaboration, and hosting the repository on GitHub lets GitHub Actions automate your workflows. A minimal project layout looks like this:

```text
/scraper
├── scraper.py
├── Dockerfile
└── .github/workflows/
```

5. Create GitHub Actions CI/CD Workflow

GitHub Actions can automate your scraping pipeline. This YAML workflow runs your scraper every time you push to the main branch.

```yaml
name: Python Web Scraper CI/CD

on:
  push:
    branches: [ main ]

jobs:
  build-and-run:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: '3.10'

      - name: Install dependencies
        run: |
          pip install requests beautifulsoup4

      - name: Run scraper
        run: python scraper.py
```

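Every time you push to main, GitHub Actions installs your dependencies and runs scraper.py, so a broken selector or missing dependency shows up as a failed run. This workflow only runs the scraper; for automated tests or deployment, add further steps, such as running pytest or building and pushing the Docker image.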

6. Deploy on Cloud

To run your scraper continuously or on a schedule, you’ll want to deploy it in the cloud. Cloud deployment allows automation, scalability, and integration with data pipelines.

You can run the scraper using:

- AWS Lambda (for short tasks; a single invocation can run for at most 15 minutes)

- AWS EC2 (for longer or more frequent scraping)

- Google Cloud Run (scales containers automatically)
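On AWS Lambda, the Python runtime calls a handler function with an event and a context object. A minimal sketch, assuming the function's handler setting points at scraper.lambda_handler; scrape_products is stubbed here so the example runs on its own (in practice you would import the requests/BeautifulSoup version), and the returned dict is an arbitrary shape.

```python
from typing import Any, Dict, List


def scrape_products() -> List[str]:
    """Stub standing in for the requests/BeautifulSoup scraper."""
    return ["Example title"]


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point that AWS Lambda invokes; AWS supplies event and context."""
    books = scrape_products()
    # In a real deployment you would persist results here (e.g. to S3).
    return {"count": len(books), "books": books}
```

A scheduled trigger such as an EventBridge rule can then invoke the function on a timer.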

7. Add Logging and Monitoring

Logging is critical for debugging and tracking scraper health.

In Python, log to a file:

```python
import logging

logging.basicConfig(filename='scraper.log', level=logging.INFO)


def log_message(msg):
    logging.info(msg)
```

For real-time alerts, you can send logs to AWS CloudWatch, Loggly, or Datadog.
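In containers and managed services, standard output is usually what the platform collects (Lambda, for instance, forwards a function's output to CloudWatch Logs). A minimal sketch that logs to both stdout and the file; the logger name "scraper" and the format string are arbitrary choices.

```python
import logging
import sys


def setup_logging(log_file: str = "scraper.log") -> logging.Logger:
    """Log INFO and above to stdout (for log collectors) and to a file."""
    logger = logging.getLogger("scraper")
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # Avoid duplicate handlers on repeated calls
        formatter = logging.Formatter(
            "%(asctime)s %(levelname)s %(name)s: %(message)s"
        )
        for handler in (logging.StreamHandler(sys.stdout),
                        logging.FileHandler(log_file)):
            handler.setFormatter(formatter)
            logger.addHandler(handler)
    return logger
```

Usage: `logger = setup_logging()` once at startup, then calls such as `logger.info("Scraped %d books", len(books))`.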

To run scrapers consistently at scale, use a dedicated scheduler to control how often they run. Options include GitHub Actions (on.schedule), Apache Airflow, and AWS EventBridge.

Why ScrapeHero Web Scraping Service

Web scraping in a CI/CD pipeline can save hours of manual work, prevent silent data failures, and scale your operations.

But if you don’t want to manage the technical setup, ScrapeHero web scraping service can take care of the entire scraping process for you.

We offer enterprise-grade solutions to build and maintain robust scrapers so you can focus on analyzing data and making decisions—without dealing with code, maintenance, or infrastructure.

Frequently Asked Questions

To easily automate web scraping with a simple data pipeline, you can use Python with Docker and GitHub Actions. This setup runs scrapers automatically on every code update.

You can start with GitHub for version control, write a Dockerfile, and deploy to cloud platforms like AWS or Google Cloud.

You can use requests and BeautifulSoup for static pages. For JavaScript-rendered content, it is better to use Selenium or Playwright.