Scraping data from interactive web maps starts with finding where the data comes from. Most tools for building interactive maps, including Leaflet, OpenLayers, and Mapbox, display data retrieved from external APIs or files, so to scrape a map, you need to identify those endpoints.

To identify the endpoints, you need to know what kind of data to look for. This article gives an overview of extracting data from Leaflet, OpenLayers, and Mapbox maps by identifying their API endpoints.

Leaflet Maps

Leaflet is a lightweight, open-source JavaScript library designed for mobile-friendly interactive maps. Leaflet itself only renders the map; the page that embeds it supplies the data, either bundled with the page or loaded through network requests, which makes the data visible in the browser.

Pages built on Leaflet typically fetch data as needed, often in response to map view changes (e.g., zoom or pan). This data is frequently served from a backend API and may be cached locally by the browser to improve performance.

Typical endpoints:

- Public or open data APIs serving JSON or GeoJSON (e.g., USGS Volcano API, Socrata open data portals).

- Static files (GeoJSON, CSV) hosted on the same domain or external servers.

- Example: https://data.americorps.gov/resource/yie5-ur4v.json?stabbr=ND
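When an endpoint like the ones above returns GeoJSON, the features usually need flattening before analysis. The following is a minimal sketch, assuming a standard GeoJSON FeatureCollection with Point geometries; the function names and the sample payload are hypothetical and stand in for a real API response.

```python
import csv
import io


def features_to_rows(feature_collection):
    """Flatten a GeoJSON FeatureCollection into a list of plain dicts."""
    rows = []
    for feature in feature_collection.get("features", []):
        row = dict(feature.get("properties") or {})
        geometry = feature.get("geometry") or {}
        # GeoJSON stores Point coordinates as [longitude, latitude].
        if geometry.get("type") == "Point":
            row["longitude"], row["latitude"] = geometry["coordinates"][:2]
        rows.append(row)
    return rows


def rows_to_csv(rows):
    """Serialize rows to CSV text, using the union of all keys as columns."""
    columns = []
    for row in rows:
        for key in row:
            if key not in columns:
                columns.append(key)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical sample payload, not taken from any real endpoint.
sample = {
    "type": "FeatureCollection",
    "features": [
        {
            "type": "Feature",
            "properties": {"name": "Site A"},
            "geometry": {"type": "Point", "coordinates": [-100.78, 46.81]},
        }
    ],
}
rows = features_to_rows(sample)
```

Endpoints that return plain JSON records rather than GeoJSON, as Socrata-style `.json` resources typically do, already yield a list of dicts and can go straight to `rows_to_csv`.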

OpenLayers Maps

OpenLayers is a high-performance library for rendering complex map data. It often uses Web Map Services (WMS) or vector layers that can be queried directly.

OpenLayers can fetch data from various sources, including WMS, Web Feature Services (WFS), and vector tiles. WMS serves raster data (images), while WFS serves vector data (features with geometries and attributes).

Typical endpoints:

- WFS endpoints returning GeoJSON or XML feature collections.

- Example: https://example.com/geoserver/wfs?service=WFS&request=GetFeature&typename=layername&outputFormat=application/json
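A WFS request like the example above is just a set of query parameters, so it can be assembled programmatically. This is a sketch under stated assumptions: it targets WFS 2.0.0, where the layer parameter is `typeNames` and the feature limit is `count` (WFS 1.x uses `typeName` and `maxFeatures`), and it assumes the server accepts `application/json` as an output format, as GeoServer does. The function name `build_wfs_url` is hypothetical.

```python
from urllib.parse import urlencode


def build_wfs_url(base_url, type_name, bbox=None, count=None, start_index=None):
    """Build a WFS 2.0.0 GetFeature URL that asks for GeoJSON output."""
    params = {
        "service": "WFS",
        "version": "2.0.0",
        "request": "GetFeature",
        "typeNames": type_name,
        # Assumption: the server supports this output format (GeoServer does).
        "outputFormat": "application/json",
    }
    if bbox is not None:
        # Four numbers bounding the area of interest; the axis order
        # depends on the layer's coordinate system and the server.
        params["bbox"] = ",".join(str(value) for value in bbox)
    if count is not None:
        params["count"] = count
    if start_index is not None:
        params["startIndex"] = start_index
    return f"{base_url}?{urlencode(params)}"


url = build_wfs_url("https://example.com/geoserver/wfs", "layername", count=100)
```

Requesting a bounded `count` together with `startIndex` keeps individual responses small, which matters for layers with many features.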

Mapbox Maps

Unlike OpenLayers and Leaflet, which are JavaScript libraries, Mapbox is a robust platform for building custom maps with rich, interactive data layers. It also provides additional features, including geocoding, routing, and tile serving.

Mapbox uses a combination of vector tiles and data layers. Mapbox's own APIs are REST-based and require an access token for authentication, while a site's custom data may come from any backend, occasionally a GraphQL one.

Typical endpoints:

- Tile endpoints serving vector tiles, usually requiring an access token.

- REST APIs for geocoding, directions, or custom data layers.

- Example: https://api.mapbox.com/v4/{tileset_id}/{z}/{x}/{y}.vector.pbf?access_token=YOUR_TOKEN
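The `{z}/{x}/{y}` placeholders in the tile URL are tile indices, not coordinates. The sketch below shows one way to derive them from a longitude and latitude, assuming the standard Web Mercator XYZ tiling scheme; the function names and the `example.tileset` ID are hypothetical placeholders.

```python
import math


def lonlat_to_tile(lon, lat, zoom):
    """Return the (x, y) indices of the Web Mercator tile containing a point."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    lat_rad = math.radians(lat)
    x = math.floor((lon + 180.0) / 360.0 * n)
    y = math.floor((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so points on the outer edges stay inside the tile grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_url(tileset_id, zoom, x, y, token):
    """Fill in the tile URL template with concrete indices and a token."""
    return (
        f"https://api.mapbox.com/v4/{tileset_id}/{zoom}/{x}/{y}.vector.pbf"
        f"?access_token={token}"
    )


x, y = lonlat_to_tile(0.0, 0.0, 1)
url = tile_url("example.tileset", 1, x, y, "YOUR_TOKEN")
```

The response to such a request is a binary vector tile, so it needs a Mapbox Vector Tile decoder before the features can be read.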

How to Find the Endpoints for Web Map Data Scraping

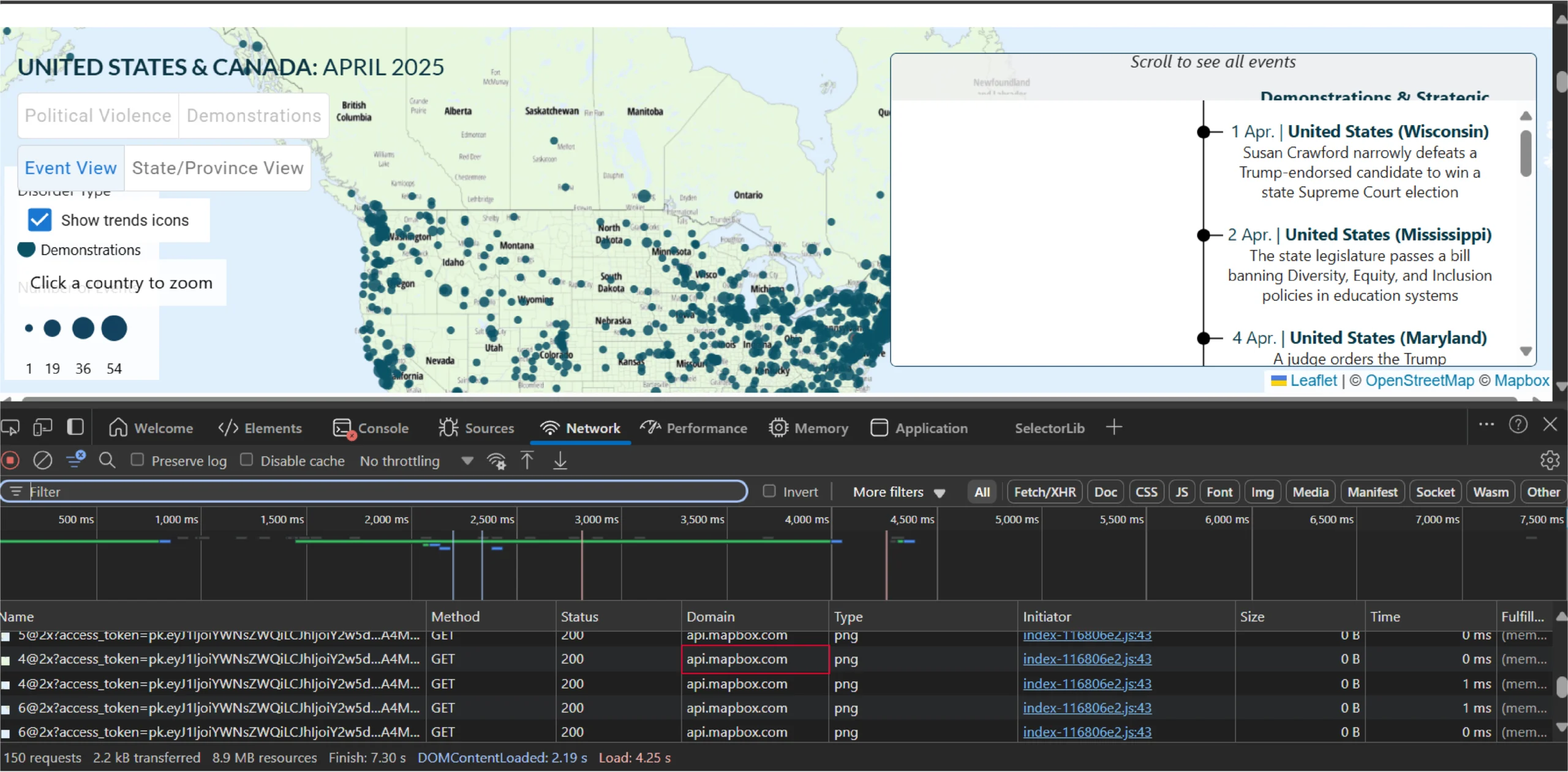

You can find the endpoints for scraping interactive maps using the browser’s Developer Tools:

- Visit the interactive map and open Developer Tools (F12 or right-click and select “Inspect”).

- Go to the Network tab.

- Reload the page or interact with the map by zooming, panning, etc.

- Filter requests by XHR or Fetch.

- Look for the URL patterns mentioned above.

- Open the Response tab of a matching request to confirm that it contains map features.
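On a busy map, scanning the Network tab by eye can be slow. One possible shortcut is to export the captured requests as a HAR file from Developer Tools and filter it with a script. The sketch below assumes the standard HAR layout (`log.entries[].request.url` and `response.content.mimeType`); the hint lists and the function name are illustrative guesses, not an exhaustive rule set.

```python
# Substrings that suggest a response carries data rather than page assets.
DATA_MIME_HINTS = ("json", "csv", "protobuf")
DATA_URL_HINTS = (".geojson", ".json", ".csv", ".pbf", "service=wfs")


def find_data_requests(har):
    """Return URLs from a HAR export that look like map data requests."""
    matches = []
    for entry in har.get("log", {}).get("entries", []):
        url = entry["request"]["url"]
        content = entry.get("response", {}).get("content", {})
        mime = (content.get("mimeType") or "").lower()
        looks_like_data = any(hint in mime for hint in DATA_MIME_HINTS) or any(
            hint in url.lower() for hint in DATA_URL_HINTS
        )
        if looks_like_data:
            matches.append(url)
    return matches


# Hypothetical HAR fragment with one tile image, one script, and one CSV.
sample_har = {
    "log": {
        "entries": [
            {
                "request": {"url": "https://example.com/tiles/3/2/1.png"},
                "response": {"content": {"mimeType": "image/png"}},
            },
            {
                "request": {"url": "https://example.com/static/app.js"},
                "response": {"content": {"mimeType": "application/javascript"}},
            },
            {
                "request": {"url": "https://example.com/assets/events.csv"},
                "response": {"content": {"mimeType": "text/csv"}},
            },
        ]
    }
}
candidates = find_data_requests(sample_har)
```

For a real export, the dictionary would come from loading the saved `.har` file with `json.load`.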

Scraping Data from Interactive Web Maps: An Example

Whether the website uses Leaflet, OpenLayers, or Mapbox, the browser’s Inspect feature will reveal the data source.

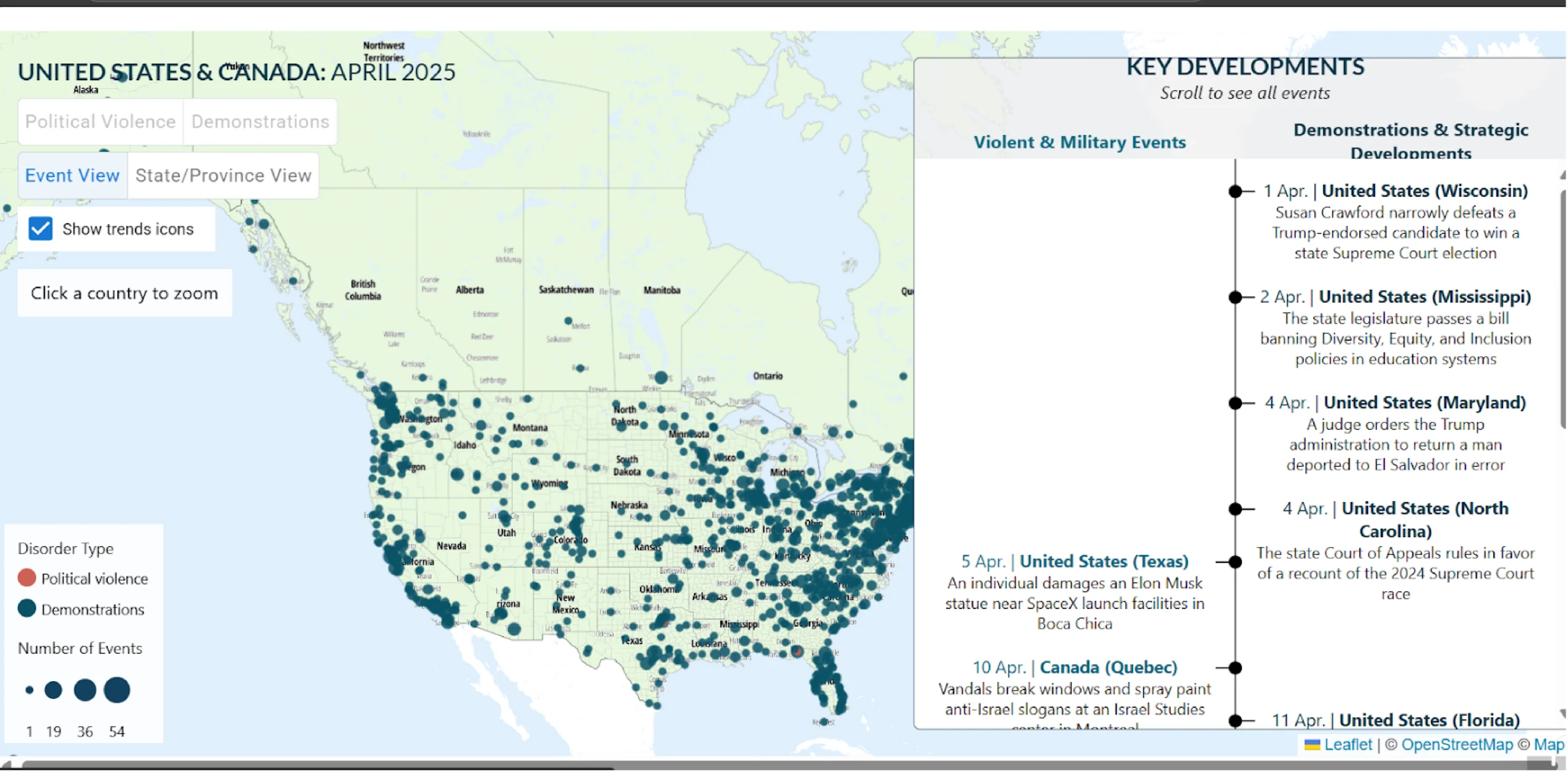

Consider the interactive map from acleddata.com.

By using the Inspect feature, you can see that the site uses the Mapbox API.

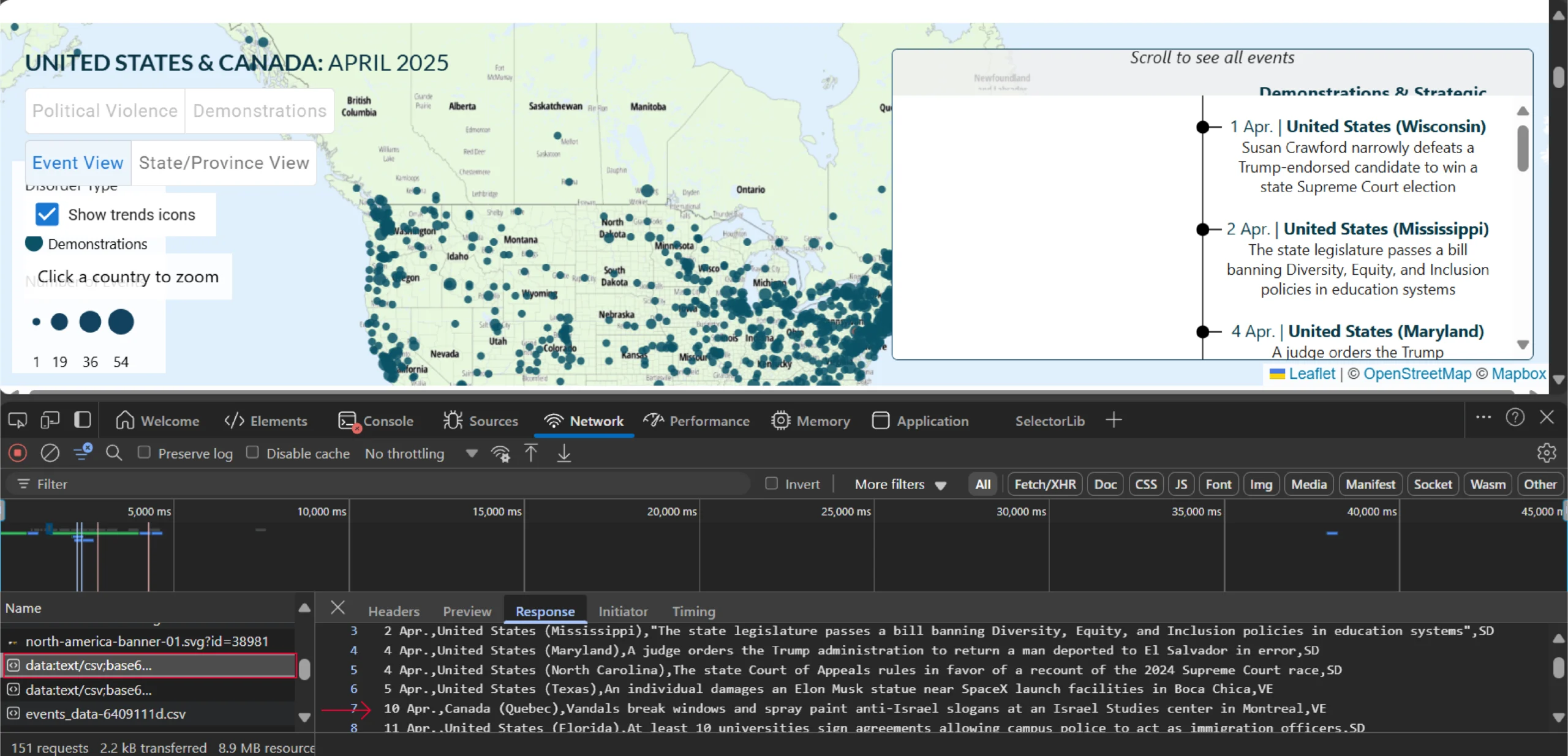

However, by scrolling through the requests, you can find one that fetches data from a CSV file.

You can copy this request URL and scrape the data using Python’s requests library.

Start by importing requests, which allows you to fetch the CSV data.

import requests

Next, make an HTTP request to the copied URL using the requests library’s get() method.

response = requests.get('https://acleddata.com/wp-content/data-viz/ro/v2/us-canada/assets/events_data-6409111d.csv')

Finally, save the response text—the CSV data—to a file.

with open('events_data.csv', 'w', encoding='utf-8', newline='') as f:
    # newline='' keeps the CSV's own line endings unchanged
    f.write(response.text)
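Once the CSV text is available, it can also be parsed directly instead of only being saved. The following sketch uses Python's built-in `csv` module; the sample text and its column names are hypothetical, since the real file's columns may differ.

```python
import csv
import io


def parse_csv_text(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


# Hypothetical sample; in the script above, response.text would be used.
sample_text = "event_id,latitude,longitude\n1,38.9,-77.0\n2,45.4,-75.7\n"
events = parse_csv_text(sample_text)
```

Every value comes back as a string, so numeric columns such as coordinates need converting with `float()` before any calculation.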

Best Practices

- Data Validation and Transformation: Verify scraped data for consistency, especially with paginated or cached responses, where records can be duplicated or missing. Use a library such as pandas to clean and structure the data.

- Handle Pagination: Adjust API parameters (e.g., page, offset) to fetch all data for large maps.

- Authentication: For maps requiring login or API keys, use Selenium to automate access or include the keys in your API requests (if permitted). Handle API keys securely (do not embed them directly in code).

- Error Handling: Implement robust error handling in your scraping scripts to manage network issues, API errors, and unexpected data formats gracefully.
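The pagination advice above can be sketched as a small loop. This is an illustration under assumptions: the endpoint is taken to use limit/offset paging, and the actual HTTP call is passed in as a function so the loop can be tried offline. `fetch_all` and `fake_fetch_page` are hypothetical names.

```python
def fetch_all(fetch_page, page_size=1000, max_pages=100):
    """Collect records from an offset-paginated endpoint.

    fetch_page(limit, offset) must return a list of records; an empty or
    short page signals the end of the data.
    """
    records = []
    for page in range(max_pages):  # max_pages guards against endless loops
        batch = fetch_page(page_size, page * page_size)
        records.extend(batch)
        if len(batch) < page_size:
            break
    return records


# Stand-in for a real API call, so the loop can be run without a network.
def fake_fetch_page(limit, offset):
    data = list(range(25))
    return data[offset:offset + limit]


all_records = fetch_all(fake_fetch_page, page_size=10)
```

For a Socrata-style endpoint, `fetch_page` could wrap `requests.get` with `params={"$limit": limit, "$offset": offset}` and a timeout, call `raise_for_status()` on the response, and return `response.json()`. An API key, if one is needed, is better read from an environment variable than written into the script.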

Ethical and Legal Considerations

- Respect robots.txt: Check the site’s robots.txt (e.g., example.com/robots.txt) for scraping rules. This file specifies which parts of the site your scraper can access.

- Minimize Server Load: Implement delays (e.g., 1–2 seconds between requests) to avoid overwhelming servers. Consider using exponential backoff for retries.

- Terms of Service: Review and comply with the website’s terms of service to avoid violations. Pay close attention to clauses related to automated access and data usage.

- Legal Awareness: Understand copyright and data usage rights—consult a legal expert for commercial scraping. Be aware of data protection regulations (e.g., GDPR) when scraping data that includes personal information.
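The robots.txt check can be automated with Python's standard library. The sketch below parses robots.txt content with `urllib.robotparser` and asks whether a URL may be fetched; the sample rules and the helper name `is_allowed` are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, url, user_agent="*"):
    """Check a URL against the rules in a robots.txt document."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules; a real site's robots.txt will differ.
sample_robots = """\
User-agent: *
Disallow: /private/
"""

public_ok = is_allowed(sample_robots, "https://example.com/data/points.json")
private_ok = is_allowed(sample_robots, "https://example.com/private/points.json")
```

To check a live site, `RobotFileParser` can instead download the file itself through its `set_url()` and `read()` methods.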

Additional Tips

- Rate Limiting: Use delays or proxies to avoid IP bans when scraping large datasets. Implement exponential backoff for retries after rate limit errors.

- Data Storage: Save scraped data in structured formats like CSV or JSON for analysis. Consider using a database for large datasets.

- Error Handling: Add checks for failed requests or incomplete data in your scripts. Log errors to aid debugging and recovery.

- Geospatial Data Formats: Be prepared to handle standard geospatial data formats like GeoJSON, KML, and WKT. Libraries like geopandas (Python) and turf.js (JavaScript) can help parse and manipulate this data.
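The retry advice above can be illustrated with a small helper. This is a minimal sketch, assuming a doubling delay after each failure; the name `retry_with_backoff` is hypothetical, and the sleep function is a parameter so the behavior can be checked without actually waiting.

```python
import time


def retry_with_backoff(action, retries=4, base_delay=1.0, sleep=time.sleep):
    """Call action(); after a failure, wait base_delay * 2**attempt and retry."""
    for attempt in range(retries + 1):
        try:
            return action()
        except Exception:
            # In a real scraper, catch only the errors worth retrying,
            # such as timeouts or rate-limit responses.
            if attempt == retries:
                raise
            sleep(base_delay * 2 ** attempt)


# Demonstration with an action that fails twice before succeeding.
waits = []
calls = {"count": 0}


def flaky_action():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary failure")
    return "ok"


result = retry_with_backoff(flaky_action, sleep=waits.append)
```

Here `waits` records the delays that would have been slept, which makes the doubling pattern easy to inspect.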

Wrapping Up: Why Use a Web Scraping Service

For scraping data from interactive web maps, the best approach is to identify the APIs they use, which you can do using the browser’s Inspect feature. However, finding accessible APIs can be challenging, and a web scraping service may be more efficient.

A web scraping service like ScrapeHero can handle all the technical aspects of data scraping. ScrapeHero is an enterprise-grade web scraping service capable of building high-quality web scrapers.

Our experts at ScrapeHero will manage finding APIs and extracting data, allowing you to focus on data analysis rather than collection.