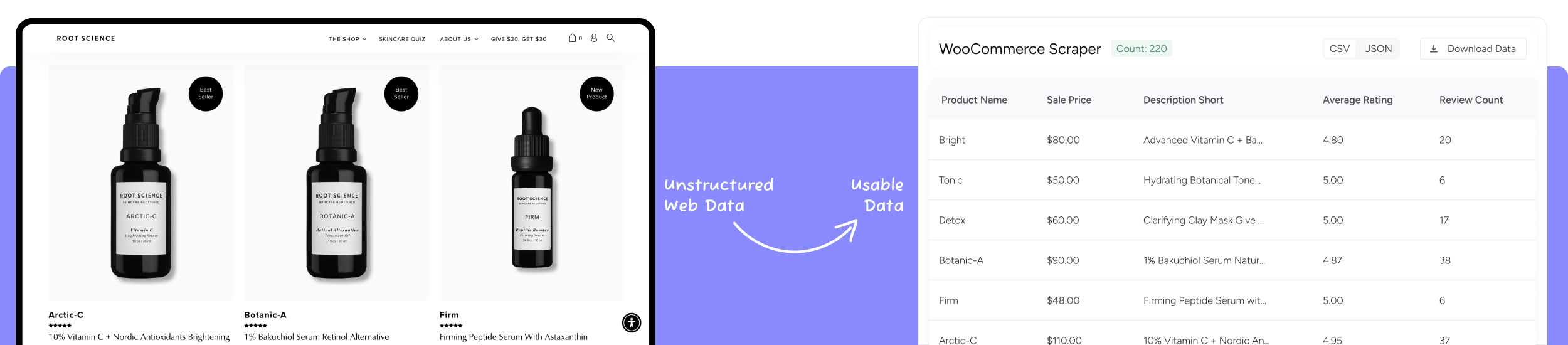

Seamlessly extract all product details from any WooCommerce-powered website, providing you with a comprehensive product data set.

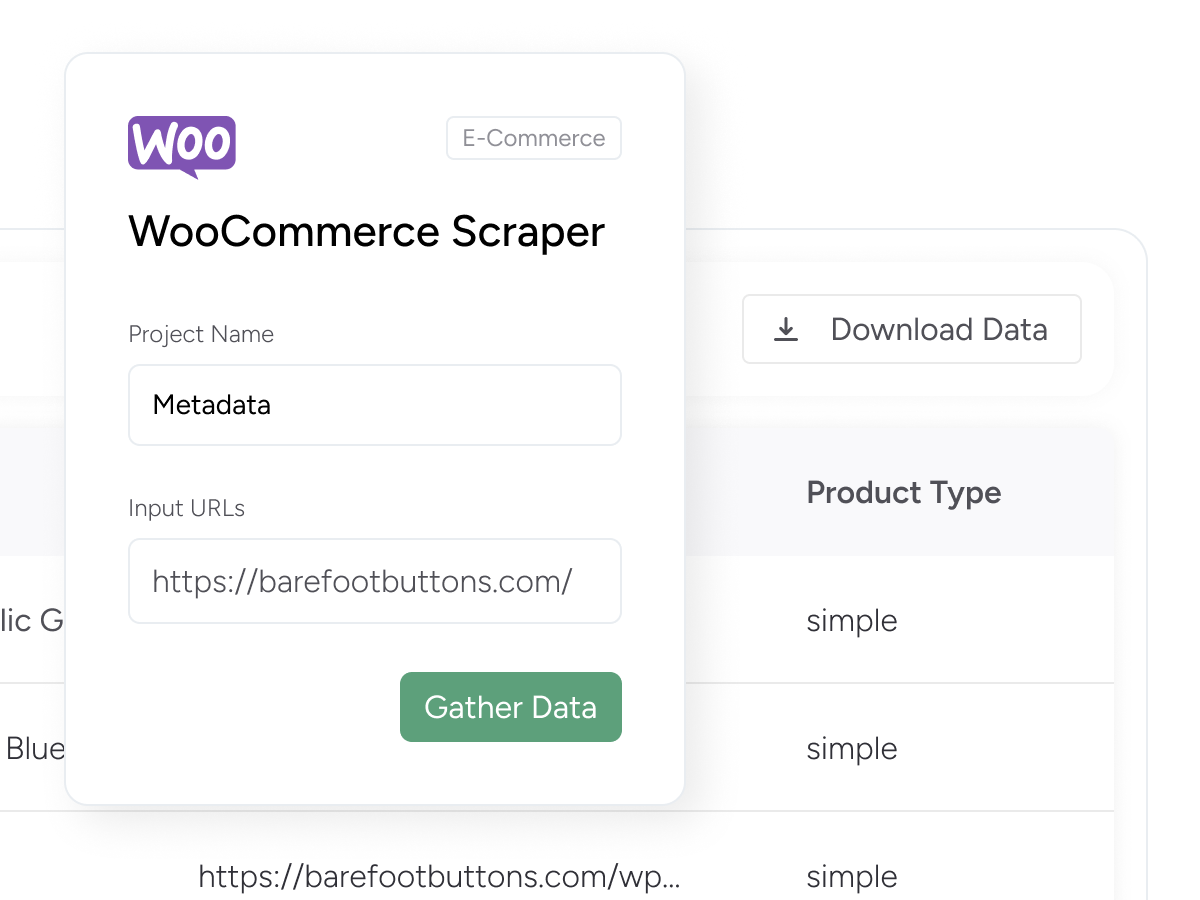

It’s as easy as Copy and Paste

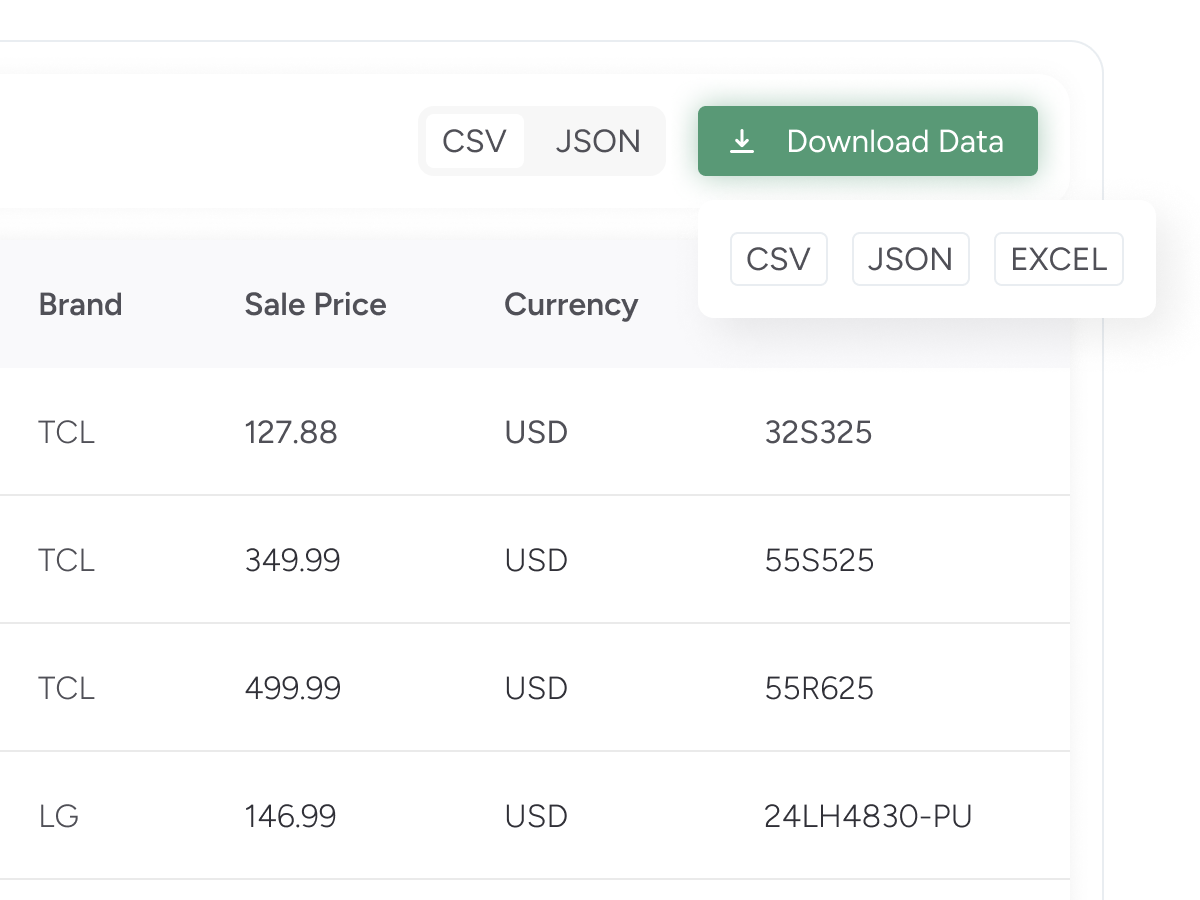

Download the data in Excel, CSV, or JSON formats. Link a cloud storage platform like Dropbox to store your data.

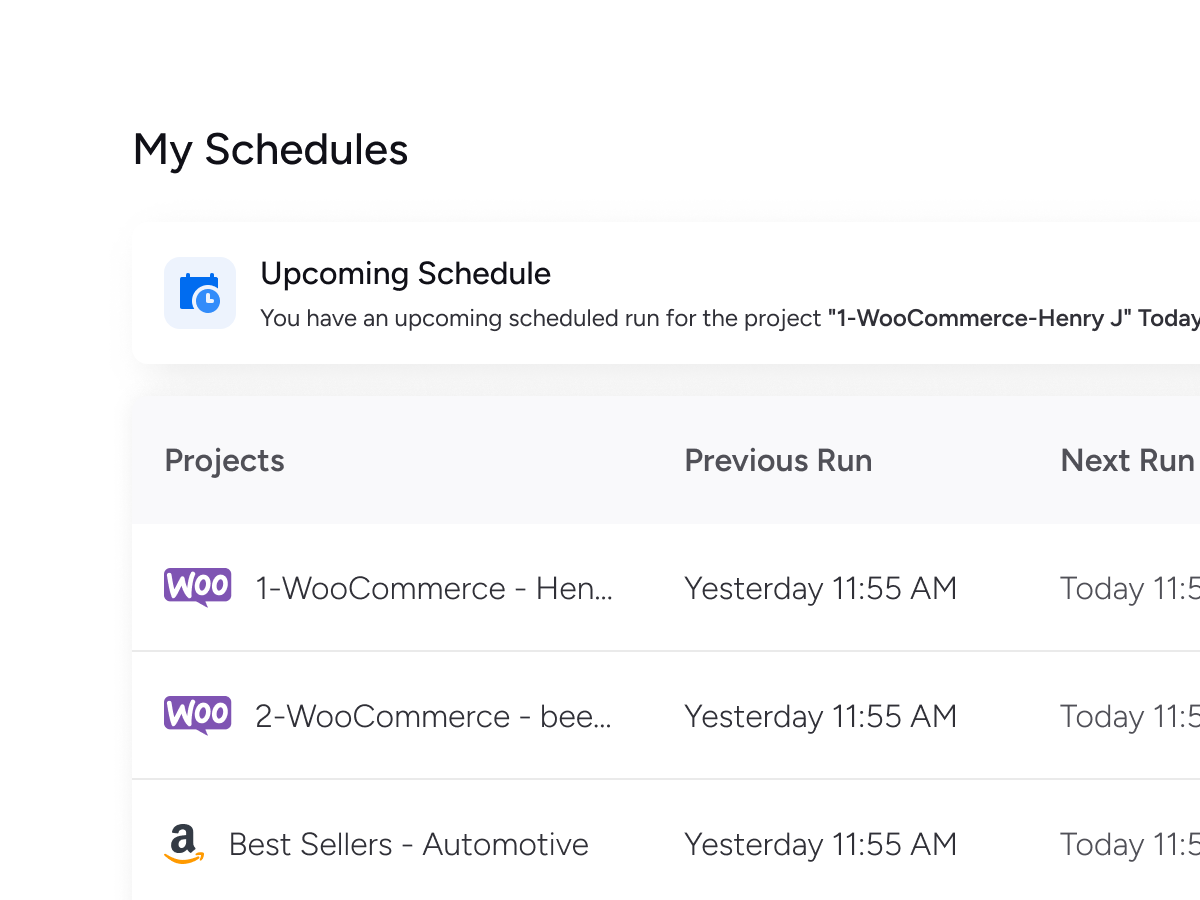

Schedule automated crawls to deliver fresh data to Dropbox, AWS S3, Google Drive, or to your app via API. Choose hourly, daily, or weekly updates to stay current.

| Product ID | Product Name | Product Type | Regular Price | Sale Price | Currency | Product URL | In Stock | Images | Average Rating | Review Count | SKU | Categories | Description | Description Short | Variations | Input URL | Pagination URL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 29316 | WingMan Yacht Rock Yellow | simple | 995 | 995 | USD | https://barefootbuttons.com/product/wingman-yellow/ | True | https://barefootbuttons.com/wp-content/uploads/2021/11/yachtrockyellow.png, https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png | 0 | 0 | WMY | [{"link": "https://barefootbuttons.com/product-category/accessories/", "name": "Accessories"}, {"link": "https://barefootbuttons.com/product-category/wingman/", "name": "WingMan"}] | - | Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet. | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
| 29317 | WingMan Co-Pilot Purple | simple | 995 | 995 | USD | https://barefootbuttons.com/product/wingman-purple/ | True | https://barefootbuttons.com/wp-content/uploads/2021/11/copilotpurple.png, https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png | 0 | 0 | WMP | [{"link": "https://barefootbuttons.com/product-category/accessories/", "name": "Accessories"}, {"link": "https://barefootbuttons.com/product-category/wingman/", "name": "WingMan"}] | - | Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet. | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
| 29321 | WingMan Go For Takeoff Green | simple | 995 | 995 | USD | https://barefootbuttons.com/product/wingman-green/ | True | https://barefootbuttons.com/wp-content/uploads/2021/11/gofortakeoffgreen.png, https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png | 0 | 0 | WMG | [{"link": "https://barefootbuttons.com/product-category/wingman/", "name": "WingMan"}] | - | Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet. | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
| 31130 | Barefoot Buttons V1 Skirtless Big Bore Silver | simple | 995 | 995 | USD | https://barefootbuttons.com/product/22-v1-sb-sv/ | False | https://barefootbuttons.com/wp-content/uploads/2022/10/Skirtless_BigBore_Silver.png, https://barefootbuttons.com/wp-content/uploads/2022/10/Skirtless_BigBore_Silver_2.png | 0 | 0 | 22-V1-SB-SV | [{"link": "https://barefootbuttons.com/product-category/big-bore/", "name": "Big Bore"}, {"link": "https://barefootbuttons.com/product-category/big-bore/skirtless-bigbore/", "name": "Skirtless Big Bore"}] | - | The Skirtless Big Bore button has a flat bottom to solve clearance issues on pedals that have low mounted switches or led lights that interfere with a standard button depressing. CLICK HERE TO MAKE SURE YOUR SIZING IS CORRECT FREE SHIPPING OVER $28.00 IN THE US | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
| 32667 | Barefoot Buttons V1 Skirtless Big Bore Acrylic Clear | simple | 995 | 995 | USD | https://barefootbuttons.com/product/barefoot-buttons-v1-skirtless-big-bore-acrylic-clear/ | True | https://barefootbuttons.com/wp-content/uploads/2023/03/Skirtless_BigBore_Acrylic_Clear.png | 0 | 0 | 22-V1-SB-CR | [{"link": "https://barefootbuttons.com/product-category/big-bore/", "name": "Big Bore"}, {"link": "https://barefootbuttons.com/product-category/big-bore/skirtless-bigbore/", "name": "Skirtless Big Bore"}] | - | - | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
| 29307 | WingMan Afterburner Orange | simple | 995 | 995 | USD | https://barefootbuttons.com/product/wingman-orange/ | True | https://barefootbuttons.com/wp-content/uploads/2021/11/afterburnerorange.png, https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png | 0 | 0 | WMO | [{"link": "https://barefootbuttons.com/product-category/accessories/", "name": "Accessories"}, {"link": "https://barefootbuttons.com/product-category/wingman/", "name": "WingMan"}] | - | Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet. | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
| 29314 | WingMan Back In Black | simple | 995 | 995 | USD | https://barefootbuttons.com/product/wingman-black/ | True | https://barefootbuttons.com/wp-content/uploads/2021/11/backinblack.png, https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png | 0 | 0 | WMBK | [{"link": "https://barefootbuttons.com/product-category/accessories/", "name": "Accessories"}, {"link": "https://barefootbuttons.com/product-category/wingman/", "name": "WingMan"}] | - | Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet. | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
| 29318 | WingMan Infra-Red | simple | 995 | 995 | USD | https://barefootbuttons.com/product/wingman-red/ | True | https://barefootbuttons.com/wp-content/uploads/2021/11/infrared.png, https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png | 0 | 0 | WMR | [{"link": "https://barefootbuttons.com/product-category/accessories/", "name": "Accessories"}, {"link": "https://barefootbuttons.com/product-category/wingman/", "name": "WingMan"}] | - | Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet. | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
| 29320 | WingMan Sky High Blue | simple | 995 | 995 | USD | https://barefootbuttons.com/product/wingman-blue/ | True | https://barefootbuttons.com/wp-content/uploads/2021/11/skyhighblue.png, https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png | 0 | 0 | WMB | [{"link": "https://barefootbuttons.com/product-category/accessories/", "name": "Accessories"}, {"link": "https://barefootbuttons.com/product-category/wingman/", "name": "WingMan"}] | - | Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet. | - | https://barefootbuttons.com/ | https://barefootbuttons.com/wp-json/wc/store/products?page=1 |
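If you load the spreadsheet export into your own code, a few columns need light parsing: `Images` holds a comma-separated list of URLs, `Categories` holds a JSON array, and `In Stock` is the text `True` or `False`. The sketch below is one way to handle that in Python. It assumes the CSV export uses the same column headers and cell formats as the sample table above, and the `parse_row` helper is hypothetical, not part of any ScrapeHero tooling.

```python
import json


def parse_row(row):
    """Convert one exported row (a dict of column name -> text) into typed values.

    Assumes the column names and cell formats shown in the sample table.
    """
    # "Images" is a comma-separated list of URLs.
    images = [url.strip() for url in row["Images"].split(",") if url.strip()]

    # "Categories" is a JSON array of {"link": ..., "name": ...}; "-" means empty.
    raw_categories = row["Categories"].strip()
    categories = json.loads(raw_categories) if raw_categories not in ("", "-") else []

    return {
        "product_id": int(row["Product ID"]),
        "name": row["Product Name"],
        "in_stock": row["In Stock"].strip().lower() == "true",
        "images": images,
        "category_names": [category["name"] for category in categories],
    }


# A row as csv.DictReader would yield it, trimmed to the columns used here.
sample_row = {
    "Product ID": "29321",
    "Product Name": "WingMan Go For Takeoff Green",
    "In Stock": "True",
    "Images": "https://barefootbuttons.com/wp-content/uploads/2021/11/gofortakeoffgreen.png, "
              "https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png",
    "Categories": '[{"link": "https://barefootbuttons.com/product-category/wingman/", "name": "WingMan"}]',
}

product = parse_row(sample_row)
print(product["category_names"], len(product["images"]), product["in_stock"])
```

In practice you would pass each row from `csv.DictReader` to `parse_row` instead of a hand-written dictionary.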

[

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/accessories/",

"name": "Accessories"

},

{

"link": "https://barefootbuttons.com/product-category/wingman/",

"name": "WingMan"

}

],

"currency": "USD",

"description": "",

"description_short": "Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet.",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2021/11/yachtrockyellow.png",

"https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png"

],

"in_stock": true,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 29316,

"product_name": "WingMan Yacht Rock Yellow",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/wingman-yellow/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "WMY",

"variations": []

},

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/accessories/",

"name": "Accessories"

},

{

"link": "https://barefootbuttons.com/product-category/wingman/",

"name": "WingMan"

}

],

"currency": "USD",

"description": "",

"description_short": "Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet.",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2021/11/copilotpurple.png",

"https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png"

],

"in_stock": true,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 29317,

"product_name": "WingMan Co-Pilot Purple",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/wingman-purple/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "WMP",

"variations": []

},

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/wingman/",

"name": "WingMan"

}

],

"currency": "USD",

"description": "",

"description_short": "Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet.",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2021/11/gofortakeoffgreen.png",

"https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png"

],

"in_stock": true,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 29321,

"product_name": "WingMan Go For Takeoff Green",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/wingman-green/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "WMG",

"variations": []

},

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/big-bore/",

"name": "Big Bore"

},

{

"link": "https://barefootbuttons.com/product-category/big-bore/skirtless-bigbore/",

"name": "Skirtless Big Bore"

}

],

"currency": "USD",

"description": "",

"description_short": "The Skirtless Big Bore button has a flat bottom to solve clearance issues on pedals that have low mounted switches or led lights that interfere with a standard button depressing. CLICK HERE TO MAKE SURE YOUR SIZING IS CORRECT FREE SHIPPING OVER $28.00 IN THE US",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2022/10/Skirtless_BigBore_Silver.png",

"https://barefootbuttons.com/wp-content/uploads/2022/10/Skirtless_BigBore_Silver_2.png"

],

"in_stock": false,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 31130,

"product_name": "Barefoot Buttons V1 Skirtless Big Bore Silver",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/22-v1-sb-sv/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "22-V1-SB-SV",

"variations": []

},

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/big-bore/",

"name": "Big Bore"

},

{

"link": "https://barefootbuttons.com/product-category/big-bore/skirtless-bigbore/",

"name": "Skirtless Big Bore"

}

],

"currency": "USD",

"description": "",

"description_short": "",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2023/03/Skirtless_BigBore_Acrylic_Clear.png"

],

"in_stock": true,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 32667,

"product_name": "Barefoot Buttons V1 Skirtless Big Bore Acrylic Clear",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/barefoot-buttons-v1-skirtless-big-bore-acrylic-clear/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "22-V1-SB-CR",

"variations": []

},

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/accessories/",

"name": "Accessories"

},

{

"link": "https://barefootbuttons.com/product-category/wingman/",

"name": "WingMan"

}

],

"currency": "USD",

"description": "",

"description_short": "Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet.",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2021/11/afterburnerorange.png",

"https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png"

],

"in_stock": true,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 29307,

"product_name": "WingMan Afterburner Orange",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/wingman-orange/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "WMO",

"variations": []

},

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/accessories/",

"name": "Accessories"

},

{

"link": "https://barefootbuttons.com/product-category/wingman/",

"name": "WingMan"

}

],

"currency": "USD",

"description": "",

"description_short": "Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet.",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2021/11/backinblack.png",

"https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png"

],

"in_stock": true,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 29314,

"product_name": "WingMan Back In Black",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/wingman-black/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "WMBK",

"variations": []

},

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/accessories/",

"name": "Accessories"

},

{

"link": "https://barefootbuttons.com/product-category/wingman/",

"name": "WingMan"

}

],

"currency": "USD",

"description": "",

"description_short": "Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet.",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2021/11/infrared.png",

"https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png"

],

"in_stock": true,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 29318,

"product_name": "WingMan Infra-Red",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/wingman-red/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "WMR",

"variations": []

},

{

"average_rating": "0",

"categories": [

{

"link": "https://barefootbuttons.com/product-category/accessories/",

"name": "Accessories"

},

{

"link": "https://barefootbuttons.com/product-category/wingman/",

"name": "WingMan"

}

],

"currency": "USD",

"description": "",

"description_short": "Awarded “Best In Show” at the 2017 Summer NAMM show for the Accessories & Add-Ons Category. The WingMan is an effects pedal foot controller that allows you to change effects parameters with your feet while playing. This is our first Universal Solution that comes with two shaft inserts enabling it to fit both classic and boutique style pedals. WingMan has a more compact wing design giving it an even smaller footprint on your pedalboard, and comes standard in glowin-the-dark configuration. Simply replace any factory knob with the WingMan and instantly gain real-time control over your effects parameters with your feet.",

"images": [

"https://barefootbuttons.com/wp-content/uploads/2021/11/skyhighblue.png",

"https://barefootbuttons.com/wp-content/uploads/2018/09/PRODUCT-Wingman2_.png"

],

"in_stock": true,

"input_url": "https://barefootbuttons.com/",

"pagination_url": "https://barefootbuttons.com/wp-json/wc/store/products?page=1",

"product_id": 29320,

"product_name": "WingMan Sky High Blue",

"product_type": "simple",

"product_url": "https://barefootbuttons.com/product/wingman-blue/",

"regular_price": "995",

"review_count": "0",

"sale_price": "995",

"sku": "WMB",

"variations": []

}

]
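As a rough illustration of working with the JSON export, the Python sketch below loads two records trimmed from the sample above, keeps only the in-stock products, and converts the price strings to decimal amounts. The conversion assumes that `regular_price` and `sale_price` are expressed in minor currency units (so `"995"` would be 9.95 USD); the sample does not state this, so verify it against the store before relying on it. The `to_major_units` helper is hypothetical.

```python
import json
from decimal import Decimal

# Two records trimmed from the sample output above (only the fields used here).
SAMPLE_JSON = """
[
  {"product_id": 29316, "product_name": "WingMan Yacht Rock Yellow",
   "in_stock": true, "regular_price": "995", "sale_price": "995", "currency": "USD"},
  {"product_id": 31130, "product_name": "Barefoot Buttons V1 Skirtless Big Bore Silver",
   "in_stock": false, "regular_price": "995", "sale_price": "995", "currency": "USD"}
]
"""


def to_major_units(price, minor_digits=2):
    """Convert a price string in minor units to a Decimal amount.

    ASSUMPTION: prices are in minor units (e.g. cents) with `minor_digits` decimals.
    """
    return Decimal(price) / (Decimal(10) ** minor_digits)


products = json.loads(SAMPLE_JSON)

# Keep only the products currently marked as in stock.
in_stock = [p for p in products if p["in_stock"]]

for p in in_stock:
    print(p["product_name"], to_major_units(p["sale_price"]), p["currency"])
```

Using `Decimal` rather than `float` avoids rounding surprises when you later sum or compare prices.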

You can download all product data from any WooCommerce website.

Just provide the links to the WooCommerce website, and the scraper will get you the complete list of products in a spreadsheet.
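In the sample output, each record pairs the `input_url` you supplied with a `pagination_url` of the form `<site>/wp-json/wc/store/products?page=N`. The Python sketch below shows how such a URL can be derived from a site's root URL. It mirrors the pattern visible in the sample only; how the scraper actually builds or walks these pages is an assumption, and `products_page_url` is a hypothetical helper.

```python
from urllib.parse import urlencode, urljoin

# Path taken from the "pagination_url" values in the sample output.
STORE_PRODUCTS_PATH = "wp-json/wc/store/products"


def products_page_url(site_url, page=1):
    """Build a product-listing URL for the given page number.

    ASSUMPTION: `site_url` is the site root (for example "https://example.com/").
    """
    # Ensure a trailing slash so urljoin appends the path instead of replacing a segment.
    base = site_url if site_url.endswith("/") else site_url + "/"
    return urljoin(base, STORE_PRODUCTS_PATH) + "?" + urlencode({"page": page})


print(products_page_url("https://barefootbuttons.com/"))
print(products_page_url("https://barefootbuttons.com", page=2))
```

For the sample store, page 1 produces the same `pagination_url` that appears in the records above.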


Need More?

Contact us for a custom plan based on your needs.

Join 12400+ customers who love working with ScrapeHero

A few mouse clicks and copy/paste is all that it takes!

Get data like the pros without knowing programming at all.

The crawlers are easy to use, and we are here to help whenever you need it.

Schedule the crawlers to run hourly, daily, or weekly and get data delivered to your Dropbox.

We will take care of all website structure changes and blocking from websites.

Can’t find what you’re looking for? Check out Cloud Support for assistance!

All our plans require a subscription that renews monthly. If you only need our services for one month, subscribe for a month and cancel your subscription within 30 days.

Yes. You can set up the crawler to run periodically by selecting your preferred schedule. You can schedule crawlers to run at a monthly, weekly, or hourly interval.

No, we won’t use your IP address to scrape the website. We’ll use our proxies and get the data for you. All you have to do is provide the input and run the scraper.

Unfortunately, we cannot provide a refund or data credits if you made a mistake.

Here are some common scenarios we have seen for quota refund requests

If you cancel, you’ll be billed for the current month, but you won’t be charged again. If you have any page credits, you can still use our service until it reaches its limit.

Some crawlers can collect multiple records from a single page, while others might need to go to 3 pages to get a single record. For example, our Amazon Bestsellers crawler collects 50 records from one page, while our Indeed crawler needs to go through a list of all jobs and then move into each job details page to get more data.
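To make the difference concrete, here is a small Python sketch that estimates how many pages two kinds of crawlers would visit for the same number of records. It is an illustrative model only, not ScrapeHero's billing formula. The figure of 50 records per page comes from the Amazon Bestsellers example above; the 20 listings per page used for the second case is a hypothetical number.

```python
import math


def pages_for_listing_only(records, records_per_page):
    """Pages visited when every record is read directly from a listing page."""
    return math.ceil(records / records_per_page)


def pages_for_listing_plus_details(records, listings_per_page):
    """Pages visited when each record also needs its own details page."""
    listing_pages = math.ceil(records / listings_per_page)
    detail_pages = records  # one details page per record
    return listing_pages + detail_pages


# 100 records at 50 records per page (as in the bestsellers example).
print(pages_for_listing_only(100, 50))          # 2

# 100 records, 20 per listing page (hypothetical), plus one details page each.
print(pages_for_listing_plus_details(100, 20))  # 105
```

The same 100 records cost 2 pages in the first case and 105 in the second, which is why page usage varies so much between crawlers.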

All data credits reset at the end of the billing period. Unused credits do not carry over to the next billing period and are nonrefundable. This is consistent with most software subscription services.

Sure, we can build custom solutions for you. Please contact our Sales team using this link, and that will get us started. In your message, please describe in detail what you require.

Most sites display product pricing, availability, and delivery charges based on the user's location. Our crawler uses locations in US states, so the pricing you receive may vary. To get accurate results for a specific location, please contact us.

Contact us to schedule a brief, introductory call with our experts and learn how we can assist your needs.

Legal Disclaimer: ScrapeHero is an equal opportunity data service provider, a conduit, just like an ISP. We just gather data for our customers responsibly and sensibly. We do not store or resell data. We only provide the technologies and data pipes to scrape publicly available data. The mention of any company names, trademarks or data sets on our site does not imply we can or will scrape them. They are listed only as an illustration of the types of requests we get. Any code provided in our tutorials is for learning only; we are not responsible for how it is used.